[Notes to self] Understanding PyTorch's one-dimensional convolution nn.Conv1d through an example

The parameters of the one-dimensional convolution layer are as follows:

```python
torch.nn.Conv1d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)
```
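The parameters show up directly in the layer's learnable tensors. The sketch below uses sizes chosen only for illustration. As far as I know, the weight of nn.Conv1d has shape (out_channels, in_channels // groups, kernel_size) and the bias has shape (out_channels,), or is None when bias=False:

```python
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration
in_channels, out_channels, kernel_size = 4, 6, 3

# Only the three required arguments; everything else keeps its default
conv = nn.Conv1d(in_channels, out_channels, kernel_size)
print(conv.weight.shape)  # (out_channels, in_channels, kernel_size)
print(conv.bias.shape)    # (out_channels,)

# With groups=2, each filter only sees in_channels // groups input channels
grouped = nn.Conv1d(in_channels, out_channels, kernel_size, groups=2)
print(grouped.weight.shape)

# bias=False drops the bias term entirely
no_bias = nn.Conv1d(in_channels, out_channels, kernel_size, bias=False)
print(no_bias.bias)
```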

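nn.Conv1d input

The input shape should be (N, Cin, Lin) for a batch, or (Cin, Lin) for a single unbatched sample, where:

N = the batch size, e.g. 32 or 64;

Cin = the number of input channels;

Lin = the length of the signal sequence;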
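A small sketch of the two accepted input layouts, using made-up sizes. It assumes a PyTorch release whose Conv1d documentation lists the unbatched (Cin, Lin) form; older releases required the batch dimension, so check your version:

```python
import torch
import torch.nn as nn

# Hypothetical layer: 3 input channels, 8 output channels, kernel of length 5
conv = nn.Conv1d(in_channels=3, out_channels=8, kernel_size=5)

batched = torch.rand(32, 3, 100)  # (N, Cin, Lin)
unbatched = torch.rand(3, 100)    # (Cin, Lin): a single sample, no batch dimension

y_batched = conv(batched)
y_unbatched = conv(unbatched)
print(y_batched.shape)    # (N, Cout, Lout)
print(y_unbatched.shape)  # (Cout, Lout)
```

With stride 1 and no padding, Lout here is 100 - 5 + 1 = 96 in both cases. Only the presence of the batch dimension differs.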

nn.Conv1d output

The output shape of torch.nn.Conv1d() is (N, Cout, Lout) or (Cout, Lout).

Here Cout is determined by the out_channels argument passed to Conv1d, i.e. Cout == out_channels.
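To see that Cout simply follows out_channels while Lout does not depend on it, this sketch (arbitrary sizes, for illustration only) varies out_channels and keeps everything else fixed:

```python
import torch
import torch.nn as nn

x = torch.rand(4, 16, 50)  # (N=4, Cin=16, Lin=50)

shapes = {}
for out_channels in (1, 10, 64):
    # padding=1 with kernel_size=3 and stride=1 keeps Lout equal to Lin
    conv = nn.Conv1d(16, out_channels, kernel_size=3, padding=1)
    shapes[out_channels] = tuple(conv(x).shape)
    print(out_channels, shapes[out_channels])
```

Only the middle dimension changes. The sequence length stays at 50 because it is governed by Lin, kernel_size, stride, padding and dilation, not by the number of output channels.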

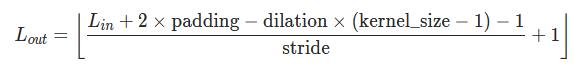

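Lout is computed from Lin together with padding, stride, kernel_size and dilation. The formula, as given in the PyTorch nn.Conv1d documentation, is:

$$L_{out} = \left\lfloor \frac{L_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor$$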
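The formula can be checked against the layer itself. The helper conv1d_out_len below is a hypothetical name introduced only for this sketch. It implements the documented formula and compares it with the length nn.Conv1d actually produces for a few configurations:

```python
import torch
import torch.nn as nn

def conv1d_out_len(L_in, kernel_size, stride=1, padding=0, dilation=1):
    # Hypothetical helper: the Lout formula from the nn.Conv1d docs.
    # For integers, floor(a / stride + 1) == a // stride + 1.
    return (L_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

L_in = 100
configs = [
    dict(kernel_size=3, stride=2, padding=3, dilation=1),  # the settings used in this note's example
    dict(kernel_size=5, stride=1, padding=0, dilation=1),
    dict(kernel_size=3, stride=3, padding=1, dilation=2),
]

results = []
for cfg in configs:
    conv = nn.Conv1d(2, 4, **cfg)
    with torch.no_grad():
        actual = conv(torch.rand(1, 2, L_in)).shape[-1]
    predicted = conv1d_out_len(L_in, **cfg)
    results.append((predicted, actual))
    print(cfg, "predicted:", predicted, "actual:", actual)
```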

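Example:

```python
import torch

N = 40        # batch size
C_in = 40     # number of input channels
L_in = 100    # input sequence length

inputs = torch.rand([N, C_in, L_in])

padding = 3
kernel_size = 3
stride = 2
C_out = 10    # number of output channels (out_channels)

# the one-dimensional convolution layer
conv = torch.nn.Conv1d(C_in, C_out, kernel_size, stride=stride, padding=padding)
y = conv(inputs)

print(y)
print(y.shape)
```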

Running the example above produces output like the following. The values come from random inputs and randomly initialized weights, so they differ on every run, and the grad_fn name may differ between PyTorch versions:

```
tensor([[[-0.0850, 0.3896, 0.7539, ..., 0.4054, 0.3753, 0.2802],
         [ 0.0181, -0.0184, -0.0605, ..., 0.0114, -0.0016, -0.0268],
         [-0.0570, -0.4591, -0.3195, ..., -0.2958, -0.1871, 0.0635],
         ...,
         [ 0.0554, 0.1234, -0.0150, ..., 0.0763, -0.3085, -0.2996],
         [-0.0516, 0.2781, 0.3457, ..., 0.2195, 0.1143, -0.0742],
         [ 0.0281, -0.0804, -0.3606, ..., -0.3509, -0.2694, -0.0084]]],
       grad_fn=<SqueezeBackward1>)
torch.Size([40, 10, 52])
```

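y is the output, and its shape is 40 × 10 × 52:

40 is the batch size N; 10 is the user-specified Cout (i.e. out_channels); 52 is the sequence length after the one-dimensional convolution (i.e. Lout, which can also be read as the length of each one-dimensional output feature vector).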
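Plugging the example's settings (Lin = 100, padding = 3, kernel_size = 3, stride = 2, and the default dilation = 1) into the output-length formula from the PyTorch nn.Conv1d documentation reproduces the 52:

$$
L_{out} = \left\lfloor \frac{100 + 2 \times 3 - 1 \times (3 - 1) - 1}{2} + 1 \right\rfloor = \left\lfloor \frac{103}{2} + 1 \right\rfloor = \lfloor 52.5 \rfloor = 52
$$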

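Note:

For one-dimensional convolution,

the channel dimension is treated as "the number of input vectors" (in_channels) and "the number of output feature vectors" (out_channels);

Lout is the size (not the number) of each output feature vector.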
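To make the "vectors" reading concrete, the sketch below recomputes a Conv1d output by hand with small, arbitrary sizes. Each output channel sums, over all Cin input vectors, the dot product of a sliding window with that channel's kernel, then adds its bias. This assumes stride 1, no padding, no dilation and groups=1. Also note that nn.Conv1d computes a cross-correlation, so the kernel is not flipped:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
N, C_in, L_in, C_out, k = 2, 3, 10, 4, 3

x = torch.rand(N, C_in, L_in)    # per sample: Cin input vectors of length Lin
conv = nn.Conv1d(C_in, C_out, k)  # stride=1, padding=0, dilation=1, groups=1

with torch.no_grad():
    # Sliding windows of length k along the last dim: (N, Cin, Lout, k)
    windows = x.unfold(2, k, 1)
    # conv.weight has shape (Cout, Cin, k); sum over input channels c and
    # kernel positions j, then add one bias value per output channel
    manual = torch.einsum("nclj,ocj->nol", windows, conv.weight) + conv.bias[None, :, None]
    reference = conv(x)

print(manual.shape)  # (N, Cout, Lout): Cout output vectors of length Lout
print(torch.allclose(manual, reference, atol=1e-6))
```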

References:

1. https://stackoverflow.com/questions/60671530/how-can-i-have-a-pytorch-conv1d-work-over-a-vector

2. https://www.tutorialexample.com/understand-torch-nn-conv1d-with-examples-pytorch-tutorial/
