09 Connecting Spark to a MySQL Database


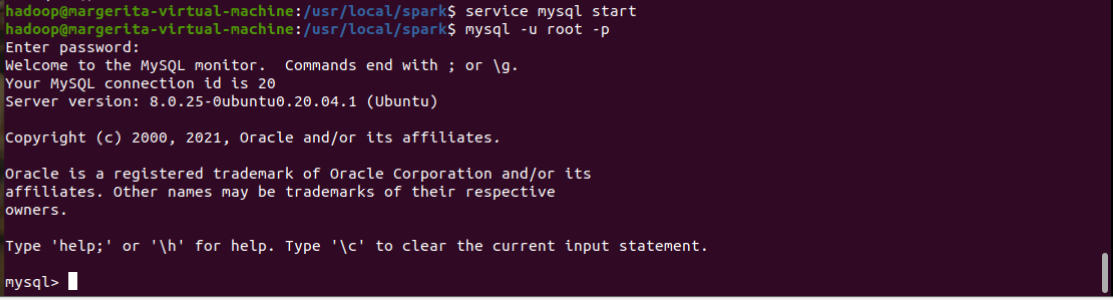

1. Start the MySQL service.
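Before continuing, it can help to confirm that the server is actually accepting connections. The sketch below only checks that something is listening on a TCP port. It assumes MySQL's default port 3306 on localhost (the same host and port used in the JDBC URLs later), and it does not log in. The function name `is_port_open` is hypothetical.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 3306, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    This only shows that some process is listening; it does not authenticate to MySQL.
    """
    try:
        # create_connection resolves the host and opens a TCP socket
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    print("MySQL port reachable:", is_port_open())
```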

2. Install the MySQL JDBC driver for Spark: find mysql-connector-java-8.0.22.jar and copy it into the /usr/local/spark/jars directory.

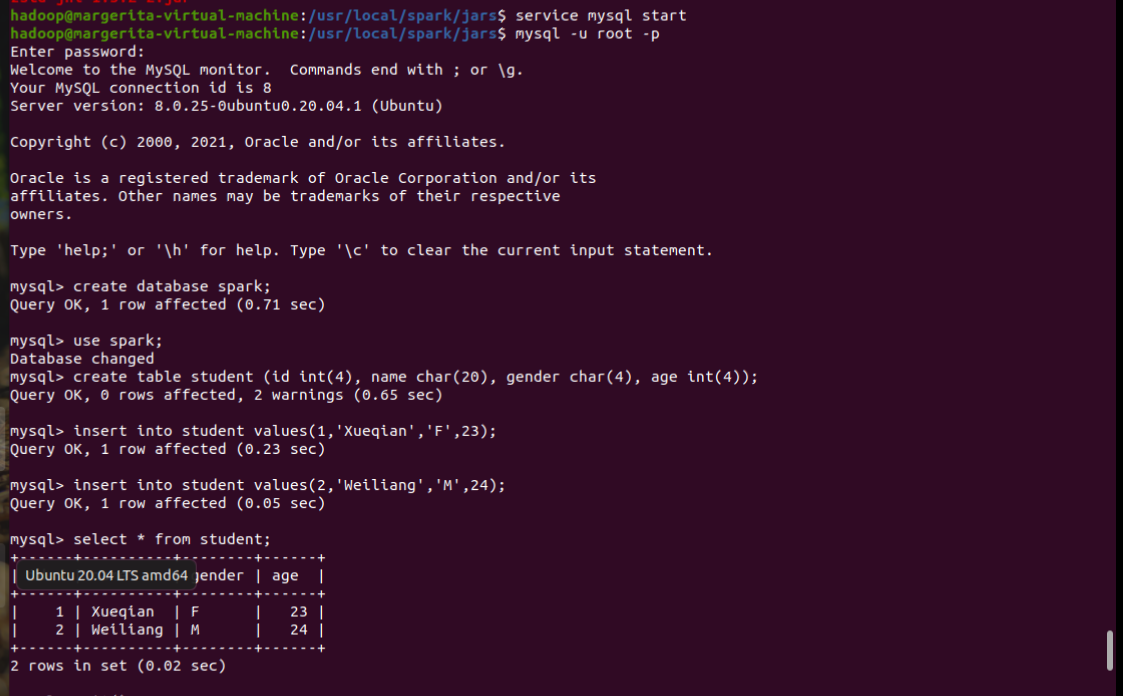
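The copy can also be scripted. This is a minimal sketch, assuming Spark is installed in /usr/local/spark as in this walkthrough; the function name `install_jdbc_driver` is hypothetical, and where the jar starts out is up to you. Writing into /usr/local/spark/jars usually requires elevated permissions.

```python
import shutil
from pathlib import Path


def install_jdbc_driver(jar_path: str, spark_jars_dir: str = "/usr/local/spark/jars") -> Path:
    """Copy a JDBC driver jar into Spark's jars directory and return the destination path."""
    src = Path(jar_path)
    if not src.is_file():
        raise FileNotFoundError(f"driver jar not found: {src}")
    dest_dir = Path(spark_jars_dir)
    if not dest_dir.is_dir():
        raise NotADirectoryError(f"Spark jars directory not found: {dest_dir}")
    # copy2 copies the contents and also preserves the file's timestamps
    return Path(shutil.copy2(src, dest_dir / src.name))
```

For example, `install_jdbc_driver("mysql-connector-java-8.0.22.jar")` copies the jar from the current directory into /usr/local/spark/jars.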

3. Start the MySQL shell and create a database named spark containing a table named student.

```sql
-- Create and select the database
create database spark;
use spark;

-- Table that Spark will read from and write to
create table student (id int(4), name char(20), gender char(4), age int(4));

-- Two initial rows
insert into student values(1,'Xueqian','F',23);
insert into student values(2,'Weiliang','M',24);

select * from student;
```

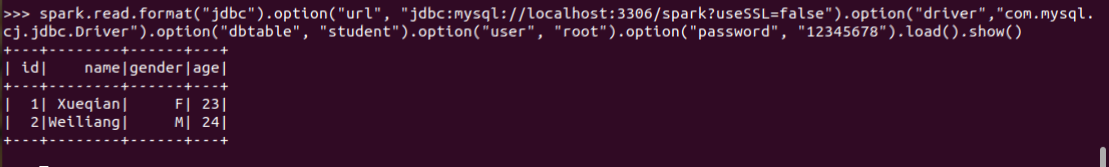

4. Start the PySpark shell and use Spark to read the data stored in MySQL.

```bash
# Run from the Spark installation directory (/usr/local/spark)
./bin/pyspark --jars /usr/local/spark/jars/mysql-connector-java-8.0.22.jar
```
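`--jars` takes a single comma-separated list, so additional jars belong in one value rather than in repeated flags. The sketch below builds such a launch command as an argument list; the helper name `build_pyspark_command` is hypothetical, and the default `./bin/pyspark` path assumes you launch from /usr/local/spark.

```python
import shlex
from typing import List, Sequence


def build_pyspark_command(jars: Sequence[str], pyspark_bin: str = "./bin/pyspark") -> List[str]:
    """Build a pyspark command line that lists each jar once in a single --jars value."""
    unique = list(dict.fromkeys(jars))  # drop duplicates while keeping order
    cmd = [pyspark_bin]
    if unique:
        # --jars expects one comma-separated list of jar paths
        cmd += ["--jars", ",".join(unique)]
    return cmd


if __name__ == "__main__":
    jar = "/usr/local/spark/jars/mysql-connector-java-8.0.22.jar"
    print(shlex.join(build_pyspark_command([jar, jar])))
```

The returned list can be passed to `subprocess.run` as-is, which avoids shell quoting issues.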

```python
(spark.read.format("jdbc")
    .option("url", "jdbc:mysql://localhost:3306/spark?useSSL=false")
    .option("driver", "com.mysql.cj.jdbc.Driver")
    .option("dbtable", "student")
    .option("user", "root")
    .option("password", "12345678")
    .load()
    .show())
```

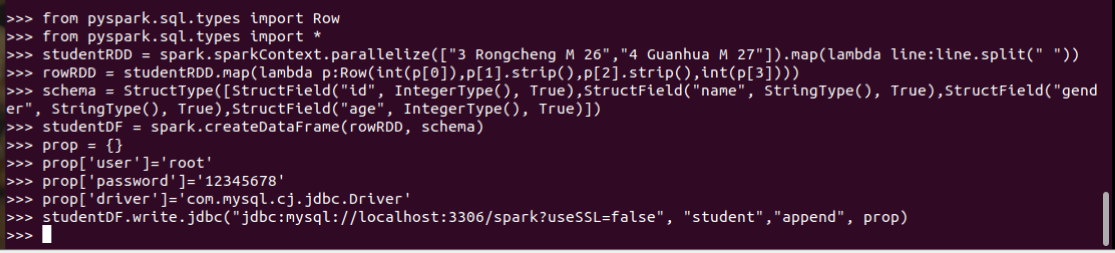
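Since the same connection settings are repeated for every read, they can be collected into one dict. A minimal sketch; the helper name `mysql_jdbc_options` is hypothetical, and the defaults mirror the localhost/3306/spark values used above.

```python
from typing import Dict


def mysql_jdbc_options(table: str, user: str, password: str,
                       host: str = "localhost", port: int = 3306,
                       database: str = "spark") -> Dict[str, str]:
    """Collect the JDBC options used in the read above into a single dict."""
    return {
        # useSSL=false disables SSL, as in the original URL (suitable for local testing only)
        "url": f"jdbc:mysql://{host}:{port}/{database}?useSSL=false",
        # Driver class provided by mysql-connector-java 8.x
        "driver": "com.mysql.cj.jdbc.Driver",
        "dbtable": table,
        "user": user,
        "password": password,
    }
```

In the PySpark shell the dict can be unpacked into `DataFrameReader.options`, e.g. `spark.read.format("jdbc").options(**mysql_jdbc_options("student", "root", "12345678")).load().show()`.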

5. Write data from Spark into the MySQL database.

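```python
from pyspark.sql import Row
from pyspark.sql.types import StructType, StructField, IntegerType, StringType

# Two new students, each an "id name gender age" record split into fields
studentRDD = spark.sparkContext.parallelize(["3 Rongcheng M 26", "4 Guanhua M 27"]).map(lambda line: line.split(" "))

# Convert each record into a Row with the correct types
rowRDD = studentRDD.map(lambda p: Row(int(p[0]), p[1].strip(), p[2].strip(), int(p[3])))

# Schema matching the columns of the MySQL student table
schema = StructType([
    StructField("id", IntegerType(), True),
    StructField("name", StringType(), True),
    StructField("gender", StringType(), True),
    StructField("age", IntegerType(), True),
])

studentDF = spark.createDataFrame(rowRDD, schema)
```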
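The two `map` calls above do the parsing inside Spark. The same logic can be written as one plain Python function and tested without a cluster. This is a sketch; the names `parse_student` and `Student` are hypothetical.

```python
from typing import List, Tuple

# (id, name, gender, age), matching the student table's column order
Student = Tuple[int, str, str, int]


def parse_student(line: str) -> Student:
    """Turn 'id name gender age' into a typed tuple matching the student schema."""
    # split() with no argument also tolerates repeated or trailing whitespace
    fields = line.split()
    if len(fields) != 4:
        raise ValueError(f"expected 4 fields, got {len(fields)}: {line!r}")
    sid, name, gender, age = fields
    return int(sid), name, gender, int(age)


if __name__ == "__main__":
    rows: List[Student] = [parse_student(s) for s in ["3 Rongcheng M 26", "4 Guanhua M 27"]]
    print(rows)  # [(3, 'Rongcheng', 'M', 26), (4, 'Guanhua', 'M', 27)]
```

Inside the PySpark shell, the function can replace both lambdas: `spark.createDataFrame(spark.sparkContext.parallelize(lines).map(parse_student), schema)`, where `lines` is the list of input strings.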

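```python
# JDBC connection properties for the MySQL account
prop = {
    "user": "root",
    "password": "12345678",
    "driver": "com.mysql.cj.jdbc.Driver",
}

# Append the rows of studentDF to the existing spark.student table
studentDF.write.jdbc("jdbc:mysql://localhost:3306/spark?useSSL=false", "student", mode="append", properties=prop)
```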

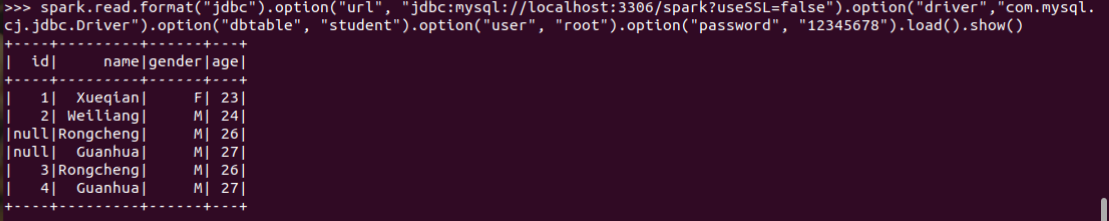
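The `"append"` argument is a Spark save mode; PySpark also accepts `"overwrite"`, `"ignore"`, and `"error"`/`"errorifexists"`. The toy model below illustrates only which rows the table holds afterwards in each mode. It is not how Spark's JDBC writer works internally (for example, with `"overwrite"` Spark drops and recreates the table unless the JDBC `truncate` option is set). The function name `simulate_save` is hypothetical. One practical consequence: because the student table has no primary key, running the append a second time stores ids 3 and 4 twice.

```python
from typing import List, Tuple

StudentRow = Tuple[int, str, str, int]


def simulate_save(existing: List[StudentRow], new_rows: List[StudentRow],
                  mode: str, table_exists: bool = True) -> List[StudentRow]:
    """Toy model of the rows a table holds after a write in each Spark save mode."""
    if mode == "append":
        # New rows are added after the existing ones; nothing is deduplicated
        return existing + new_rows
    if mode == "overwrite":
        # Old contents are replaced by the new rows
        return list(new_rows)
    if mode == "ignore":
        # The write is silently skipped if the table already exists
        return existing if table_exists else list(new_rows)
    if mode in ("error", "errorifexists"):
        # Default mode: fail if the table already exists
        if table_exists:
            raise RuntimeError("table already exists")
        return list(new_rows)
    raise ValueError(f"unknown save mode: {mode!r}")
```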

Read the student table again from PySpark to confirm that the two new rows were written:

```python
(spark.read.format("jdbc")
    .option("url", "jdbc:mysql://localhost:3306/spark?useSSL=false")
    .option("driver", "com.mysql.cj.jdbc.Driver")
    .option("dbtable", "student")
    .option("user", "root")
    .option("password", "12345678")
    .load()
    .show())
```

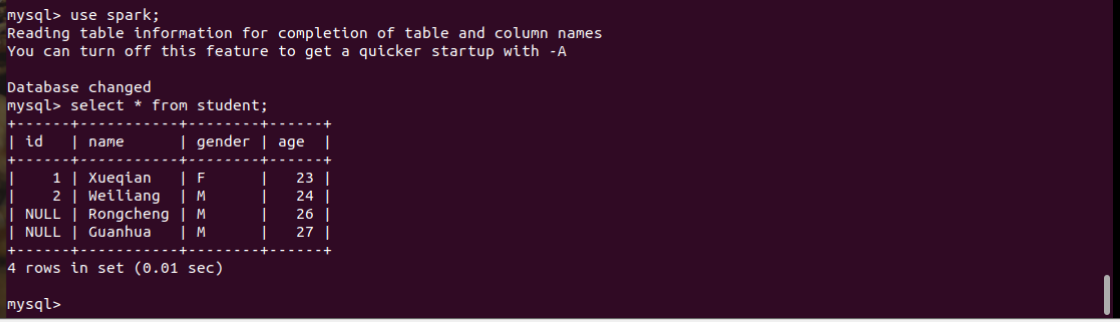

6. Go back to the MySQL shell and check the data.

```sql
use spark;
select * from student;
```