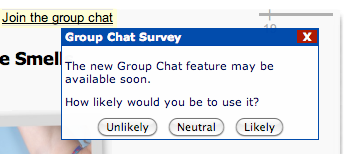

A feature fake, with a new perspective in the comments.

Fast, Frugal Learning with a Feature Fake

| Unique logins with feature present | Users who tried the feature (% of logins) | Users who took the survey | Users who clicked "Unlikely" (% of survey takers) | Users who clicked "Neutral" (% of survey takers) | Users who clicked "Likely" (% of survey takers) |
|---|---|---|---|---|---|
| 100 | 22 (22%) | 14 | 1 (7%) | 7 (50%) | 6 (43%) |
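The percentages in the table use two different denominators. The trial rate is measured against the 100 unique logins, while each survey answer is measured against the 14 users who took the survey. The short Python sketch below recomputes them from the raw counts. The helper name `pct` is only for illustration. The survey response rate among users who tried the feature is derived from the same counts and does not appear in the original table.

```python
def pct(part: int, whole: int) -> int:
    """Return part/whole as a whole-number percentage, rounded to nearest."""
    return round(100 * part / whole)

# Raw counts copied from the table.
logins = 100      # unique logins while the fake feature was visible
tried = 22        # users who clicked the fake feature
surveyed = 14     # users who answered the follow-up survey
answers = {"Unlikely": 1, "Neutral": 7, "Likely": 6}

trial_rate = pct(tried, logins)            # denominator: unique logins
response_rate = pct(surveyed, tried)       # denominator: users who tried it
survey_shares = {k: pct(v, surveyed) for k, v in answers.items()}  # denominator: survey takers

print(f"Tried the feature: {trial_rate}%")
print(f"Answered the survey: {response_rate}% of those who tried")
for answer, share in survey_shares.items():
    print(f"{answer}: {share}%")
```

Running it reproduces the table's figures: 22% trial rate, and 7%, 50% and 43% for the three answers. It also shows that about 64% of the users who tried the fake went on to answer the survey.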

"In my experience, feature fakes do have to be used carefully. For example, I wouldn't have several going at once, and I wouldn't leave them in place for very long - just long enough to get the information you need to make a decision.

Remember, if a lot of users are clicking on the feature, that means they're excited about it, and you should probably build it pretty quickly. If very few users are clicking on it, you're not going to disappoint that many users when you don't build it. If you stick a stub out there for a few days, get great feedback on it, and then prioritize building the feature immediately, people may actually be more excited once it gets built because they've been anticipating it."

A feature fake is:

- an excellent technique from the Lean UX community (thanks to Laura Klein for introducing them!)
- an invitation to your users to try a feature
- a fast way to validate interest or non-interest in a feature
- not meant to live for very long
- not proof that the feature itself will be popular (you're only testing the fake version)
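The sketch below is a rough illustration of the mechanics. It assumes a stub entry point that does nothing except record who saw it, who clicked it, and how they answered a follow-up survey. Every name in it (`FeatureFake`, `see`, `click`, `answer`, `summary`) is hypothetical. A real product would send these events to its analytics system rather than keep them in memory.

```python
from collections import Counter
from dataclasses import dataclass, field

CHOICES = ("Unlikely", "Neutral", "Likely")

@dataclass
class FeatureFake:
    """Hypothetical in-memory tracker for a feature that has not been built."""
    exposed: set = field(default_factory=set)    # users who saw the stub
    clicked: set = field(default_factory=set)    # users who tried it
    answers: dict = field(default_factory=dict)  # user -> survey answer

    def see(self, user: str) -> None:
        self.exposed.add(user)

    def click(self, user: str) -> str:
        # A set counts each user once, however often they click.
        self.clicked.add(user)
        return "Coming soon! How likely are you to use this?"

    def answer(self, user: str, choice: str) -> None:
        # The survey is only shown after a click, so reject anything else.
        if user not in self.clicked:
            raise ValueError(f"{user} never tried the feature")
        if choice not in CHOICES:
            raise ValueError(f"unknown answer: {choice}")
        self.answers[user] = choice  # keep only the latest answer per user

    def summary(self) -> dict:
        return {
            "exposed": len(self.exposed),
            "tried": len(self.clicked),
            "surveyed": len(self.answers),
            "answers": dict(Counter(self.answers.values())),
        }


fake = FeatureFake()
for u in ("ann", "bob", "cy"):
    fake.see(u)
fake.click("ann")
fake.click("ann")  # a repeat click is still one user
fake.click("bob")
fake.answer("ann", "Likely")
print(fake.summary())
```

The design choice that matters is deduplicating by user. That is why a results table like the one above reports unique logins and users rather than raw click counts.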

Comment: Testing fake features

Here's another angle on this:

Chat is hard to evaluate with a survey. Users are being asked not just to evaluate a feature but to judge the quality of the community your company has built, because that community determines the quality of the help they are likely to receive.

100 clicks is decent evidence of interest. I'd move my thinking from the "feature" level up to the "strategy" level and ask whether a vibrant online community would make this a killer app. If that idea excites you, find another way to test it by giving users a real experience of high-quality interaction with each other. It could be a Q&A session, an interactive seminar, or anything else you can imagine.

Give them a taste of what you could provide, then test that. Again, your metrics are how many people clicked to participate and what they thought of the experience. You'll never have a community the size of Quicken's, but you can still deliver the WOW experience; just don't copy the way Quicken does it.

Posted on 2011-11-01 13:38 by compilerTech