Find an Optimal Solution without Repetitions - Summary of Ch 3

- 1. Find an Optimal Solution without Repetitions - Why DP?

- 2. Two Features of DP

- 3. Matrix Multiplication - Steps to Solve a DP Task

- 4. General structure of DP

- 5. Equations of Assignment Questions

- 6. Pair Work

- 7. Materials

1. Find an Optimal Solution without Repetitions - Why DP?

Dynamic programming (DP) can be used for tasks that ask for an optimal solution, usually a minimum or a maximum. Such tasks share one feature: the optimal solution to the task relies on the optimal solutions to its substructures (each of which usually has more than one candidate solution). That is, DP problems have an optimal substructure. While working through the substructures, many subproblems may be calculated more than once. In other words, the task has many overlapping subproblems. Solving overlapping subproblems repeatedly can lead to an exponential time complexity, like \(O(2^N)\), where \(N\) is the size of the task. To avoid this, DP stores the solutions to the subproblems in a table so that the same problem is not solved repeatedly, which may decrease the time complexity to a polynomial level.

2. Two Features of DP

DP has two features:

- Optimal Substructure

- Overlapping Subproblems

When we are looking for the optimal solution to a task and the task has both features, we can use DP to solve it. Thus the two features can be used to decide whether DP applies to an optimization problem.

2.1. Optimal Substructure

When a task has an optimal substructure, it can be solved using DP. A task with an optimal substructure means that the optimal solution to the task relies on the optimal solution to its substructure.

Finding the solution to a substructure is a subtask of the original task with a smaller data size, and the process is the same. Thus each DP task can be represented with a recursive part, much like a divide-and-conquer algorithm. However, the recursive part in DP is typically implemented not with recursive functions but with for-loops.

A subtask usually has more than one possible solution, which is where DP differs from divide-and-conquer: DP iterates over all the candidate solutions with one or more for-loops to find the optimal one.

We can use proof by contradiction (reductio ad absurdum) to check whether a task has an optimal substructure. Suppose we have a solution to the subtask (not necessarily the optimal one) that is used to calculate the solution to the task. If replacing it with a better solution to the subtask always yields a better solution to the task, we say that the task has an optimal substructure: once we use the optimal solution to the subtask, the solution to the task becomes optimal as well.

2.2. Overlapping Subproblems

When we are finding the optimal solutions to subtasks, we may calculate many problems more than once.

Suppose we are to find the optimal solution of a task on a sequence \(a_1, a_2, ..., a_n\) with DP. Let's say the optimal solution depends on the optimal solutions for both \(a_1, a_2, ..., a_{n - 1}\) and \(a_1, a_2, ..., a_{n - 2}\), and the optimal solution for \(a_1, a_2, ..., a_{n - 1}\) in turn depends on \(a_1, a_2, ..., a_{n - 2}\). Then the optimal solution for the sequence from \(a_1\) to \(a_{n - 2}\) is needed once directly and once more while solving \(a_1, a_2, ..., a_{n - 1}\), so it is calculated more than once: the subproblems overlap.

A large number of overlapping subproblems may lead to an exponential time complexity, which means that the algorithm performs badly. DP solves this by storing the optimal solutions to subtasks in a table, so that they can be reused many times once they are calculated. This avoids solving the same problems repeatedly and may decrease the time complexity of the task to a polynomial level, making the algorithm perform much better.
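The effect of the table can be seen in a minimal sketch. The recurrence below is a toy assumption made only for illustration (it is not one of the tasks in these notes): the answer for size n depends on the answers for sizes n - 1 and n - 2. The names solveNaive and solveWithTable are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Toy recurrence, assumed for illustration only:
//   best(0) = best(1) = 0,  best(n) = max(best(n - 1), best(n - 2)) + 1

// Plain recursion: the same sizes are solved again and again.
// The caller sets calls to 0 before the first call.
long long solveNaive(int n, long long& calls) {
    assert(n >= 0);
    ++calls;
    if (n <= 1) return 0;
    const long long a = solveNaive(n - 1, calls);
    const long long b = solveNaive(n - 2, calls);
    return (a > b ? a : b) + 1;
}

// DP: each size is solved once, stored in a table, and reused.
long long solveWithTable(int n, long long& evaluations) {
    assert(n >= 0);
    std::vector<long long> table(n + 2, 0);  // table[0], table[1]: base cases
    evaluations = 0;
    for (int size = 0; size <= n; ++size) {
        ++evaluations;
        if (size >= 2) {
            const long long a = table[size - 1];
            const long long b = table[size - 2];
            table[size] = (a > b ? a : b) + 1;
        }
    }
    return table[n];
}
```

For n = 10 the plain recursion makes 177 calls, while the table version evaluates only 11 entries and returns the same value.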

3. Matrix Multiplication - Steps to Solve a DP Task

There are mainly two steps to solve a DP problem once we have confirmed, by checking the two features, that DP applies.

- Think of an Equation, which helps the implementation of the task.

- Fill a Table, which means storing the optimal solutions to subtasks in a table so that we can use them repeatedly.

They will be explained with a concrete example: matrix multiplication.

Suppose we have two matrices \(M_{1_{p * q}}\) and \(M_{2_{q * r}}\), where \(p\) and \(q\) are the numbers of rows and columns of \(M_1\), and \(q\) and \(r\) those of \(M_2\). When the number of columns of \(M_1\) equals the number of rows of \(M_2\), the product \(M_1 * M_2\) is defined. When we implement the multiplication with for-loops, we perform \(p * q * r\) scalar multiplications in total, according to the rule of matrix multiplication. Note that the order cannot be changed to \(M_2 * M_1\) when the number of columns of \(M_2\) does not equal the number of rows of \(M_1\).
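A minimal sketch of the for-loop implementation, which counts the scalar multiplications so that the \(p * q * r\) figure can be checked. The Matrix alias and the function name multiply are assumptions made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<long long>>;

// Multiplies a (p x q) by b (q x r) with three nested for-loops.
// multiplications receives the number of scalar multiplications performed.
Matrix multiply(const Matrix& a, const Matrix& b, long long& multiplications) {
    assert(!a.empty() && !b.empty());
    assert(a[0].size() == b.size());  // columns of a must equal rows of b
    const std::size_t p = a.size();
    const std::size_t q = b.size();
    const std::size_t r = b[0].size();
    Matrix c(p, std::vector<long long>(r, 0));
    multiplications = 0;
    for (std::size_t i = 0; i < p; ++i) {
        for (std::size_t j = 0; j < r; ++j) {
            for (std::size_t k = 0; k < q; ++k) {
                c[i][j] += a[i][k] * b[k][j];
                ++multiplications;
            }
        }
    }
    return c;
}
```

The innermost statement runs once for every (i, j, k) triple, which is where the count \(p * q * r\) comes from.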

When we have multiple matrices, there are many possible ways to parenthesize the product. With 3 matrices \(M_{1_{100 * 200}}, M_{2_{200 * 300}}, M_{3_{300 * 600}}\), we have \((M_1 * M_2) * M_3\) and \(M_1 * (M_2 * M_3)\). The number of multiplications for the first combination is \((100 * 200 * 300) + (100 * 300 * 600) = 24000000\), where the first term counts \(M_1 * M_2\) and the second counts multiplying the \(100 * 300\) outcome of \(M_1 * M_2\) with \(M_3\). For the second combination it is \((200 * 300 * 600) + (100 * 200 * 600) = 48000000\), where the first term counts \(M_2 * M_3\) and the second counts multiplying \(M_1\) with the \(200 * 600\) outcome of \(M_2 * M_3\). In general, the total is the sum of the multiplications needed inside each of the two groups (zero for a group that is a single matrix, like \(M_3\) in the first case and \(M_1\) in the second) and the multiplications needed to multiply the two resulting matrices. The optimal combination here is the first one, since \(24000000 < 48000000\).
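The two totals can be checked with a short sketch. The function names are assumptions for this example; the shapes are passed as d0 x d1, d1 x d2 and d2 x d3.

```cpp
#include <cassert>

// Scalar multiplications needed to multiply a (p x q) matrix by a (q x r) matrix.
long long multiplyCost(long long p, long long q, long long r) {
    assert(p > 0 && q > 0 && r > 0);
    return p * q * r;
}

// Cost of (M1 * M2) * M3: first M1 * M2, then the (d0 x d2) result times M3.
long long costLeftFirst(long long d0, long long d1, long long d2, long long d3) {
    return multiplyCost(d0, d1, d2) + multiplyCost(d0, d2, d3);
}

// Cost of M1 * (M2 * M3): first M2 * M3, then M1 times the (d1 x d3) result.
long long costRightFirst(long long d0, long long d1, long long d2, long long d3) {
    return multiplyCost(d1, d2, d3) + multiplyCost(d0, d1, d3);
}
```

Calling costLeftFirst(100, 200, 300, 600) gives 24000000 and costRightFirst(100, 200, 300, 600) gives 48000000, matching the figures above.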

Our task is to find the least number of multiplications for the matrices \(M_{1_{30 * 35}}, M_{2_{35 * 15}}, M_{3_{15 * 5}}, M_{4_{5 * 10}}, M_{5_{10 * 20}}\) (the optimal solution), that is, the combination of parentheses that minimizes the number of multiplications.

As mentioned above, we can use DP to find the optimal solution. But before using DP, we should make sure that DP can be used for the task by checking the two features.

3.1. Two Features

To find the least number of multiplications for the matrices, we can first consider the optimal solution to \(M_1 * M_2 * ... * M_k\) (\(M_{1k}\) for short), which is a substructure of the original task, and \(M_{k + 1} * ... * M_5\) (\(M_{(k+1)5}\) for short), which is the other substructure, and calculate the total by adding up the multiplications for \(M_{1k}\), for \(M_{(k+1)5}\), and for \(M_{1k} * M_{(k+1)5}\). Suppose that, after finding a solution to the subtasks above, we find a better solution to \(M_{1k}\) with fewer multiplications. Then the total decreases. The same holds for \(M_{(k+1)5}\). Thus, when we use the optimal solutions to \(M_{1k}\) and \(M_{(k+1)5}\) and try every split point \(k\), we get the optimal solution to the task. After this analysis we can say with confidence that the task has an optimal substructure, so we can solve it with DP.

Let's say \(k = 3\) now. In order to find the optimum we should calculate the least number of multiplications of \(M_{13}\). And what about \(k = 4\)? We should calculate the optimal solution to \(M_{14}\), during which we still have to calculate the optimum of \(M_{13}\) for the combination \((M_1 * ... * M_3) * (M_4)\). Thus the optimum of \(M_{13}\) is calculated multiple times. There are many similar cases, so the task has overlapping subproblems.
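To see the overlap in numbers, the sketch below counts how often a plain recursion (without a table) asks for each range \(M_i ... M_j\). It assumes a range is split into \(M_i ... M_k\) and \(M_{k + 1} ... M_j\) for every \(i \leq k < j\); the function names are hypothetical.

```cpp
#include <cassert>
#include <vector>

using Counts = std::vector<std::vector<long long>>;

// Visits the range M_i..M_j the way a plain recursion would:
// every split k leads to the two smaller ranges (i..k) and (k+1..j).
void countVisits(int i, int j, Counts& visits) {
    ++visits[i][j];
    for (int k = i; k < j; ++k) {
        countVisits(i, k, visits);
        countVisits(k + 1, j, visits);
    }
}

// Returns visits[i][j] for a chain of n matrices (1-based indices).
Counts visitsForChain(int n) {
    assert(n >= 1);
    Counts visits(n + 1, std::vector<long long>(n + 1, 0));
    countVisits(1, n, visits);
    return visits;
}
```

For five matrices the range \(M_1 ... M_3\) is requested twice and the recursion makes 81 calls in total, although there are only 15 distinct ranges with \(i \leq j\).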

3.2. Think of an Equation

3.2.1. Recursive Part

Actually we've found part of the equation when analyzing the optimal substructure feature. Our goal is to find the least number of multiplications from matrix \(M_1\) to \(M_5\). During the process we may calculate the least number for other ranges, like from \(M_1\) to \(M_3\) or from \(M_3\) to \(M_4\), as in the example above. Thus we should use a 2-dimensional array to store the optimal solutions (the least number of multiplications for each range). Let's say the 2-d array, which can be viewed as a matrix, is m[i][j], and each element stores the least number of multiplications from \(M_i\) to \(M_j\). And let's say the shapes of the matrices are stored in a 1-dimensional array d[] in the form of {row num of \(M_1\), col num of \(M_1\) (or row num of \(M_2\)), col num of \(M_2\) (or row num of \(M_3\)), ..., col num of \(M_5\)}, so that \(M_i\) has d[i - 1] rows and d[i] columns. The equation can be:

\(m[i][j] = \min_{i \leq k < j} \{ m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j] \}\)

3.2.1.1. Max and Min

The equation here is a lot like the recursive part in the equations of divide-and-conquer problems, except that there is a "min" (or sometimes a "max" for other tasks). That's because we want the least number of multiplications, so the optimal solution to the task is the smallest value. And to find the optimal solution to a substructure (or a subtask), we should try each possible \(k\) and keep the best one (the smallest here), since a substructure may have many candidate solutions. The "min" or "max" is not always needed. In some tasks there are just two or three candidate solutions to a substructure, and we can use if-statements or the ternary operator ? : to pick the optimum. Some cases can be seen below.

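The range of \(k\) here is \(i \leq k < j\). The upper bound is strict because when \(k = j\), m[i][k] becomes m[i][j], which is the very subtask being calculated. The candidate for \(k = j\) would then read

\(m[i][j] = m[i][j] + m[j + 1][j] + d[i - 1] * d[j] * d[j]\)

thus

\(0 = m[j + 1][j] + d[i - 1] * d[j] * d[j]\)

This does not hold in general, and m[j + 1][j] is not valid here, so \(k = j\) is wrong.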

3.2.2. Boundary Conditions

The operation of matrix multiplication in the task is implemented from left to right. And since \(M_i\) represents a matrix on the left and \(M_j\) on the right, \(i\) should be less than \(j\).

There's a special condition when there is only one matrix in the parentheses, like \((M_1)\). It corresponds to calculating the least number of multiplications for the matrices from \(M_1\) to \(M_1\), that is m[1][1], which is 0. So i and j are the same in this case.

When \(i = j\), the optimal solution to the subtask is the number 0, which is also the optimum since there is only one possible solution. Thus we need not find the minimum.

And the equation for this case can be:

\(m[i][j] = 0, \quad i = j\)

This is also much like the divide-and-conquer algorithm: a common case and one or more special cases.

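3.2.3. The Entire Equation

\(m[i][j] = \begin{cases} 0, & i = j \\ \min_{i \leq k < j} \{ m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j] \}, & i < j \end{cases}\)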

3.3. Fill a Table - Implement the Equation

To fill the table for the task we generally have three things to consider:

- Dimension of the Table

- Range of the Table to Be Filled, that is, whether we should fill the whole table or part of it.

- Filling Order, that is, whether we should fill the table from left to right or from right to left, from top to bottom or from bottom to top.

3.3.1. Dimensions

Though we say DP uses a table to store the optimal solutions, the table may not be 2-dimensional. Sometimes it is a vector. We determine the dimension of the table from the equation, or from what we are calculating.

Since we are to calculate m[i][j], and it's a matrix, which is 2-dimensional, the table of the task is 2-dimensional.

There are also tasks with 1-dimensional vectors below.

3.3.1.1. Is a New Matrix Needed?

We created a new matrix m[i][j] for the task here. Creating a new array to store the optimal solutions is not always necessary. Sometimes we can write the optimal solutions into the array where the inputs are stored. Whether a new array is needed can be determined from the equation. Here the equation has m[i][j] and d[i], two separate arrays, where d[i] stores the inputs. Thus a new array is created. Tasks that don't need new arrays can be seen below.

3.3.2. Range to be Filled

Sometimes we do not need to fill the entire table. The conditions of the equation tell us which part to fill. Since the equation only has the conditions \(i = j\) and \(i < j\), we only need to fill the cells with \(i \leq j\). For the task here \(i > j\) is not needed, since we consider the multiplication order from left to right, and \(M_i\) corresponds to the matrix on the left and \(M_j\) to the one on the right.

Finding the range to fill saves time and improves performance to some degree, but it is not a must. If the whole table is filled when only part of it is needed, the program still runs correctly and gives the same answers, though the running time may increase when the data size is large.

3.3.3. Filling Order

One more thing we should consider is the order in which we fill the table. The order is also determined by the equation. The elements on the right hand side of the equal sign, which represent the optimal solutions to substructures, determine the optimal solution on the left hand side, so they must be filled first.

Notice that in the equation, m[i][j] is determined by m[i][k] and m[k + 1][j]. Since \(k < j\), m[i][k] can be viewed as m[i][j - c], where \(c\) is a positive integer. Thus to get the optimum for a greater j, we should first get those for smaller j. Therefore we should fill the table with j increasing gradually. And since \(k \geq i\), m[k + 1][j] can be viewed as m[i + c][j], where \(c\) is a positive integer. To get the optimum for a smaller i we should first get those for greater i. Therefore we fill the table with i decreasing gradually.
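The filling order can be checked mechanically. The sketch below does not compute any costs; it only marks cells as filled and verifies that every cell the equation reads, m[i][k] and m[k + 1][j], is already filled at that moment. The function name and the iDescending flag are assumptions for this example.

```cpp
#include <cassert>
#include <vector>

// Returns true when every cell read by the equation was filled before use.
// iDescending selects the order of the outer loop; j always increases.
bool dependenciesReady(int n, bool iDescending) {
    assert(n >= 1);
    std::vector<std::vector<bool>> filled(n + 2, std::vector<bool>(n + 2, false));
    for (int step = 0; step < n; ++step) {
        const int i = iDescending ? n - step : 1 + step;
        for (int j = i; j <= n; ++j) {
            for (int k = i; k < j; ++k) {
                // m[i][j] needs m[i][k] and m[k + 1][j].
                if (!filled[i][k] || !filled[k + 1][j]) return false;
            }
            filled[i][j] = true;
        }
    }
    return true;
}
```

With i decreasing the check passes. With i increasing it fails already at m[1][2], which needs m[2][2] before row 2 has been filled.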

3.4. Implementation

Through the analysis it's not hard to write code for the DP part. Below is the C++ implementation.

    for (int i = n; i >= 1; i--) {          // filling order of i: decrease
        for (int j = 1; j <= n; j++) {      // filling order of j: increase
            if (i == j) {                   // the boundary condition
                m[i][j] = 0;
            } else if (i < j) {             // find the optimum, the smallest one here
                // (a) start from the candidate for k = i
                m[i][j] = m[i][i] + m[i + 1][j] + d[i - 1] * d[i] * d[j];
                // k = i is already covered by (a), so continue from i + 1
                for (int k = i + 1; k < j; k++) {
                    if (m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j] < m[i][j]) { // find the smallest one
                        m[i][j] = m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j];
                    }
                }
            }
        }
    }

Note that the m array initially holds zeros if it is a global array, or indeterminate values if it is a local array created in a function. Since we are looking for the smallest solution, we had better initialize m[i][j] with the candidate for k = i, which ensures that every value in m[i][j] comes from the input data.
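For reference, here is a self-contained sketch of the same loops that can be compiled on its own. Storing the table in a std::vector, using long long for the counts, and the function name matrixChainCost are assumptions of this sketch; d follows the layout described above, so \(M_i\) has d[i - 1] rows and d[i] columns.

```cpp
#include <cassert>
#include <vector>

// Least number of scalar multiplications for M_1 * ... * M_n,
// where n = d.size() - 1 and M_i is d[i - 1] x d[i].
long long matrixChainCost(const std::vector<long long>& d) {
    assert(d.size() >= 2);
    const int n = static_cast<int>(d.size()) - 1;
    std::vector<std::vector<long long>> m(n + 2, std::vector<long long>(n + 2, 0));
    for (int i = n; i >= 1; --i) {        // i decreases
        for (int j = i; j <= n; ++j) {    // j increases, only i <= j is filled
            if (i == j) {                 // boundary condition
                m[i][j] = 0;
                continue;
            }
            // Initialize with the candidate for k = i.
            m[i][j] = m[i][i] + m[i + 1][j] + d[i - 1] * d[i] * d[j];
            for (int k = i + 1; k < j; ++k) {
                const long long cost = m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j];
                if (cost < m[i][j]) {
                    m[i][j] = cost;       // keep the smallest candidate
                }
            }
        }
    }
    return m[1][n];
}
```

For the three matrices of the earlier example, matrixChainCost({100, 200, 300, 600}) returns 24000000.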

3.5. Construct the Solution

So far we have only found the values of the optimal solutions to the subtasks and to the whole task. Sometimes we also need to know how the optimal solution is reached. For the task here, we may want to know where the parentheses are placed to get the least number of multiplications.

To meet this need we create one more matrix to store something like the path of the optimum. Here we put all the \(k\)s in a matrix so that we know where to put the parentheses. Let's call this matrix p[i][j]; p[i][j] is recorded whenever m[i][j] is updated while filling the table. It means that to get the optimum of m[i][j], we should first calculate the least number of multiplications from \(M_i\) to \(M_{p[i][j]}\), where \(p[i][j]\) is the \(k\) for the corresponding substructure. Then we calculate the least number from \(M_{p[i][j] + 1}\) to \(M_j\), that is, from \(M_{k + 1}\) to \(M_j\). To get all the \(k\)s related to the optimum we can use a recursive function.

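    // Prints the optimal parenthesization of M_i ... M_j using the split table p.
    void display(int i, int j) {
        if (i == j) {
            cout << "M_" << i;          // a single matrix needs no parentheses
        } else {
            cout << "(";
            display(i, p[i][j]);        // left group: M_i ... M_k
            cout << "*";
            display(p[i][j] + 1, j);    // right group: M_{k + 1} ... M_j
            cout << ")";
        }
    }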
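The following sketch ties the two tables together: it records the split k in p whenever m is updated and then builds the parenthesization as a string instead of printing it. The function names are hypothetical, and the sentinel initialization (the largest long long instead of the k = i candidate) is a variation chosen for this sketch.

```cpp
#include <cassert>
#include <limits>
#include <string>
#include <vector>

using Splits = std::vector<std::vector<int>>;

// Fills p so that p[i][j] is the split k giving the least cost for M_i..M_j.
Splits bestSplits(const std::vector<long long>& d) {
    assert(d.size() >= 2);
    const int n = static_cast<int>(d.size()) - 1;
    std::vector<std::vector<long long>> m(n + 2, std::vector<long long>(n + 2, 0));
    Splits p(n + 2, std::vector<int>(n + 2, 0));
    for (int i = n; i >= 1; --i) {
        for (int j = i + 1; j <= n; ++j) {
            m[i][j] = std::numeric_limits<long long>::max();  // sentinel
            for (int k = i; k < j; ++k) {
                const long long cost = m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j];
                if (cost < m[i][j]) {
                    m[i][j] = cost;
                    p[i][j] = k;  // keep the "path"
                }
            }
        }
    }
    return p;
}

// Same recursion as display, but returns the text.
std::string parenthesize(const Splits& p, int i, int j) {
    if (i == j) return "M_" + std::to_string(i);
    return "(" + parenthesize(p, i, p[i][j]) + "*" +
           parenthesize(p, p[i][j] + 1, j) + ")";
}
```

For the shapes {100, 200, 300, 600} of the earlier three-matrix example, parenthesize(p, 1, 3) yields ((M_1*M_2)*M_3).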

4. General structure of DP

We can work out a general structure for DP problems so that every time we use DP, we can quickly figure out what we need and what we should do.

4.1. Equation

Recall that the equation for a DP task contains several parts:

- Recursive Part, which contains a \(\min\{\}\) or \(\max\{\}\)

- Boundary Conditions, which deal with a common case and one or more special cases respectively

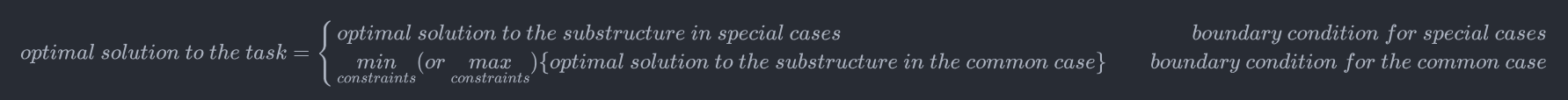

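It turns out that we can write a general structure of the equation as follows:

\(\text{optimal solution to the task} = \begin{cases} \text{optimal solution to the substructure for special cases}, & \text{boundary condition for special cases} \\ \min / \max_{\text{constraints}} \{ \text{optimal solution to the substructure for the common case} \}, & \text{boundary condition for the common case} \end{cases}\)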

Note that the constraints are optional. There may be cases with only 2 or 3 candidate solutions to a substructure. With so few candidates we can find the optimal one by comparison with if-statements or the ternary operator ? : . In this case we don't need a for-loop, so there is nothing like a k iterating over all candidate solutions, and no constraint is needed.

And here are the corresponding parts in the matrix multiplication task:

optimal solution to the task: m[i][j]

boundary condition for special cases: \(i = j\)

optimal solution to the substructure for special cases: \(0\)

boundary condition for the common case: \(i < j\)

optimal solution to the substructure for common cases: m[i][k] + m[k + 1][j] + d[i - 1] * d[k] * d[j]

constraints: \(i \leq k < j\)

4.2. Implementation

The equation contains a recursive part, and in DP the recursive part is implemented with for-loops. And in the process of finding the optimal solution to a substructure we should iterate over all the possible solutions. This can also be done with a for-loop. Thus the implementation of DP may contain lots of for-loops. And here's the structure.

    for (filling order for i) {
        for (filling order for j) {
            if (boundary condition for special cases) {
                optimal solutions to the substructure in special cases
                keep the "path"
            } else if (boundary condition for the common case) {
                for (constraints) {
                    if (comparison) { // (a)
                        optimal solution to the substructure
                        keep the "path"
                    }
                }
            }
        }
    }

Note that the two outer for-loops are for filling the table. When we search for the optimal solution to a substructure with a for-loop, we put an if-statement inside it to check whether the current candidate is smaller (or greater) than the solution stored in the table. Sometimes, when there are only two or three candidates, we can find the optimal one without a for-loop.

The general structure is not always fixed and can be modified to fit the requirement of the task.
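As a hedged illustration of the structure without the inner for-loop, consider a task assumed here only for illustration: find the maximum path sum from the top to the bottom of a number triangle, where each step moves to one of the two adjacent numbers in the next row. Each cell has only two candidates, so the ternary operator is enough, and the results are written back into the (copied) input array. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// t[row] holds row + 1 numbers. The table is filled from the bottom row
// upwards, and each cell is overwritten with the best sum starting there.
long long maxTrianglePath(std::vector<std::vector<long long>> t) {
    assert(!t.empty());
    for (std::size_t row = t.size() - 1; row-- > 0;) {  // rows size-2 .. 0
        for (std::size_t col = 0; col <= row; ++col) {
            const long long left = t[row + 1][col];
            const long long right = t[row + 1][col + 1];
            // Only two candidates, so no loop over k is needed.
            t[row][col] += (left > right) ? left : right;
        }
    }
    return t[0][0];
}
```

Filling from the bottom means every cell has both neighbours below it, so no special handling of the two edges is required.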

5. Equations of Assignment Questions

Here are the equations for the questions in the assignments for chapter 3 in PTA. Detailed info on the questions can be seen in 7. Materials.

Although there is only a longest[i] array in the solution part of the equation, there is still an a[i] array in the conditions, which is used for storing inputs. Thus a new array is needed to store the optimal solutions.

k here starts from 0 because, if k started from 1, the constraints could not be satisfied when \(i\) is 1, since no \(k\) satisfies \(1 \leq k < 1\). The solution for \(i = 1\) would then be skipped, resulting in wrong answers. Another way to prevent this problem is to initialize longest[i] outside the for-loop that searches for the optimal solution. The reason is that every optimal solution is initially treated as the solution to the special case a[k] >= a[i], in which the solution is 1. Then the for-loop is executed, and whenever a better solution is found it replaces the original one.
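The second approach (initializing longest[i] before the search loop) can be sketched as follows. This assumes the question asks for the length of the longest strictly increasing subsequence, which the names longest[i] and a[i] suggest but this section does not state; the function name and the 0-based indexing are also assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// longest[i]: length of the longest strictly increasing subsequence ending at a[i].
int longestIncreasing(const std::vector<int>& a) {
    // Every entry starts as the special case: the element on its own, length 1.
    std::vector<int> longest(a.size(), 1);
    int best = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        for (std::size_t k = 0; k < i; ++k) {
            // A better solution replaces the initial one.
            if (a[k] < a[i] && longest[k] + 1 > longest[i]) {
                longest[i] = longest[k] + 1;
            }
        }
        // A subsequence ending at index i has at most i + 1 elements.
        assert(longest[i] <= static_cast<int>(i) + 1);
        if (longest[i] > best) best = longest[i];
    }
    return best;
}
```

Because longest[i] already holds 1 before the inner loop runs, the first element is handled correctly even though the loop over k does not execute for it.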

The equation above holds after padding the table with a row of 0s above the first row and a column of a large number, like 200, to the left of the first column.

Note that a loop is not needed to find the optimal solution to a substructure here, since there are only two possible solutions.

6. Pair Work

This time Yang Yizhou wrote the main part of the code for the first and second questions. The method of the first question he worked out was from top to bottom, which could be improved to eliminate some boundary conditions caused by both edges of the triangle. I then found another way, from bottom to top, to avoid special conditions to handle. We couldn't work out a solution to the third question in class, which is a classic task for DP. He used the Baidu Oriented Programming strategy (BOP) to find a method for question 3.