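Crawler Final Assignment

1. Pick a topic you are interested in (no two students may choose the same one).

2. Write a crawler in Python to scrape data on that topic from the web.

3. Run text analysis on the scraped data and generate a word cloud.

4. Explain and interpret the results of the text analysis.

5. Write a complete blog post describing the implementation process, the problems encountered and how they were solved, and the analysis approach and conclusions.

6. Finally, submit all of the scraped data along with the crawler and data analysis source code.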

I chose the novel site 顶点小说网 (http://www.booktxt.net/).

1) The .py file that crawls the data and writes it to a txt file:

    # -*- coding: UTF-8 -*-
    import os
    import re
    import shutil
    import threading
    import time
    import urllib.request

    txt_content_partern = '<div id="content">(.*?)</div>'
    txt_name_partern = '<h1>(.*?)</h1>'
    catalog_partern = r'<dd><a href="/\w+_\w+/(.*?)\.html">(.*?)</a></dd>'

    flag = -1                # index of the last batch claimed by any thread
    max_len = 0              # total number of chapters
    atalog = []              # (chapter id, chapter title) pairs from the catalog page
    txt_max = 20             # chapters per batch
    max_thread = 20          # number of threads
    lock = threading.Lock()  # guards flag so two threads never claim the same batch
    start_time = time.perf_counter()  # time.clock() was removed in Python 3.8

    headers = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
        'Accept-Language': 'zh-CN,zh;q=0.8',
        'Cache-Control': 'max-age=0',
        'Proxy-Connection': 'keep-alive',
        'Host': 'www.booktxt.net',  # host name only, without the scheme
        'Referer': 'https://www.google.com.hk/',
        'Upgrade-Insecure-Requests': '1',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'
    }


    def down_txt(url, txtname, filename):
        """Download one chapter and append it to filename, retrying up to 10 times."""
        for i in range(10):
            try:
                html_data = urllib.request.urlopen(url).read().decode('gbk')
                content = re.findall(txt_content_partern, html_data, re.S | re.M)
                text = (content[0].replace(" ", "").replace("<br />", "")
                        .replace("\r\n\r\n", "\r\n").replace("<", "").replace("/p>", ""))
                # gbk matches the encoding the word cloud script reads the novel with
                with open(filename, "a", encoding="gbk") as fo:
                    fo.write("\r\n" + txtname + "\r\n")
                    fo.write(text)
                return True
            except Exception:
                if i == 9:
                    print("Too many failed requests, please download again")
                else:
                    print("Request failed, retrying...")
                    time.sleep(0.5)
        return False


    def down_mul(url, cnt, file_path):
        """Worker thread: keep claiming batches of txt_max chapters until none are left."""
        global flag
        got_work = False
        while True:
            with lock:  # claim the next batch atomically
                flag += 1
                star = flag * txt_max
            if star >= max_len:
                break
            end = min(star + txt_max, max_len)
            print("Fetching chapters " + str(star) + '-' + str(end) + '...')
            got_work = True
            # each batch goes into its own file, named after its first chapter
            batch_file = os.path.join(file_path, str(star + 1) + '.txt')
            for i in range(star, end):
                # down_txt already retries on its own
                down_txt(url + atalog[i][0] + ".html", atalog[i][1], batch_file)
        if got_work:
            print("Thread [" + str(cnt) + "] finished...")
        else:
            print("Thread [" + str(cnt) + "] got no task...")


    def main():
        global atalog, max_len
        # url_1 = input("Enter the catalog URL of the novel to download (www.booktxt.net only): ")
        # print('Fetching the chapter catalog...')
        url_1 = 'http://www.booktxt.net/1_1137/'
        for i in range(10):
            try:
                html_data = urllib.request.urlopen(url_1).read().decode('gbk')
                txt_name = re.compile(txt_name_partern).findall(html_data)
                print('Novel title: ' + txt_name[0])
                atalog = re.compile(catalog_partern).findall(html_data)
                print('Catalog fetched, total chapters: ' + str(len(atalog)))
                break
            except Exception:
                print("Request failed, retrying...")
                time.sleep(0.5)
        else:
            print("Too many failed requests, please download again")
            return

        # working directory named after the novel; empty it if it already exists
        files = txt_name[0]
        if not os.path.exists(files):
            os.mkdir(files)
        else:
            for file in os.listdir(files):
                os.remove(os.path.join(files, file))

        # chapters are kept in the order they appear on the catalog page
        max_len = len(atalog)

        threads = []
        for x in range(max_thread):
            t = threading.Thread(target=down_mul, args=(url_1, x + 1, files))
            print('Thread [' + str(x + 1) + '] started')
            t.start()
            threads.append(t)
        while True:
            alive = sum(t.is_alive() for t in threads)
            if alive == 0:
                break
            print("Fetching... please wait... threads remaining: " + str(alive))
            time.sleep(5)

        print("Merging chapters...")
        filenames = os.listdir(files)
        # sort numerically by first chapter number (sorting by name length is not reliable)
        filenames.sort(key=lambda name: int(os.path.splitext(name)[0]))
        print(filenames)
        with open(txt_name[0] + '.txt', "w", encoding="gbk") as fo:
            for file in filenames:
                with open(os.path.join(files, file), encoding="gbk") as part:
                    for line in part:
                        fo.write(line)
        print("Chapters merged... removing the working directory...")
        shutil.rmtree(files)

        times = time.perf_counter() - start_time
        h = int(times) // 3600
        m = int(times) % 3600 // 60
        s = int(times) % 60
        print("Download complete, total time:", h, "h", m, "min", s, "s")
        input()  # keep the console window open


    if __name__ == '__main__':
        # install the custom headers for every urlopen call
        opener = urllib.request.build_opener()
        opener.addheaders = list(headers.items())
        urllib.request.install_opener(opener)
        main()
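The crawler splits the catalog into batches of 20 chapters and lets each thread claim the next unclaimed batch from a shared counter. The following is a minimal sketch of that idea in isolation, not the script's actual code: `BatchDispenser`, `worker`, and `run` are hypothetical names introduced for illustration, and the download itself is replaced by recording which range was claimed.

```python
import threading


class BatchDispenser:
    """Hands out [start, end) chapter ranges to worker threads, one at a time."""

    def __init__(self, total, batch_size):
        self.total = total
        self.batch_size = batch_size
        self._next = 0
        self._lock = threading.Lock()

    def claim(self):
        """Return the next (start, end) range, or None when nothing is left."""
        with self._lock:  # read and advance the counter as one atomic step
            if self._next >= self.total:
                return None
            start = self._next
            end = min(start + self.batch_size, self.total)
            self._next = end
            return start, end


def worker(dispenser, claimed):
    # Keep claiming batches until the dispenser runs dry.
    while True:
        batch = dispenser.claim()
        if batch is None:
            break
        claimed.append(batch)  # stand-in for downloading chapters start..end-1


def run(total, batch_size, n_threads):
    """Run n_threads workers over `total` chapters and return the claimed ranges."""
    dispenser = BatchDispenser(total, batch_size)
    claimed = []
    threads = [threading.Thread(target=worker, args=(dispenser, claimed))
               for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(claimed)


if __name__ == "__main__":
    print(run(total=45, batch_size=20, n_threads=4))
```

Holding the lock while the counter is read and advanced is what keeps two threads from claiming the same range; with more threads than batches, the extra threads simply find nothing to do and exit.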

2) The .py file that generates the word cloud using Python packages:

    #coding:utf-8
    import matplotlib.pyplot as plt
    from wordcloud import WordCloud, ImageColorGenerator
    import jieba
    import numpy as np
    from PIL import Image

    # read the background (mask) image
    abel_mask = np.array(Image.open("timg.jpg"))

    # read the text to build the word cloud from
    with open('一念永恒.txt', encoding='gbk') as f:
        text_from_file_with_apath = f.read()

    # segment the text with jieba (full mode) and join the tokens with spaces
    wordlist_after_jieba = jieba.cut(text_from_file_with_apath, cut_all=True)
    wl_space_split = " ".join(wordlist_after_jieba)

    # my_wordcloud = WordCloud().generate(wl_space_split)  # default constructor
    my_wordcloud = WordCloud(
        background_color='white',    # background color
        mask=abel_mask,              # mask image that shapes the cloud
        max_words=100,               # maximum number of words to display
        stopwords={'nbsp', 'br'},    # stop words (leftover HTML fragments)
        font_path='C:/Users/Windows/fonts/simkai.ttf',  # font; Chinese does not render without one
        max_font_size=150,           # largest font size
        random_state=30,             # random seed, so layout and colors are reproducible
        scale=.5                     # output is drawn at half the mask's size
    ).generate(wl_space_split)

    # color generator based on the image; it is built here but not applied
    # (my_wordcloud.recolor(color_func=image_colors) would apply it)
    image_colors = ImageColorGenerator(abel_mask)

    # display the image
    plt.imshow(my_wordcloud)
    plt.axis("off")
    plt.show()
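To explain what the word cloud shows, it helps to look at the raw counts behind it. The sketch below counts the most frequent tokens with the standard library only; it assumes the text has already been segmented (for example by `jieba.cut`), and `top_words` and the sample tokens are hypothetical, made up for illustration rather than taken from the novel.

```python
from collections import Counter


def top_words(tokens, stopwords=frozenset(), n=5):
    """Return the n most frequent tokens, skipping stop words and 1-character tokens."""
    cleaned = (t.strip() for t in tokens)
    counts = Counter(t for t in cleaned if len(t) >= 2 and t not in stopwords)
    return counts.most_common(n)


if __name__ == "__main__":
    # Hypothetical tokens standing in for the output of a segmenter.
    sample_tokens = ["长生", "nbsp", "修行", "长生", "的", "br", "长生", "修行", "丹药"]
    print(top_words(sample_tokens, stopwords={"nbsp", "br"}, n=2))
```

Dropping single-character tokens is a design choice made here to filter out particles such as 的; it is not something the word cloud script itself does.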

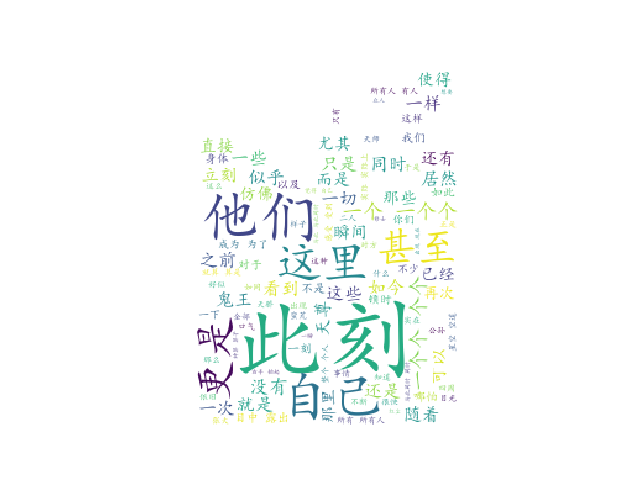

效果图如下

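Problems encountered and how they were solved:

1. Installing wordcloud failed; the error report said the VS C++ build tools were missing. Installing VS2017 solved it.

2. The generated word cloud image was too blurry to read. After several attempts, this turned out to depend on the resolution of the source image: switching to a higher-resolution image gave a good result. This fits how the script is set up, since WordCloud takes its canvas size from the mask image and the script's scale=.5 setting shrinks the drawn output further.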
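The link between mask resolution and sharpness can be made concrete with a little arithmetic. The sketch below is based on my understanding of how WordCloud sizes its output (canvas taken from the mask, then multiplied by `scale`), so treat it as an assumption rather than the library's documented contract; `rendered_size` is a hypothetical helper and the 800x600 mask is an invented example.

```python
def rendered_size(mask_width, mask_height, scale=1.0):
    """Estimate the pixel size of the drawn word cloud for a given mask and scale."""
    return int(mask_width * scale), int(mask_height * scale)


if __name__ == "__main__":
    # Invented example: an 800x600 mask with the script's scale=.5
    print(rendered_size(800, 600, scale=0.5))
    # The same mask at the default scale of 1
    print(rendered_size(800, 600))
```

Under that assumption, a small mask combined with scale=.5 leaves very few pixels per word, so raising the scale would be another way to get a sharper image besides swapping the mask.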

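Conclusion:

Crawlers are a good thing: one short script was enough to collect an entire novel for text analysis.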