Scrapy framework: log levels and passing data between requests

Scrapy log levels

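- When you run a spider with `scrapy crawl spiderName` (the value of the spider's `name` attribute, not its file name), the messages printed in the terminal are Scrapy's log output.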

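- Log levels, from most to least severe:

CRITICAL: critical errors

ERROR: ordinary errors

WARNING: warnings

INFO: general information

DEBUG: debugging information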

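- Controlling which log messages are output:

Add the following to the settings.py configuration file:

`LOG_LEVEL = 'ERROR'` (or any other level name) shows only messages at that level or more severe. The default is `'DEBUG'`, which shows everything.

`LOG_FILE = 'log.txt'` writes the log to the given file instead of printing it to the terminal.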
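Scrapy's log is produced with Python's standard `logging` module, and `LOG_LEVEL` acts as a threshold: a message is shown only if its level is at least as severe as the configured one. The sketch below reproduces that filtering with the standard library alone (`emitted_levels` and the logger name `log_level_demo` are made up for the demo), so the effect of each level can be checked without running a spider.

```python
import io
import logging


def emitted_levels(threshold):
    """Log one message at every level and return the level names that pass `threshold`."""
    stream = io.StringIO()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s"))

    logger = logging.getLogger("log_level_demo")
    logger.handlers = [handler]  # replace handlers left over from earlier calls
    logger.propagate = False     # keep the demo output away from the root logger
    logger.setLevel(threshold)   # plays the role of LOG_LEVEL

    logger.debug("debug message")
    logger.info("info message")
    logger.warning("warning message")
    logger.error("error message")
    logger.critical("critical message")
    return stream.getvalue().split()


print(emitted_levels("WARNING"))  # ['WARNING', 'ERROR', 'CRITICAL']
```

For a single run, the setting can also be overridden on the command line instead of in settings.py, e.g. `scrapy crawl movie -s LOG_LEVEL=ERROR`.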

Passing data between requests

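- Sometimes the data we want is not all on one page. For example, when crawling a movie site, the movie name and rating are on the first-level (list) page, while the other details we want are on each movie's second-level (detail) page. In that case we pass data along with the request: the list-page callback creates the request for the detail page and attaches the partially filled item to it through the request's `meta` dict.

- Example: crawl the movie site https://www.4567tv.tv/frim/index6.html, collecting the movie name, genre and rating from the first-level page, and the release date, director and running time from the second-level page. The code below is trimmed to two fields: the movie name from the list page and a description (`desc`) from the detail page.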
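The hand-off can be modelled without Scrapy. In the toy engine below, `FakeRequest`, `FakeResponse`, `crawl` and the `PAGES` dictionary are hypothetical stand-ins invented for illustration, not Scrapy's implementation; the only point is that the `meta` dict attached to a request comes back as `response.meta` in that request's callback, so an item started on the list page can be completed on the detail page.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FakeRequest:
    """Hypothetical stand-in for scrapy.Request: a URL, a callback and a meta dict."""
    url: str
    callback: Callable
    meta: Dict = field(default_factory=dict)


@dataclass
class FakeResponse:
    """Hypothetical stand-in for a response; meta is the dict of the request that produced it."""
    url: str
    text: str
    meta: Dict


# Hypothetical two-level "site": one list page and two detail pages
PAGES = {
    "/list": "movie-a,/detail/a;movie-b,/detail/b",
    "/detail/a": "desc of a",
    "/detail/b": "desc of b",
}


def parse_list(response):
    for entry in response.text.split(";"):
        name, detail_url = entry.split(",")
        item = {"name": name}  # data available on the list page
        yield FakeRequest(detail_url, parse_detail, meta={"item": item})


def parse_detail(response):
    item = response.meta["item"]  # the item created in parse_list
    item["desc"] = response.text  # completed with detail-page data
    yield item


def crawl(start_url, start_callback) -> List[dict]:
    """Process requests first-in, first-out, handing each request's meta to its response."""
    queue = deque([FakeRequest(start_url, start_callback)])
    items = []
    while queue:
        request = queue.popleft()
        response = FakeResponse(request.url, PAGES[request.url], request.meta)
        for result in request.callback(response):
            if isinstance(result, FakeRequest):
                queue.append(result)  # new request scheduled by the callback
            else:
                items.append(result)  # finished item
    return items


print(crawl("/list", parse_list))
```

Unlike this toy, Scrapy downloads requests concurrently, so detail callbacks may run in a different order from the entries on the list page. That is why each item should travel with its own request rather than being matched up by position later.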

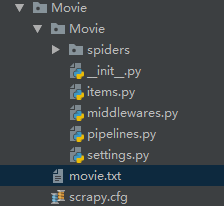

Spider file (movie):

```python
# -*- coding: utf-8 -*-
import scrapy

from ..items import MovieItem


class MovieSpider(scrapy.Spider):
    name = 'movie'
    # allowed_domains = ['www.mv.com']
    start_urls = ['https://www.4567tv.tv/frim/index6.html']

    # Callback for the detail page: receives the data passed along with the request
    def detail_parse(self, response):
        item = response.meta['item']
        desc = response.xpath('/html/body/div[1]/div/div/div/div[2]/p[5]/span[2]/text()').extract_first()
        item['desc'] = desc
        yield item

    def parse(self, response):
        li_list = response.xpath('//div[@class="stui-pannel_bd"]/ul/li')
        for li in li_list:
            name = li.xpath(".//h4[@class='title text-overflow']/a/text()").extract_first()
            href = li.xpath('.//h4[@class="title text-overflow"]/a/@href').extract_first()
            if not href:
                continue  # skip entries without a detail link
            # Resolve the (relative) href against the URL of the current page
            detail_url = response.urljoin(href)
            item = MovieItem()
            item['name'] = name
            # meta is a dict; all of its key-value pairs are handed to the given callback,
            # where they are available as response.meta
            yield scrapy.Request(url=detail_url, callback=self.detail_parse, meta={'item': item})
```
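Concatenating `'https://www.4567tv.tv' + href` only yields a valid URL when the href starts with `/`. Scrapy's `response.urljoin(href)` resolves the href against the page's URL in the same way as the standard library's `urllib.parse.urljoin`, which also copes with directory-relative and already-absolute links. The href values below are made-up examples, not paths taken from the site.

```python
from urllib.parse import urljoin

PAGE_URL = "https://www.4567tv.tv/frim/index6.html"

# Hypothetical href values of the kinds a list page might contain
hrefs = [
    "/movie/index1.html",                       # root-relative
    "index7.html",                              # relative to the current directory
    "https://www.4567tv.tv/movie/index2.html",  # already absolute
]

for href in hrefs:
    concatenated = "https://www.4567tv.tv" + href  # breaks for the last two cases
    joined = urljoin(PAGE_URL, href)               # correct in all three cases
    print(href, "->", joined, "| concatenation gives:", concatenated)
```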

In items.py:

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class MovieItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    name = scrapy.Field()
    desc = scrapy.Field()
```

In pipelines.py:

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


class MoviePipeline(object):
    f = None

    # Called once when the spider opens
    def open_spider(self, spider):
        print('Spider started!')
        self.f = open('./movie.txt', 'w', encoding='utf-8')

    # Called once for every item the spider yields
    def process_item(self, item, spider):
        name = item['name'] or ''
        desc = item.get('desc') or ''  # None when the detail-page XPath matched nothing
        self.f.write(name + ':' + desc + '\n')
        return item

    # Called once when the spider closes
    def close_spider(self, spider):
        print('Spider finished!')
        self.f.close()
```
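Scrapy calls `open_spider` once when the spider starts, `process_item` once for every item the spider yields, and `close_spider` once at the end. The sketch below drives a pipeline of the same shape by hand so its output can be checked without a crawl; `BufferPipeline` and `drive` are hypothetical names, and the in-memory buffer stands in for `movie.txt`.

```python
import io


class BufferPipeline:
    """Same hooks as a file-writing pipeline, but writes to an in-memory buffer."""

    def open_spider(self, spider):
        self.f = io.StringIO()
        self.output = ""

    def process_item(self, item, spider):
        name = item.get("name") or ""
        desc = item.get("desc") or ""  # a missing description becomes an empty string
        self.f.write(name + ":" + desc + "\n")
        return item  # returning the item passes it on to the next pipeline

    def close_spider(self, spider):
        self.output = self.f.getvalue()
        self.f.close()


def drive(pipeline, items, spider=None):
    """Call the hooks in Scrapy's order: open once, process each item, close once."""
    pipeline.open_spider(spider)
    for item in items:
        pipeline.process_item(item, spider)
    pipeline.close_spider(spider)
    return pipeline.output


print(drive(BufferPipeline(), [{"name": "Movie A", "desc": "Drama"}, {"name": "Movie B", "desc": None}]))
```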

In settings.py:

```python
ITEM_PIPELINES = {
    'Movie.pipelines.MoviePipeline': 300,
}
```
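The integer after each pipeline path sets its running order when several pipelines are enabled: items pass through lower values first, and the values are customarily chosen in the 0 to 1000 range. In the sketch below `Movie.pipelines.CleanPipeline` is a hypothetical second pipeline added only to show the ordering; sorting the dict by value reproduces the order in which items would flow through.

```python
ITEM_PIPELINES = {
    "Movie.pipelines.MoviePipeline": 300,
    "Movie.pipelines.CleanPipeline": 100,  # hypothetical pipeline; lower value, so it runs first
}

# Items flow through the pipelines in ascending order of their values
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(run_order)  # ['Movie.pipelines.CleanPipeline', 'Movie.pipelines.MoviePipeline']
```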

Example: crawling NetEase News

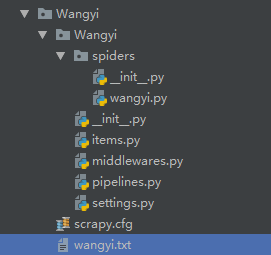

Spider file:

```python
# -*- coding: utf-8 -*-
import re

import scrapy

from ..items import WangyiItem


class WangyiSpider(scrapy.Spider):
    name = 'wangyi'
    # allowed_domains = ['www.yu.com']
    start_urls = ['https://temp.163.com/special/00804KVA/cm_guonei.js?callback=data_callback']

    # Callback for an article page: receives the item passed in meta and adds the body text
    def detail_parse(self, response):
        item = response.meta['item']
        detail_msg = response.xpath('//div[@class="post_text"]/p[1]/text()').extract_first()
        item['detail_msg'] = detail_msg
        yield item

    def parse(self, response):
        # The JS file is GBK-encoded, so decode the raw bytes directly
        str_msg = response.body.decode('gbk')
        title_list = re.findall(r'"title":"(.*?)"', str_msg)
        docurl_list = re.findall(r'"docurl":"(.*?)"', str_msg)
        # One new item per article, so each title stays with its own article; a single
        # shared item would be overwritten by the concurrently running callbacks
        for title, docurl in zip(title_list, docurl_list):
            item = WangyiItem()
            item['title'] = title
            yield scrapy.Request(url=docurl, callback=self.detail_parse, meta={'item': item})
```

items.py

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class WangyiItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()       # headline of one article
    detail_msg = scrapy.Field()  # first paragraph of the article body
```

Pipeline file (pipelines.py):

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


class WangyiPipeline(object):
    f = None

    def open_spider(self, spider):
        print('Spider started')
        self.f = open('./wangyi.txt', 'w', encoding='utf-8')

    # Write one line per article: its title, then the first paragraph of its body
    def process_item(self, item, spider):
        title = item['title']
        detail_msg = item.get('detail_msg') or ''  # None when the XPath matched nothing
        self.f.write(title + ':' + detail_msg + '\n')
        return item

    def close_spider(self, spider):
        print('Spider finished')
        self.f.close()
```
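A common workaround for mis-decoded Chinese text is `text.encode('iso-8859-1').decode('gbk')`. It works because ISO-8859-1 maps each byte to exactly one character, so GBK bytes that were wrongly decoded as ISO-8859-1 can be turned back into the original bytes without loss and decoded again. The sketch below checks this with a made-up headline, assuming the file is GBK-encoded; decoding the raw bytes with GBK directly (in Scrapy, `response.body.decode('gbk')`) gives the same result in one step.

```python
headline = "国内新闻标题示例"  # made-up headline ("sample domestic news headline")

raw = headline.encode("gbk")            # the bytes a GBK-encoded file would contain
mis_decoded = raw.decode("iso-8859-1")  # wrong charset: garbled text, but no bytes are lost
repaired = mis_decoded.encode("iso-8859-1").decode("gbk")  # the encode/decode round trip

print(mis_decoded == headline)        # False: the text is garbled
print(repaired == headline)           # True: the original text is recovered
print(raw.decode("gbk") == repaired)  # True: decoding the raw bytes directly is equivalent
```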

In settings.py:

```python
ITEM_PIPELINES = {
    'Wangyi.pipelines.WangyiPipeline': 300,
}
```

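Note: the tricky part of this example is that the news titles are loaded dynamically, so they do not appear in the page's HTML source. Searching across all resources the page loads (for example, with the global search in the browser's developer tools) shows that they come from a JS file, the `cm_guonei.js` URL used in `start_urls`.

The titles and article URLs are then extracted from that file with regular expressions.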
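The extraction step can be tried on a small sample first. `SAMPLE` below is a made-up JSONP string that only imitates a `"title":"...","docurl":"..."` record layout; the real file has more fields, and the example URLs are placeholders. Zipping the two match lists pairs each title with its own URL, which assumes every record contains exactly one title and one docurl, in that order.

```python
import re

# Made-up sample imitating a JSONP response of the form data_callback([...])
SAMPLE = '''data_callback([
{
"title":"示例标题一",
"docurl":"https://www.163.com/news/article/EXAMPLE1.html",
},
{
"title":"示例标题二",
"docurl":"https://www.163.com/news/article/EXAMPLE2.html",
}
])'''


def extract_articles(text):
    """Return (title, docurl) pairs; assumes one title and one docurl per record."""
    titles = re.findall(r'"title":"(.*?)"', text)    # non-greedy: stop at the closing quote
    docurls = re.findall(r'"docurl":"(.*?)"', text)
    return list(zip(titles, docurls))


for title, url in extract_articles(SAMPLE):
    print(title, url)
```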