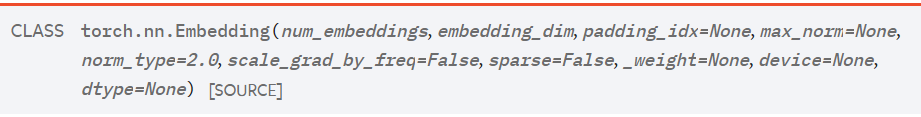

torch.nn.Embedding

Parameters

- num_embeddings (int) – size of the dictionary of embeddings

- embedding_dim (int) – the size of each embedding vector

- padding_idx (int, optional) – If specified, the entries at padding_idx do not contribute to the gradient; therefore, the embedding vector at padding_idx is not updated during training, i.e. it remains as a fixed “pad”. For a newly constructed Embedding, the embedding vector at padding_idx will default to all zeros, but can be updated to another value to be used as the padding vector.

- max_norm (float, optional) – If given, each embedding vector with norm larger than max_norm is renormalized to have norm max_norm.

- norm_type (float, optional) – The p of the p-norm to compute for the max_norm option. Default 2.

- scale_grad_by_freq (boolean, optional) – If given, this will scale gradients by the inverse of frequency of the words in the mini-batch. Default False.

- sparse (bool, optional) – If True, gradient w.r.t. weight matrix will be a sparse tensor. See Notes for more details regarding sparse gradients.
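The behaviour of padding_idx and max_norm described above can be checked with a small experiment. The following is a minimal sketch, not part of the original post: the variable names (emb, clipped, ids) and the fill value 3.0 are chosen only for illustration, and everything runs on CPU.

```python
import torch
from torch import nn

# padding_idx: row 0 is initialized to zeros and receives no gradient.
emb = nn.Embedding(num_embeddings=5, embedding_dim=4, padding_idx=0)
pad_row_is_zero = bool(torch.all(emb.weight[0] == 0))

ids = torch.tensor([[0, 1, 2], [0, 0, 3]])  # 0 plays the role of the pad token
emb(ids).sum().backward()
pad_grad_is_zero = bool(torch.all(emb.weight.grad[0] == 0))
used_grad_nonzero = bool(torch.any(emb.weight.grad[1] != 0))

# max_norm: rows that are looked up get renormalized in place during forward.
clipped = nn.Embedding(5, 4, max_norm=1.0)
with torch.no_grad():
    clipped.weight.fill_(3.0)  # every row now has L2 norm 6.0 (illustrative value)

out = clipped(torch.tensor([1, 2]))
looked_up_norm = out.detach().norm(p=2, dim=1).max().item()
untouched_norm = clipped.weight[0].detach().norm(p=2).item()

print(pad_row_is_zero, pad_grad_is_zero, used_grad_nonzero)
print(looked_up_norm, untouched_norm)
```

Note that max_norm modifies the weight tensor itself, and in this sketch only the rows that were actually looked up (rows 1 and 2) are rescaled; row 0 keeps its original norm.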

Examples:

import torch

from torch import nn

embedding = nn.Embedding(5, 4) # Assume the dictionary has only 5 words and each word vector has dimension 4

word = [[1, 2, 3],

[2, 3, 4]] # Each number stands for one word, e.g. {'!': 0, 'how': 1, 'are': 2, 'you': 3, 'ok': 4}

# These numbers must lie in the range 0 to 4, because only 5 words were defined above

embed = embedding(torch.LongTensor(word))

print(embed)

print(embed.size())

Output:

tensor([[[-0.0436, -1.0037, 0.2681, -0.3834],

[ 0.0222, -0.7280, -0.6952, -0.7877],

[ 1.4341, -0.0511, 1.3429, -1.2345]],

[[ 0.0222, -0.7280, -0.6952, -0.7877],

[ 1.4341, -0.0511, 1.3429, -1.2345],

[-0.2014, -0.4946, -0.0273, 0.5654]]], grad_fn=<EmbeddingBackward0>)

torch.Size([2, 3, 4])
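In the output above, the rows for indices 2 and 3 appear in both sequences with identical values, because the layer is simply a row lookup into its weight matrix. A minimal sketch of that equivalence follows, reusing the names from the example; `manual` and the out-of-range index 5 are added here only for illustration, and the exact exception type for an invalid index is assumed for CPU execution.

```python
import torch
from torch import nn

embedding = nn.Embedding(5, 4)
ids = torch.LongTensor([[1, 2, 3], [2, 3, 4]])
embed = embedding(ids)

# Indexing the weight matrix directly gives the same values as the forward pass.
manual = embedding.weight[ids]
same = torch.equal(embed, manual)
shape = tuple(embed.shape)  # input shape (2, 3) plus embedding_dim 4

# The same index always maps to the same row, wherever it appears in the input.
repeated_rows_match = torch.equal(embed[0, 1], embed[1, 0])  # both are index 2

# An index outside 0..num_embeddings-1 is rejected (on CPU this raises an error).
try:
    embedding(torch.LongTensor([5]))
    out_of_range_raised = False
except (IndexError, RuntimeError):
    out_of_range_raised = True

print(same, shape, repeated_rows_match, out_of_range_raised)
```

The weights are randomly initialized, so the concrete numbers differ from run to run unless a seed is set; only the shape and the lookup relationship are stable.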

Author: 图神经网络. When reposting, please cite the original link: https://www.cnblogs.com/BlairGrowing/p/15683850.html