Video Scraping

Scraping season 2 of Miss Kobayashi's Dragon Maid (小林家的龙女仆2) from Sakura Video (樱花视频).

```python
# UAPool.py
def UserAgent():
    user_agents = [
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60',
        'Opera/8.0 (Windows NT 5.1; U; en)',
        'Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',
        'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36',
        'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
        'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)',
        'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0',
        'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)',
    ]
    return user_agents
```
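The main script calls `random.choice(UserAgent())` only once, at import time, so every request in a run shares the same User-Agent. If the intent is to rotate the UA per request, a minimal sketch is to build the headers inside a small helper. `random_headers` is a hypothetical name, and `UserAgent` here is a shortened stand-in for `UA.UAPool.UserAgent` so the block runs on its own.

```python
import random


def UserAgent():
    # Shortened stand-in for UA.UAPool.UserAgent (assumption: same return shape, a list of strings)
    return [
        'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',
        'Opera/8.0 (Windows NT 5.1; U; en)',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko',
    ]


def random_headers():
    """Hypothetical helper: return a fresh headers dict with a randomly chosen UA."""
    return {'User-Agent': random.choice(UserAgent())}


if __name__ == '__main__':
    for _ in range(3):
        print(random_headers()['User-Agent'])
```

Usage would then be `requests.get(url, headers=random_headers())` at each call site, instead of passing the module-level `headers` dict.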

import requests

from lxml import etree

import time

from UA.UAPool import UserAgent

import random

import os

import asyncio

import aiohttp

from concurrent.futures import ThreadPoolExecutor

from contextlib import closing

folder_name = 'video_folder/'

if not os.path.exists(folder_name):

os.makedirs(folder_name)

headers = {

'User-Agent': random.choice(UserAgent()) # 从UA池中挑选

}

start_time = time.time()

all_data = []

async def downMP4(html, names):

video_name = names

video_url = html.replace('https://play.lcdck.com/vip.php?url=', '')

# print(video_name)

# print(video_url)

with closing(requests.get(video_url, headers=headers, stream=True)) as response:

chunk_size = 1024 # 单次请求最大值

content_size = int(response.headers['content-length']) # 内容体总大小

data_count = 0

# 同步下载

with open(folder_name+video_name+'.mp4', "wb") as file:

for data in response.iter_content(chunk_size=chunk_size):

file.write(data)

data_count = data_count + len(data)

now_jd = (data_count / content_size) * 100

print("\r文件下载进度:%d%%(%d/%d) - %s" % (now_jd, data_count, content_size, video_name+'.mp4'), end=" ")

print(video_name + " 下载完成")

# 异步下载

# async with aiohttp.ClientSession() as session:

# async with session.get(url=video_url, headers=headers) as video_response:

# video_content = await video_response.read()

# with open(folder_name+video_name+'.mp4', 'wb') as f:

# f.write(video_content)

# print(video_name + " 下载完成")

def parsePage():

# 第一步,确定爬虫地址

urls = ['https://www.dangniao.com/play/3361-0-{}.html'.format(i) for i in range(0, 10)] # 控制下载集数

for url in urls:

# 第二步:发送请求

response = requests.get(url=url, headers=headers)

# 第三步:获取数据

html_content = response.text

sel = etree.HTML(html_content)

video_list = sel.xpath('//div[@id="cms_player"]/iframe/@src')[0]

names = sel.xpath('//h2[@class="text-nowrap"]/a[2]/text()')[0] + sel.xpath('//h2[@class="text-nowrap"]/small/text()')[0]

data = {

'video_list': video_list,

'names': names

}

all_data.append(data)

# 多线程

# executor = ThreadPoolExecutor(4)

# for i in range(len(all_data)):

# executor.submit(downMP4, all_data[i]['video_list'], all_data[i]['names'])

# executor.shutdown(True)

# 协程

loop = asyncio.get_event_loop()

downMP4Task = []

for i in range(len(all_data)):

downMP4Proxy = downMP4(all_data[i]['video_list'], all_data[i]['names'])

future = asyncio.ensure_future(downMP4Proxy)

downMP4Task.append(future)

loop.run_until_complete(asyncio.wait(downMP4Task))

loop.close()

finish_time = time.time()

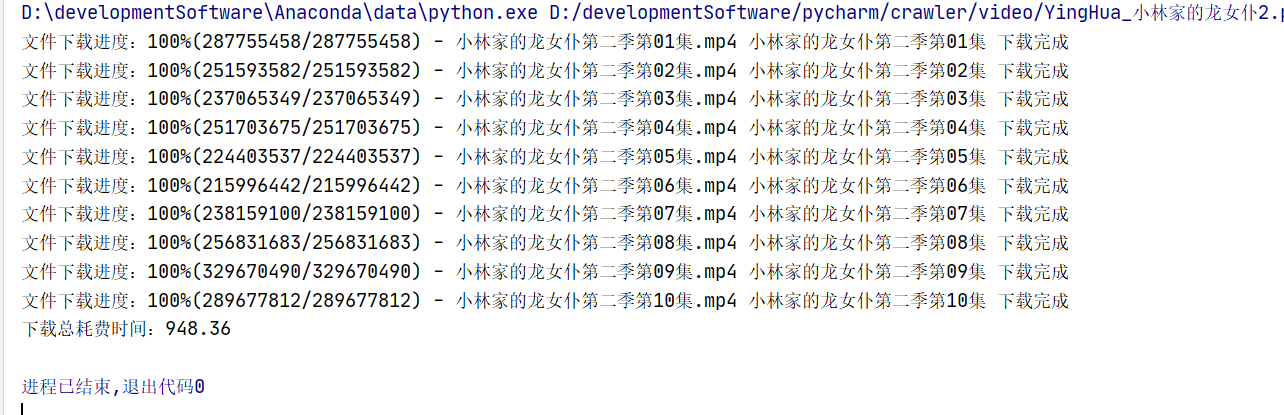

print('下载总耗费时间:' + str(round(finish_time-start_time, 2)))

if __name__ == '__main__':

parsePage()
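The episode name built from the two XPath text nodes goes straight into the output path. If a title contains `/`, or any of `\ : * ? " < > |` on Windows, `open()` fails or writes into an unexpected directory. A minimal sketch of a sanitizing step, using a hypothetical `safe_filename` helper that is not part of the original script (it does not handle Windows reserved names such as `CON`):

```python
import re

# Characters not allowed in Windows file names; '/' is also the POSIX path separator.
_ILLEGAL = re.compile(r'[\\/:*?"<>|]')


def safe_filename(name: str, replacement: str = '_') -> str:
    """Hypothetical helper: make a scraped title usable as a file name."""
    cleaned = _ILLEGAL.sub(replacement, name)
    # Windows also rejects trailing dots; surrounding whitespace is usually accidental.
    cleaned = cleaned.strip().rstrip('.')
    return cleaned or 'untitled'


if __name__ == '__main__':
    print(safe_filename('第1集: 开始/结束?'))
```

In `downMP4`, the path would then become `folder_name + safe_filename(video_name) + '.mp4'`.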

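Downloading with coroutines plus asynchronous requests (aiohttp) tended to time out, so here I chose coroutines plus synchronous downloads (requests).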
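Because `downMP4` is declared `async def` but never awaits anything, each task runs to completion before the next one starts: the blocking `requests` call holds the event loop, so this setup effectively downloads the episodes one at a time. If concurrency is wanted while keeping synchronous downloads, one option (a sketch, not the author's code) is to hand each blocking call to a worker thread with `asyncio.to_thread` (Python 3.9+) and cap concurrency with `asyncio.Semaphore`. `blocking_download` is a hypothetical stand-in that only sleeps; in the real script it would be the body of `downMP4` rewritten as a plain `def`. As for the aiohttp timeouts, a plausible cause (an assumption, not verified against this site) is aiohttp's default total timeout of 5 minutes per request, which a full episode can exceed; it can be raised with `aiohttp.ClientTimeout`.

```python
import asyncio
import time


def blocking_download(url: str) -> str:
    """Hypothetical stand-in for a synchronous, requests-based download."""
    time.sleep(0.2)  # simulate network I/O that blocks the calling thread
    return url


async def download_all(urls, limit=4):
    # Cap the number of simultaneous downloads so the site is not flooded.
    sem = asyncio.Semaphore(limit)

    async def worker(url):
        async with sem:
            # Run the blocking call in a thread so the event loop stays free.
            return await asyncio.to_thread(blocking_download, url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(worker(u) for u in urls))


if __name__ == '__main__':
    urls = [f'https://example.com/ep{i}.mp4' for i in range(8)]
    start = time.perf_counter()
    results = asyncio.run(download_all(urls))
    print(len(results), round(time.perf_counter() - start, 1))
```

With 8 simulated downloads of 0.2 s each and `limit=4`, the run takes roughly two rounds instead of eight; `ThreadPoolExecutor` with a plain `def` downloader would give the same effect without asyncio.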
