MapReduce Example: Custom Output Formats in MapReduce

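When a job must handle <key,value> pairs in a special way, the developer extends FileOutputFormat to implement a new output format, and also extends RecordWriter to implement how that format writes each key and value. Suppose we have an e-commerce data table, cat_group1, with four comma-separated fields: group id, group name, group code, and luxury flag. The contents of cat_group1 are as follows: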

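| Group id | Group name | Group code | Luxury flag |
|---|---|---|---|
| 512 | Luxury goods | c | 1 |
| 675 | Bags | 1 | 1 |
| 676 | Cosmetics | 2 | 1 |
| 677 | Home appliances | 3 | 1 |
| 501 | Organic food | 1 | 0 |
| 502 | Fruit and vegetables | 2 | 0 |
| 503 | Meat, poultry, eggs, dairy | 3 | 0 |
| 504 | Deep-sea seafood | 4 | 0 |
| 505 | Local specialties | 5 | 0 |
| 506 | Imported food | 6 | 0 |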

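The task is to write all records that share the same luxury flag (flag) into one file, to name each file after that flag value, and to separate key and value with ":" on output, in the form "key:value".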

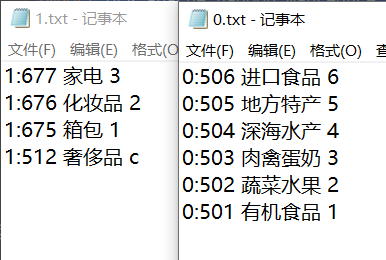

The job produces two output files, 0.txt and 1.txt.
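To make the target concrete, here is a minimal plain-Java sketch of what the two files should contain. It does not use Hadoop at all; the class name `FlagGroupingSketch` and the method `groupByFlag` are hypothetical, and the sample rows are English renderings of three rows from the table. The value layout (id, name, and code joined by spaces) follows the mapper shown later in this post.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java imitation of the expected result; no Hadoop classes are involved.
final class FlagGroupingSketch {

    // Groups comma-separated records by their fourth field (the luxury flag).
    // Returns a map from file name ("<flag>.txt") to the lines that file would hold.
    static Map<String, List<String>> groupByFlag(List<String> records) {
        Map<String, List<String>> files = new TreeMap<>();
        for (String record : records) {
            String[] f = record.split(",");
            if (f.length < 4) {
                continue; // skip malformed lines
            }
            String key = f[3];                              // luxury flag
            String value = f[0] + " " + f[1] + " " + f[2];  // id, name, code
            files.computeIfAbsent(key + ".txt", name -> new ArrayList<>())
                 .add(key + ":" + value);
        }
        return files;
    }

    public static void main(String[] args) {
        List<String> sample = List.of(
                "512,Luxury goods,c,1",
                "675,Bags,1,1",
                "501,Organic food,1,0");
        groupByFlag(sample).forEach((file, lines) -> {
            System.out.println(file);
            lines.forEach(line -> System.out.println("  " + line));
        });
    }
}
```

In the real job the order of the lines inside each file is not guaranteed, because MapReduce does not order the values that arrive at a reducer for one key.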

The MyMultipleOutputFormat class

```java
package mapreduce12;

import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.GzipCodec;
import org.apache.hadoop.mapreduce.OutputCommitter;
import org.apache.hadoop.mapreduce.RecordWriter;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.ReflectionUtils;

// 11. MapReduce example: custom output format
public abstract class MyMultipleOutputFormat<K extends WritableComparable<?>, V extends Writable>
        extends FileOutputFormat<K, V> {

    private MultiRecordWriter writer = null;

    // Factory method: returns the single writer that routes records to per-name files.
    @Override
    public RecordWriter<K, V> getRecordWriter(TaskAttemptContext job) throws IOException {
        if (writer == null) {
            writer = new MultiRecordWriter(job, getTaskOutputPath(job));
        }
        return writer;
    }

    // Resolves the directory this task attempt should write into.
    private Path getTaskOutputPath(TaskAttemptContext conf) throws IOException {
        Path workPath = null;
        OutputCommitter committer = super.getOutputCommitter(conf);
        if (committer instanceof FileOutputCommitter) {
            workPath = ((FileOutputCommitter) committer).getWorkPath();
        } else {
            Path outputPath = getOutputPath(conf);
            if (outputPath == null) {
                throw new IOException("Undefined job output-path");
            }
            workPath = outputPath;
        }
        return workPath;
    }

    // Subclasses decide which file a given <key,value> pair goes to.
    protected abstract String generateFileNameForKayValue(K key, V value, Configuration conf);

    // Writes one "key<separator>value" line per record to a single stream.
    protected static class LineRecordWriter<K, V> extends RecordWriter<K, V> {
        private static final byte[] newline = "\n".getBytes(StandardCharsets.UTF_8);

        protected DataOutputStream out;
        private final byte[] keyValueSeparator;

        public LineRecordWriter(DataOutputStream out, String keyValueSeparator) {
            this.out = out;
            this.keyValueSeparator = keyValueSeparator.getBytes(StandardCharsets.UTF_8);
        }

        public LineRecordWriter(DataOutputStream out) {
            this(out, ":");
        }

        private void writeObject(Object o) throws IOException {
            if (o instanceof Text) {
                // Text already holds UTF-8 bytes; only the first getLength() bytes are valid.
                Text to = (Text) o;
                out.write(to.getBytes(), 0, to.getLength());
            } else {
                out.write(o.toString().getBytes(StandardCharsets.UTF_8));
            }
        }

        @Override
        public synchronized void write(K key, V value) throws IOException {
            boolean nullKey = key == null || key instanceof NullWritable;
            boolean nullValue = value == null || value instanceof NullWritable;
            if (nullKey && nullValue) {
                return; // nothing to write, not even an empty line
            }
            if (!nullKey) {
                writeObject(key);
            }
            if (!(nullKey || nullValue)) {
                out.write(keyValueSeparator);
            }
            if (!nullValue) {
                writeObject(value);
            }
            out.write(newline);
        }

        @Override
        public synchronized void close(TaskAttemptContext context) throws IOException {
            out.close();
        }
    }

    // Keeps one LineRecordWriter per output file name and creates them lazily.
    public class MultiRecordWriter extends RecordWriter<K, V> {
        private final Map<String, RecordWriter<K, V>> recordWriters = new HashMap<>();
        private final TaskAttemptContext job;
        private final Path workPath;

        public MultiRecordWriter(TaskAttemptContext job, Path workPath) {
            this.job = job;
            this.workPath = workPath;
        }

        @Override
        public void close(TaskAttemptContext context) throws IOException, InterruptedException {
            for (RecordWriter<K, V> rw : recordWriters.values()) {
                rw.close(context);
            }
            recordWriters.clear();
        }

        @Override
        public void write(K key, V value) throws IOException, InterruptedException {
            String baseName = generateFileNameForKayValue(key, value, job.getConfiguration());
            RecordWriter<K, V> rw = recordWriters.get(baseName);
            if (rw == null) {
                rw = getBaseRecordWriter(job, baseName);
                recordWriters.put(baseName, rw);
            }
            rw.write(key, value);
        }

        private RecordWriter<K, V> getBaseRecordWriter(TaskAttemptContext job, String baseName)
                throws IOException, InterruptedException {
            Configuration conf = job.getConfiguration();
            boolean isCompressed = getCompressOutput(job);
            String keyValueSeparator = ":";
            RecordWriter<K, V> recordWriter;
            if (isCompressed) {
                Class<? extends CompressionCodec> codecClass =
                        getOutputCompressorClass(job, GzipCodec.class);
                CompressionCodec codec = ReflectionUtils.newInstance(codecClass, conf);
                Path file = new Path(workPath, baseName + codec.getDefaultExtension());
                FSDataOutputStream fileOut = file.getFileSystem(conf).create(file, false);
                recordWriter = new LineRecordWriter<K, V>(
                        new DataOutputStream(codec.createOutputStream(fileOut)), keyValueSeparator);
            } else {
                Path file = new Path(workPath, baseName);
                FSDataOutputStream fileOut = file.getFileSystem(conf).create(file, false);
                recordWriter = new LineRecordWriter<K, V>(fileOut, keyValueSeparator);
            }
            return recordWriter;
        }
    }
}
```

The FileOutputMR class

```java
package mapreduce12;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// 11. MapReduce example: custom output format
public class FileOutputMR {

    // Emits <flag, "id name code"> for every input line.
    public static class TokenizerMapper extends Mapper<Object, Text, Text, Text> {
        private final Text flag = new Text();
        private final Text val = new Text();

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            String[] str = value.toString().split(",");
            if (str.length < 4) {
                return; // skip blank or malformed lines
            }
            flag.set(str[3]);
            val.set(str[0] + " " + str[1] + " " + str[2]);
            context.write(flag, val);
        }
    }

    // Identity reducer: passes every <flag, value> pair through to the output format.
    public static class IntSumReducer extends Reducer<Text, Text, Text, Text> {
        @Override
        public void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            for (Text val : values) {
                context.write(key, val);
            }
        }
    }

    // Names each output file after the key, e.g. "0.txt" and "1.txt".
    public static class AlphabetOutputFormat extends MyMultipleOutputFormat<Text, Text> {
        @Override
        protected String generateFileNameForKayValue(Text key, Text value, Configuration conf) {
            return key + ".txt";
        }
    }

    public static void main(String[] args)
            throws IOException, InterruptedException, ClassNotFoundException {
        Configuration conf = new Configuration();
        // Job.getInstance replaces the deprecated new Job(conf, name) constructor.
        Job job = Job.getInstance(conf, "FileOutputMR");
        job.setJarByClass(FileOutputMR.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        job.setOutputFormatClass(AlphabetOutputFormat.class);
        FileInputFormat.addInputPath(job,
                new Path("hdfs://192.168.51.100:8020/mymapreduce12/in/cat_group1"));
        FileOutputFormat.setOutputPath(job,
                new Path("hdfs://192.168.51.100:8020/mymapreduce12/out"));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

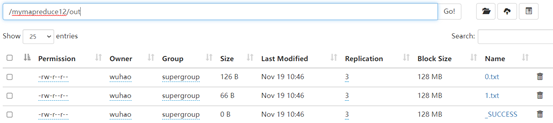

Result:

Principles:

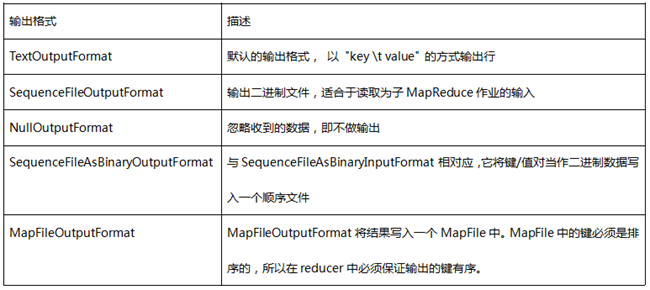

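1. Output formats: the key-value pairs handed to the OutputCollector (Context.write() in the newer org.apache.hadoop.mapreduce API) are written to output files, and the output format controls how they are written. OutputFormat plays much the same role as the InputFormat class described earlier. The OutputFormat implementations that ship with Hadoop write files to the local disk or to HDFS. With no extra configuration, the results are written as several files named part-000*, one file per reduce task, and the layout of the file contents cannot be chosen freely. Each reducer writes its output to a separate file in a common output directory. These files are usually named part-nnnnn, where nnnnn is the partition id associated with the reduce task. The output directory is set with FileOutputFormat.setOutputPath(). The output format for a particular MapReduce job is selected with setOutputFormat() on the job's JobConf object, or with Job.setOutputFormatClass() in the newer API that the FileOutputMR class above uses. The provided output formats are described below: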
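The naming pattern can be illustrated with a few lines of plain Java. This is only a sketch that mimics the pattern; it is not Hadoop's own code, and the class and method names are hypothetical. It assumes the usual conventions: part-nnnnn for the older mapred API, and part-r-nnnnn for reduce output in the newer mapreduce API.

```java
// Illustrative only: reproduces the file-name pattern, not Hadoop's implementation.
final class PartFileNames {

    // Older (mapred) API style: part-00000, part-00001, ...
    static String oldApiName(int partition) {
        return String.format("part-%05d", partition);
    }

    // Newer (mapreduce) API style for reduce output: part-r-00000, part-r-00001, ...
    static String newApiReduceName(int partition) {
        return String.format("part-r-%05d", partition);
    }

    public static void main(String[] args) {
        int reducers = 3; // one output file per reduce task
        for (int p = 0; p < reducers; p++) {
            System.out.println(oldApiName(p) + "    " + newApiReduceName(p));
        }
    }
}
```

A job with three reduce tasks therefore leaves three such files in the output directory, which is exactly the behavior the custom output format in this post replaces with key-based names.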

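Hadoop provides several OutputFormat implementations for writing files. The basic (default) one is TextOutputFormat, which writes one key-value pair per line to a text file, with key and value separated by a tab by default. A later MapReduce job can then easily read the data back with KeyValueTextInputFormat, and the files are also human-readable. A better intermediate format between MapReduce jobs is SequenceFileOutputFormat, which quickly serializes arbitrary data types to a file; the matching SequenceFileInputFormat deserializes the file into the same types and hands them to the next Mapper in the same form in which the previous Reducer emitted them. NullOutputFormat produces no output files and discards every key-value pair passed to it through the OutputCollector. It is useful when you write your own output files explicitly in reduce() and do not want the Hadoop framework to create extra empty output files.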
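The line layout that TextOutputFormat produces, and that the LineRecordWriter in this post copies with ":" as the separator, can be sketched in plain Java as follows. `LineLayoutSketch` and `formatLine` are hypothetical names, and plain strings stand in for Hadoop's Writable types, with null playing the role of a null or NullWritable key or value.

```java
// Plain-Java imitation of the "key<separator>value\n" layout; no Hadoop types are used.
final class LineLayoutSketch {

    // Returns the text that would be appended to the file for one record.
    static String formatLine(String key, String value, String separator) {
        boolean hasKey = key != null;
        boolean hasValue = value != null;
        if (!hasKey && !hasValue) {
            return ""; // nothing is written, not even a newline
        }
        StringBuilder line = new StringBuilder();
        if (hasKey) {
            line.append(key);
        }
        if (hasKey && hasValue) {
            line.append(separator); // separator only when both sides are present
        }
        if (hasValue) {
            line.append(value);
        }
        return line.append('\n').toString();
    }

    public static void main(String[] args) {
        System.out.print(formatLine("1", "675 Bags 1", "\t")); // TextOutputFormat-style default
        System.out.print(formatLine("1", "675 Bags 1", ":"));  // separator used in this post
    }
}
```

Writing the separator only when both key and value are present means a record with a missing side still yields a clean line without a dangling separator.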

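RecordWriter: much as an InputFormat reads individual records through a RecordReader, an OutputFormat class acts as a factory for RecordWriter objects, which write the individual records to the files as if the OutputFormat had written them directly.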

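2. As with InputFormat, some special cases, such as a reduce that must produce several output files, cannot be handled by the TextOutputFormat, SequenceFileOutputFormat, NullOutputFormat, and similar classes that Hadoop provides, so we need to define a custom output format. As with custom input formats, a custom output format can be built with the following steps: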

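(1) Define a class that extends OutputFormat; in most cases extending FileOutputFormat is enough;

(2) Implement its getRecordWriter method, which returns a RecordWriter;

(3) Define a class that extends RecordWriter and implement its write method (and close), which writes each <key,value> pair to the file.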
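The three steps can be sketched without Hadoop on the classpath. In the sketch below, `SketchRecordWriter` and `SketchOutputFormat` are hypothetical stand-ins for RecordWriter and a FileOutputFormat subclass, and in-memory StringBuilder objects stand in for the files; it shows the shape of the pattern rather than the real Hadoop API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Stand-in for Hadoop's RecordWriter, reduced to the two methods a subclass must provide.
interface SketchRecordWriter {
    void write(String key, String value);
    void close();
}

// Steps (1) and (2): the "output format" is a factory that hands out a record writer.
final class SketchOutputFormat {
    // File name -> accumulated content; a stand-in for files on HDFS.
    private final Map<String, StringBuilder> files = new LinkedHashMap<>();

    SketchRecordWriter getRecordWriter() {
        return new RoutingWriter();
    }

    String contentOf(String fileName) {
        StringBuilder sb = files.get(fileName);
        return sb == null ? null : sb.toString();
    }

    // Step (3): the writer decides, per record, which file the pair goes to.
    private final class RoutingWriter implements SketchRecordWriter {
        private boolean closed = false;

        @Override
        public void write(String key, String value) {
            if (closed) {
                throw new IllegalStateException("writer already closed");
            }
            files.computeIfAbsent(key + ".txt", name -> new StringBuilder())
                 .append(key).append(':').append(value).append('\n');
        }

        @Override
        public void close() {
            closed = true;
        }
    }

    public static void main(String[] args) {
        SketchOutputFormat format = new SketchOutputFormat();
        SketchRecordWriter writer = format.getRecordWriter();
        writer.write("1", "675 Bags 1");
        writer.write("0", "501 Organic food 1");
        writer.close();
        System.out.print(format.contentOf("1.txt"));
        System.out.print(format.contentOf("0.txt"));
    }
}
```

Creating each per-file sink lazily on first use mirrors how MultiRecordWriter in this post opens a LineRecordWriter only when a new file name first appears.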