[Cloud Architect] 5. Cost and Monitoring

Cloud Cost Pricing

Key Points

An accurate cost estimate that meets or exceeds your organization’s budgetary goals requires you to ask important questions, interpret data, and implement AWS best practices.

Paid AWS Cloud Services include:

- Running Compute Resources

- Storage

- Provisioned Databases

- Data Transfer

Remember, you only pay for services you use, and once you stop using them, AWS stops charging you immediately and doesn’t levy any termination fees.

AWS does not charge for:

- AWS Elastic Beanstalk - Rapid application deployment

- AWS CloudFormation - AWS-branded Infrastructure as Code service

- Auto-Scaling - Scaling EC2 instances up/down or in/out based on your application requirements

- AWS IAM - User and access management

There is no cost for uploading data into the AWS cloud, although you will pay for storage and data transfer back out. Because of the massive scale of the AWS technology platform, there is no limit to how much data you can upload.

AWS Costs Include Maintenance

Good Cost Hygiene Practices

- Establish a naming convention for servers and databases

- Use tags to track costs by:

  - Group

  - Lifecycle

  - Person

  - Application

- Create IT governance rules

- Set billing alarms

Tips for Reducing Costs

- Use AWS CloudFront to cache data close to end users

- Avoid inter-region data transfer costs

- Peering via AWS Transit Gateway for VPCs reduces costs

Tools

We will use the AWS Pricing Calculator to estimate the monthly cost

Learn More About Cloud Costs and Pricing

The links below take you to cloud provider documentation on pricing and cost strategies. You will also learn more information about how VPC peering and the AWS CloudFront CDN work. Please follow the links to gain a greater understanding of these technologies when you can.

- AWS Cloud Services Pricing

- Public Cloud Pricing, Explained

- Daily AWS Billing Alert

- VPC Peering

- CloudFront Content Delivery Network

Instance Pricing

Key Points

AWS EC2 instance pricing is straightforward, but it can quickly become complex when you take up the task of optimizing your environment to achieve the ideal cost/performance balance.

- Explore OS licensing pricing and options

- Limit the users and roles that can launch production instances

- Choose the best instance for your workload

- Save by moving to new generation instances when available

Instance cost by:

- Instance size

- Operating system

- Region

- Memory / RAM

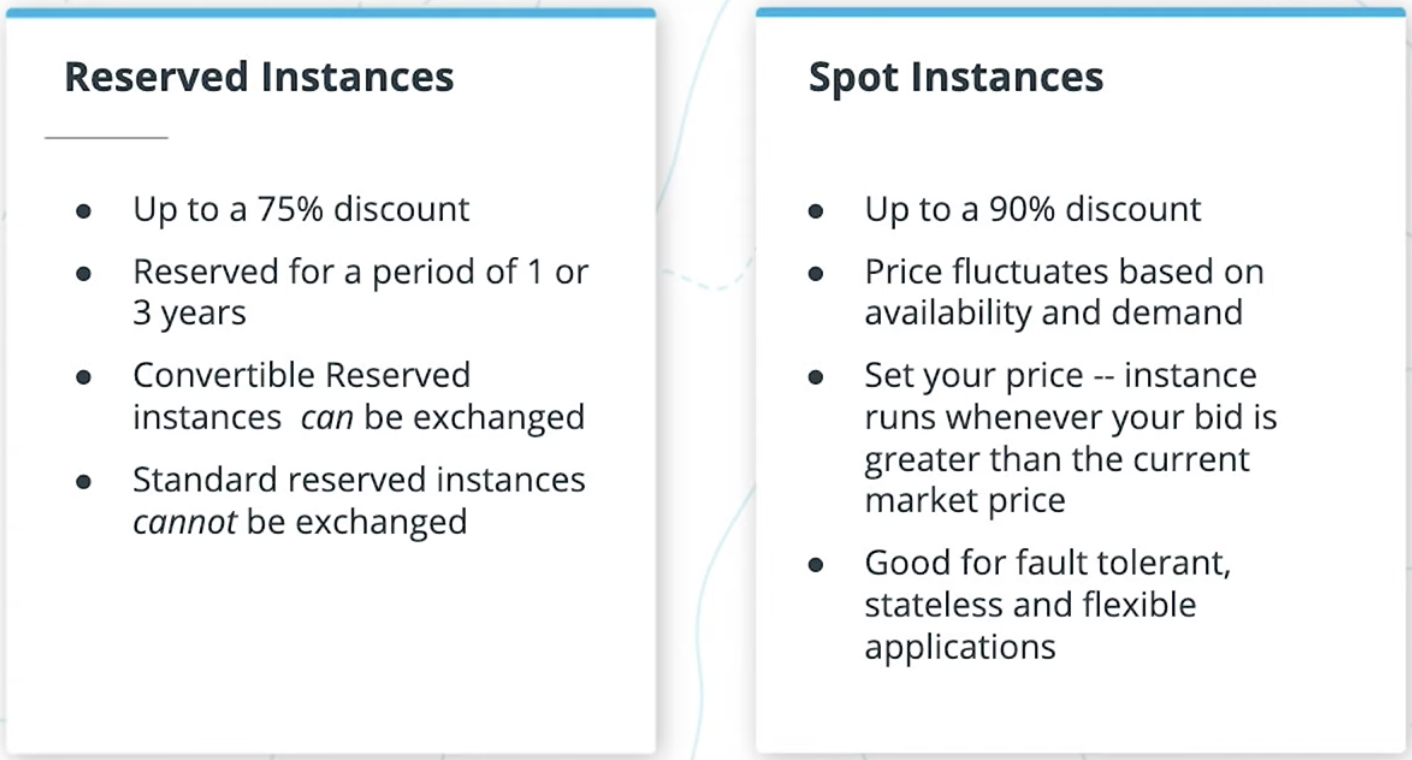

Purchasing Options

When optimizing your compute usage to reduce your monthly spend, one of the first places to look is your EC2 instances, and one of the best tools available there is Reserved Instances.

| Option | How it Works |
|---|---|
| Capacity Reservations | Reserve capacity for your EC2 instances in a specific Availability Zone for any duration. |
| Dedicated Hosts | Pay for a physical host that is fully dedicated to running your instances, and bring your existing per-socket, per-core, or per-VM software licenses to reduce costs. |
| Dedicated Instances | Pay, by the hour, for instances that run on single-tenant hardware. |
| On-Demand Instances | An AWS service or technology that can be acquired at any time for a predetermined standard cost. |
| Reserved Instances | An AWS service or technology that can be reserved for a period of time at a discount in exchange for a payment commitment. |
| Savings Plans | Reduce your Amazon EC2 costs by making a commitment to a consistent amount of usage, in USD per hour, for a term of 1 or 3 years. |
| Scheduled Instances | Purchase instances that are always available on the specified recurring schedule, for a one-year term. |
| Spot Instances | An EC2 instance that can be acquired by bidding for a low price in exchange for the understanding that AWS can reclaim it at any time. |
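To illustrate why commitment-based options such as Reserved Instances suit steady workloads, the following minimal sketch compares an On-Demand instance, billed only for the hours it runs, with a reservation that is billed for every hour of the month. The hourly rates and function names are hypothetical placeholders chosen for illustration, not AWS prices, and 730 hours is a common approximation for one month.

```python
HOURS_PER_MONTH = 730  # approximation: 8,760 hours per year / 12 months


def on_demand_monthly(rate_per_hour: float, hours_used: float) -> float:
    """On-Demand is billed only for the hours the instance actually runs."""
    return rate_per_hour * hours_used


def reserved_monthly(effective_rate_per_hour: float) -> float:
    """A reservation is paid for every hour of the month, used or not."""
    return effective_rate_per_hour * HOURS_PER_MONTH


def break_even_hours(on_demand_rate: float, reserved_rate: float) -> float:
    """Monthly running hours above which the reservation becomes cheaper."""
    return reserved_rate * HOURS_PER_MONTH / on_demand_rate


# Hypothetical rates, for illustration only.
ON_DEMAND_RATE = 0.10
RESERVED_RATE = 0.06
```

With these placeholder rates the reservation only wins if the instance runs for more than roughly 60% of the month, which is why monitoring real usage before committing matters.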

Storage Pricing

Key Points

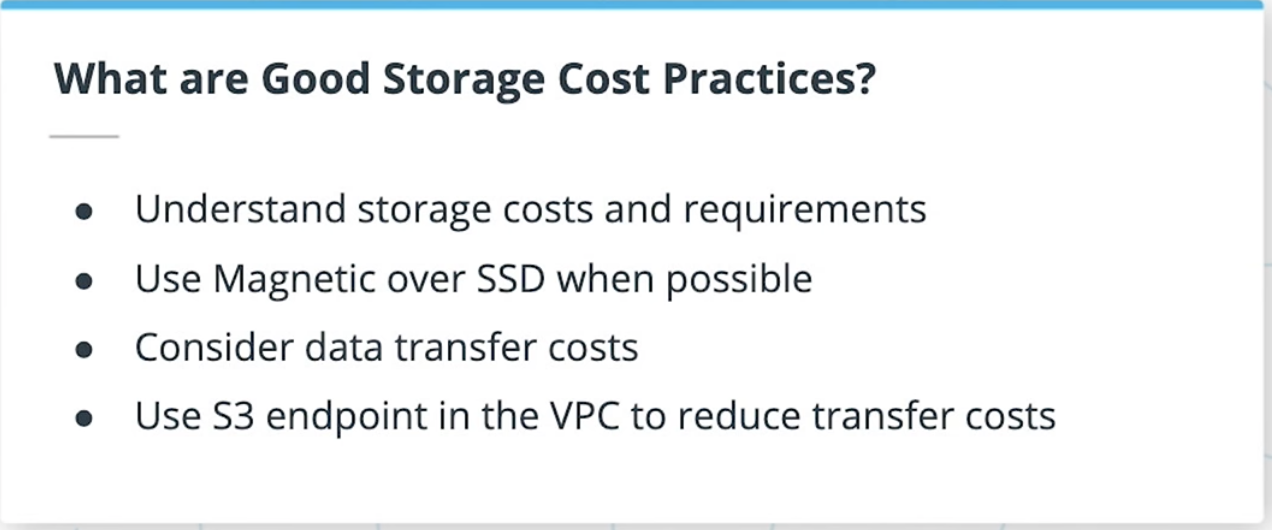

- Storage costs vary considerably depending on usage and requirements.

- Applications require different types of optimized storage depending on their primary function.

Selecting the Right Storage for Your Application.

Selecting the right storage is the key to both satisfied customers and high-performing databases. In general you should select storage based on the following criteria:

SSD Solid State Drives

- Use flash memory to deliver superior performance and durability without moving parts

- More durable, run cooler and use less energy

- Best for I/O-intensive applications, database services, and boot volumes

HDD Hard Disk Drives

- Proven technology

- Typically less expensive than solid state drives for the same amount of storage

- Available with more storage space than SSDs

- Best for throughput intensive workloads like big data analysis and log processing

S3 Object Storage

- Objects stored in S3 are difficult to maintain if you did not set up a good policy for them

- Most likely you will not delete any objects stored in S3, because you are not sure whether they are still needed

- It is good practice to use tags and lifecycle policies from the very beginning

Additional Reading on Storage Pricing

You can learn more about file and object storage in the cloud and how to optimize your storage solution for cost and functionality by following the links provided below.

- Buy a Storage Gateway on Amazon.com

- Save Money With AWS VPC Endpoints

- Reduce Your AWS Bill

- Real Savings Through Proper Cloud Setup

- AWS PrivateLink with VPC Endpoints

The following table shows the options you have if you decide to stay in private subnets.

| | Gateway VPC Endpoint | Interface VPC Endpoint | NAT Gateway |
|---|---|---|---|
| Supported AWS services | S3, DynamoDB | some | all |
| Price per hour | free | $0.01 | $0.045 |
| Price per GB | free | $0.01 | $0.045 |
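The prices in the table can be turned into a rough monthly comparison. The sketch below assumes a single endpoint or gateway running for a 730-hour month and uses only the per-hour and per-GB figures listed above; actual prices vary by region and change over time, and the option names are labels invented for this example.

```python
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month

# (price per hour, price per GB) taken from the comparison table
PRICES = {
    "gateway_endpoint": (0.0, 0.0),
    "interface_endpoint": (0.01, 0.01),
    "nat_gateway": (0.045, 0.045),
}


def monthly_cost(option: str, gb_processed: float) -> float:
    """Fixed hourly charge for the month plus the per-GB processing charge."""
    per_hour, per_gb = PRICES[option]
    return per_hour * HOURS_PER_MONTH + per_gb * gb_processed


def cheapest(gb_processed: float, options: list[str]) -> str:
    """Return the lowest-cost option among those that support the service."""
    return min(options, key=lambda name: monthly_cost(name, gb_processed))
```

For example, `cheapest(1000, ["interface_endpoint", "nat_gateway"])` picks the interface endpoint, while traffic to S3 or DynamoDB can use the free gateway endpoint instead.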

Ways to save:

- VPC endpoints over NAT gateways

- VPC endpoint with PrivateLink

- Kicking Lambda Functions Out of the VPC

- Convertible Reserved EC2 Instances

- EC2 Spot Instances

- DynamoDB on-demand

- S3 Intelligent-Tiering

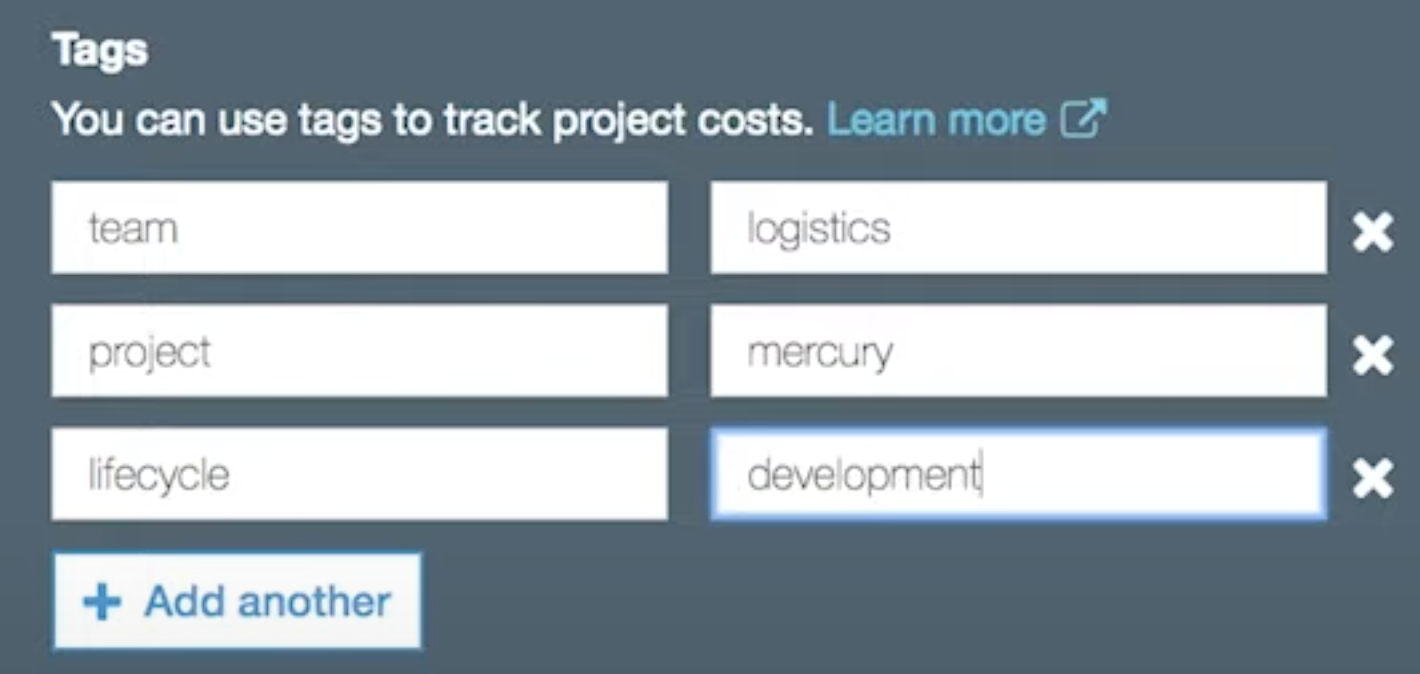

Tagging To Track Costs

Best Practices for Tagging

- Gather input from a variety of cloud stakeholders to determine the best tagging strategy for your organization

- Define your organization’s tags early in the cloud adoption process, and put systems in place to ensure they are being used consistently

- AWS tags are case sensitive, so you must standardize how your tags are defined to avoid confusion and orphaned data

- AWS allows you to tag nearly every service that generates costs, so tag everything you can to maintain accurate billing and cost estimates and reports
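Because tag keys are case sensitive, a small consistency check can catch problems before they distort cost reports. The following is a minimal sketch: the required keys mirror the tracking dimensions listed earlier in these notes (Group, Lifecycle, Person, Application), and both the key set and the `check_tags` helper are assumptions made for illustration, not an AWS API.

```python
# Hypothetical organization-wide standard for tag keys.
REQUIRED_TAGS = {"Group", "Lifecycle", "Person", "Application"}


def check_tags(tags: dict[str, str]) -> dict[str, list[str]]:
    """Report required keys that are missing and keys that differ only by case."""
    missing = sorted(REQUIRED_TAGS - set(tags))
    canonical = {key.lower() for key in REQUIRED_TAGS}
    miscased = sorted(
        key for key in tags
        if key not in REQUIRED_TAGS and key.lower() in canonical
    )
    return {"missing": missing, "miscased": miscased}


report = check_tags(
    {"Group": "data", "lifecycle": "dev", "Person": "alice", "Application": "etl"}
)
```

Here `lifecycle` is reported as miscased and `Lifecycle` as missing, which is exactly the kind of split that leads to orphaned cost data.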

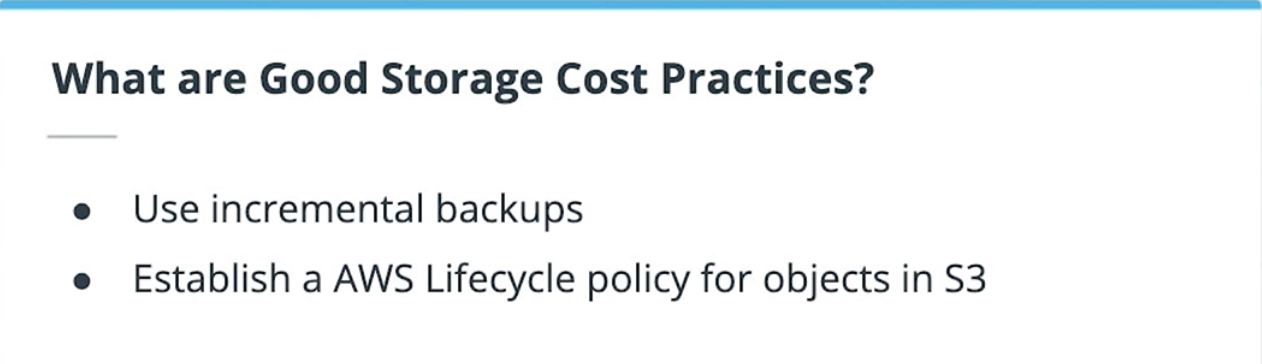

S3 Lifecycle Policy Configuration

S3 Object Lifecycle Policies simplify the task of object lifecycle management.

- A lifecycle configuration is an XML file that defines the actions you want AWS to perform on the object in S3 storage during its lifecycle in AWS. The lifecycle policy is configured in the S3 management console.

- Object lifecycle policies define both transitions and expirations

- Object transitions define when an object is moved to another storage class; for example, moving an object from S3 Standard to S3 Standard Infrequently Accessed after 60 days

- Object expirations define when an object needs to expire out of the S3 bucket, and AWS deletes the file when the expiration conditions have been met

- Once an object has transitioned out of S3 Standard, it cannot go back. The object would have to be retrieved and re-uploaded to S3 Standard.

- Lifecycle policies automate transitions and expirations to reduce storage costs by moving objects to less costly tiers, archiving them for long term storage, and deleting them to meet governance requirements
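A lifecycle rule with one transition and one expiration can be sketched as a plain Python dictionary. The layout below follows the JSON form of a lifecycle configuration as the author understands it from the AWS SDK for Python (the `LifecycleConfiguration` argument of `put_bucket_lifecycle_configuration`); the `logs/` prefix, the 365-day expiration, and the two helper functions are assumptions for illustration. The 60-day transition matches the example in the list above.

```python
def build_lifecycle_rule(prefix: str, transition_days: int, expiration_days: int) -> dict:
    """Build one rule: move to Standard-IA, then expire (delete) the object."""
    if expiration_days <= transition_days:
        raise ValueError("expiration must come after the transition")
    return {
        "ID": f"archive-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": transition_days, "StorageClass": "STANDARD_IA"}],
        "Expiration": {"Days": expiration_days},
    }


def storage_state(rule: dict, age_days: int) -> str:
    """Hypothetical helper: where an object of this age sits under the rule."""
    if age_days >= rule["Expiration"]["Days"]:
        return "EXPIRED"
    for transition in rule["Transitions"]:
        if age_days >= transition["Days"]:
            return transition["StorageClass"]
    return "STANDARD"


rule = build_lifecycle_rule("logs/", transition_days=60, expiration_days=365)
lifecycle_configuration = {"Rules": [rule]}
```

Keeping the rule in code makes it easy to review and to apply the same policy to every new bucket from the very beginning.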

Additional Reading on Tagging and Lifecycle Policies

An effective tagging strategy helps you gain visibility into your costs and resource allocation and provide the insights necessary to track your cloud technology goals and direct your company’s cloud initiatives. Please follow the links below to dive deeper into the topic of resource tagging.

- Using Cost Allocation Tags

- Viewing CloudTrail Events

- AWS Tagging Strategies

- AWS Tagging Best Practices

- Create Lifecycle Policies

- Object Lifecycle Management

- Setting Lifecycle Configuration on a Bucket

- Transitioning Objects Using S3 Lifecycle

DB and Data Transfer Costs

Key Points About Relational Databases

Using AWS RDS provides customers with the advantage of AWS managed services for relational databases. You don’t have to worry about managing the hardware supporting the database, patching, or updating. This frees you up to innovate in other areas of your stack.

Reducing Costs With RDS Reserved Instances

- Based on instance class, database engine and redundancy

- Can bring your own license for Oracle and SQL

- Pay for 1 or 3 year term

- Choose your region thoughtfully -- costs and discounts vary by region

Long Term Commitment!

- You can NOT change instance type after purchase

- Must do monitoring and testing before RDS reserved purchase

AWS DynamoDB

- Pricing is primarily based on Write Capacity Units (WCUs) and Read Capacity Units (RCUs).

- As with RDS, you also pay for autoscaling, indexing, data transfer, and caching

- DynamoDB has some additional features and costs that are not associated with RDS databases:

  - DAX - DynamoDB Accelerator, which uses in-memory caching to reduce latency 10x

  - Global Tables - a multi-region, multi-master database providing fast local reads/writes for large global applications by replicating DynamoDB tables across your selected regions

  - DynamoDB Streams - captures and writes streaming records in near real-time for consumption by applications that consume and process streams
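Since DynamoDB pricing is driven by read and write capacity units, it helps to estimate them from item size and request rate. The sketch below assumes standard (non-transactional) requests under provisioned capacity: one RCU covers one strongly consistent read per second of an item up to 4 KB (eventually consistent reads need half as much), and one WCU covers one write per second of an item up to 1 KB. The function names are hypothetical.

```python
import math


def read_capacity_units(item_kb: float, reads_per_second: int,
                        strongly_consistent: bool = True) -> int:
    """RCUs needed: item size is rounded up to the next 4 KB block."""
    units_per_read = math.ceil(item_kb / 4)
    total = units_per_read * reads_per_second
    # Eventually consistent reads consume half the capacity.
    return total if strongly_consistent else math.ceil(total / 2)


def write_capacity_units(item_kb: float, writes_per_second: int) -> int:
    """WCUs needed: item size is rounded up to the next 1 KB block."""
    return math.ceil(item_kb) * writes_per_second
```

For instance, 10 strongly consistent reads per second of a 6 KB item need 20 RCUs, which drops to 10 RCUs if eventual consistency is acceptable.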

Best Practices for Managing Database Costs

- Keep only a subset of the data in RDS

- Move CLOBs and BLOBs to S3

- Move historical data to S3.

- Move high velocity frequently written data to DynamoDB

- Do incremental backups

- Communicate within the same Availability Zone

- Purchase reserved instances when possible

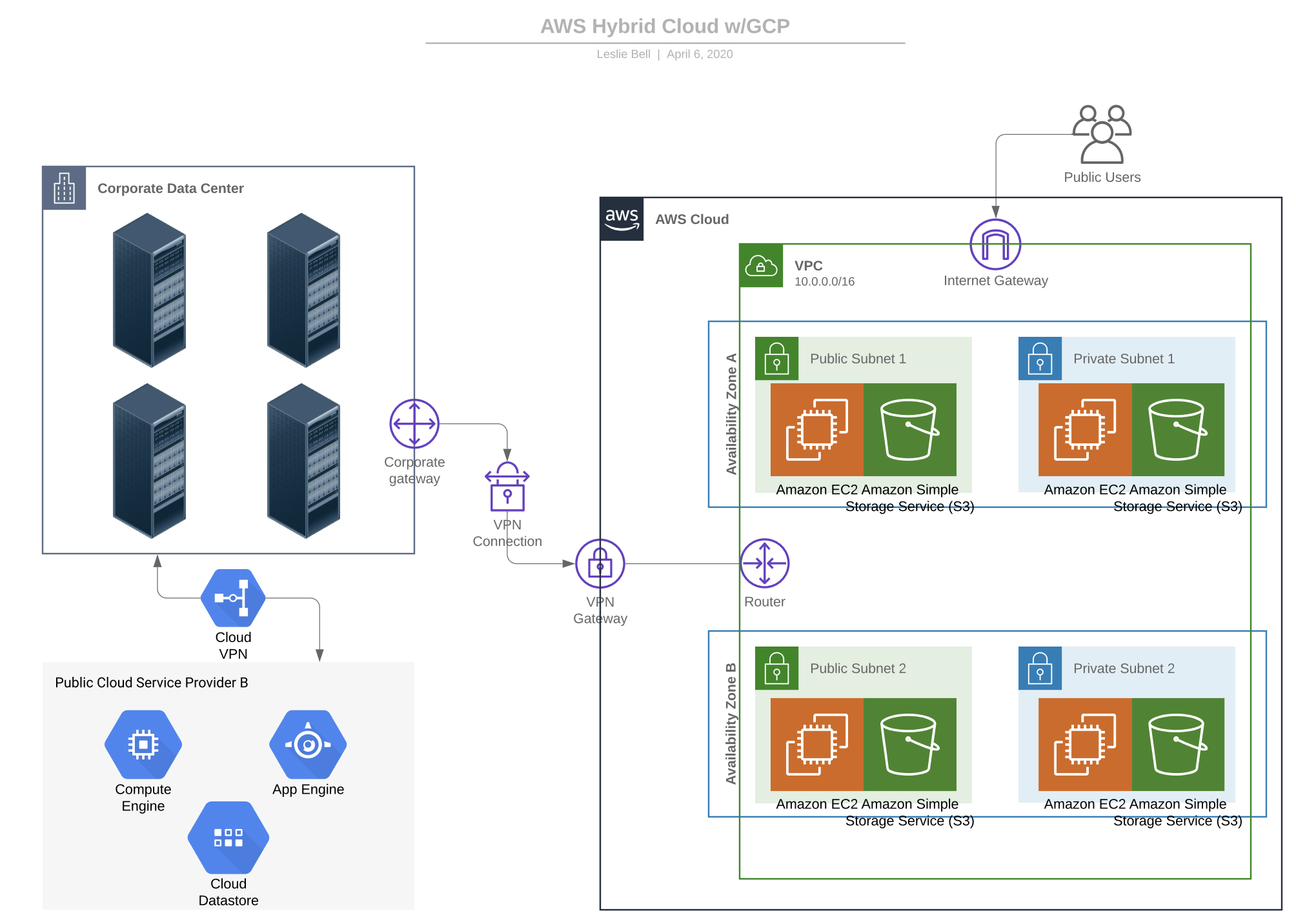

Hybrid Options

New Terms

| Term | Definition |
|---|---|
| AWS Outposts | A hybrid cloud service that includes a physical AWS compute and storage appliance that resides on-premises in the customer data center |
| Hybrid Cloud | A mix of public cloud, private cloud, on-premises data centers, and edge locations |

AWS Hybrid Cloud Services (AWS Outposts)

- Physical compute and storage appliance that resides on-premises in the customer's data center

- Provides secure transfer and retrieval

- Encrypts all data at rest and before moving it to the AWS public cloud

Key Points About Hybrid Architecture

- Helpful when data needs to be in a secure data center due to regulatory and privacy requirements

- Can be costly without proper planning (only data transfer in is free)

- Some data will require encryption before upload to the public cloud

Optimizing for Cost

Key Points

- Establish baselines, monitor peak periods and autoscale based on peaks

- Use spot instances strategically to reduce costs

- Private IPs allow you to transfer data for free within the same Availability Zone

- Use consolidated billing to save money if your organization has multiple AWS accounts

Additional Reading

Optimizing costs in the cloud is a skill that takes time, testing and a deep understanding of how costs are calculated. Please follow the links below for additional information to help you hone your cloud cost optimization expertise.

When to Start Big or Employ Elasticity

It makes sense to start with larger instances when you have already captured the metrics required to project your application’s future usage patterns and your application has consistent processing patterns. It also makes sense to start big when you are completely unsure of your application’s usage patterns, but it is worth it to you to pay a little more to ensure you don’t experience an application outage due to exhausted resources. This is often the case when the cloud technology team doesn’t have an experienced cloud architect.

It makes sense to employ elasticity when your application has bursts of activity at predictable intervals. For example, an application that is usually in use from 8-5 will scale up at 7 am and begin to scale down around 6 pm. It will run at reduced compute capacity until the next expected increase in activity and scale up.

For applications with completely unpredictable usage patterns, it makes sense to size according to the average usage and employ autoscaling so that AWS can respond to an increase/decrease in activity according to the criteria you specify.
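The predictable 8-5 pattern described above can be expressed as a simple schedule. This is a minimal sketch of the idea only: the function name and the instance counts (6 at peak, 2 off-peak) are hypothetical, and a real deployment would use scheduled scaling actions in the Auto Scaling service instead of application code.

```python
def scheduled_capacity(hour: int, peak: int = 6, off_peak: int = 2) -> int:
    """Desired instance count: scale up at 7 am, scale back down at 6 pm."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return peak if 7 <= hour < 18 else off_peak


# Capacity for each hour of the day, e.g. to estimate instance-hours.
daily_plan = [scheduled_capacity(hour) for hour in range(24)]
instance_hours = sum(daily_plan)
```

Summing the plan gives the instance-hours per day, which can be compared with running at peak capacity around the clock to estimate the savings from elasticity.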

Key Points

It makes sense to “start big” and buy your compute in advance when you know what you need because you have:

- Predictable Traffic and Workloads

- A sturdy CI/CD pipeline

- Strong IT and Dev support

It makes sense to employ elasticity when you can easily predict your compute needs, for example if your app has:

- A history of periodic surges

- The potential for extreme usage

AWS Services to Help Manage Your Cloud Budget

- AWS Budgets - allows you to configure custom budgets and alerts you when costs exceed the pre-defined or projected cost threshold or are unexpectedly lower than your projected costs.

- AWS Trusted Advisor - evaluates your AWS environment and provides recommendations for steps you can take to optimize your AWS environment for cost, performance, security, and redundancy.

- Billing Alarms - notifications that contact you when your billing threshold is approaching or has been reached

- AWS CodeGuru - can evaluate code as it is being written to find the most inefficient, costly, and unproductive lines of code

Autoscaling

Key Points

- Designed to maintain stable performance and control costs

- Scaling can add/remove system resources (vertical scaling) or add/remove nodes or workers (horizontal scaling)

- Amazon EC2 Auto Scaling uses health notifications and instance status checks to determine the health status of an instance. If an instance is marked unhealthy, it is scheduled for replacement.

- Scaling for all AWS resources is done in the Auto Scaling interface

- Scalable AWS resources include:

  - EC2

  - EC2 Spot Fleets

  - ECS

  - DynamoDB

  - Amazon Aurora

What is the difference between Scaling Out and Scaling up? Do you believe they accomplish the same objective?

Scaling out is the process of adding more resources to spread out a workload. An example would be adding servers to a load balancer to share a workload. Scaling up is the process of making a resource larger and more powerful in response to an increased workload. An example would be adding RAM or CPUs to a server so it can manage an increase in demand.

Scaling out is also referred to as horizontal scaling

Scaling up is also referred to as vertical scaling

Auto Scaling Considerations

- Launch configurations are templates used by Auto Scaling groups to determine what types of EC2 instances to launch

- Launch configurations can be assigned to multiple Auto Scaling groups, but each Auto Scaling group can only have one launch configuration

- Auto Scaling groups maintain enough instances to meet capacity by performing periodic health checks and terminating and replacing instances that are not healthy.

- Auto Scaling groups use scaling policies to increase and decrease the number of running instances based on conditions you define, such as memory or CPU limits.
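The way a scaling policy reacts to a condition such as CPU usage can be sketched in a few lines. This is a simplified, step-style illustration under assumed thresholds (scale out above 70% CPU, scale in below 30%) and assumed group limits; it is not how EC2 Auto Scaling is implemented, and every name here is hypothetical.

```python
def desired_capacity(current: int, cpu_percent: float, *,
                     minimum: int = 1, maximum: int = 10,
                     scale_out_above: float = 70.0,
                     scale_in_below: float = 30.0) -> int:
    """Add or remove one instance based on CPU, staying within group limits."""
    if cpu_percent > scale_out_above:
        target = current + 1
    elif cpu_percent < scale_in_below:
        target = current - 1
    else:
        target = current
    # The group never shrinks below its minimum or grows past its maximum.
    return max(minimum, min(maximum, target))
```

The minimum and maximum bounds are what keep costs predictable: a runaway metric can never launch more than `maximum` instances.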

Additional Reading on Autoscaling

To learn more about Auto Scaling and how it can contribute to application stability by automating the AWS response to changes in demand, please follow the links below.

- AWS Auto Scaling

- Top 11 Hard-Won Lessons We’ve Learned about AWS Autoscaling

- Best Practices for AWS Auto Scaling Plans

- What is Amazon EC2 Auto Scaling?

Avoid Cost Surprises With Monitoring and Optimization

Key Points

Monitoring is one of the most important features in AWS, and without it costs can run out of control because resources are unlimited. You need a response and support plan. You need appropriate alarms. You need to decide when you are done and when you are in an experimental phase. You need to decide how you are going to share results because monitoring metrics aren’t for everyone to view, particularly if they are tracking individual user activity.

AWS Cost Optimization Tools and Services

- AWS Cost Explorer - Enables you to view and analyze your costs and usage in a graph or a report. Cost Explorer is very useful in forecasting costs and predicting where reserved instances may offer cost savings.

- AWS Cost Optimization Monitor - Analyzes your billing reports and pulls out metrics to make them searchable and displays them in a customizable dashboard for analysis

- AWS CodeGuru - Helps you proactively improve code quality and application performance with intelligent recommendations. It reviews your code and finds issues during code reviews before they reach production, including needlessly costly lines of code, race conditions, memory leaks, and runaway logging.

- AWS Solution Architects - Professional AWS certified engineers who are deployed by AWS to assist customers in solving the most challenging engineering problems. Costs for this assistance vary based on your support agreement and of course, the relative value of your account.

- AWS Account Credits - Credits are available for startups, non-profits, and academic institutions. It never hurts to ask for AWS credits no matter who you are.

Baseline Monitoring

Maintaining application availability and performance is dependent on a robust and well-defined monitoring policy. Establish your monitoring policy by:

- Defining your goals

- Documenting the resources that require monitoring

- Selecting monitoring tools and tasks

- Deciding who will respond to notifications, alerts, and alarms

Once you have created a monitoring policy, you must monitor your resources to establish your monitoring baseline. Your baseline is defined as normal resource or service performance in your environment. In order to determine your baseline metrics, you should measure how your environment performs under all anticipated conditions at all times of the day. This will give you an idea of what types of deviations to anticipate, and gives you an opportunity to address them in your monitoring plans. Some typical monitoring metrics for EC2 instances include:

- CPU Utilization

- Memory Utilization

- Network Utilization

- Disk Performance and Read/Writes

- Disk Space
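Once baseline samples have been collected, deviations can be flagged by comparing new readings with the normal range. The sketch below is one simple approach, assuming the baseline is summarized by a mean and standard deviation and that anything more than three standard deviations away is worth a look; the tolerance and the sample CPU values are made up for illustration.

```python
from statistics import mean, stdev


def baseline(samples: list[float]) -> tuple[float, float]:
    """Summarize normal behavior as (average, standard deviation)."""
    return mean(samples), stdev(samples)


def deviations(samples: list[float], new_values: list[float],
               tolerance: float = 3.0) -> list[float]:
    """Return new readings further than `tolerance` deviations from the mean."""
    average, spread = baseline(samples)
    return [value for value in new_values
            if abs(value - average) > tolerance * spread]


# Hypothetical CPU utilization percentages gathered during normal operation.
cpu_baseline = [40, 42, 38, 41, 39]
unusual = deviations(cpu_baseline, [41, 44, 90])
```

In practice the baseline should be built from samples covering all anticipated conditions and times of day, as described above, so that normal daily peaks are not reported as anomalies.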

AWS CloudWatch Monitoring

Key Points

-

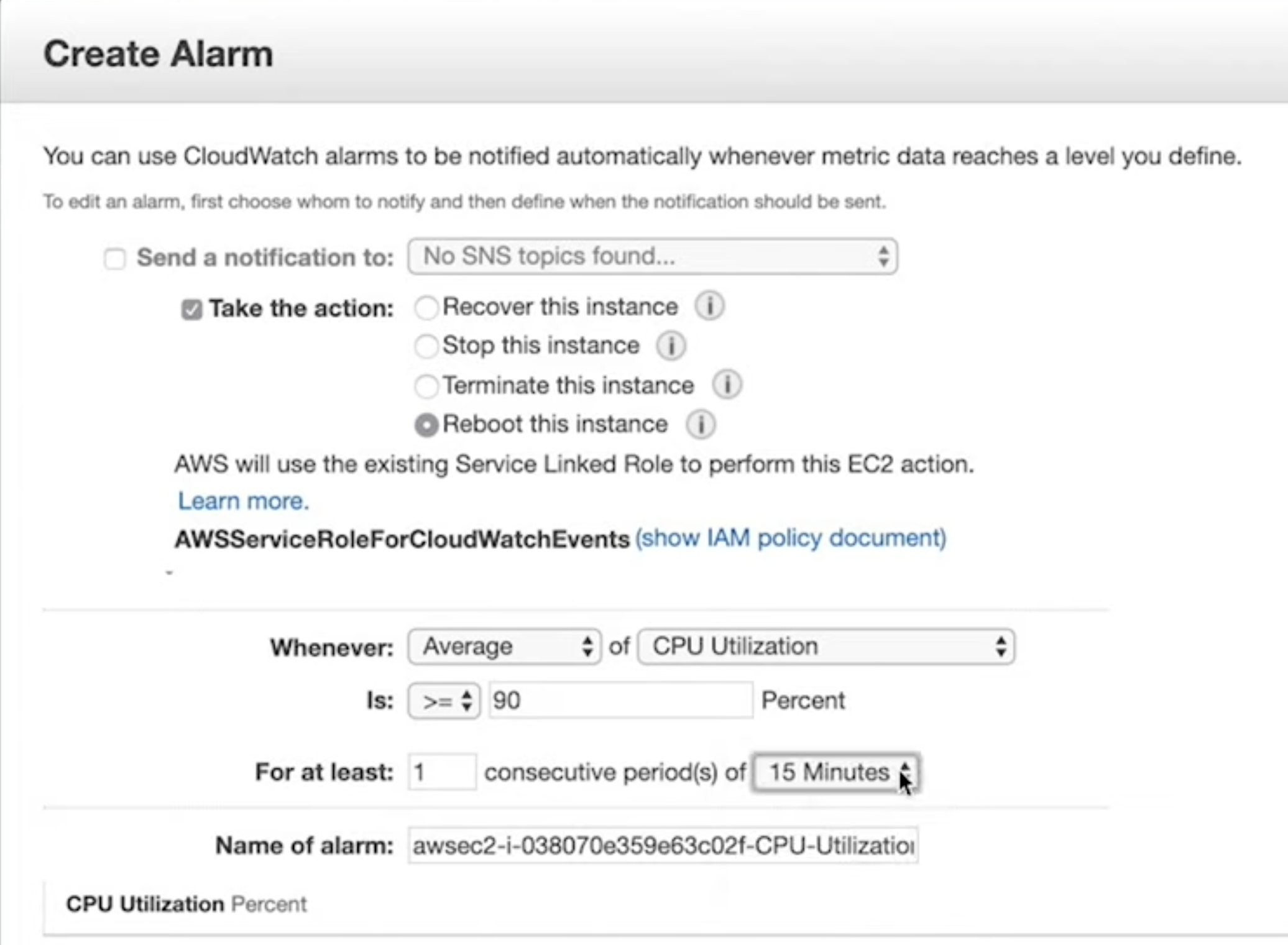

AWS CloudWatch is a service that monitors your AWS resources and application and reports performance metrics in real time

-

It can track metrics, alarm and notify based on usage, and make changes to the AWS environment based on changes it detects while monitoring

-

The CloudWatch management page has default dashboards configured that track useful metrics; users can configure additional custom dashboards tailored to the needs of their account

-

CloudWatch alarms can both notify when a threshold has been reached or breached, as well as take action based on the alarm; for example, it can restart an instance that is not responding or launch additional instances when a server CPU breaches its maximum threshold
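The threshold logic behind an alarm can be illustrated with a simplified model. The state names `OK`, `ALARM`, and `INSUFFICIENT_DATA` are the ones CloudWatch uses, but the evaluation rule below (every one of the most recent N datapoints must exceed the threshold) is a deliberately reduced assumption, and the function itself is hypothetical.

```python
def alarm_state(datapoints: list[float], threshold: float,
                evaluation_periods: int) -> str:
    """Evaluate the most recent datapoints against a static threshold."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    # Alarm only when every recent datapoint breaches the threshold,
    # so a single short spike does not trigger an action.
    return "ALARM" if all(value > threshold for value in recent) else "OK"
```

Requiring several consecutive breaches is a common way to avoid launching extra instances, and paying for them, because of a momentary spike.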

AWS CloudTrail

Key Points

- AWS CloudTrail is Amazon’s logging service that provides auditing, governance, and compliance visibility into API calls made in the AWS account.

- The API call and details are written to a log file and maintained for 90 days

- CloudTrail captures who or what made the call, what time it happened, the user or process that took the action, and the service or resource that was affected by the action

- CloudTrail logs keep a running account of API calls and save them in a secure JSON file called an event. The log can be filtered and searched by metadata like date/time, user, or AWS service, but it can’t be modified.

- CloudTrail logs can be configured to be sent to CloudWatch. Publishing CloudTrail logs to CloudWatch provides the ability to create alarms based on CloudTrail log data and look at CloudTrail log data through the CloudWatch dashboards.

  - An IAM permission that allows CloudTrail to send events to CloudWatch is required.

What would you do if you received a large bill from AWS for a mistake you made?

If you receive a large bill from AWS for a configuration mistake (for example, you opened a stream from a source that sends too much data to AWS, or mistakenly uploaded a very large data set multiple times), you should first find out where you made your mistake so you can correct it as soon as possible. After you are certain you have mitigated your error and no additional unwanted charges are accumulating, reach out to AWS and explain what happened. In many cases, especially during your yearlong free tier testing, they will be understanding and reverse the charges, but there are no guarantees.

Unfortunately, they are not going to refund charges when you are surprised and disappointed by how much your crypto mining operations cost, versus how much currency you accumulated, so procure your AWS resources wisely.

Always make sure you configure a billing alarm for your account. Billing alarms prevent shocking surprise bills.
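The idea behind a billing alarm, being told when spend is approaching or has reached a threshold, can be sketched with a simple projection. This example assumes spend continues at the same daily rate for the rest of a 30-day month; the linear projection, the status labels, and the function names are all assumptions for illustration and do not describe how AWS evaluates billing alarms.

```python
def projected_month_end(spend_to_date: float, day_of_month: int,
                        days_in_month: int = 30) -> float:
    """Extrapolate month-end spend from the average daily spend so far."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month is outside the month")
    return spend_to_date / day_of_month * days_in_month


def billing_status(spend_to_date: float, day_of_month: int,
                   threshold: float, days_in_month: int = 30) -> str:
    """Classify spend as BREACHED, APPROACHING, or OK against a threshold."""
    if spend_to_date >= threshold:
        return "BREACHED"
    projection = projected_month_end(spend_to_date, day_of_month, days_in_month)
    return "APPROACHING" if projection >= threshold else "OK"
```

Checking the projection as well as the current total gives earlier warning: spending 40 by day 10 against a threshold of 100 is already on course to exceed it.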

Additional Reading about Monitoring and Optimization

Taking the time to understand monitoring while you are early in your cloud architecture career will pay off in the long run. It's worth investing time in gaining a deep understanding of how cloud metrics relate to costs and performance, and in learning to translate monitoring insights into actions for your team and your company. Please follow the links below to read more about monitoring.

- Monitoring Amazon EC2

- Launch Autoscaling groups with On Demand, Reserved, and Spot Instances

- Automated and Manual EC2 Monitoring

- Monitor All Your Things (VIDEO)

- Monitoring Performance With CloudWatch Dashboards

Lesson Recap

Key Points

- Costs in the cloud have no equivalent in the data center because cloud services are billed like utilities - you only pay for what you use

- Costs vary between AWS Regions based on location, regulations, utilities, real estate costs, and the cost of doing business

- AWS can help architects by providing several calculation and cost optimization services to ensure customers are running cost and performance optimized workloads whenever possible

- AWS offers significant discounts when you commit to compute and when you need compute for fault-tolerant applications

Further Research on Cloud Costs and Monitoring

Managing the costs of your cloud services can be complex, and it requires your expertise and attention. Learning to manage cloud costs is a skill that will lead to successful budgetary goals and savings for your company. When you have time, please follow the links below to learn more about managing costs in the cloud.
