[SAA + SAP] 22. Kinesis & AWS MQ

SAA

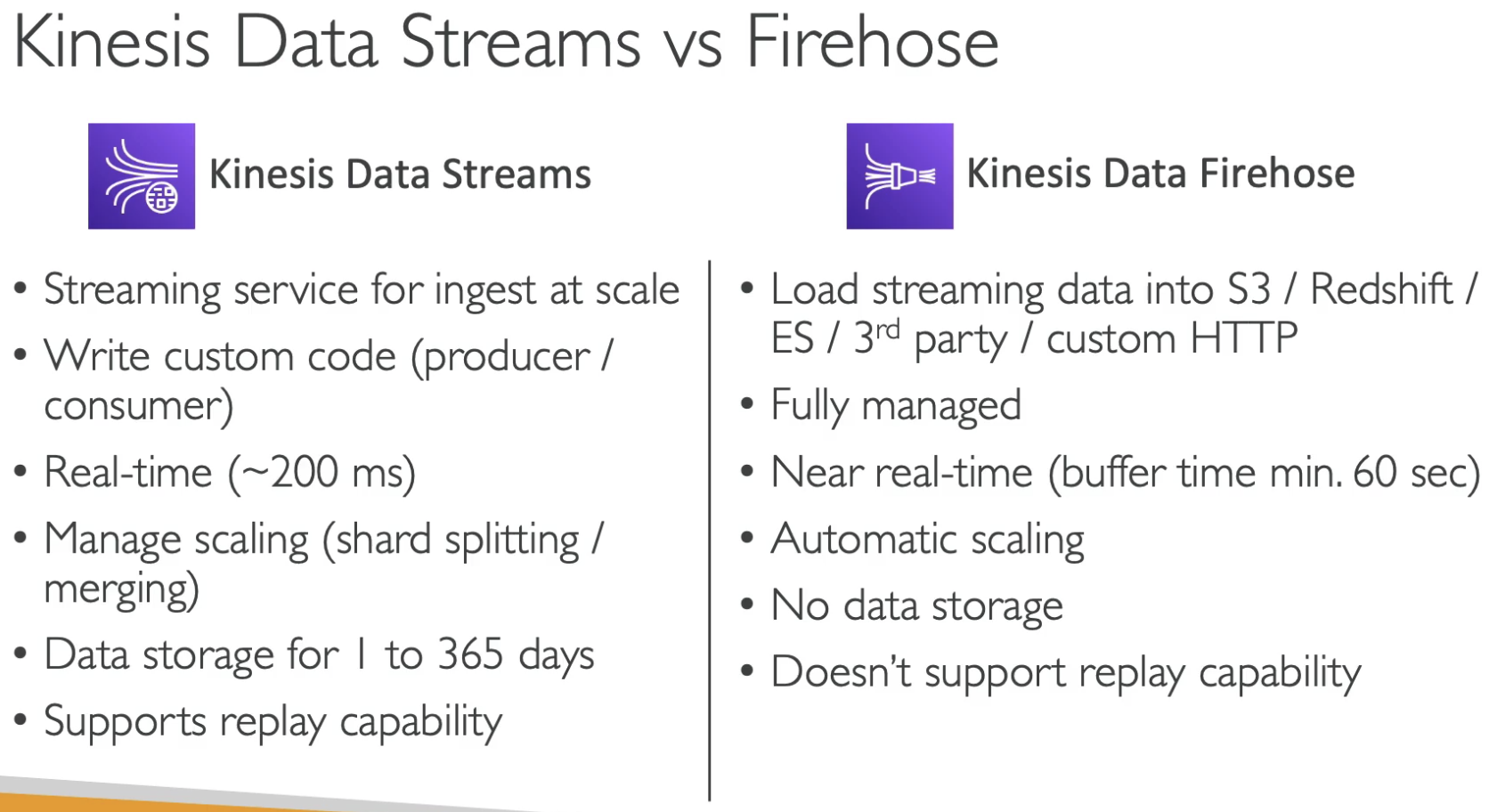

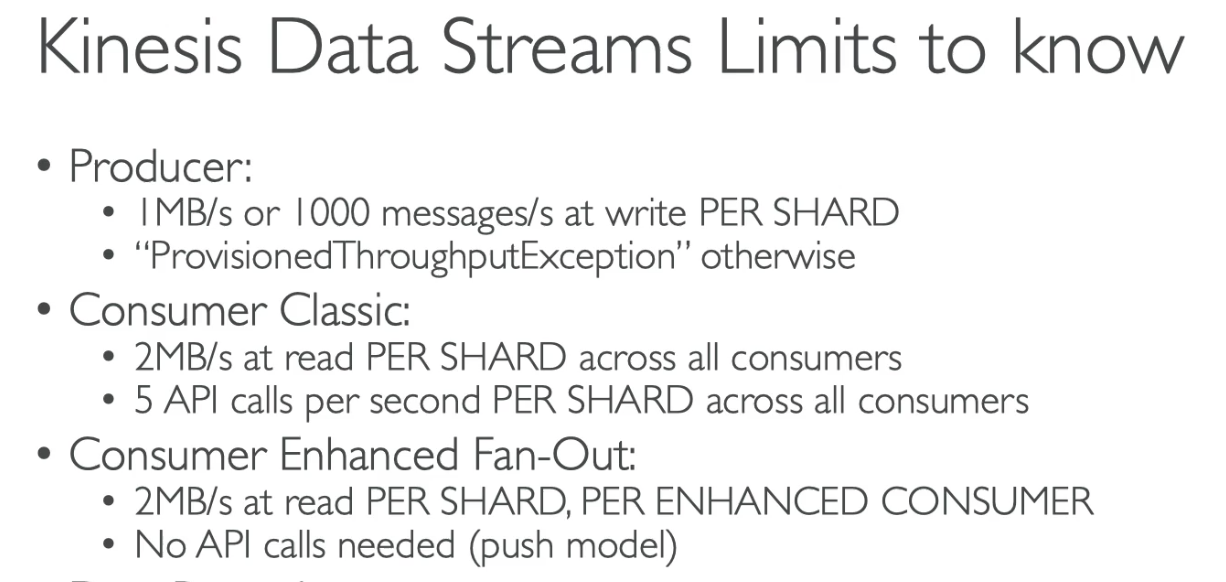

- Shared (classic) fan-out: 2 MB/sec per shard, shared across all consumers

- Enhanced fan-out: 2 MB/sec per shard per consumer

- Max retention 365 days

- Can replay data
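The shared vs enhanced numbers above can be worked through as simple arithmetic. This is only a sketch: the function name is made up for illustration, and the even split between consumers in shared mode is an assumption (in practice consumers just compete for the same 2 MB/sec).

```python
SHARD_READ_MB_PER_SEC = 2  # read throughput per shard, from the notes above


def read_throughput_per_consumer(consumers: int, enhanced: bool) -> float:
    """Return the per-shard read throughput (MB/s) each consumer can expect."""
    if consumers < 1:
        raise ValueError("need at least one consumer")
    if enhanced:
        # Enhanced fan-out: every consumer gets its own 2 MB/s per shard.
        return float(SHARD_READ_MB_PER_SEC)
    # Shared mode: all consumers split the same 2 MB/s per shard
    # (even split assumed here for illustration).
    return SHARD_READ_MB_PER_SEC / consumers


print(read_throughput_per_consumer(4, enhanced=False))  # 0.5
print(read_throughput_per_consumer(4, enhanced=True))   # 2.0
```

With 4 consumers on one shard, shared mode leaves roughly 0.5 MB/sec each, while enhanced fan-out keeps 2 MB/sec for every consumer.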

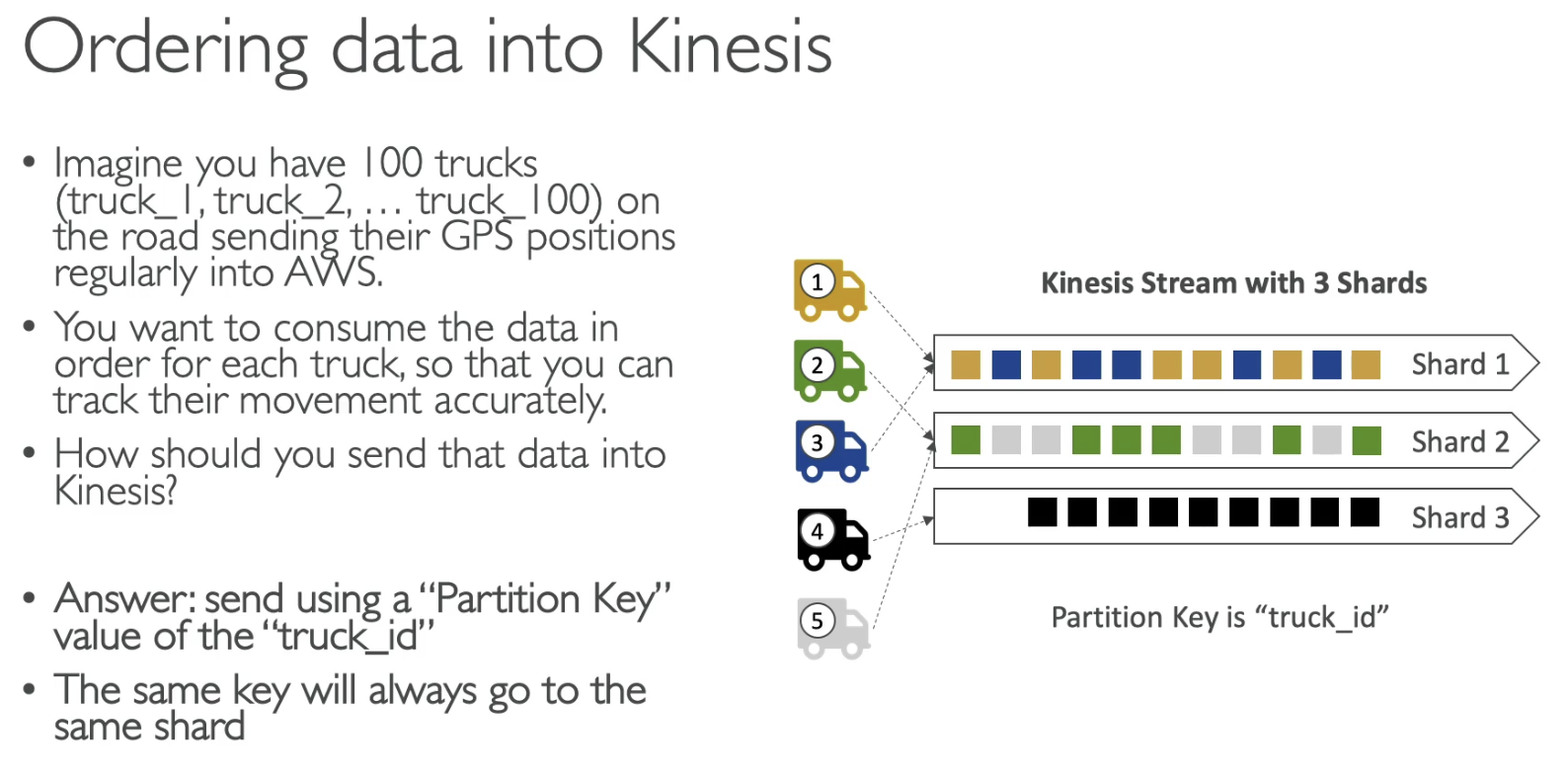

- Records with the same partition key go to the same shard
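A rough sketch of why the same key always lands on the same shard: Kinesis hashes the partition key with MD5 into a 128-bit integer, and each shard owns a range of that hash space. The function name and the assumption that the shards split the hash space evenly are mine, for illustration only.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Map a partition key to a shard index, assuming evenly split hash ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")
    range_size = HASH_SPACE // shard_count
    # min() guards the last shard when HASH_SPACE is not divisible by shard_count
    return min(hash_value // range_size, shard_count - 1)


# The mapping is deterministic, so a key never moves between shards.
print(shard_for_key("truck-42", 4) == shard_for_key("truck-42", 4))  # True
```

Because the hash is deterministic, ordering per key is preserved as long as the shard layout does not change.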

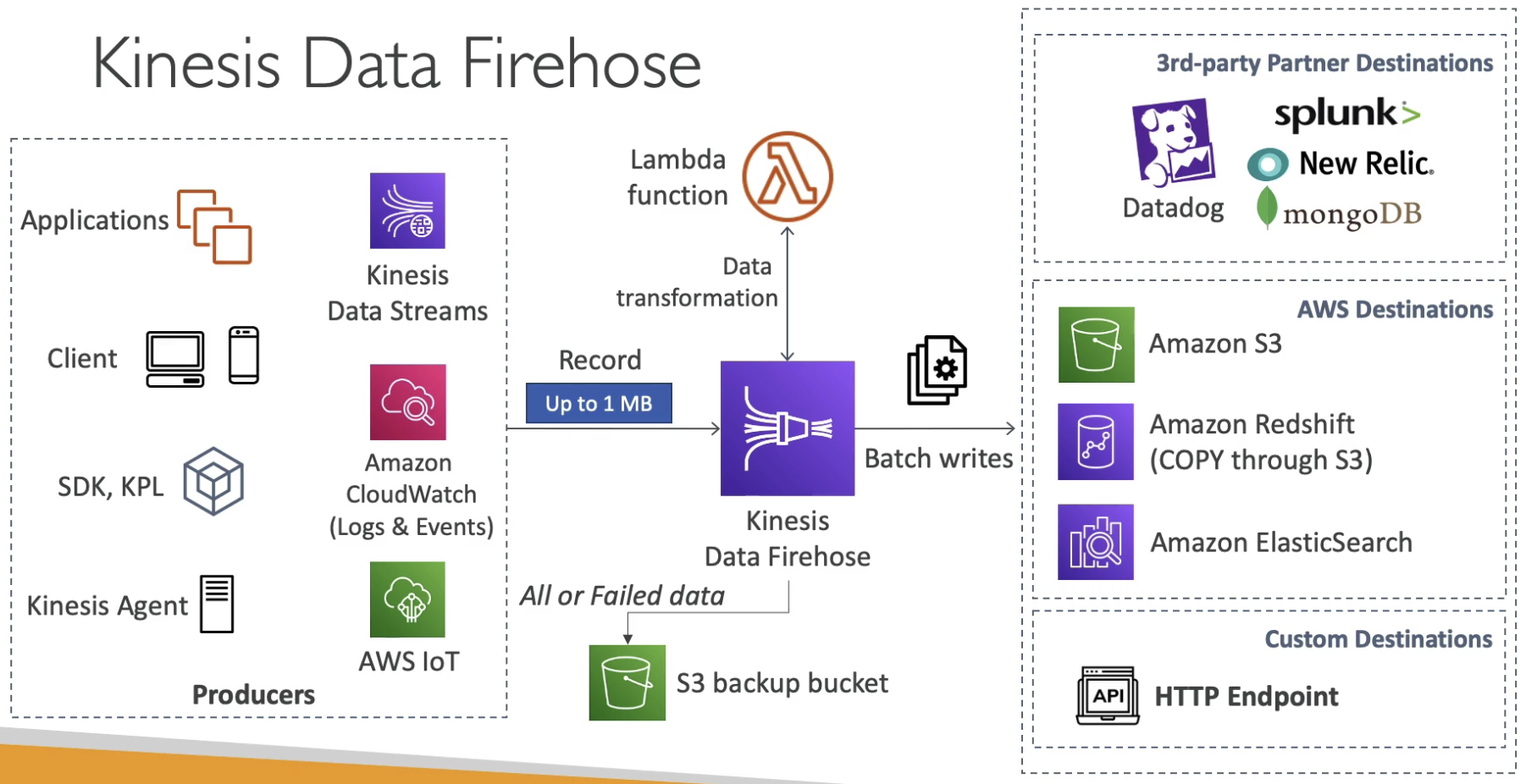

- Serverless

- Near real time

- Each record 1MB max

- S3 backup

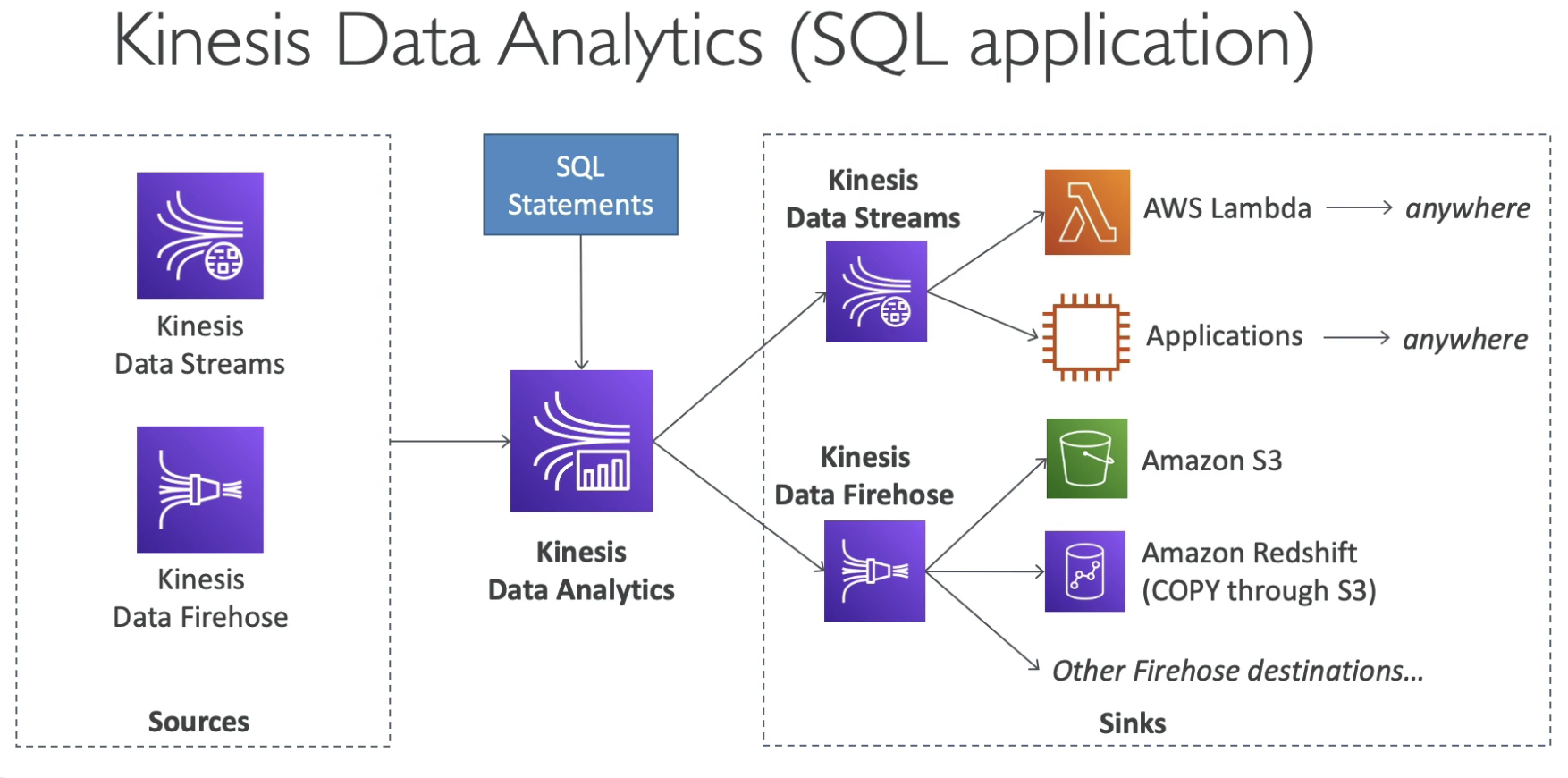

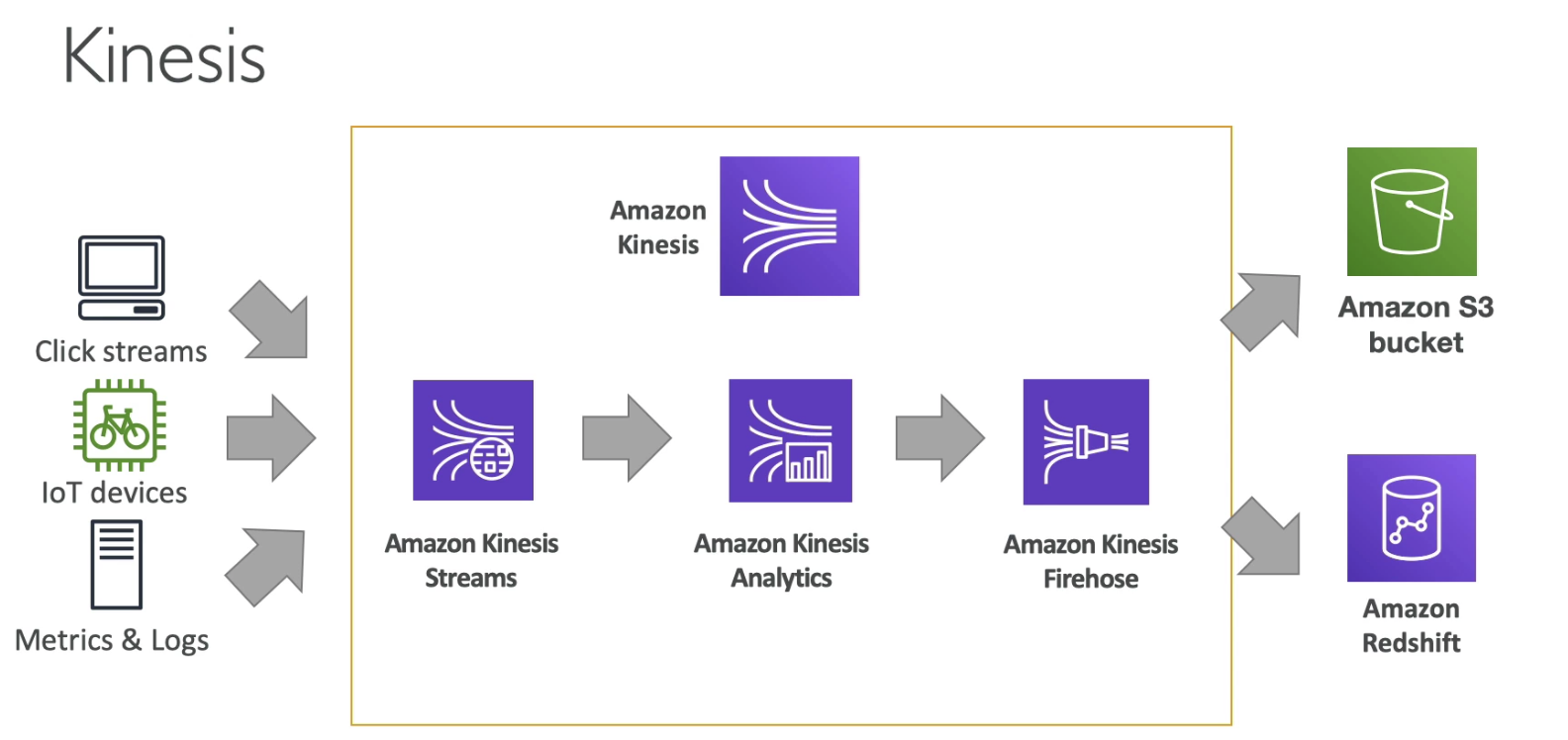

- Destinations: S3, Redshift, Elasticsearch (now OpenSearch)

- Data Streams can be replayed

- Data Streams is real time

- Firehose can do transformation using Lambda
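A minimal sketch of a Firehose transformation Lambda. Firehose passes base64-encoded records in `event["records"]` and expects each one back with the same `recordId`, a `result`, and base64-encoded `data`. The upper-casing step is just a placeholder transformation, not anything from the course.

```python
import base64


def lambda_handler(event, context):
    """Upper-case each record's payload and hand it back to Firehose."""
    output = []
    for record in event["records"]:
        payload = base64.b64decode(record["data"]).decode("utf-8")
        transformed = payload.upper()  # placeholder transformation (assumption)
        output.append({
            "recordId": record["recordId"],  # must echo the incoming id
            "result": "Ok",                  # or "Dropped" / "ProcessingFailed"
            "data": base64.b64encode(transformed.encode("utf-8")).decode("utf-8"),
        })
    return {"records": output}
```

Records returned with `ProcessingFailed` are treated as transformation failures by Firehose.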

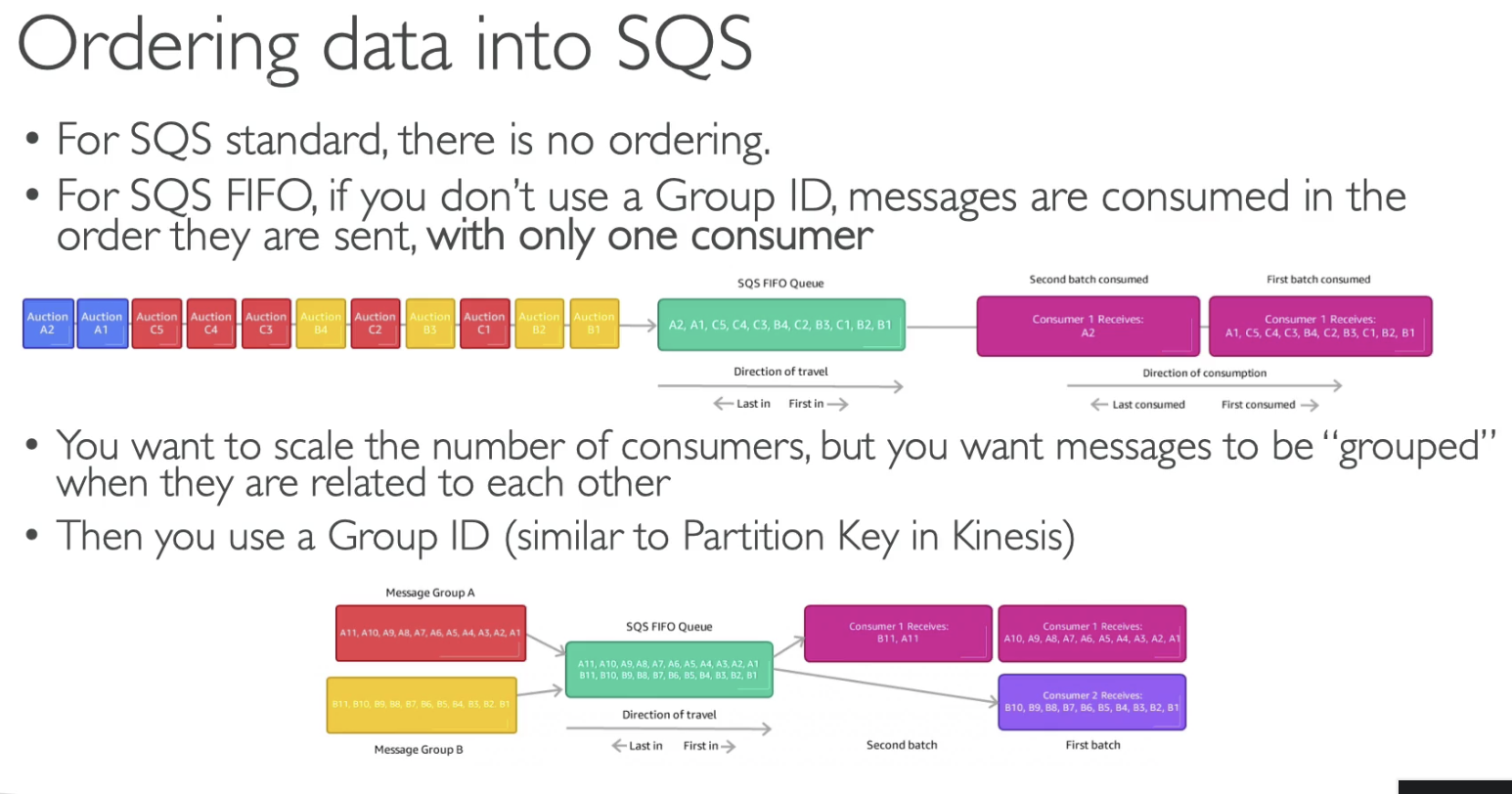

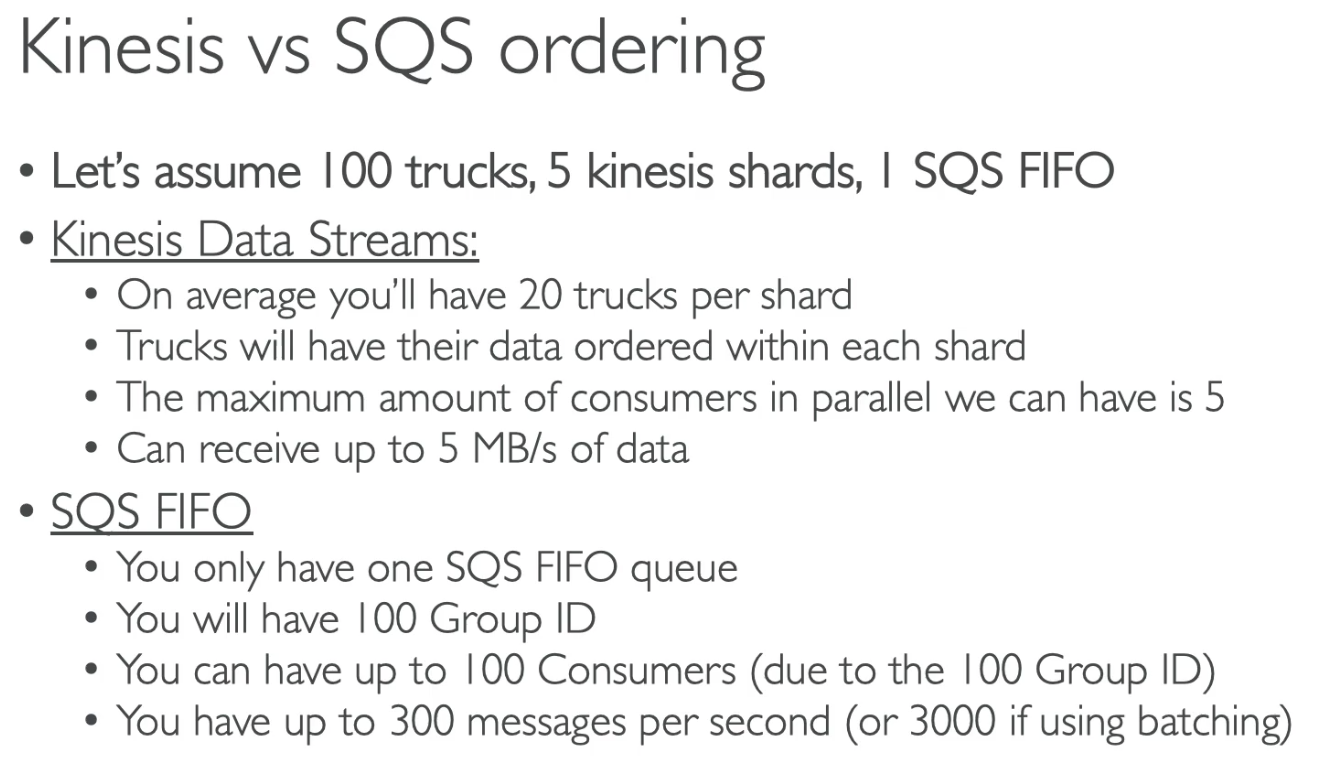

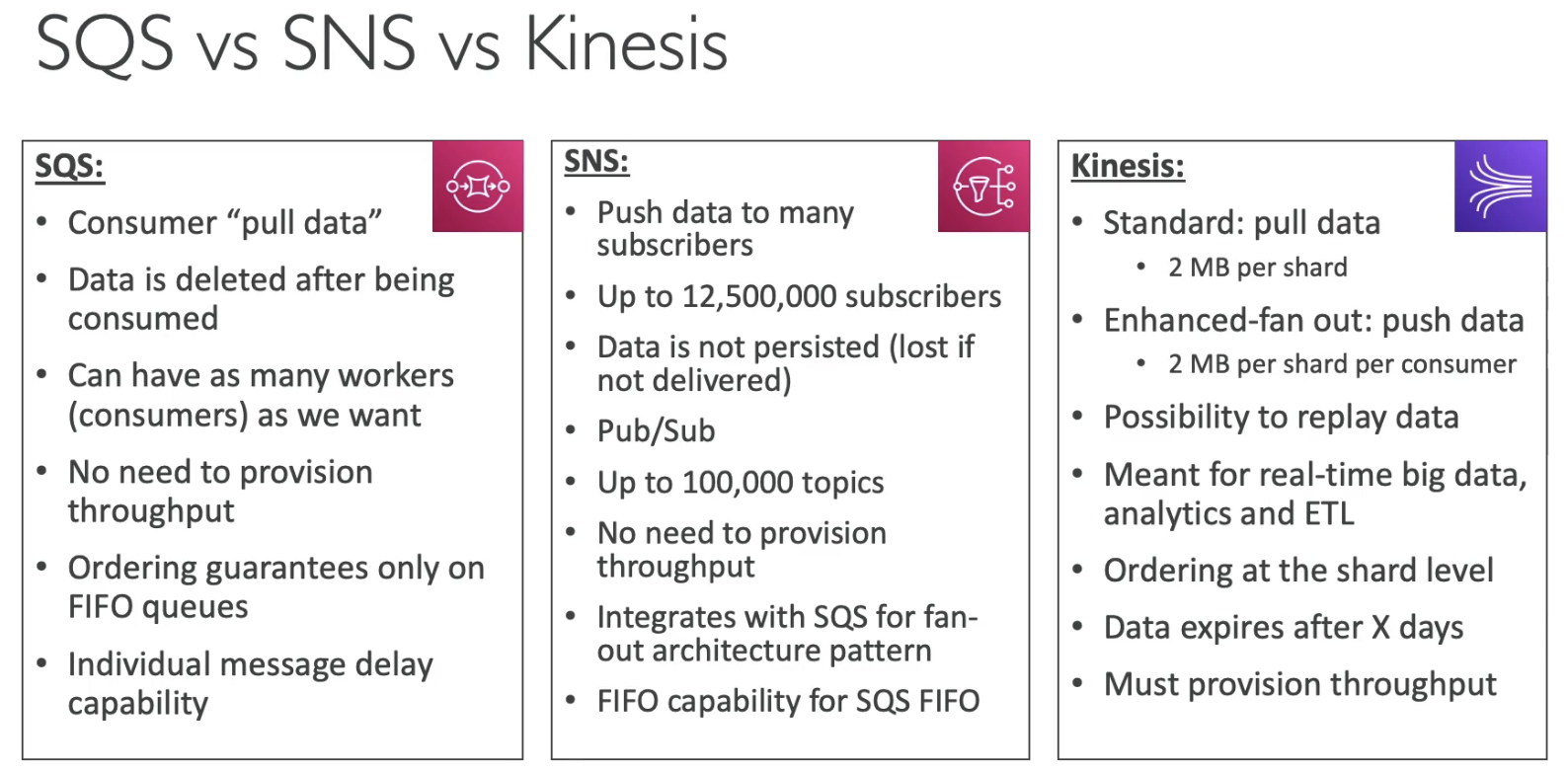

- For SQS FIFO, use the Group ID (`MessageGroupId`) to group related messages together

- Same partition key goes to the same shard

Kinesis: max number of parallel consumers = number of shards

SQS FIFO: 100 Group IDs = up to 100 consumers
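The "number of groups = number of consumers" idea can be modelled locally. This is a toy model in plain Python, not the SQS API: the function names and the `(group_id, body)` tuple shape are assumptions for illustration.

```python
from collections import defaultdict


def group_messages(messages):
    """Group (group_id, body) pairs by group id, keeping arrival order per group."""
    groups = defaultdict(list)
    for group_id, body in messages:
        groups[group_id].append(body)
    return dict(groups)


def max_parallel_consumers(messages) -> int:
    """One consumer per message group can work at a time while preserving order."""
    return len(group_messages(messages))


messages = [("truck-1", "a"), ("truck-2", "b"), ("truck-1", "c")]
print(group_messages(messages))          # {'truck-1': ['a', 'c'], 'truck-2': ['b']}
print(max_parallel_consumers(messages))  # 2
```

Ordering only matters inside a group, so parallelism grows with the number of distinct Group IDs. In Kinesis the equivalent limit is the number of shards.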

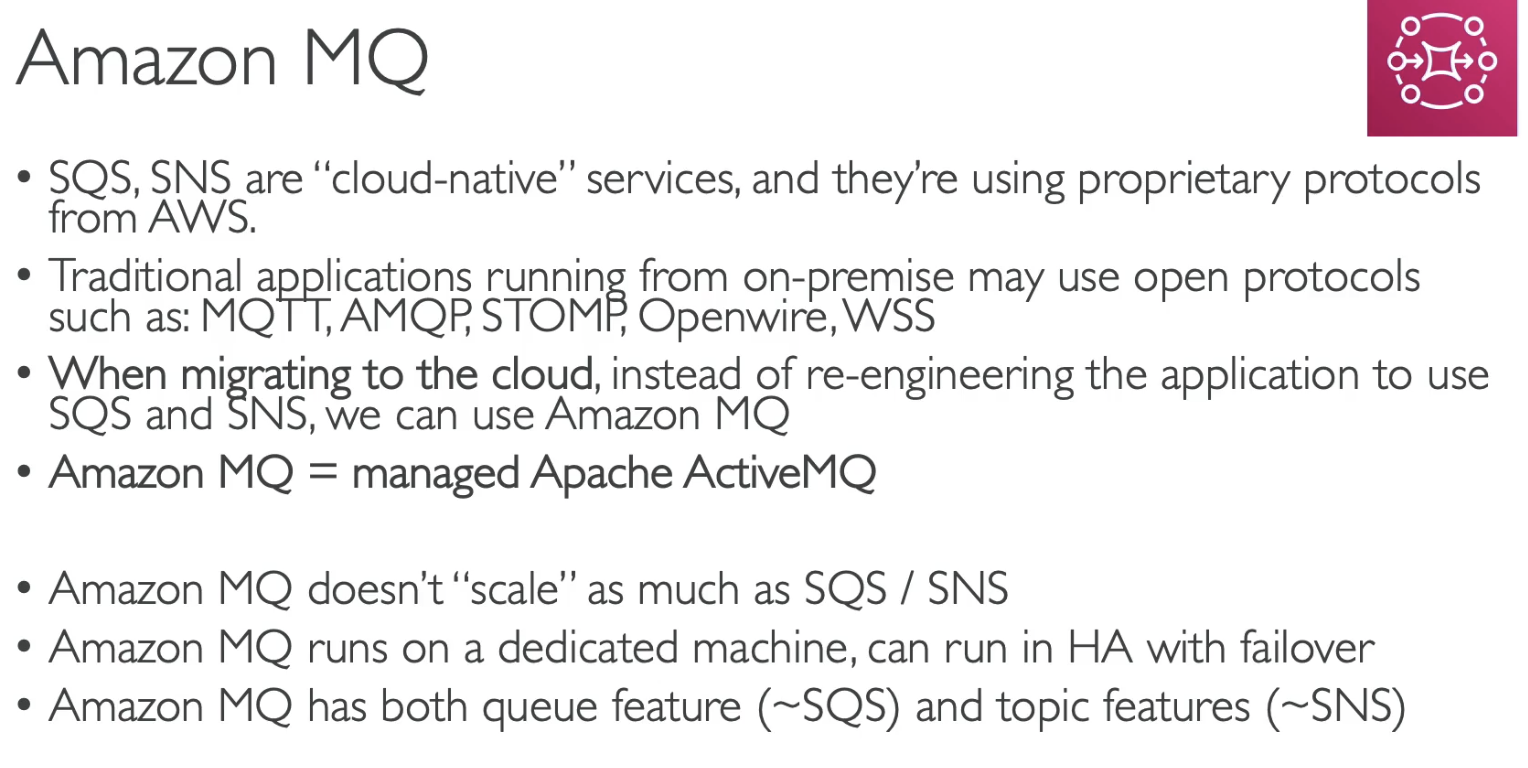

- STOMP, AMQP, MQTT protocols

- Migrating application to cloud

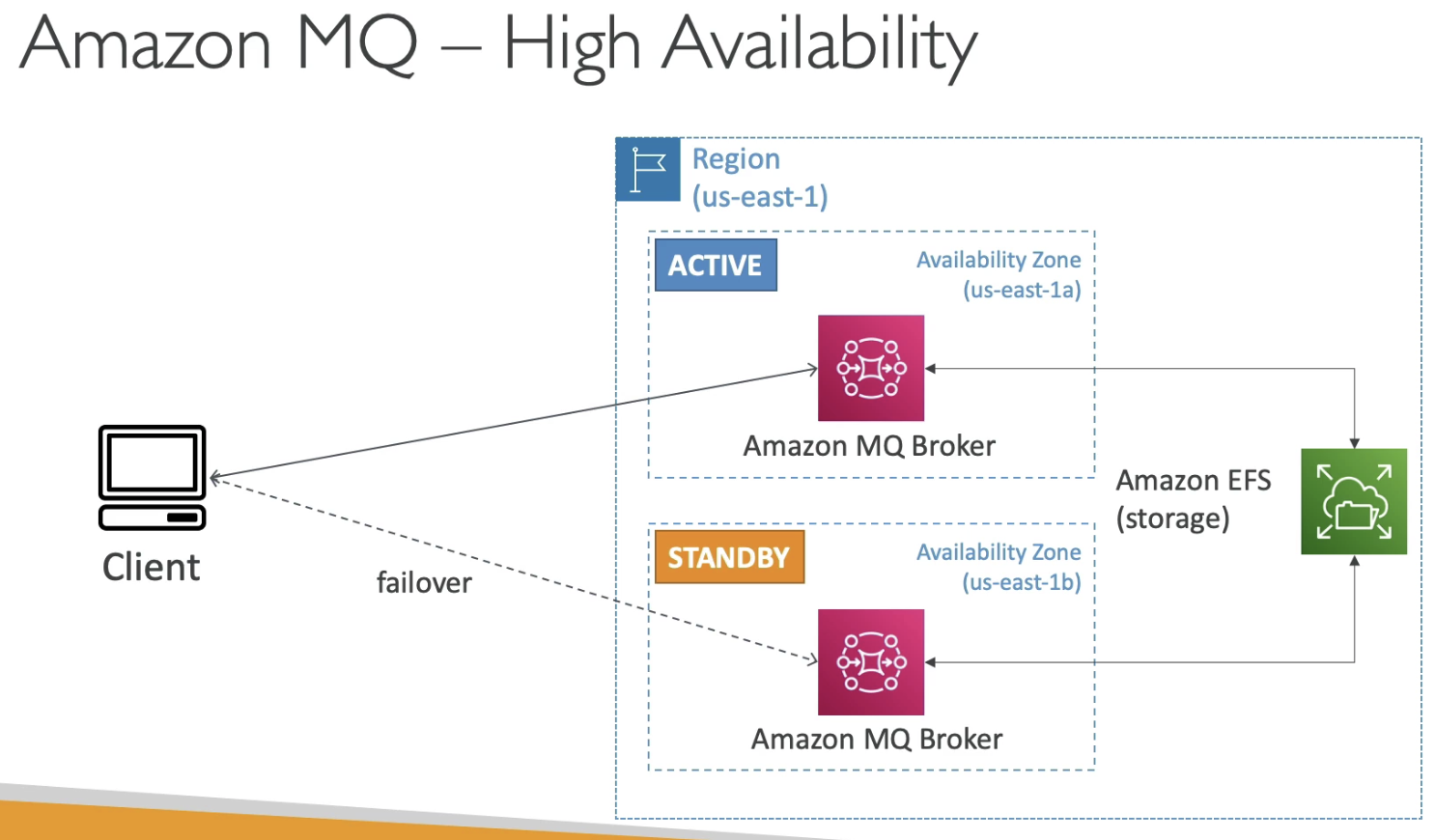

- Has Failover

- Using EFS as storage

SAP

- Kinesis data is automatically replicated synchronously to 3 AZ

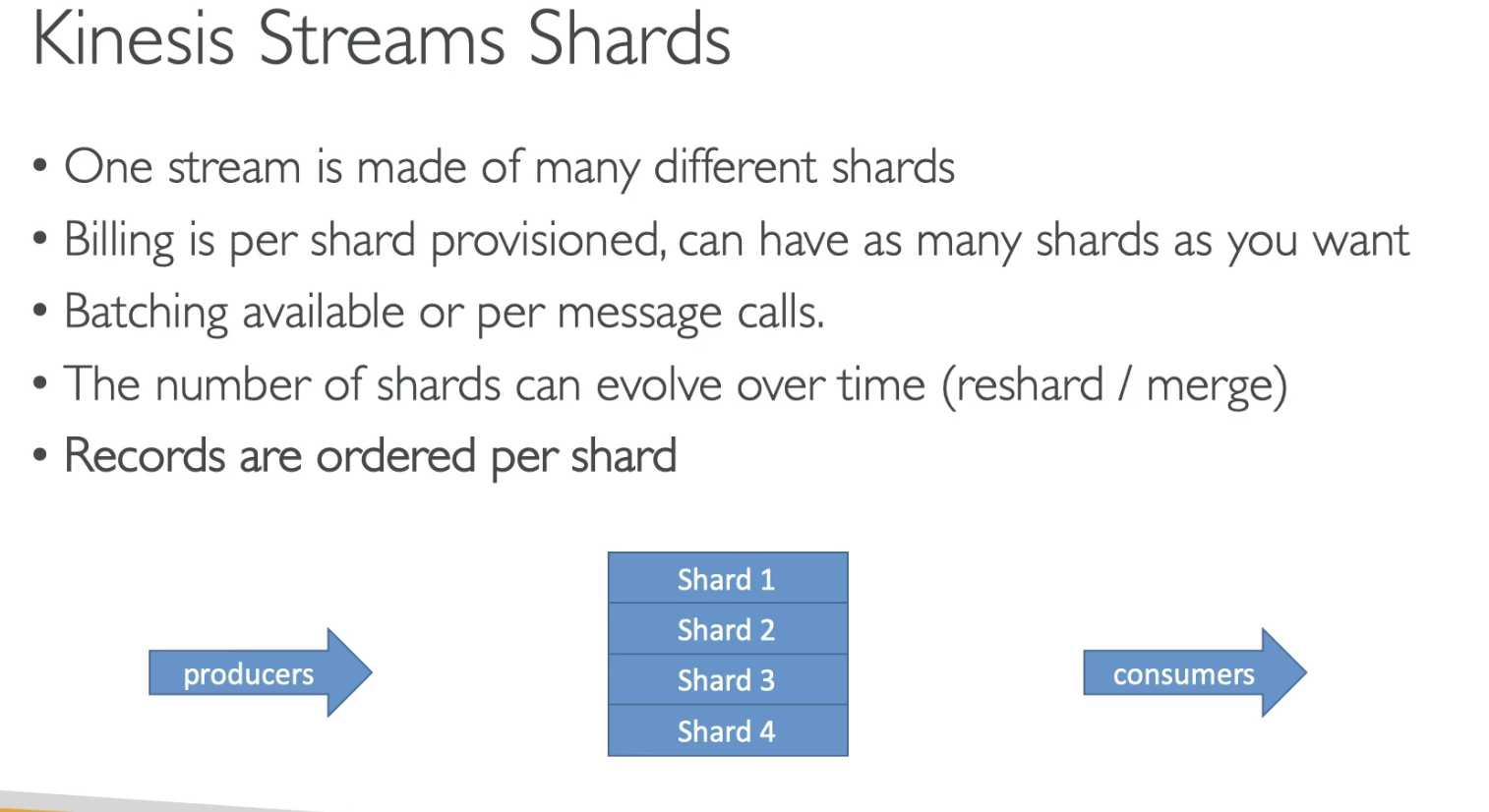

- Order at Shard level

- You can merge shard / reshard
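Splitting and merging can be pictured as operations on hash-key ranges. A sketch only: the helper names are hypothetical, and splitting at the midpoint is an assumption (the real split lets you choose the new starting hash key).

```python
def split_range(start: int, end: int):
    """Split an inclusive hash-key range into two halves (like splitting a shard)."""
    if end <= start:
        raise ValueError("range too small to split")
    mid = (start + end) // 2
    return (start, mid), (mid + 1, end)


def merge_ranges(left, right):
    """Merge two adjacent inclusive ranges (like merging two shards)."""
    if left[1] + 1 != right[0]:
        raise ValueError("ranges must be adjacent")
    return (left[0], right[1])


whole = (0, 2 ** 128 - 1)
low, high = split_range(*whole)
print(merge_ranges(low, high) == whole)  # True
```

Split a hot shard to add capacity; merge two cold, adjacent shards to save cost.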

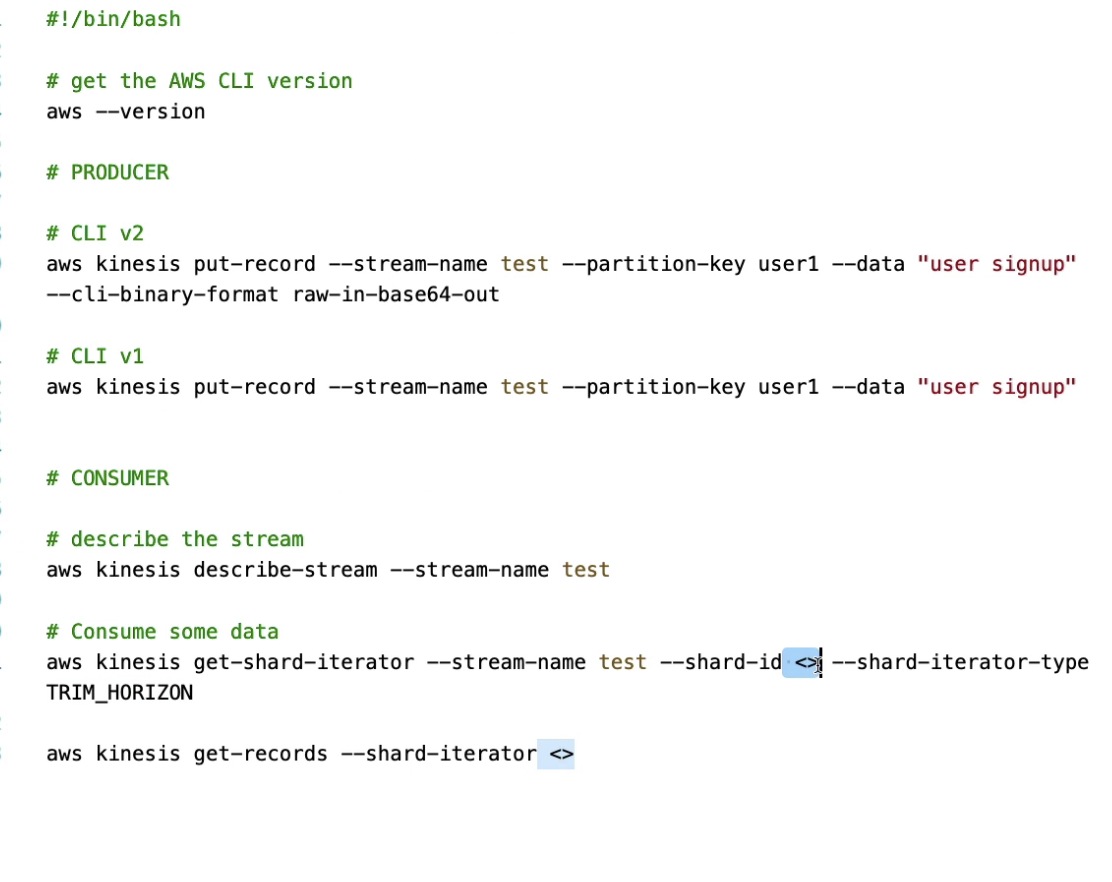

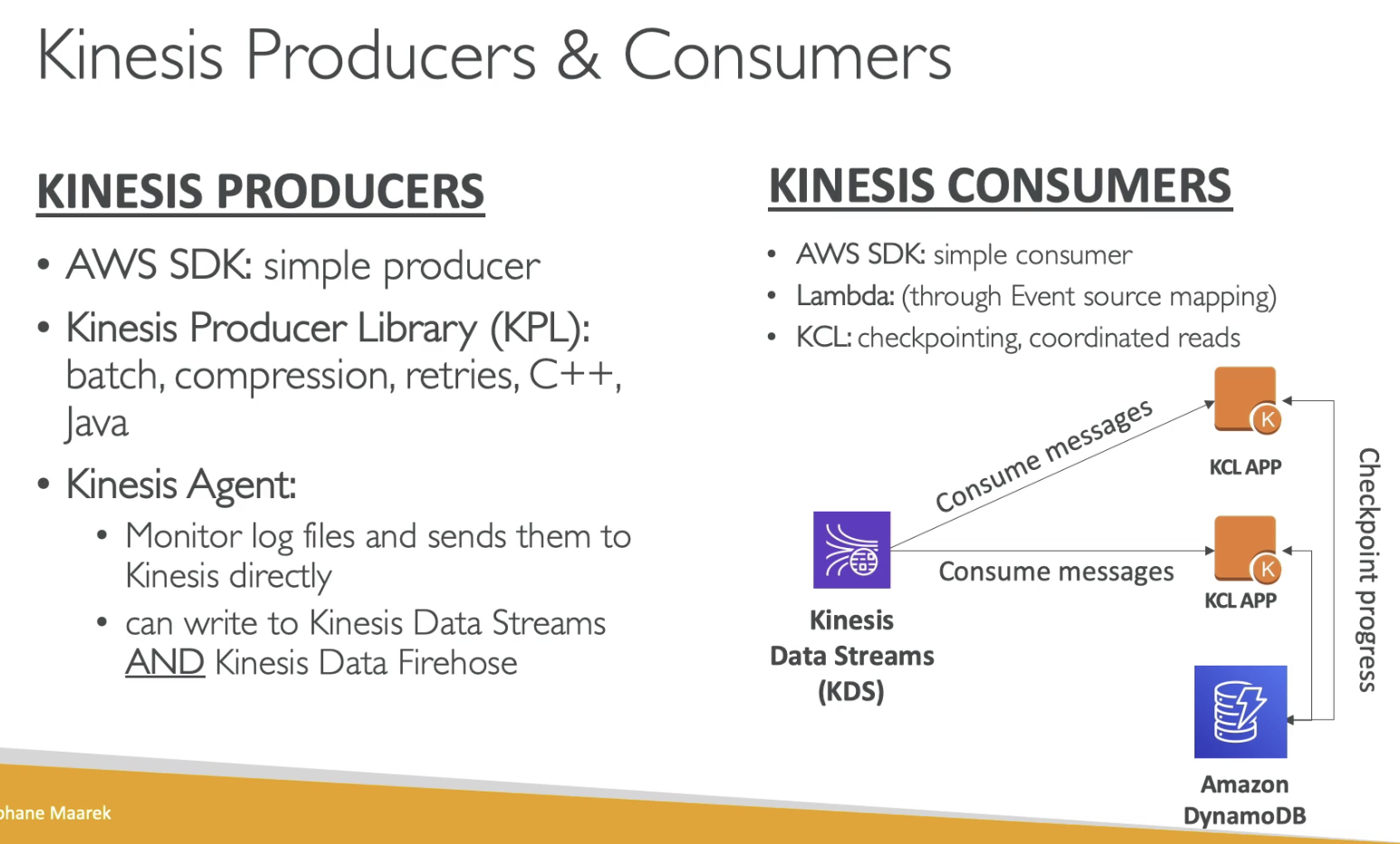

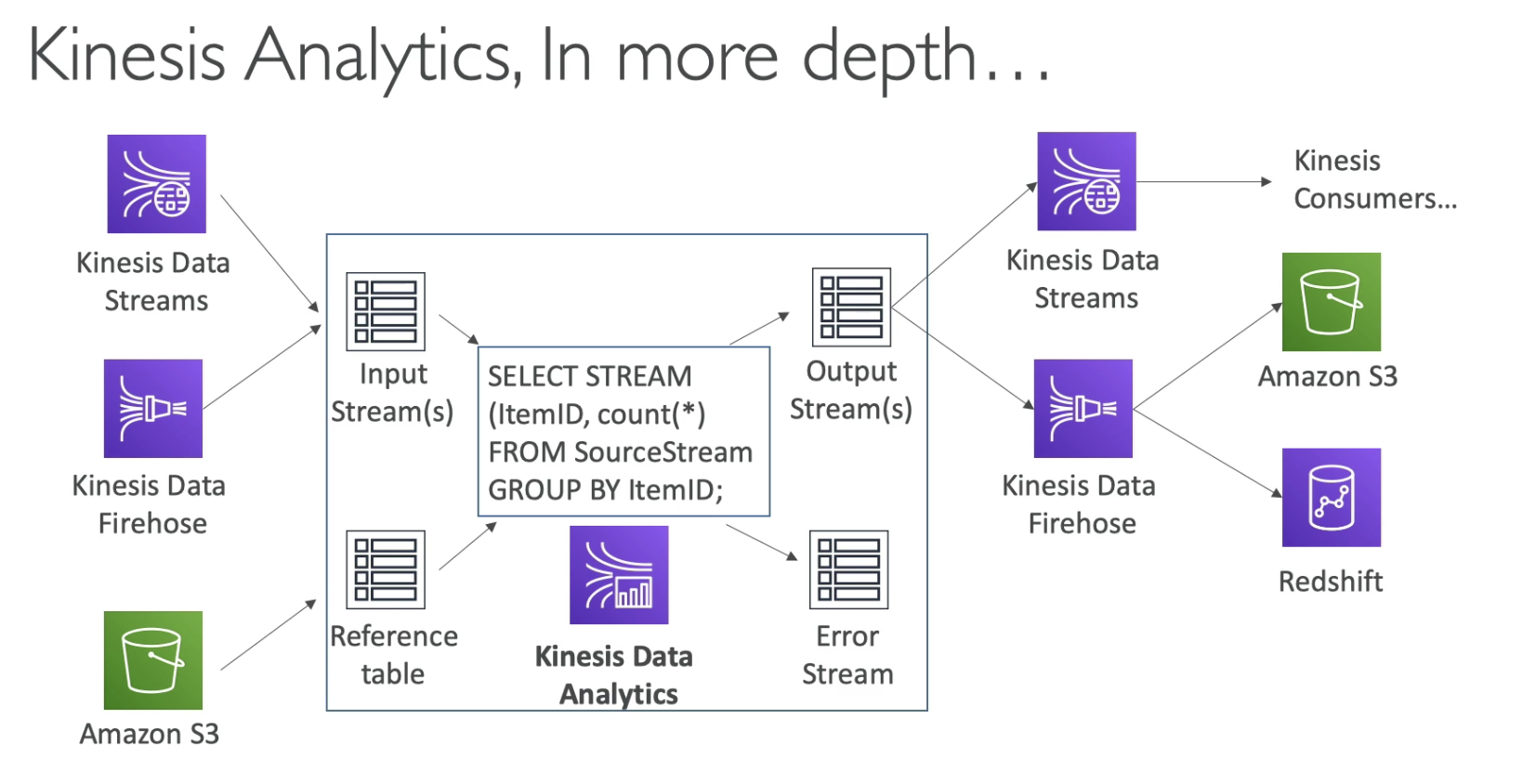

- Producers

  - KPL (Kinesis Producer Library) for C++ and Java

  - Kinesis Agent: can write to Kinesis Data Streams and Kinesis Data Firehose

- Consumers

  - KCL (Kinesis Client Library): checkpointing and coordinated reads, with state written to DynamoDB
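What checkpointing buys you, as a toy sketch. `CheckpointStore` is a hypothetical in-memory stand-in for the DynamoDB table, and integer sequence numbers are a simplification; this is not the KCL API.

```python
class CheckpointStore:
    """In-memory stand-in for the DynamoDB checkpoint table (hypothetical class)."""

    def __init__(self):
        self._table = {}

    def save(self, shard_id: str, sequence_number: int) -> None:
        self._table[shard_id] = sequence_number

    def load(self, shard_id: str) -> int:
        return self._table.get(shard_id, -1)  # -1: nothing processed yet


def consume(shard_id, records, store, handler):
    """Process records after the last checkpoint, checkpointing each one."""
    last = store.load(shard_id)
    for seq, data in records:
        if seq <= last:
            continue  # already processed before a restart
        handler(data)
        store.save(shard_id, seq)


store, seen = CheckpointStore(), []
consume("shard-1", [(0, "a"), (1, "b")], store, seen.append)
# Simulated restart: the same shard is read again from the beginning.
consume("shard-1", [(0, "a"), (1, "b"), (2, "c")], store, seen.append)
print(seen)  # ['a', 'b', 'c']
```

After a restart, the consumer resumes from the stored position instead of reprocessing the whole shard.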

- Firehose can back up source records to S3

- Transformation failures can be sent to S3

- Delivery failures can be sent to S3

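- Firehose is near real time because it buffers records before delivery

- Buffer size (e.g. 32 MB): once the buffer reaches the configured size, it is flushed

- Buffer interval (e.g. 1 min): once the interval elapses, the buffer is flushed even if it is not full

- Firehose can automatically increase the buffer size to increase throughput

- If you need real-time flush, use Data Streams with Lambda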
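The size-or-time flush rule can be modelled in a few lines. A toy model only: the class name, the explicit `now` timestamps, and the tiny limits are assumptions for illustration, not Firehose internals.

```python
class BufferSketch:
    """Toy model of a buffer that flushes on size or on elapsed time."""

    def __init__(self, max_bytes: int, max_seconds: float):
        self.max_bytes = max_bytes
        self.max_seconds = max_seconds
        self.records = []
        self.size = 0
        self.opened_at = None
        self.flushed = []  # each flush appends one batch here

    def add(self, record: bytes, now: float) -> None:
        if self.opened_at is None:
            self.opened_at = now  # the interval starts with the first record
        self.records.append(record)
        self.size += len(record)
        if self.size >= self.max_bytes:
            self.flush()  # size limit reached

    def tick(self, now: float) -> None:
        if self.records and now - self.opened_at >= self.max_seconds:
            self.flush()  # time limit reached, even if the buffer is not full

    def flush(self) -> None:
        self.flushed.append(self.records)
        self.records, self.size, self.opened_at = [], 0, None


buf = BufferSketch(max_bytes=10, max_seconds=60)
buf.add(b"12345", now=0)
buf.add(b"67890", now=1)   # 10 bytes reached: flushed by size
buf.add(b"x", now=2)
buf.tick(now=62)           # 60 s elapsed: flushed by time
print(len(buf.flushed))    # 2
```

Whichever limit is hit first triggers the flush, which is why low-volume streams still wait for the interval to elapse.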

