[SAA + SAP] 13. S3

SAA

- Max object size 5000 GB / 5 TB

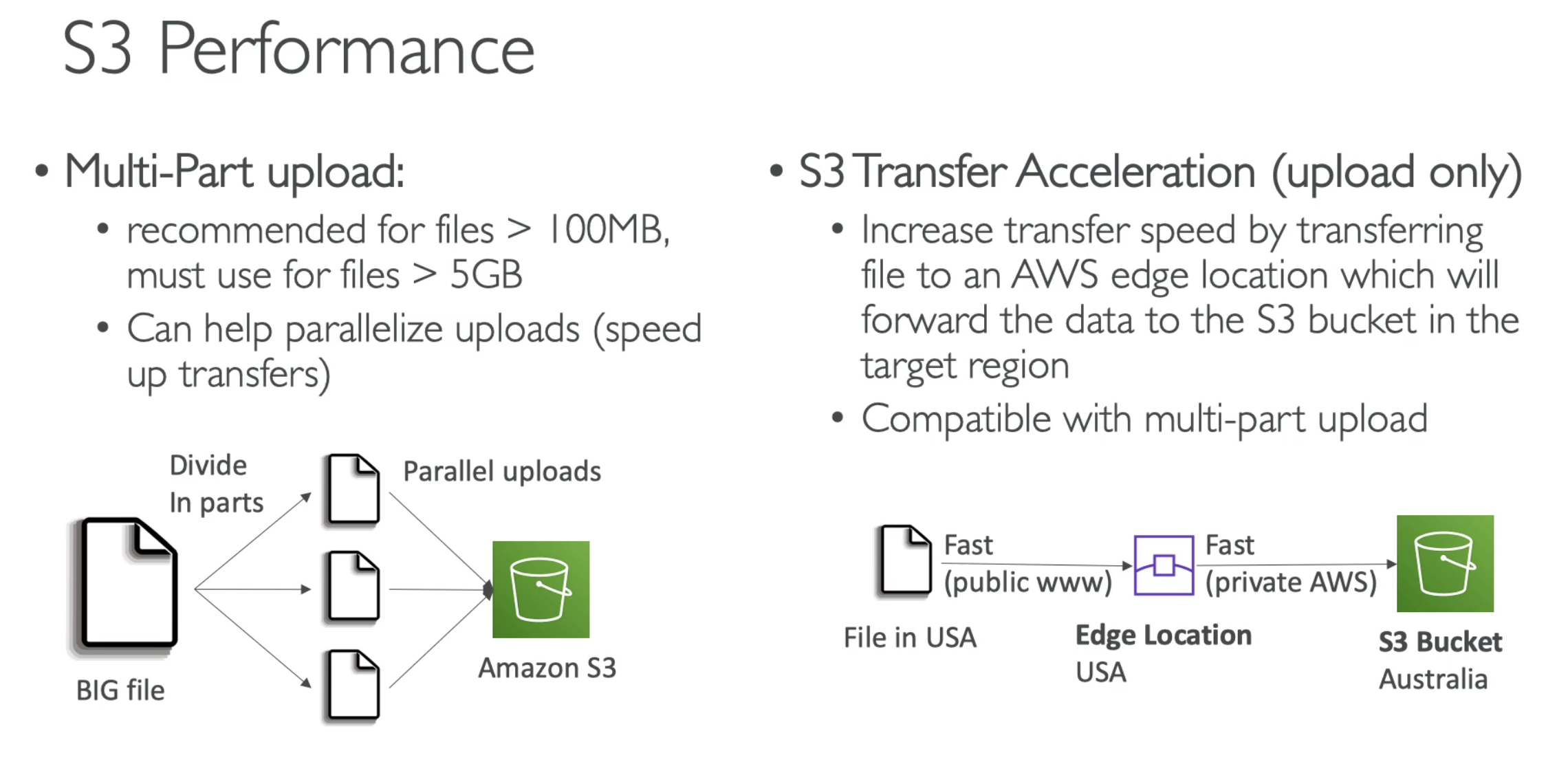

- Uploading an object larger than 5 GB requires multipart upload

- Only the root account (bucket owner) can enable or disable MFA Delete

- MFA Delete must be enabled through the CLI
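The multipart rule noted above can be sketched as a small planner that splits an object into parts. This is only an illustration: the function name `plan_parts` and the 100 MiB default part size are assumptions, and the limits encoded here (5 MiB minimum part, 10,000 parts maximum) are the commonly documented S3 limits, expressed in binary units.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

MAX_OBJECT_SIZE = 5 * TIB   # largest object S3 stores
MAX_SINGLE_PUT = 5 * GIB    # above this a single PUT is not possible
MIN_PART_SIZE = 5 * MIB     # applies to every part except the last
MAX_PART_SIZE = 5 * GIB
MAX_PARTS = 10_000


def needs_multipart(object_size):
    """True when the object is too large for a single PUT."""
    return object_size > MAX_SINGLE_PUT


def plan_parts(object_size, part_size=100 * MIB):
    """Return a list of (part_number, first_byte, last_byte) tuples (hypothetical helper)."""
    if not 0 < object_size <= MAX_OBJECT_SIZE:
        raise ValueError("object size must be between 1 byte and 5 TiB")
    if not MIN_PART_SIZE <= part_size <= MAX_PART_SIZE:
        raise ValueError("part size must be between 5 MiB and 5 GiB")
    # Grow the part size if needed so the upload stays within 10,000 parts.
    smallest_allowed = -(-object_size // MAX_PARTS)  # ceiling division
    part_size = max(part_size, smallest_allowed)

    parts = []
    offset = 0
    number = 1
    while offset < object_size:
        last = min(offset + part_size, object_size) - 1
        parts.append((number, offset, last))
        offset = last + 1
        number += 1
    return parts
```

For example, `plan_parts(6 * GIB)` yields 62 parts of at most 100 MiB each. In practice the SDK transfer utilities usually handle this splitting for you.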

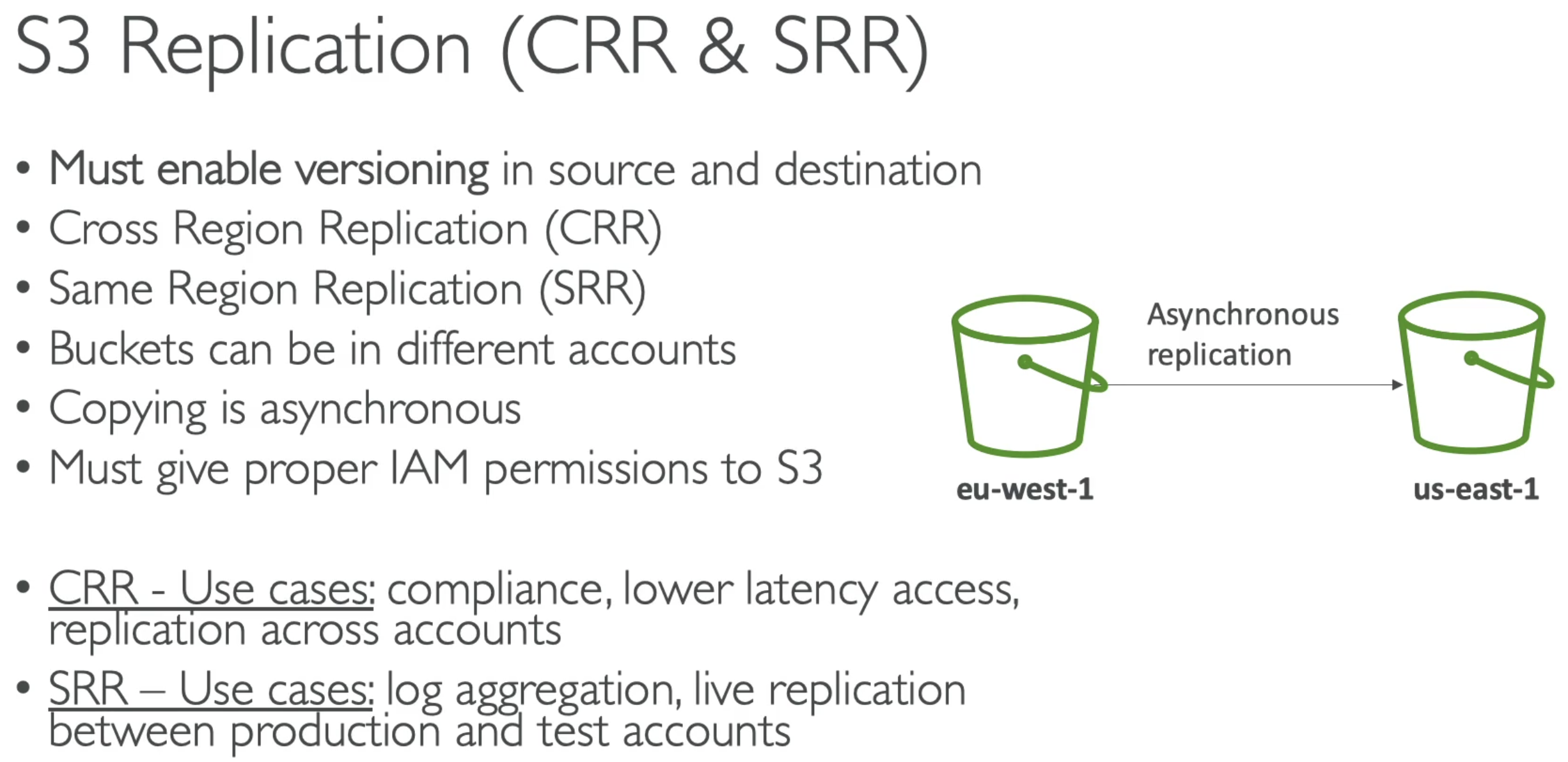

- Must enable versioning

- IAM permission

- After activation, only new objects are replicated

- For Delete operations:

- Can replicate delete markers from source to target (optional setting)

- Deletions with a version ID are not replicated (to avoid malicious deletes)

- There is no "chaining" of replication

- If bucket 1 replicates into bucket 2, which replicates into bucket 3

- Then objects created in bucket 1 are not replicated to bucket 3
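The replication notes above (IAM permission, optional delete-marker replication) map onto a replication configuration document. Below is a minimal sketch that only builds that document as a Python dict; the helper name, the rule ID, and the ARNs in the usage note are hypothetical placeholders, and versioning must already be enabled on both buckets.

```python
def build_replication_config(role_arn, destination_bucket_arn,
                             replicate_delete_markers=False):
    """Build a replication configuration dict (hypothetical helper).

    role_arn: IAM role that S3 assumes to replicate objects.
    replicate_delete_markers: the optional setting from the notes.
    Deletions that name a version ID are never replicated, so there
    is no switch for them here.
    """
    status = "Enabled" if replicate_delete_markers else "Disabled"
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": "replicate-everything",   # assumed rule name
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},       # empty prefix = whole bucket
                "DeleteMarkerReplication": {"Status": status},
                "Destination": {"Bucket": destination_bucket_arn},
            }
        ],
    }


# With boto3 installed and credentials configured, the dict could be applied as:
#   boto3.client("s3").put_bucket_replication(
#       Bucket="source-bucket", ReplicationConfiguration=config)
```

A call such as `build_replication_config("arn:aws:iam::123456789012:role/replication-role", "arn:aws:s3:::bucket-2", replicate_delete_markers=True)` produces a rule for bucket 1 to bucket 2 only. Getting objects into bucket 3 as well would need a separate rule on bucket 1, since replication does not chain.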

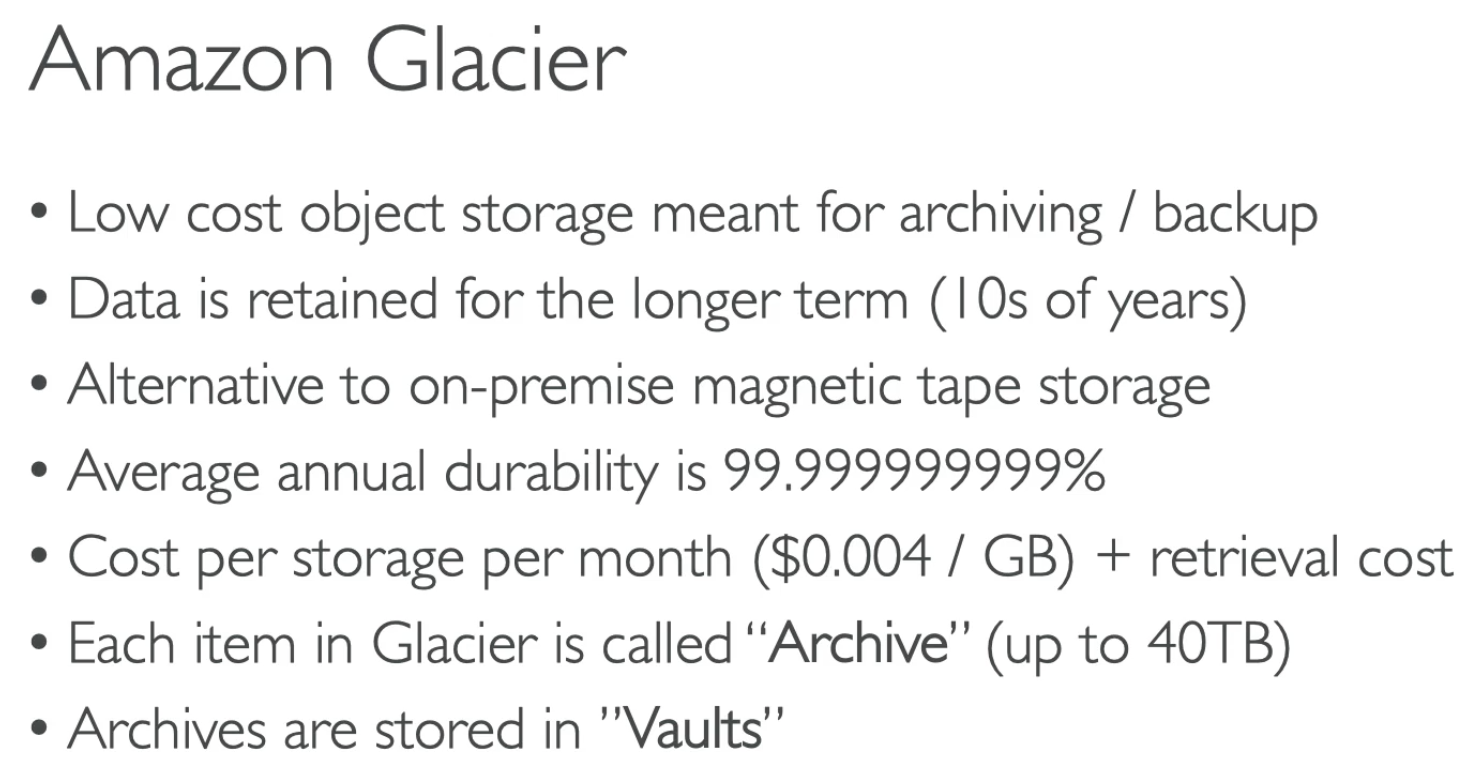

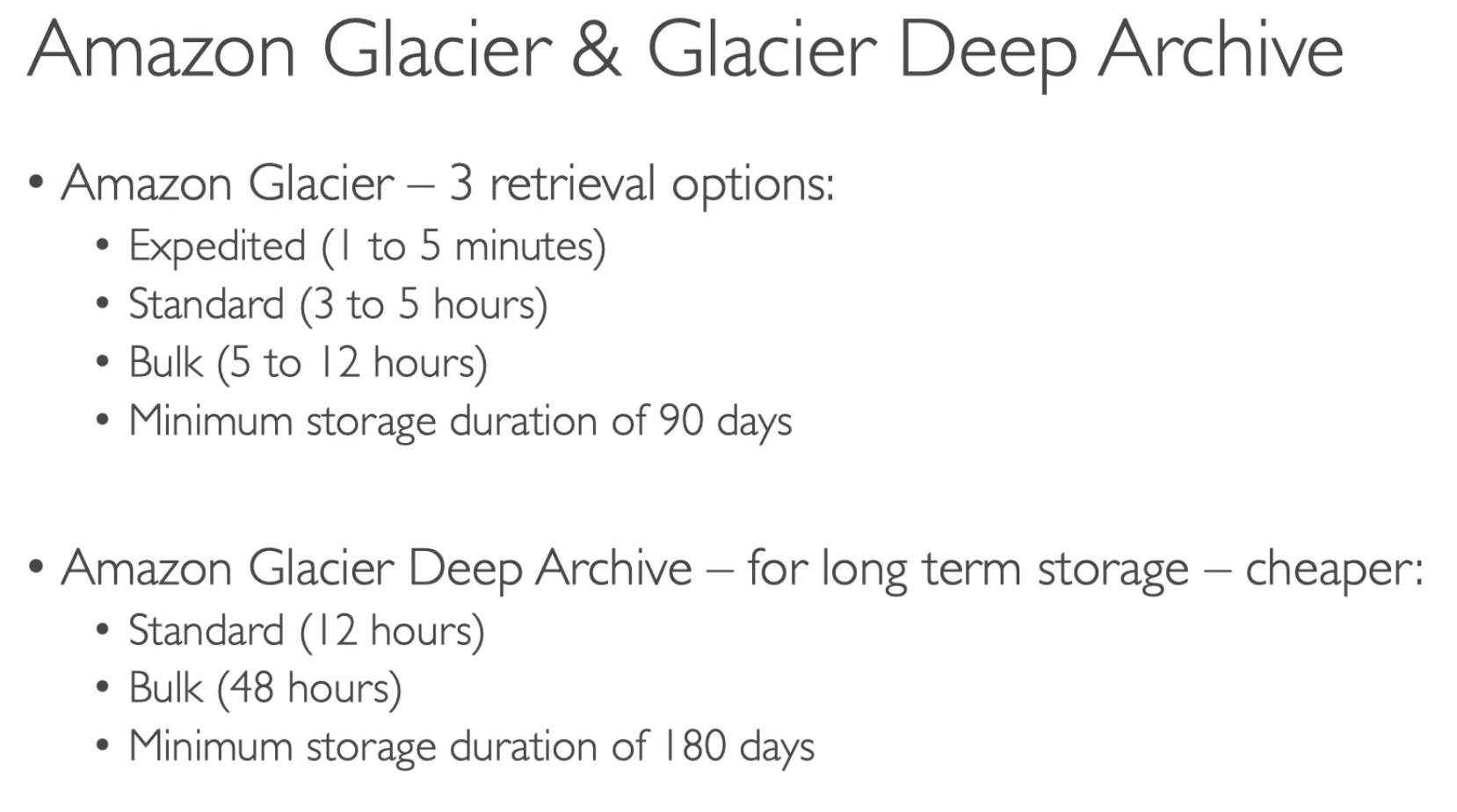

- Glacier: 90-day minimum storage duration, retrieval within 12 hours

- Deep Archive: 180-day minimum storage duration, retrieval within 48 hours

- Thumbnails can be easily recreated, so they can be stored in One Zone-IA

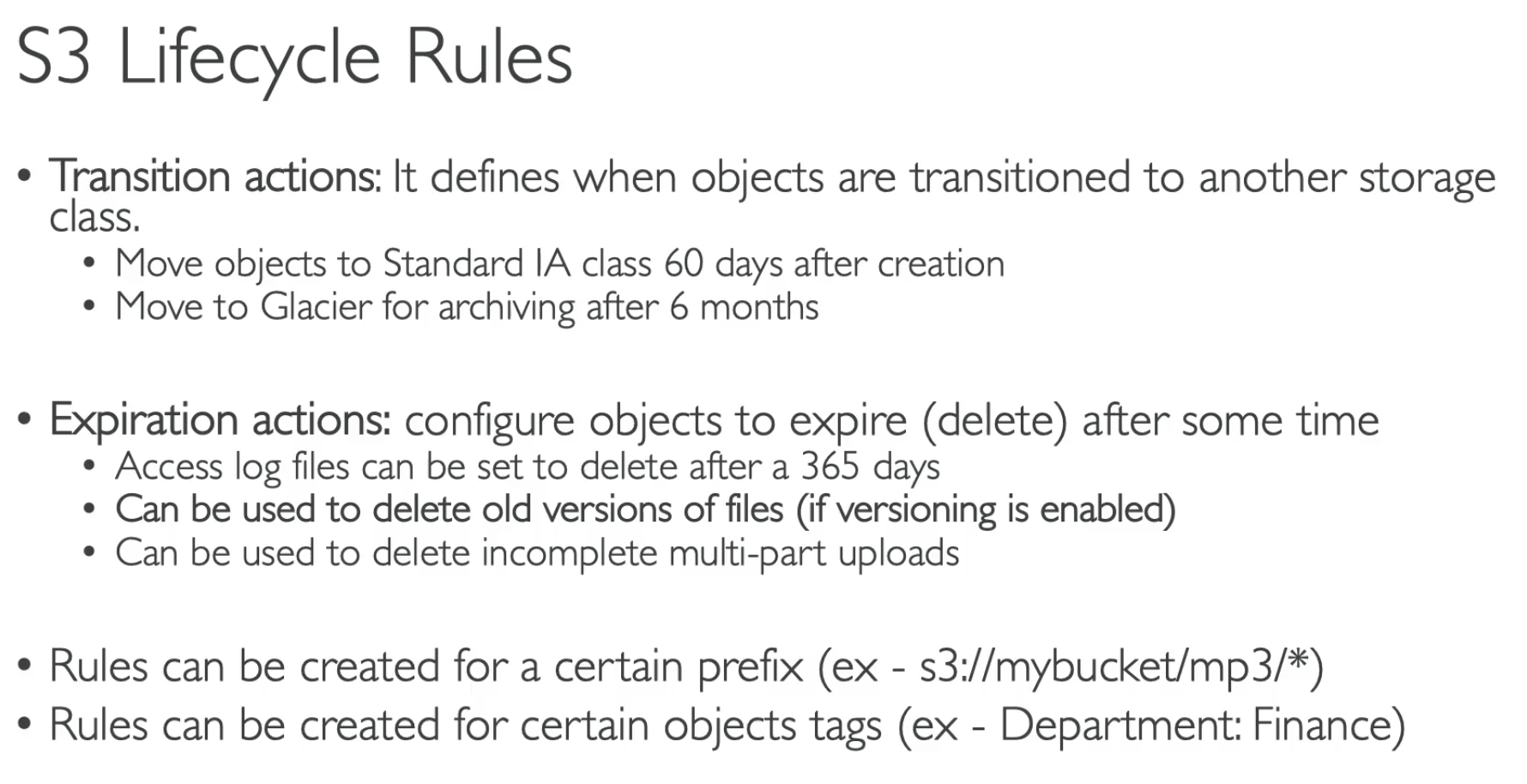

- If data must be retrievable within 6 hours after 45 days, use Glacier

- To recover deleted objects, enable versioning first

- Once versioning is enabled, noncurrent versions can be transitioned to Standard-IA

- 365 days with 48-hour retrieval means Deep Archive

- Helps analyze after how many days objects should be transitioned to IA or Glacier
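The lifecycle scenarios above can be written down as a lifecycle configuration. The following is a minimal sketch that builds such a document as a dict; the helper name, rule ID, and the 30-day threshold for noncurrent versions are assumptions chosen for illustration, while the 45-day and 365-day values come from the notes.

```python
def build_lifecycle_config(glacier_after_days=45,
                           deep_archive_after_days=365,
                           noncurrent_ia_after_days=30):
    """Build a lifecycle configuration dict (hypothetical helper)."""
    if glacier_after_days >= deep_archive_after_days:
        raise ValueError("Glacier transition must come before Deep Archive")
    return {
        "Rules": [
            {
                "ID": "archive-and-tier-old-versions",  # assumed rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},               # whole bucket
                # Current versions: Glacier first, Deep Archive later.
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_after_days,
                     "StorageClass": "DEEP_ARCHIVE"},
                ],
                # Noncurrent versions (requires versioning) go to Standard-IA.
                "NoncurrentVersionTransitions": [
                    {"NoncurrentDays": noncurrent_ia_after_days,
                     "StorageClass": "STANDARD_IA"},
                ],
            }
        ]
    }


# With boto3 available, the dict could be applied as:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-bucket", LifecycleConfiguration=build_lifecycle_config())
```

The day thresholds are the part worth tuning, ideally from observed access patterns rather than guesses.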

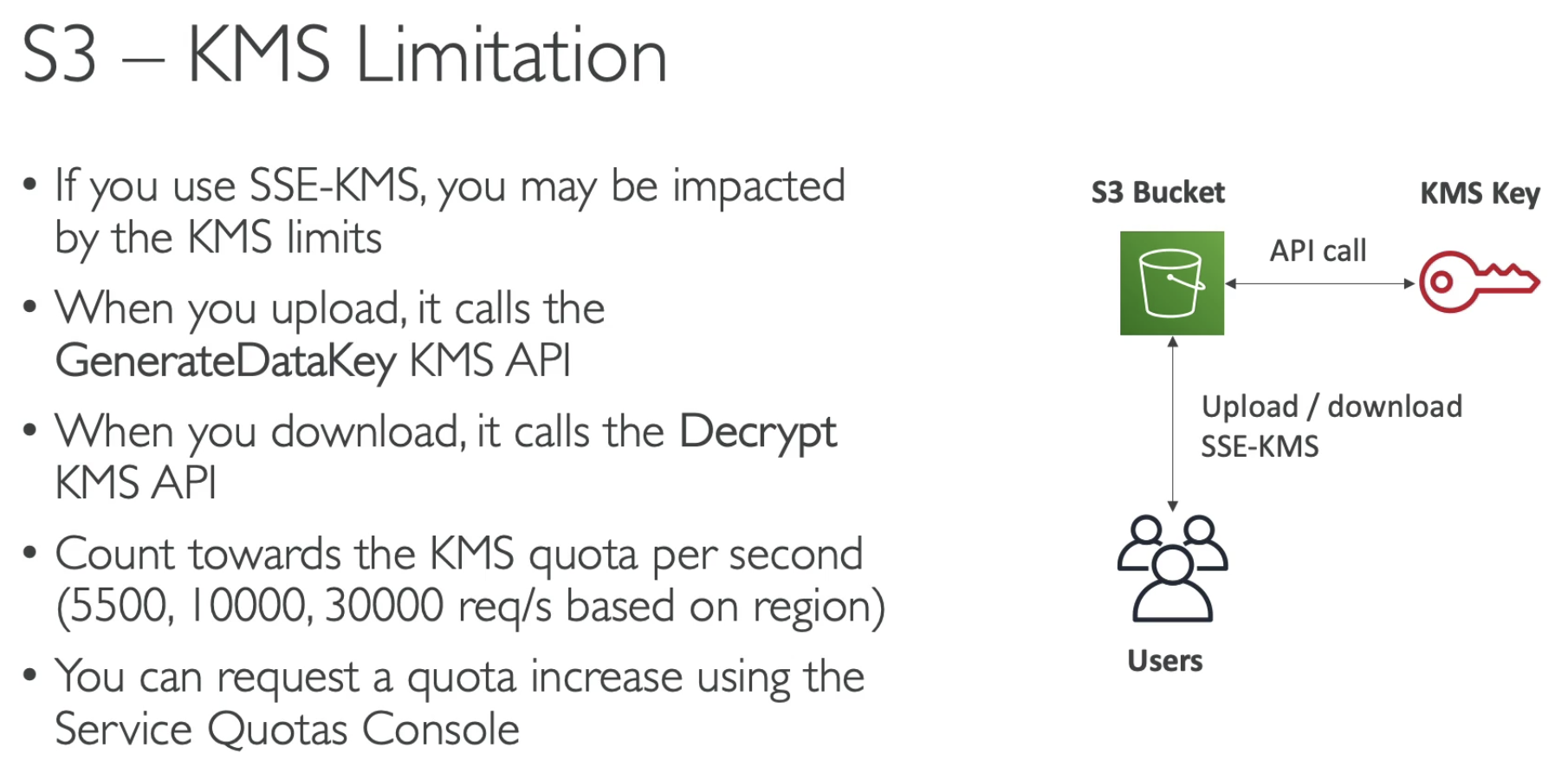

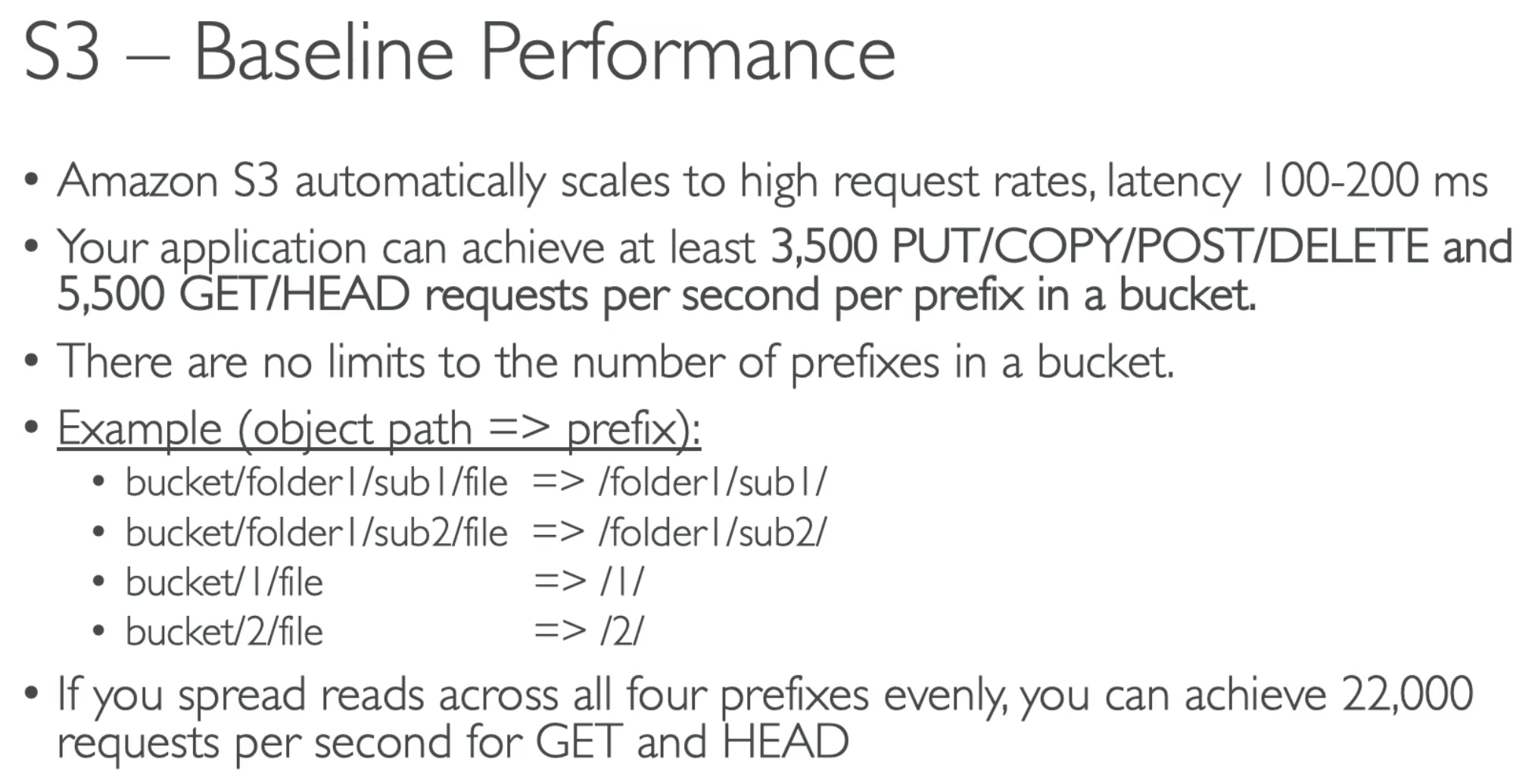

- If using SSE-KMS and the request rate exceeds the KMS quota (5,500, 10,000, or 30,000 requests/s depending on the region), you need to request a quota increase

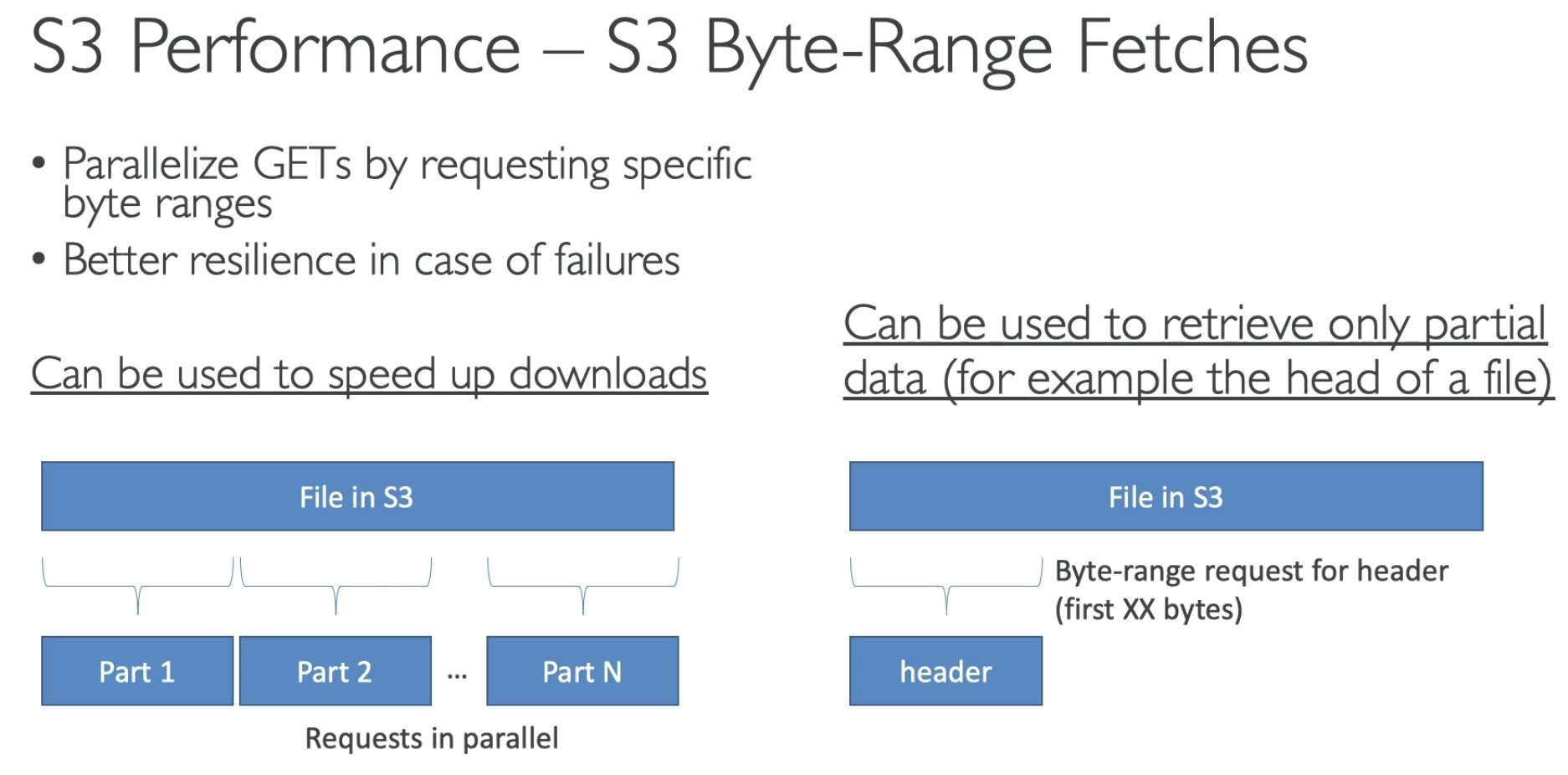

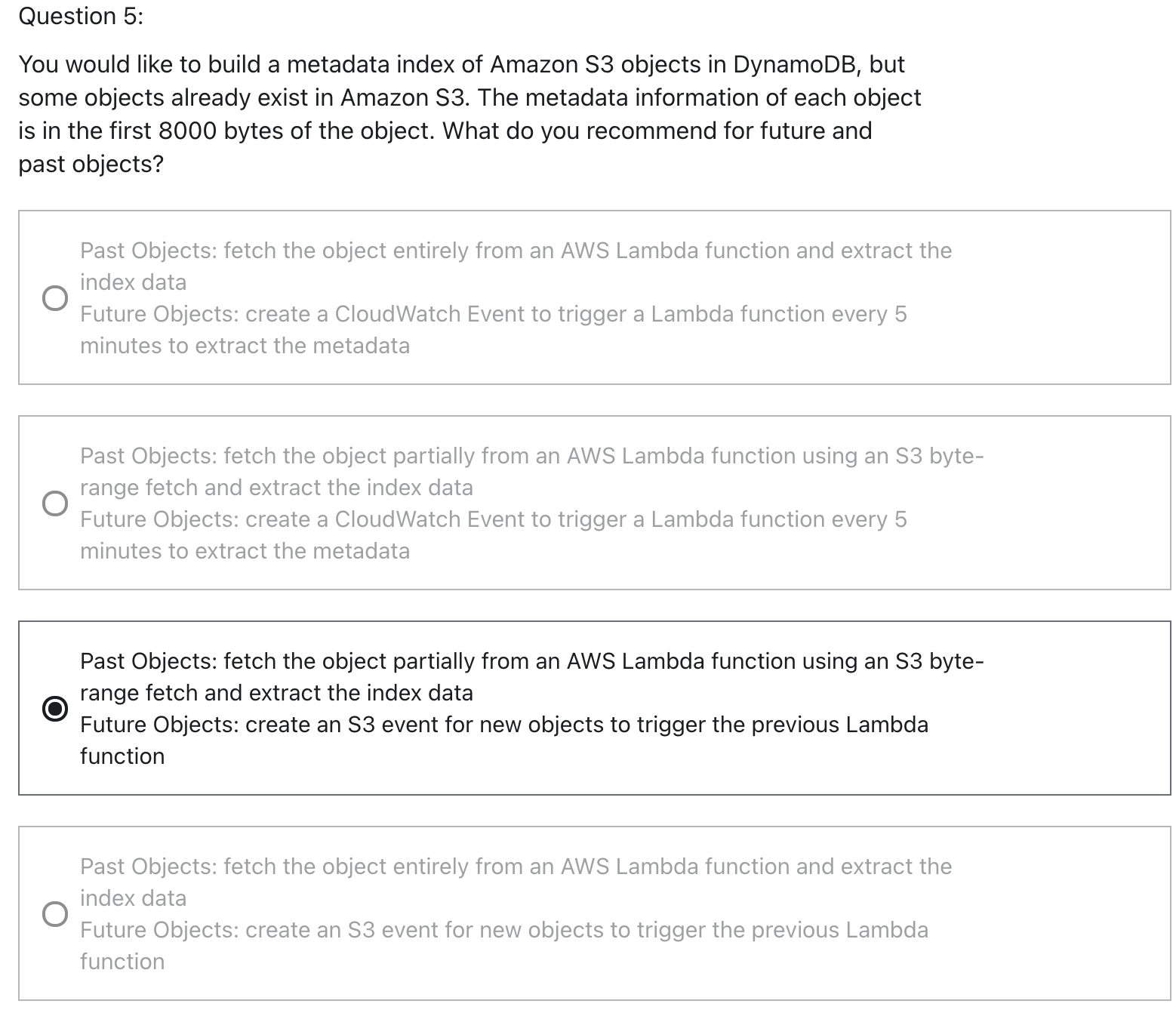

- Good for downloading large files

- Can fetch only part of a file
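A byte-range fetch boils down to sending an HTTP `Range` header per chunk. Here is a small sketch that computes those header values so the chunks can be downloaded in parallel or retried individually; the helper name `byte_ranges` is an assumption made for this example.

```python
def byte_ranges(object_size, chunk_size):
    """Return the Range header value for each chunk of an object.

    Ranges are inclusive on both ends, as in HTTP: bytes=first-last.
    """
    if object_size <= 0 or chunk_size <= 0:
        raise ValueError("sizes must be positive")
    headers = []
    for start in range(0, object_size, chunk_size):
        end = min(start + chunk_size, object_size) - 1
        headers.append(f"bytes={start}-{end}")
    return headers


# Each value could be passed to boto3 as:
#   s3.get_object(Bucket="my-bucket", Key="big.bin", Range="bytes=0-3")
print(byte_ranges(10, 4))  # ['bytes=0-3', 'bytes=4-7', 'bytes=8-9']
```

Requesting only the first range is the "get part of the file" case, for example to read a file header.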

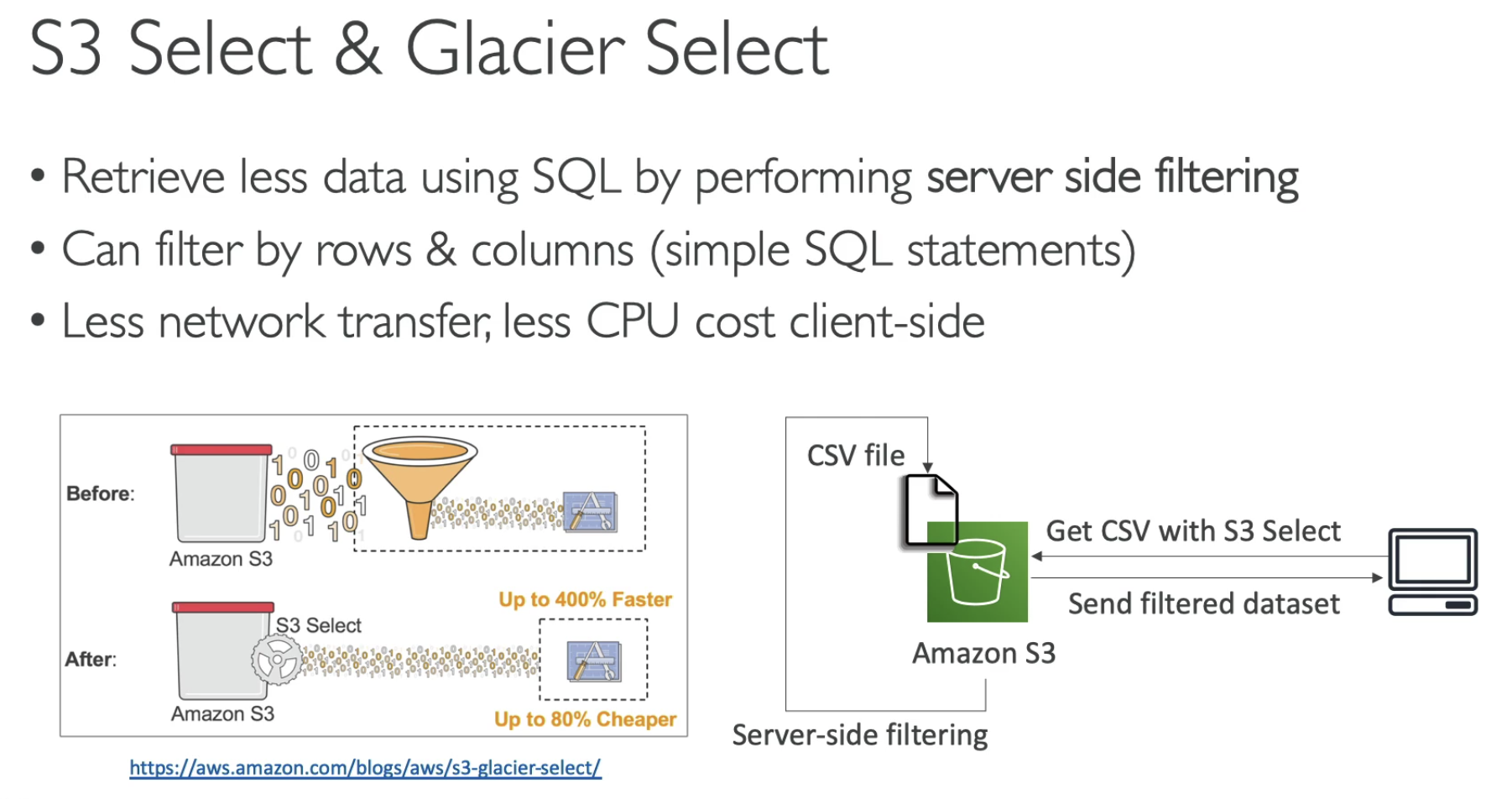

- Retrieves less data (only the data you need)

- SQL-based server-side filtering

S3 Select is NOT the same as byte-range fetches.

S3 Select: retrieves less data by filtering with SQL

Byte-range fetches: retrieve a specific range of bytes from the file (for example, the first N bytes)
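The difference can be illustrated locally without calling S3 at all. In the sketch below, both functions are stand-ins written for this example (not S3 APIs): one slices raw bytes the way a `Range` request would, the other filters rows the way a server-side SQL expression would. The sample CSV is made up.

```python
import csv
import io

# Made-up sample object content.
DATA = b"name,country,score\nana,PT,90\nbo,US,75\ncy,PT,60\n"


def byte_range_fetch(blob, first, last):
    """Stand-in for a Range request: returns bytes first..last inclusive."""
    return blob[first:last + 1]


def select_like(blob, column, predicate):
    """Stand-in for server-side filtering: returns one column of matching rows.

    Roughly what this S3 Select expression would return:
      SELECT s.name FROM S3Object s WHERE s.country = 'PT'
    """
    reader = csv.DictReader(io.StringIO(blob.decode("utf-8")))
    return [row[column] for row in reader if predicate(row)]


# Byte range: position-based, knows nothing about rows or columns.
print(byte_range_fetch(DATA, 0, 17))   # b'name,country,score'

# Select-style: content-based, returns only the matching values.
print(select_like(DATA, "name", lambda r: r["country"] == "PT"))  # ['ana', 'cy']
```

The byte range is chosen by position, so it works on any object; the SQL filter depends on the object being in a structured format such as CSV or JSON.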

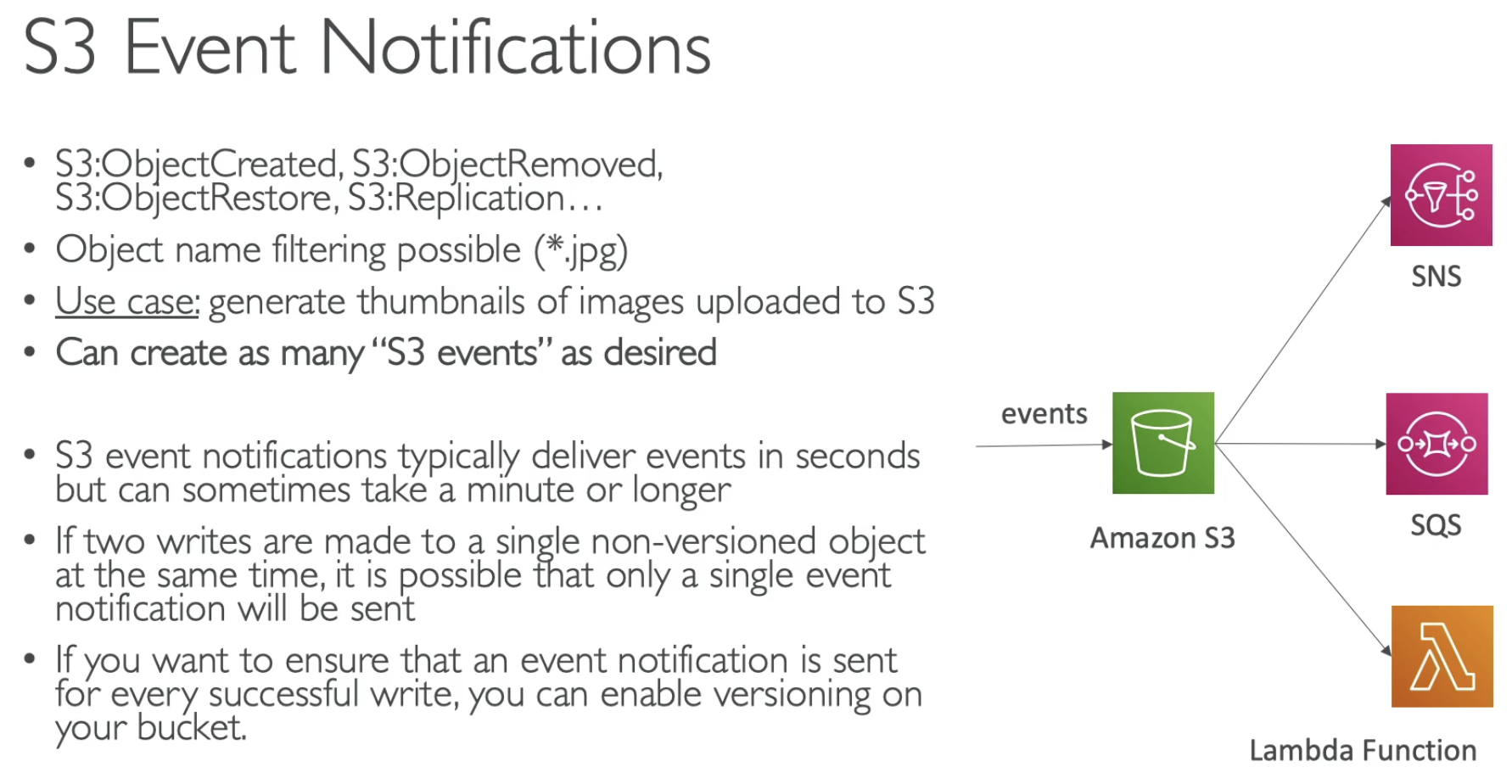

- Versioning must be enabled, so every successful write creates a new version.

- For compliance

SAP

Anti patterns

- Lots of small files: you end up paying more

- POSIX file system (use EFS instead), file locks

- Search features, queries, rapidly changing data

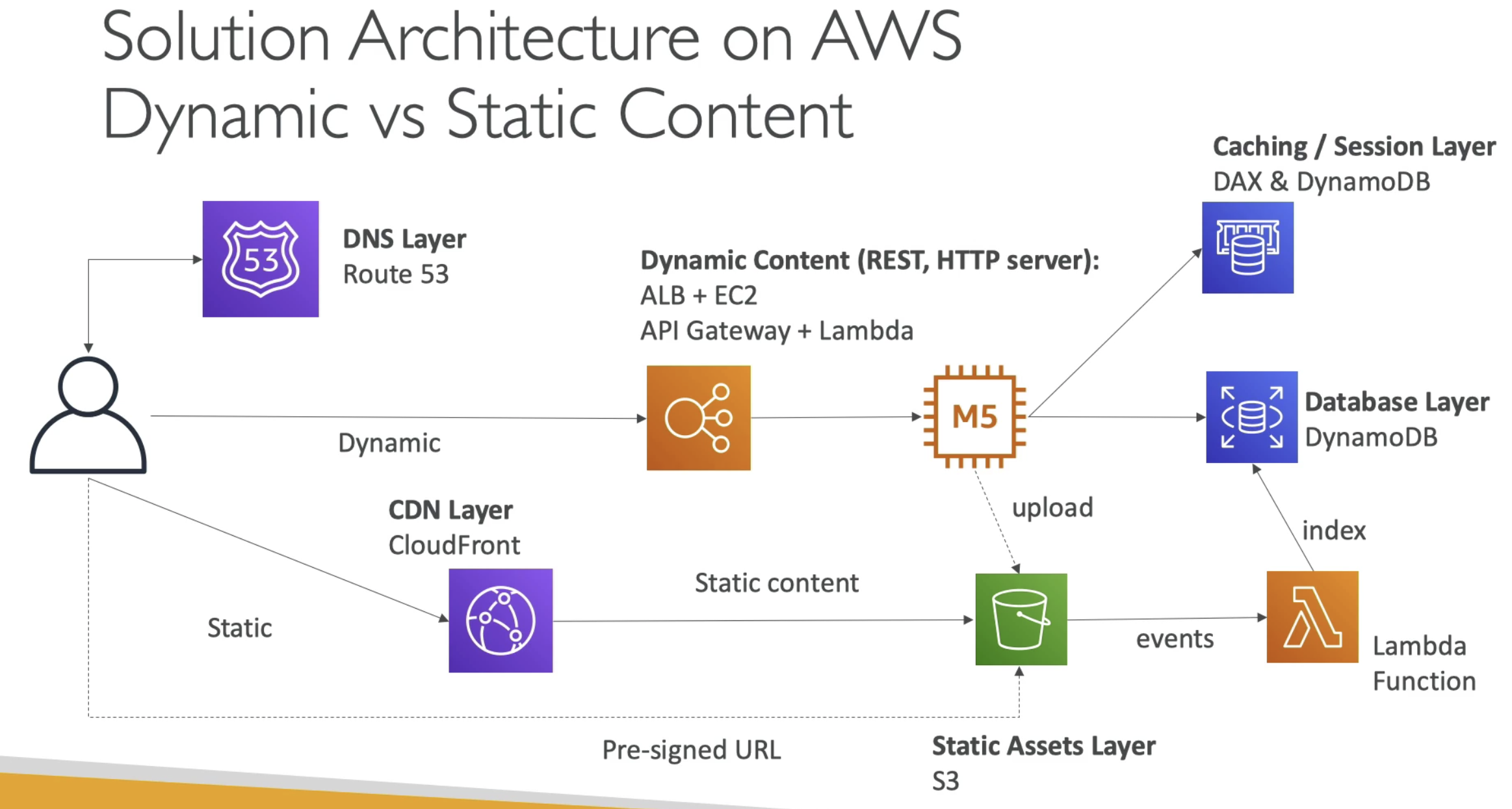

- Website with dynamic content

Replication

Why do we need replication?

- reduce latency

- disaster recovery

- security

S3 - CloudWatch Events

- By default, CloudTrail records S3 bucket-level API calls

- CloudTrail logs for object-level can be enabled

- This helps us generate events for object-level API calls (GetObject, PutObject, PutObjectAcl, ...)

- Organizing objects by prefix can give better S3 performance
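Once object-level logging is enabled in CloudTrail, a CloudWatch Events (EventBridge) rule can match those API calls with an event pattern. The sketch below only builds the pattern; the helper name and the bucket name in the usage note are hypothetical, and the default event names are the ones listed in the notes above.

```python
import json


def object_level_event_pattern(bucket_name,
                               event_names=("GetObject", "PutObject",
                                            "PutObjectAcl")):
    """Build an event pattern for S3 object-level API calls (hypothetical helper)."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["AWS API Call via CloudTrail"],
        "detail": {
            "eventSource": ["s3.amazonaws.com"],
            "eventName": list(event_names),
            "requestParameters": {"bucketName": [bucket_name]},
        },
    }


pattern = object_level_event_pattern("my-bucket")
print(json.dumps(pattern, indent=2))

# With boto3 available, the rule could be created as:
#   boto3.client("events").put_rule(
#       Name="s3-object-level", EventPattern=json.dumps(pattern))
```

Narrowing `event_names` to the calls you actually care about keeps the rule from firing on every read.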

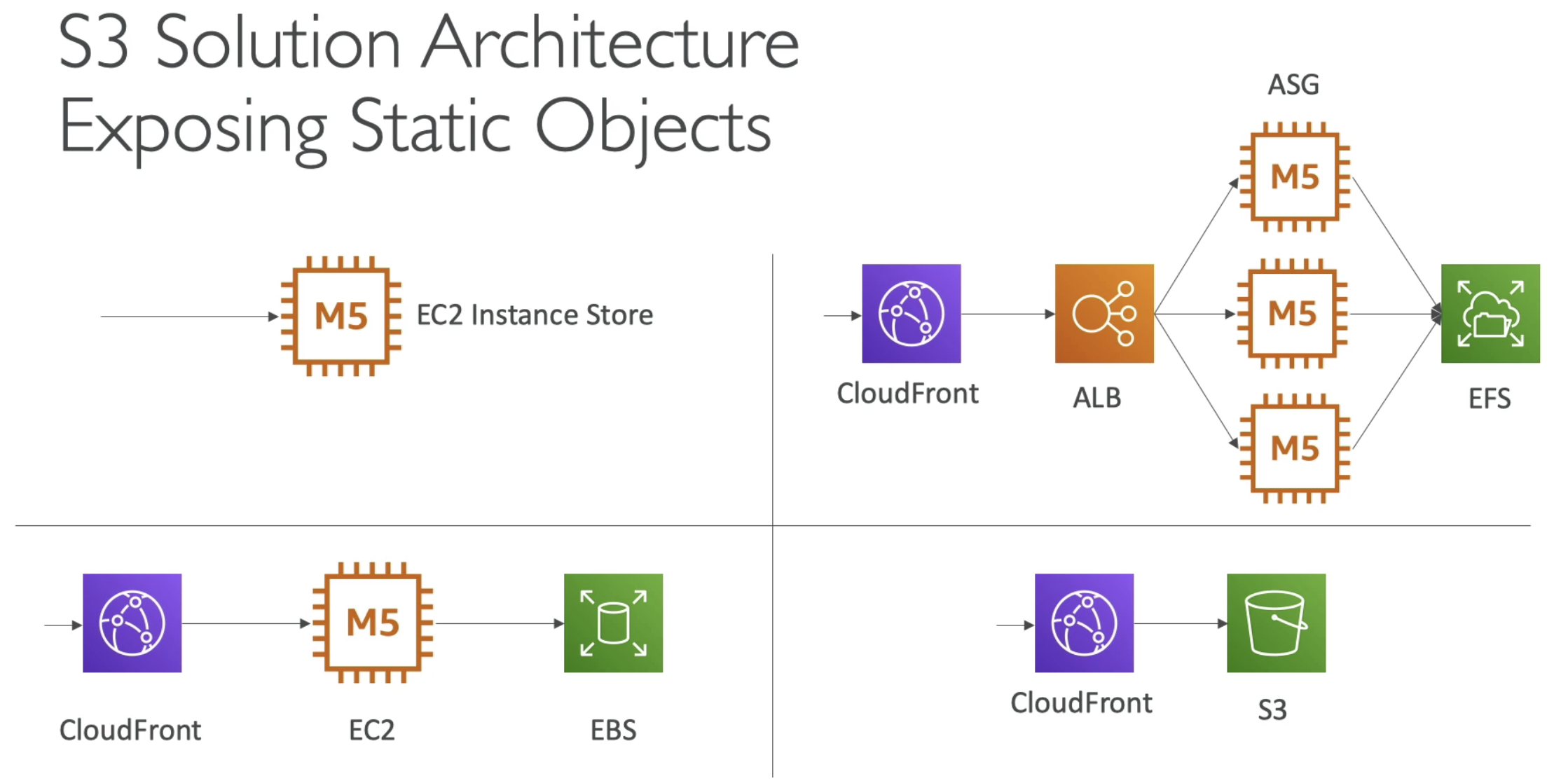

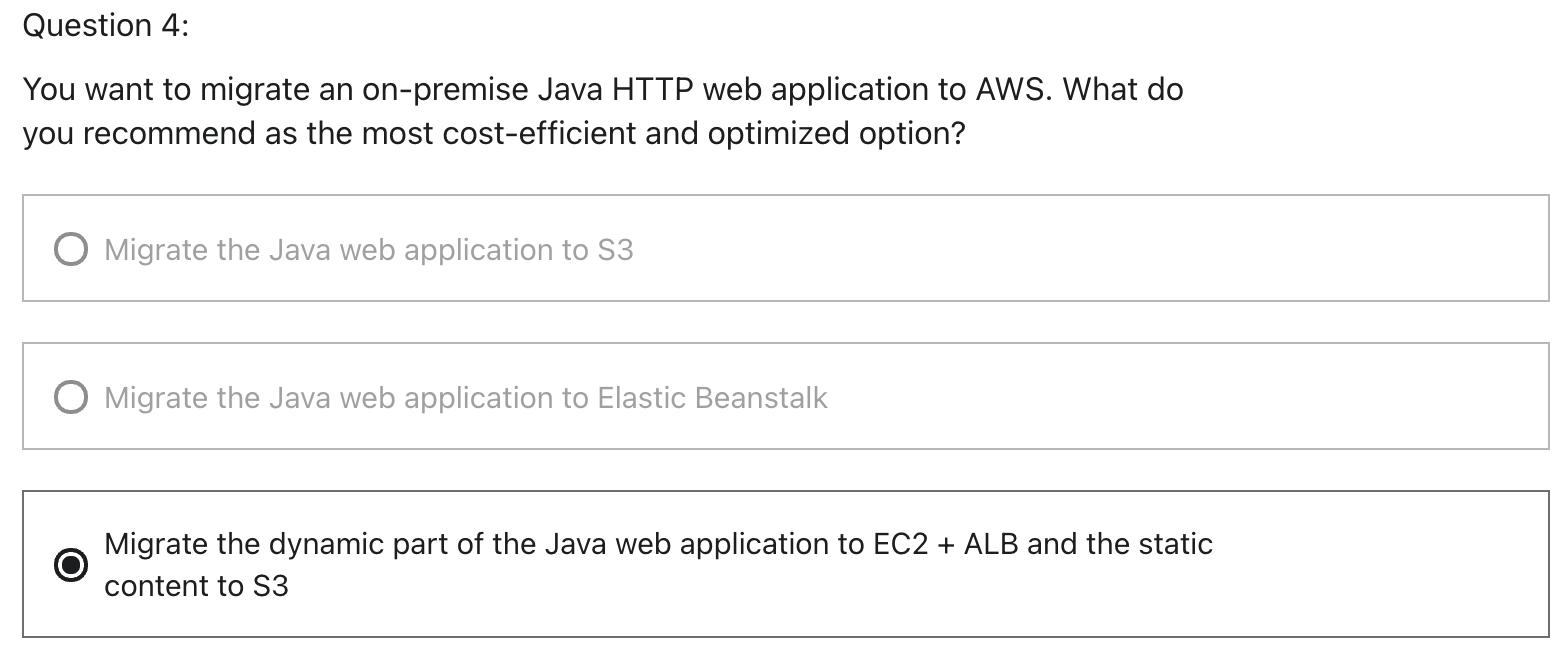

S3 Solution Architecture

- Just EC2 with instance store: you might lose the data

- CloudFront + EC2 + EBS: if the EC2 instance fails, CloudFront might return errors when the content is not cached

- CloudFront + ALB + ASG + EFS: EFS is needed to share data across AZs, which is expensive

- CloudFront + S3: suited to static content only, not dynamic content

As long as the size is under 5.5 TB, 1 TB gives about 3,000 IOPS

EBS volumes cannot share content across multiple AZs at runtime

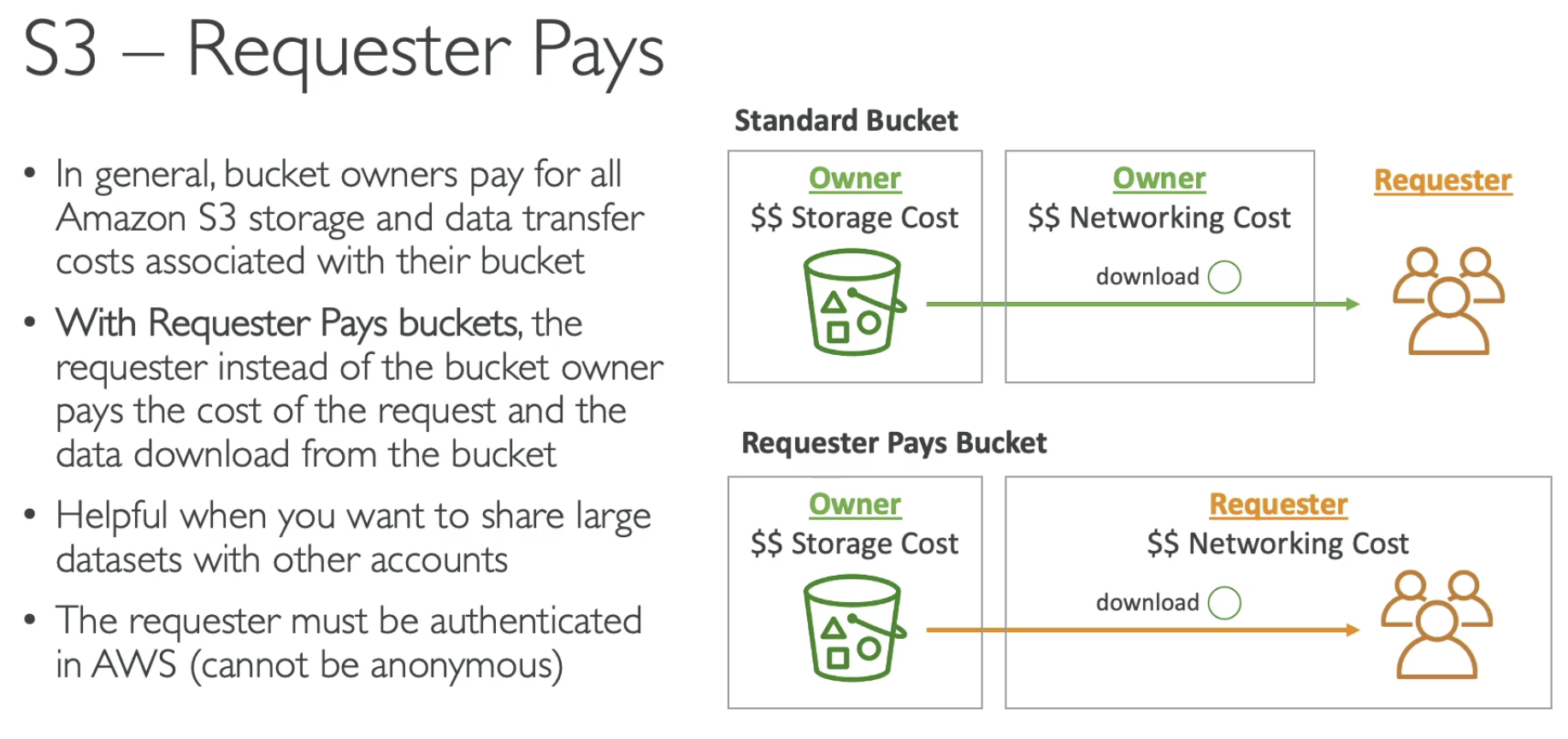

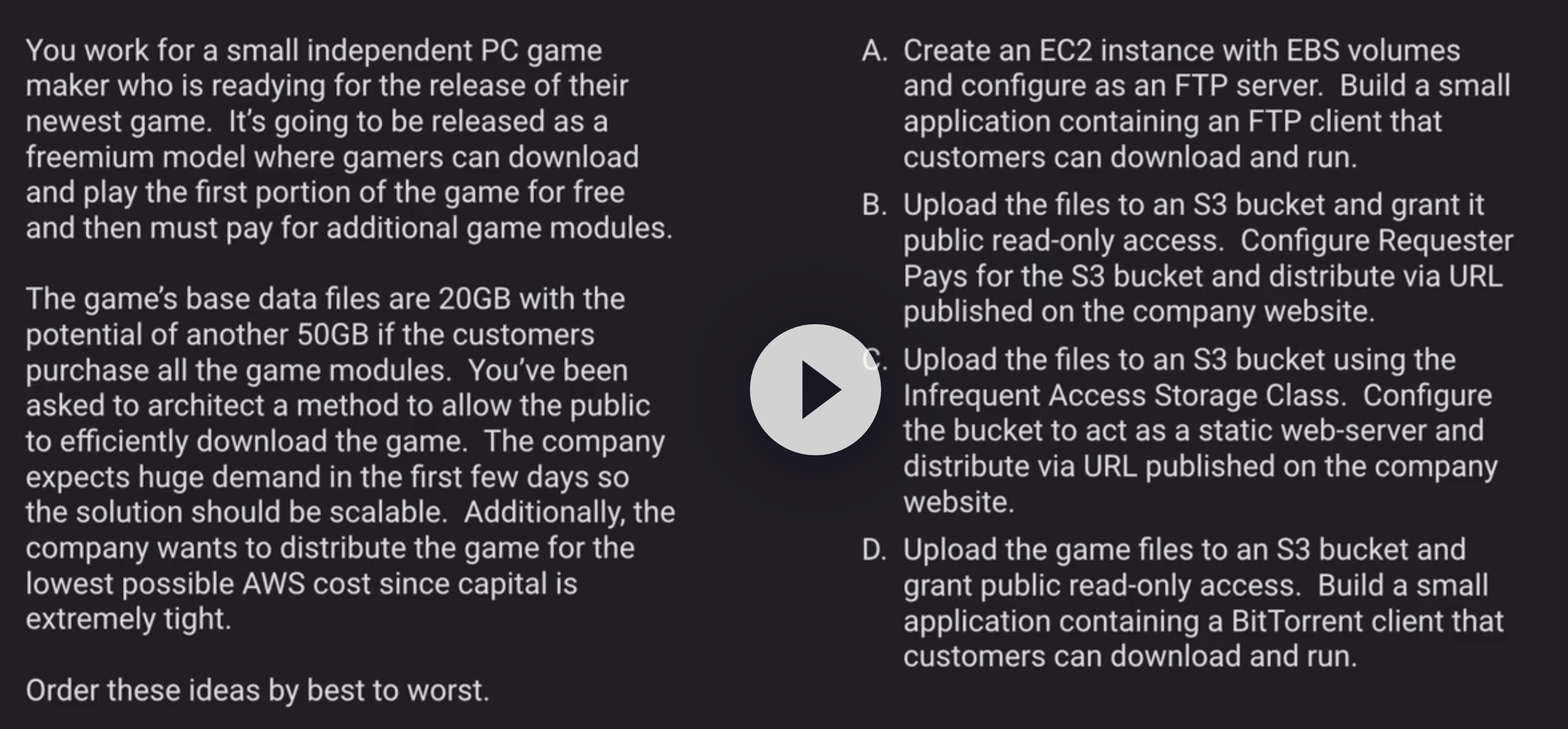

Requester Pays: players would also need an AWS account, which conflicts with the game content being publicly accessible. B - 4

Infrequent Access: the company would pay more money for S3, which conflicts with the lowest possible AWS cost. C - 3

A: not scalable. A - 2

D - 1
