[Machine Learning] Simplified Cost Function and Gradient Descent

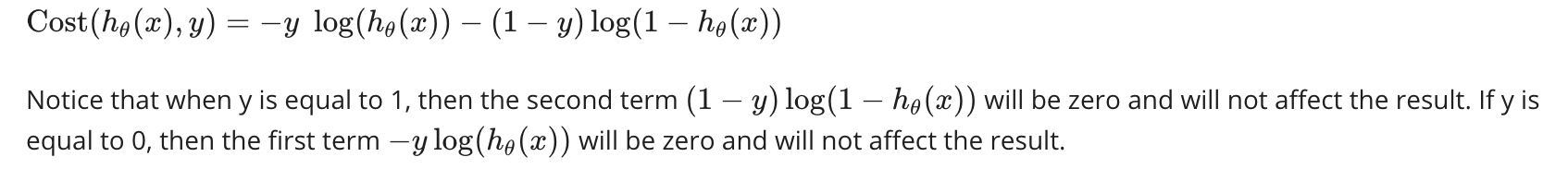

We can compress our cost function's two conditional cases into one case:

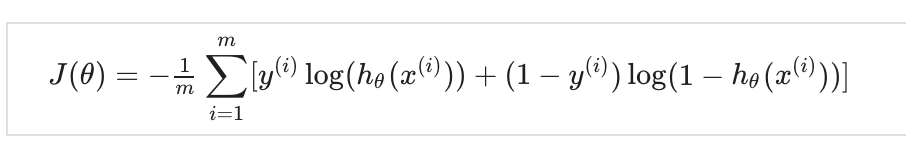
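
As a quick sanity check, the plain-Python sketch below evaluates the original two-case cost and the combined one-line expression side by side, for labels y in {0, 1} and a few hypothesis values h strictly between 0 and 1. The function names are illustrative, not from the course.

```python
import math


def cost_piecewise(h: float, y: int) -> float:
    """Two-case cost: -log(h) when y == 1, -log(1 - h) when y == 0."""
    if y == 1:
        return -math.log(h)
    return -math.log(1.0 - h)


def cost_combined(h: float, y: int) -> float:
    """One-line cost: -y*log(h) - (1 - y)*log(1 - h).

    For y == 1 the second term is multiplied by 0; for y == 0 the first term is,
    so only the matching case survives.
    """
    return -y * math.log(h) - (1 - y) * math.log(1.0 - h)


# Compare both forms for a few hypothesis outputs strictly between 0 and 1.
for h in (0.1, 0.5, 0.9):
    for y in (0, 1):
        print(f"h={h}, y={y}: piecewise={cost_piecewise(h, y):.4f}, "
              f"combined={cost_combined(h, y):.4f}")
```

Because y only takes the values 0 and 1, one of the two terms is always zeroed out, which is why a single expression can replace the conditional.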

Averaging this cost over all $m$ training examples gives the full cost function:

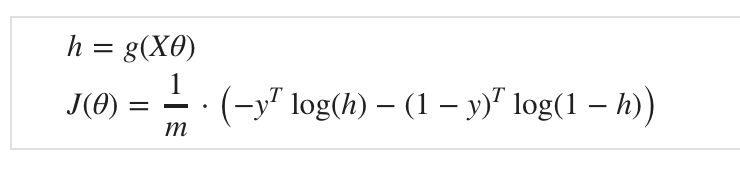
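
A minimal sketch of that summation in plain Python, assuming each row of `X` already includes the bias entry $x_0 = 1$. The helper names and the toy data are hypothetical. The sigmoid is written in a form that avoids overflow in `math.exp` for large |z|; it does not guard against `log(0)` when h saturates, which a production version would handle by clipping.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^{-z}), arranged to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def hypothesis(theta: list[float], x: list[float]) -> float:
    """h_theta(x) = g(theta^T x); x is assumed to already contain x_0 = 1."""
    return sigmoid(sum(t * xj for t, xj in zip(theta, x)))


def cost_J(theta: list[float], X: list[list[float]], y: list[int]) -> float:
    """J(theta) = -(1/m) * sum_i [y_i log h(x_i) + (1 - y_i) log(1 - h(x_i))]."""
    m = len(y)
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = hypothesis(theta, x_i)
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return -total / m


X = [[1.0, 0.5], [1.0, 2.0], [1.0, -1.0]]  # toy data, first column is the bias term
y = [1, 0, 1]
print(cost_J([0.0, 0.0], X, y))  # with theta = 0 every h is 0.5, so J equals log 2 (about 0.693)
```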

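A vectorized implementation is:

$$h = g(X\theta)$$

$$J(\theta) = \frac{1}{m} \cdot \left(-y^{T}\log(h) - (1-y)^{T}\log(1-h)\right)$$

where $X$ is the design matrix whose $i$-th row is $(x^{(i)})^{T}$, $g$ is the sigmoid function, and $\log$ is applied elementwise.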
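
The vectorized form replaces the explicit sum with one matrix-vector product and two inner products. NumPy would be the usual tool for this; to keep the sketch dependency-free it uses plain Python lists, with two small hypothetical helpers, `mat_vec` and `dot`, standing in for the matrix operations.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function g(z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mat_vec(X, v):
    """Matrix-vector product X v, with X stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in X]


def dot(u, v):
    """Inner product u^T v."""
    return sum(a * b for a, b in zip(u, v))


def cost_J_vectorized(theta, X, y):
    """J(theta) = (1/m) * (-y^T log(h) - (1 - y)^T log(1 - h)), with h = g(X theta)."""
    m = len(y)
    h = [sigmoid(z) for z in mat_vec(X, theta)]      # h = g(X theta), g applied elementwise
    log_h = [math.log(v) for v in h]                 # log(h), elementwise
    log_one_minus_h = [math.log(1.0 - v) for v in h]  # log(1 - h), elementwise
    one_minus_y = [1 - v for v in y]                 # the vector (1 - y)
    return (-dot(y, log_h) - dot(one_minus_y, log_one_minus_h)) / m


X = [[1.0, 0.5], [1.0, 2.0], [1.0, -1.0]]  # m = 3 examples, bias column first
y = [1, 0, 1]
print(cost_J_vectorized([0.0, -2.0], X, y))
```

This returns the same value as the per-example summation; only the bookkeeping changes.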

Gradient Descent

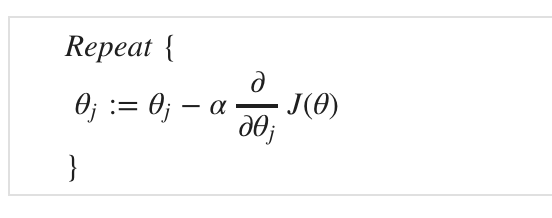

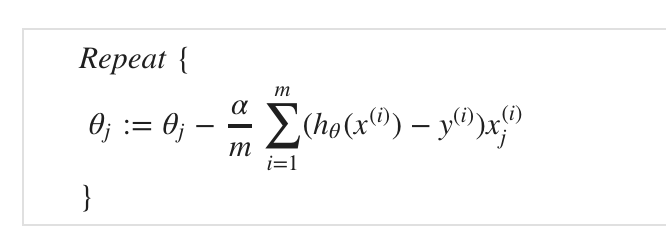

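Remember that the general form of gradient descent is:

$$\text{Repeat} \; \left\{ \; \theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \; \right\}$$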
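
The general rule does not depend on which model produced $J$; it only needs the partial derivatives. The sketch below is a generic loop that takes a gradient function as input. The function names and the toy objective $J(\theta) = (\theta_0 - 3)^2 + (\theta_1 + 1)^2$ are hypothetical, chosen because the minimum at $\theta = [3, -1]$ is easy to verify.

```python
def gradient_descent(grad, theta, alpha, num_iters):
    """Generic update: repeat theta_j := theta_j - alpha * dJ/dtheta_j for every j.

    `grad(theta)` must return the list of partial derivatives at `theta`.
    All partials are computed from the old theta before any component changes,
    which is what makes the update simultaneous.
    """
    for _ in range(num_iters):
        g = grad(theta)
        theta = [t - alpha * gj for t, gj in zip(theta, g)]
    return theta


# Hypothetical toy objective J(theta) = (theta_0 - 3)^2 + (theta_1 + 1)^2,
# whose minimum is at theta = [3, -1].
def toy_grad(theta):
    return [2.0 * (theta[0] - 3.0), 2.0 * (theta[1] + 1.0)]


print(gradient_descent(toy_grad, [0.0, 0.0], alpha=0.1, num_iters=200))
```

With `alpha=0.1`, each step shrinks the distance to the minimum by a factor of 0.8, so 200 iterations land very close to `[3.0, -1.0]`.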

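We can work out the derivative part using calculus to get:

$$\text{Repeat} \; \left\{ \; \theta_j := \theta_j - \frac{\alpha}{m} \sum_{i=1}^{m} \left(h_\theta(x^{(i)}) - y^{(i)}\right) x_j^{(i)} \; \right\}$$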
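
One way to convince yourself the derivative is right is a gradient check: compare the analytic partials $\frac{1}{m}\sum_i (h_\theta(x^{(i)}) - y^{(i)})\,x_j^{(i)}$ against central finite differences of $J$. The sketch below does that in plain Python; the helper names, toy data, and step size `eps` are assumptions for illustration.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function g(z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x); x includes the bias entry x_0 = 1."""
    return sigmoid(sum(t * xj for t, xj in zip(theta, x)))


def cost_J(theta, X, y):
    """Logistic regression cost J(theta), averaged over the m examples."""
    m = len(y)
    return -sum(
        y_i * math.log(hypothesis(theta, x_i))
        + (1 - y_i) * math.log(1.0 - hypothesis(theta, x_i))
        for x_i, y_i in zip(X, y)
    ) / m


def gradient(theta, X, y):
    """Analytic partials: dJ/dtheta_j = (1/m) * sum_i (h(x_i) - y_i) * x_ij."""
    m = len(y)
    grad = [0.0] * len(theta)
    for x_i, y_i in zip(X, y):
        error = hypothesis(theta, x_i) - y_i
        for j, x_ij in enumerate(x_i):
            grad[j] += error * x_ij
    return [g / m for g in grad]


def numerical_gradient(theta, X, y, eps=1e-6):
    """Central finite differences of J, used only to check the formula above."""
    approx = []
    for j in range(len(theta)):
        plus = list(theta)
        minus = list(theta)
        plus[j] += eps
        minus[j] -= eps
        approx.append((cost_J(plus, X, y) - cost_J(minus, X, y)) / (2 * eps))
    return approx


X = [[1.0, 0.5, 1.2], [1.0, 2.0, -0.3], [1.0, -1.0, 0.8], [1.0, 1.5, 2.0]]
y = [1, 0, 1, 0]
theta = [0.2, -0.4, 0.1]
print(gradient(theta, X, y))
print(numerical_gradient(theta, X, y))
```

The two printed lists should agree to many decimal places; a large mismatch would point to a mistake in the derivative.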

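Notice that this algorithm is identical in form to the one we used in linear regression. The only difference is the hypothesis, which is now $h_\theta(x) = g(\theta^{T}x) = \frac{1}{1 + e^{-\theta^{T}x}}$ rather than $h_\theta(x) = \theta^{T}x$. We still have to update all values in $\theta$ simultaneously.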
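
To illustrate the point, the sketch below uses a single update function for both models and only swaps the hypothesis `h`. The errors are computed once from the old $\theta$ and reused for every $j$, which gives the simultaneous update. The names `gd_step` and `train`, the learning rates, and both toy datasets are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function g(z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def linear_h(theta, x):
    """Linear regression hypothesis: theta^T x."""
    return sum(t * xj for t, xj in zip(theta, x))


def logistic_h(theta, x):
    """Logistic regression hypothesis: g(theta^T x)."""
    return sigmoid(linear_h(theta, x))


def gd_step(theta, X, y, alpha, h):
    """One update theta_j := theta_j - (alpha/m) * sum_i (h(x_i) - y_i) * x_ij.

    Errors come from the old theta only, so every theta_j is updated simultaneously.
    """
    m = len(y)
    errors = [h(theta, x_i) - y_i for x_i, y_i in zip(X, y)]
    return [
        theta[j] - alpha / m * sum(e * x_i[j] for e, x_i in zip(errors, X))
        for j in range(len(theta))
    ]


def train(X, y, alpha, num_iters, h):
    """Run gd_step num_iters times starting from theta = 0."""
    theta = [0.0] * len(X[0])
    for _ in range(num_iters):
        theta = gd_step(theta, X, y, alpha, h)
    return theta


# Same update rule, two different hypotheses.
X_lin = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y_lin = [1.0, 3.0, 5.0, 7.0]                     # exactly y = 1 + 2x
print(train(X_lin, y_lin, 0.1, 2000, linear_h))  # approaches [1, 2]

X_log = [[1.0, x] for x in (-3.0, -2.0, -1.0, 1.0, 2.0, 3.0)]
y_log = [0, 0, 0, 1, 1, 1]
theta_log = train(X_log, y_log, 0.5, 500, logistic_h)
print([round(logistic_h(theta_log, x)) for x in X_log])  # rounded predictions
```

Passing the hypothesis as a parameter makes the shared structure explicit: the gradient expression is the same, and only $h_\theta$ changes between the two models.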

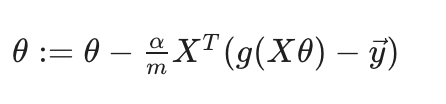

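A vectorized implementation is:

$$\theta := \theta - \frac{\alpha}{m} X^{T} \left(g(X\theta) - y\right)$$

where $g$ (the sigmoid function) is applied elementwise.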
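
A dependency-free sketch of this vectorized step, again with plain Python lists standing in for a matrix library such as NumPy. The helpers `mat_vec` and `mat_t_vec` are hypothetical names for $X v$ and $X^{T} v$.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function g(z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mat_vec(X, v):
    """X v for a list-of-rows matrix X."""
    return [sum(a * b for a, b in zip(row, v)) for row in X]


def mat_t_vec(X, v):
    """X^T v for a list-of-rows matrix X (column j of X dotted with v)."""
    return [sum(row[j] * vi for row, vi in zip(X, v)) for j in range(len(X[0]))]


def gd_step_vectorized(theta, X, y, alpha):
    """theta := theta - (alpha/m) * X^T (g(X theta) - y)."""
    m = len(y)
    h = [sigmoid(z) for z in mat_vec(X, theta)]             # g(X theta)
    grad = mat_t_vec(X, [hi - yi for hi, yi in zip(h, y)])  # X^T (h - y)
    return [t - alpha / m * g for t, g in zip(theta, grad)]


X = [[1.0, -1.0], [1.0, 1.0]]
y = [0, 1]
print(gd_step_vectorized([0.0, 0.0], X, y, alpha=1.0))  # → [0.0, 0.5]
```

Starting from $\theta = 0$, both predictions are 0.5, so $h - y = [0.5, -0.5]$ and $X^{T}(h - y) = [0, -1]$; with $\alpha/m = 0.5$ the update yields $[0, 0.5]$.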

浙公网安备 33010602011771号
