数据采集与融合第二次作业

作业①:

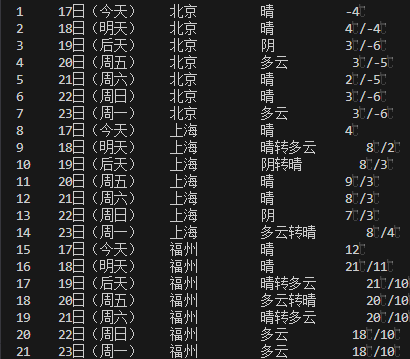

结果展示:

代码部分:

import sqlite3

import requests

from bs4 import BeautifulSoup

# 连接到SQLite数据库

conn = sqlite3.connect('weather.db')

c = conn.cursor()

# 创建表

c.execute('''

CREATE TABLE IF NOT EXISTS forecast (

id INTEGER PRIMARY KEY,

city TEXT NOT NULL,

date TEXT NOT NULL,

weather TEXT NOT NULL,

temperature TEXT NOT NULL,

UNIQUE(city, date) ON CONFLICT IGNORE

)

''')

# 提交更改

conn.commit()

def fetch_weather(city_id, city_name):

url = f'http://www.weather.com.cn/weather/{city_id}.shtml'

try:

response = requests.get(url)

response.raise_for_status() # 检查请求是否成功

soup = BeautifulSoup(response.content, 'html.parser')

# 解析天气数据

days = soup.find_all('li', class_='sky')

if not days:

return

for day in days:

date = day.find('h1').text.strip()

weather = day.find('p', class_='wea').text.strip()

temp = day.find('p', class_='tem').text.strip().replace('\n', '/').replace(' ', '')

# 插入数据到数据库

c.execute('''

INSERT OR IGNORE INTO forecast (city, date, weather, temperature)

VALUES (?, ?, ?, ?)

''', (city_name, date, weather, temp))

conn.commit()

except requests.RequestException as e:

print(f"请求错误: {e}")

except Exception as e:

print(f"解析错误: {e}")

# 示例城市列表

cities = [

{'id': '101010100', 'name': '北京'},

{'id': '101020100', 'name': '上海'},

{'id': '101280601', 'name': '福州'}

]

# 清除已有数据

c.execute('DELETE FROM forecast')

conn.commit()

# 获取并保存每个城市的天气预报

for city in cities:

fetch_weather(city['id'], city['name'])

# 计算总数据量

total_rows = len(cities) * 7

# 从数据库中读取并打印数据

c.execute(f'SELECT * FROM forecast LIMIT {total_rows}')

rows = c.fetchall()

if not rows:

print("数据库中没有数据")

else:

# 打印表头

print(f"{'序号':<5} {'日期':<10} {'城市':<10} {'天气':<10} {'温度':<10}")

print('-' * 50)

# 打印数据

for idx, row in enumerate(rows, start=1):

print(f"{idx:<5} {row[2]:<10} {row[1]:<10} {row[3]:<10} {row[4]:<10}")

# 关闭数据库连接

conn.close()

输出结果:

个人心得:

网页数据抓取过程中,遇到了网页结构复杂、数据动态加载等问题。通过不断学习和实践,掌握了使用正则表达式、XPath等方法提取数据的技巧。

作业②:

结果展示:

代码部分:

import requests

import pandas as pd

import json

import sqlite3

# 常量定义

BASE_URL = "http://44.push2.eastmoney.com/api/qt/clist/get"

API_PARAMS = {

'cb': 'jQuery112406854618710877052_1696660618066',

'pz': 20,

'po': 1,

'np': 1,

'ut': 'bd1d9ddb04089700cf9c27f6f7426281',

'fltt': 2,

'invt': 2,

'wbp2u': '|0|0|0|web',

'fid': 'f3',

'fs': 'm:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048',

'fields': 'f2,f3,f4,f5,f6,f7,f12,f14',

'_': '1696660618067'

}

DB_PATH = 'stock_info.db'

COLUMNS = ['序号', '代码', '名称', '最新价', '涨跌幅', '涨跌额', '成交量', '成交额', '振幅']

def fetch_stock_info(page_number):

"""

获取指定页码的股票信息

"""

params = API_PARAMS.copy()

params['pn'] = page_number

response = requests.get(BASE_URL, params=params)

content = response.text

json_str = content[content.find('(') + 1: content.rfind(')')]

data_json = json.loads(json_str)

stock_items = data_json['data']['diff']

for idx, item in enumerate(stock_items):

yield [

idx + 1,

item['f12'],

item['f14'],

item['f2'],

item['f3'],

item['f4'],

item['f5'],

item['f6'],

item['f7']

]

def save_to_database(stock_data):

"""

将股票数据保存到SQLite数据库

"""

with sqlite3.connect(DB_PATH) as conn:

cursor = conn.cursor()

cursor.execute('''

CREATE TABLE IF NOT EXISTS stock_data (

序号 INTEGER,

代码 TEXT,

名称 TEXT,

最新价 REAL,

涨跌幅 REAL,

涨跌额 REAL,

成交量 INTEGER,

成交额 REAL,

振幅 REAL

)

''')

cursor.executemany('INSERT INTO stock_data VALUES (?,?,?,?,?,?,?,?,?)', stock_data)

conn.commit()

def get_user_input():

"""

获取用户输入的页数

"""

while True:

try:

page_input = int(input("请输入要爬取的页数:"))

if page_input <= 0:

raise ValueError("页数必须为正整数")

return page_input

except ValueError as e:

print(f"输入错误: {e}")

def main():

"""

主函数,执行数据获取和存储操作

"""

page_input = get_user_input()

total_stock_data = []

for page in range(1, page_input + 1):

total_stock_data.extend(fetch_stock_info(page))

if total_stock_data:

save_to_database(total_stock_data)

df = pd.DataFrame(total_stock_data, columns=COLUMNS)

print(df)

if __name__ == "__main__":

main()

输出结果:

个人心得:

在爬取过程中,我遇到了反爬虫机制、动态加载数据等挑战。通过不断尝试和调整,我使用了伪装请求头、增加请求间隔等方法来绕过反爬虫机制,并通过分析JavaScript代码找到了动态加载数据的API接口。

作业③:

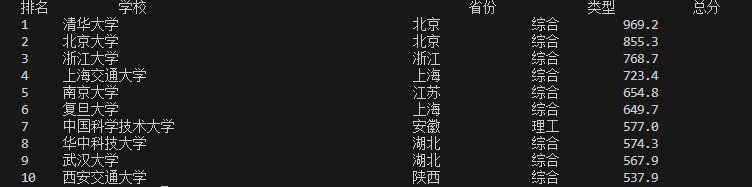

结果展示:

代码部分:

import requests

from bs4 import BeautifulSoup

import sqlite3

import re

# 常量定义

DATABASE_NAME = 'university_rankings.db'

TABLE_NAME = 'UniversityRanking'

URL = "https://www.shanghairanking.cn/rankings/bcur/2021"

LABELS_TO_REMOVE = ['双一流', '985工程', '211工程', '985', '211']

COLUMNS = ["排名", "学校", "省份", "类型", "总分"]

TEMPLATE = "{0:^6}\t{1:{5}<20}\t{2:^6}\t{3:^8}\t{4:^6}"

def fetch_html_content(url):

"""

获取指定URL的HTML内容

"""

try:

response = requests.get(url)

response.raise_for_status()

response.encoding = response.apparent_encoding

return response.text

except requests.RequestException as e:

print(f"请求错误: {e}")

return None

def sanitize_university_name(name, labels_to_remove):

"""

清理大学名称中的标签和非中文字符

"""

for label in labels_to_remove:

name = name.replace(label, '')

name = re.sub(r'[A-Za-z]', '', name)

name = re.sub(r'[^\u4e00-\u9fa5]', '', name)

return name

def extract_university_data(html_content, labels_to_remove):

"""

解析HTML内容,提取大学信息列表

"""

soup = BeautifulSoup(html_content, "html.parser")

university_list = []

tbody = soup.find('tbody')

if not tbody:

print("未找到表格数据")

return university_list

rows = tbody.find_all('tr')

for row in rows:

columns = row.find_all('td')

if len(columns) < 5:

continue

rank = columns[0].text.strip()

name_tag = columns[1].find('a')

name = sanitize_university_name(name_tag.text.strip() if name_tag else columns[1].text.strip(), labels_to_remove)

province = columns[2].text.strip()

category = columns[3].text.strip()

score = columns[4].text.strip()

university_list.append([rank, name, province, category, score])

return university_list

def store_university_data(data_list, database_name, table_name):

"""

将大学信息存储到SQLite数据库中

"""

with sqlite3.connect(database_name) as conn:

cursor = conn.cursor()

cursor.execute(f'''

CREATE TABLE IF NOT EXISTS {table_name} (

Rank TEXT,

Name TEXT,

Province TEXT,

Category TEXT,

Score TEXT

)

''')

cursor.executemany(f'INSERT INTO {table_name} VALUES (?,?,?,?,?)', data_list)

conn.commit()

def display_university_data(universities, count, template, columns):

"""

打印大学信息列表

"""

print(template.format(*columns, chr(12288)))

for i in range(min(count, len(universities))):

uni = universities[i]

print(template.format(*uni, chr(12288)))

def execute_university_ranking(url, database_name, table_name, labels_to_remove, columns, template, count):

"""

主函数,执行数据获取、解析、存储和显示操作

"""

html_content = fetch_html_content(url)

if not html_content:

return

university_data = extract_university_data(html_content, labels_to_remove)

if not university_data:

print("未提取到大学信息")

return

store_university_data(university_data, database_name, table_name)

display_university_data(university_data, count, template, columns)

if __name__ == '__main__':

execute_university_ranking(URL, DATABASE_NAME, TABLE_NAME, LABELS_TO_REMOVE, COLUMNS, TEMPLATE, 10)

输出结果:

个人心得:

在网页结构分析阶段,我遇到了网页动态加载数据的问题。通过深入研究,我发现数据是通过Ajax请求从服务器获取的。于是,我使用requests库模拟了这些Ajax请求,成功获取了所需的数据。

浙公网安备 33010602011771号

浙公网安备 33010602011771号