2023 Data Collection and Fusion Technology Practice: Assignment 2


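Experiment 2.1

- Requirement: crawl the 7-day weather forecast for a given set of cities from the China Weather Network (http://www.weather.com.cn) and save it to a database.

- Code

Gitee link: https://gitee.com/Alynyn/crawl_project/blob/master/作业2/1.py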

code

from bs4 import BeautifulSoup
from bs4 import UnicodeDammit
import urllib.request
import sqlite3


class WeatherDB:
    def openDB(self):
        self.con = sqlite3.connect("weathers.db")
        self.cursor = self.con.cursor()
        try:
            self.cursor.execute("create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),"
                                "wTemp varchar(32),constraint pk_weather primary key (wCity,wDate))")
        except sqlite3.OperationalError:
            # The table already exists: clear the rows left by the previous run
            self.cursor.execute("delete from weathers")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, city, date, weather, temp):
        try:
            self.cursor.execute("insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)",
                                (city, date, weather, temp))
        except Exception as err:
            print(err)

    def show(self):
        self.cursor.execute("select * from weathers")
        rows = self.cursor.fetchall()
        print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))
        for row in rows:
            print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))


class WeatherForecast:
    def __init__(self):
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
        # City name -> city code used in the forecast page URL
        self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

    def forecastCity(self, city):
        if city not in self.cityCode:
            print(city + " code cannot be found")
            return
        url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
        try:
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            # Try utf-8 and gbk when decoding the page bytes to str
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            # Each <li> under the "t clearfix" list holds one day of the forecast
            lis = soup.select("ul[class='t clearfix'] li")
            for li in lis:
                try:
                    date = li.select('h1')[0].text
                    weather = li.select('p[class="wea"]')[0].text
                    temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text
                    print(city, date, weather, temp)
                    self.db.insert(city, date, weather, temp)
                except Exception as err:
                    # Skip an entry that lacks one of the expected tags
                    print(err)
        except Exception as err:
            print(err)

    def process(self, cities):
        self.db = WeatherDB()
        self.db.openDB()
        for city in cities:
            self.forecastCity(city)
        self.db.closeDB()


ws = WeatherForecast()
ws.process(["北京"])
print("completed")

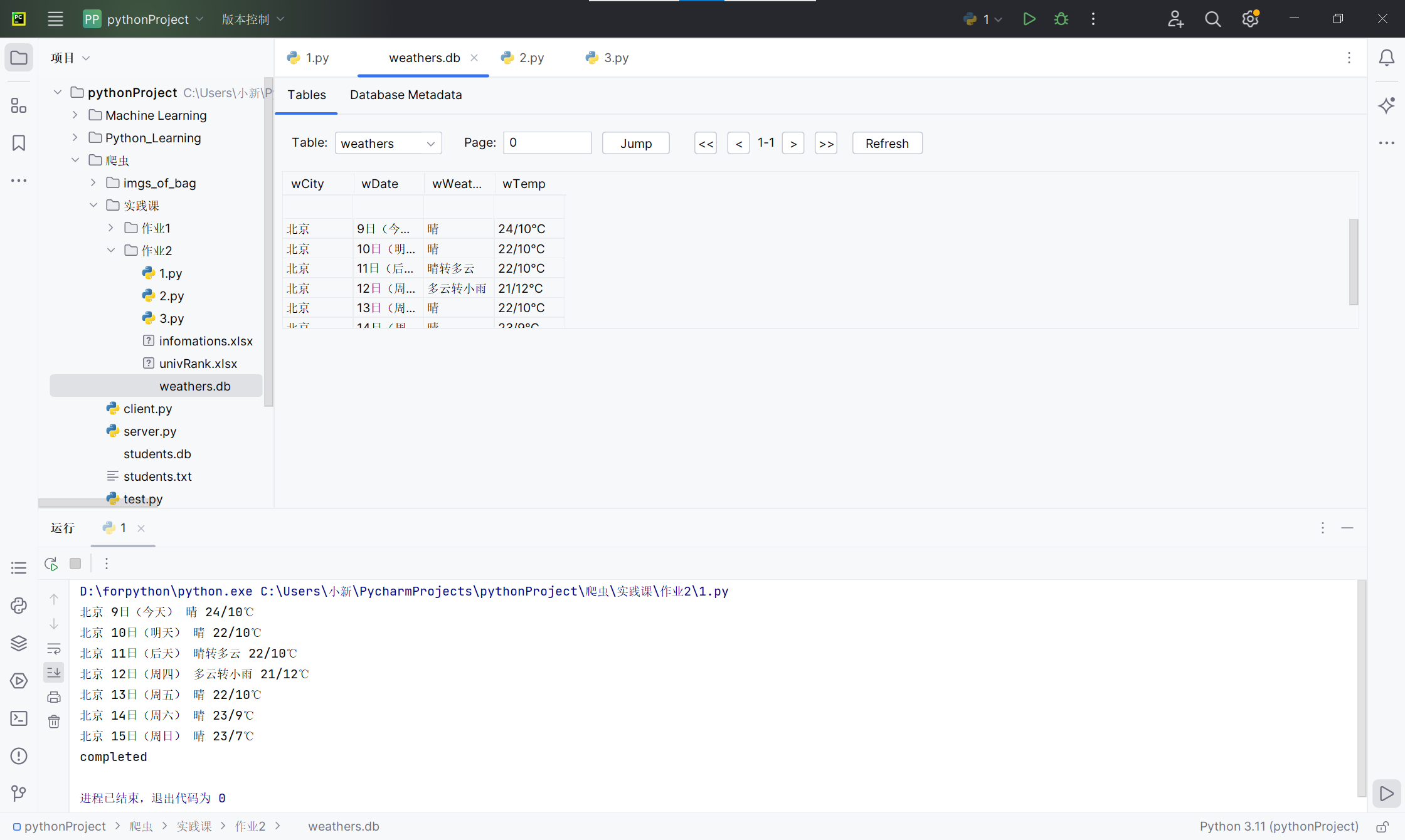

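Screenshot of the run result:

Reflections: This experiment reproduces the weather forecast crawler, and following the instructor's slides was enough to complete it. I gained a better understanding of working with a custom database, and saw that organizing the crawler into functions makes it easier to call and modify.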
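The script above empties the `weathers` table whenever it already exists. As a minimal sketch of an alternative, assuming it is acceptable to overwrite a row when the same (city, date) pair is crawled again, SQLite's `insert or replace` can rely on the composite primary key instead. The `save_forecasts` helper and the sample rows are hypothetical and not part of the original script.

```python
import sqlite3


def save_forecasts(con, rows):
    """Insert or overwrite (city, date) rows and return the stored row count."""
    con.execute(
        "create table if not exists weathers ("
        "wCity varchar(16), wDate varchar(16), wWeather varchar(64), wTemp varchar(32), "
        "constraint pk_weather primary key (wCity, wDate))"
    )
    # "insert or replace" overwrites a row whose primary key already exists
    con.executemany(
        "insert or replace into weathers (wCity, wDate, wWeather, wTemp) values (?,?,?,?)",
        rows,
    )
    con.commit()
    return con.execute("select count(*) from weathers").fetchone()[0]


# Hypothetical sample rows, stored in an in-memory database
con = sqlite3.connect(":memory:")
save_forecasts(con, [("Beijing", "day 7", "sunny", "25/12"),
                     ("Beijing", "day 8", "cloudy", "24/13")])
# Crawling "day 7" again replaces the earlier row instead of raising an error
count = save_forecasts(con, [("Beijing", "day 7", "overcast", "23/12")])
latest = con.execute(
    "select wWeather from weathers where wCity=? and wDate=?", ("Beijing", "day 7")
).fetchone()[0]
```

With this approach earlier rows for other cities or dates stay in place between runs, which may or may not be what the assignment intends.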

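Experiment 2.2

- Requirement: use requests and an extraction method of your choice to crawl stock information in a targeted way, and store it in a database.

- Code

Gitee link: https://gitee.com/Alynyn/crawl_project/blob/master/作业2/2.py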

code

import json

import pandas as pd
import requests


def get_Html(page):
    url = ("https://9.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112405185584716637714_1696659395930&pn=" + str(page) +
           "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,"
           "m:1+t:23,m:0+t:81+s:2048&fields=f12,f14,f2,f3,f4,f5,f6,f7,f15,f16,f17,f18&_=1696659396068")
    html = requests.get(url, timeout=30)
    html.raise_for_status()
    html.encoding = html.apparent_encoding
    return html.text


def get_message(record):
    # Pick the requested fields in the same order as the column headers below
    return [record["f" + str(i)] for i in [12, 14, 2, 3, 4, 5, 6, 7, 15, 16, 17, 18]]


html = get_Html(1)
# The response is JSONP, callback({...}); keep only the JSON between the outer parentheses.
# Parsing with json replaces the original string splitting and eval().
payload = json.loads(html[html.index("(") + 1:html.rindex(")")])
# The stock records are the list under data.diff
Info = [get_message(record) for record in payload["data"]["diff"]]

# f3 is the percentage change and f4 the absolute change, so the headers follow that order
columns = ["Code", "Name", "Latest price", "Change (%)", "Change", "Volume",
           "Turnover", "Amplitude", "High", "Low", "Open", "Previous close"]
df = pd.DataFrame(Info, columns=columns)
df.to_excel("./infomations.xlsx", index=False)
print("Saved to the current folder.")

gif:

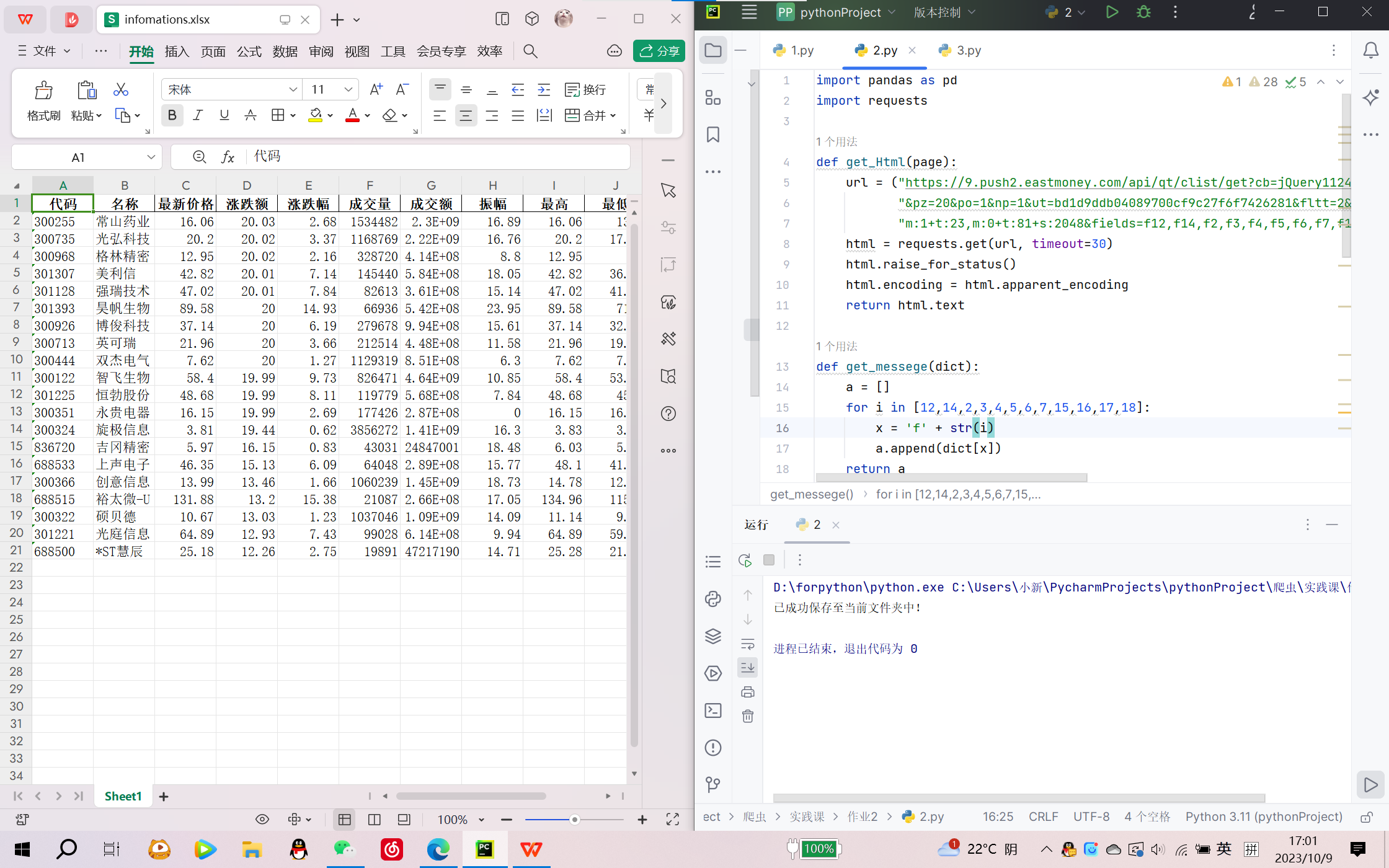

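Screenshot of the run result:

Reflections: In this experiment I used packet capture to find the URL that loads the stock list and analyzed the values returned by the API. The request parameters can be adjusted to match the fields required, which makes crawling the data more convenient and efficient.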

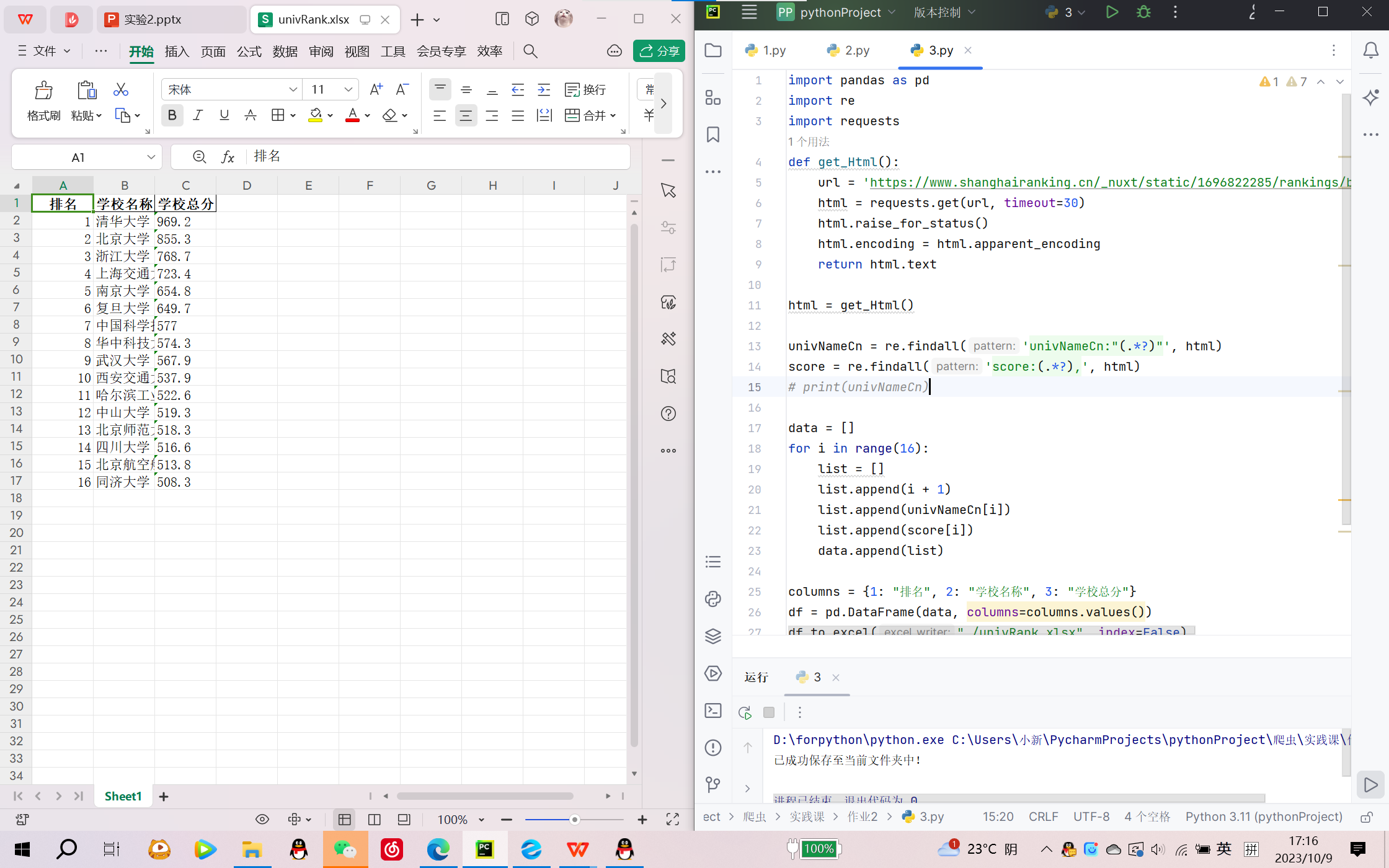
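The two ideas in this experiment, adjusting the request parameters and reading the values the API returns, can be sketched with the standard library alone. This is an illustration under stated assumptions: `build_url` and `unwrap_jsonp` are hypothetical helpers, only `pn`, `pz` and `fields` are varied, the remaining query parameters of the captured URL are left out (so the shortened URL is not guaranteed to return data), and the sample response is made up to mimic the JSONP shape.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://9.push2.eastmoney.com/api/qt/clist/get"


def build_url(page, page_size=20, fields=("f12", "f14", "f2")):
    """Build a query string that varies the page, page size and field list."""
    params = {"pn": page, "pz": page_size, "fields": ",".join(fields)}
    # safe="," keeps the commas of the field list unescaped
    return BASE_URL + "?" + urlencode(params, safe=",")


def unwrap_jsonp(text):
    """Strip the callback wrapper, callback({...});, and parse the JSON inside."""
    start = text.index("(") + 1
    end = text.rindex(")")
    return json.loads(text[start:end])


url = build_url(2, page_size=50)

# Hypothetical response body with one record
sample = 'cb({"data": {"diff": [{"f12": "000001", "f14": "Sample", "f2": 10.5}]}});'
records = unwrap_jsonp(sample)["data"]["diff"]
```

Parsing the wrapped JSON with `json.loads` avoids calling `eval` on text received from the network.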

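Experiment 2.3

- Requirement: crawl the information of all universities in the 2021 main ranking of Chinese universities (https://www.shanghairanking.cn/rankings/bcur/2021) and store it in a database; also record the browser F12 debugging and analysis process as a GIF and include it in the blog post. Tip: analyze the site's network requests to find the API that returns the data.

- Code

Gitee link: https://gitee.com/Alynyn/crawl_project/blob/master/作业2/3.py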

code

import pandas as pd
import re
import requests


def get_Html():
    url = 'https://www.shanghairanking.cn/_nuxt/static/1696822285/rankings/bcur/2021/payload.js'
    html = requests.get(url, timeout=30)
    html.raise_for_status()
    html.encoding = html.apparent_encoding
    return html.text


html = get_Html()
# payload.js is JavaScript rather than JSON, so the fields are extracted with regular expressions
univNameCn = re.findall('univNameCn:"(.*?)"', html)
score = re.findall('score:(.*?),', html)
# print(univNameCn)

TOP_N = 16  # number of leading entries exported
data = []
# min() guards against a payload that holds fewer than TOP_N matches
for i in range(min(TOP_N, len(univNameCn), len(score))):
    data.append([i + 1, univNameCn[i], score[i]])

columns = ["Rank", "University", "Total score"]
df = pd.DataFrame(data, columns=columns)
df.to_excel("./univRank.xlsx", index=False)
print("Saved to the current folder.")

gif:

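Screenshot of the run result:

Reflections: As in Experiment 2.2, I analyzed the site's network requests to find the API that returns the data and then extracted the required information, which further showed me the benefits of crawling via packet capture.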
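The requirement asks for the results to be stored in a database, while the script above writes an Excel file. The following is a rough sketch of the database step, assuming the same two regular expressions and an SQLite table. The `parse_rankings` and `save_rankings` helpers, the `univ_rank` table and the sample text are hypothetical. Stopping at the first score that is not a literal number is also an assumption about how the payload might be written, not a verified property of the site.

```python
import re
import sqlite3


def parse_rankings(text):
    """Return (rank, name, score) tuples in the order they appear in the text."""
    names = re.findall(r'univNameCn:"(.*?)"', text)
    scores = re.findall(r'score:(.*?),', text)
    rows = []
    for rank, (name, score) in enumerate(zip(names, scores), start=1):
        try:
            rows.append((rank, name, float(score)))
        except ValueError:
            # Stop at the first score that is not a literal number
            break
    return rows


def save_rankings(con, rows):
    """Create the table if needed and insert or overwrite rows by ranking."""
    con.execute(
        "create table if not exists univ_rank ("
        "ranking integer primary key, name text, score real)"
    )
    con.executemany(
        "insert or replace into univ_rank (ranking, name, score) values (?,?,?)", rows
    )
    con.commit()


# Hypothetical text shaped like the fields the regular expressions target
sample = 'univNameCn:"University A",score:969.2,univNameCn:"University B",score:855.3,'
rows = parse_rankings(sample)
con = sqlite3.connect(":memory:")
save_rankings(con, rows)
stored = con.execute("select name, score from univ_rank order by ranking").fetchall()
```

Pointing `sqlite3.connect` at a file path instead of `":memory:"` would keep the table between runs.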