Understanding How Web Crawlers Work

Assignment source: https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/2881

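1. Briefly explain how a crawler works

A crawler is a program that sends requests to a website, retrieves the resources it returns, and then analyzes them to extract useful data. Technically, it simulates the way a browser requests a site: it downloads what the site returns (HTML, JSON, or binary data such as images and videos), extracts the data it needs, and stores that data for later use.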

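2. Understand the crawler development process

1). Briefly explain how a browser works;

At its core, a browser works by communicating over HTTP. An HTTP exchange has roughly three stages: connection, request, and response.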
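The three stages can be sketched with Python's standard library alone. This is a minimal illustration, not how a real browser is implemented: `HelloHandler` and `demo_http_round_trip` are hypothetical names, and the server is a throwaway one on 127.0.0.1 so that the example runs without internet access.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Tiny local server that answers every GET with a fixed HTML snippet."""

    def do_GET(self):
        body = b"<h1 id='title'>Hello</h1>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def demo_http_round_trip():
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0 = any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        # 1. Connection: open a TCP connection to the server.
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        conn.connect()
        # 2. Request: send the request line and headers.
        conn.request("GET", "/")
        # 3. Response: read the status line, headers, and body.
        response = conn.getresponse()
        status = response.status
        html = response.read().decode("utf-8")
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return status, html


status, html = demo_http_round_trip()
print(status, html)
```

A crawler repeats the same round trip, except that a library such as requests hides the connection handling.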

2). Use the requests library to fetch website data;

requests.get(url) fetches the HTML of the campus news home page
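A slightly more defensive way to make this call might look like the sketch below. The helper name `fetch_html` and the 10-second timeout are assumptions made for illustration, and the UTF-8 encoding is assumed from the code later in this post rather than detected.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the decoded HTML of `url`, raising on HTTP errors."""
    res = requests.get(url, timeout=timeout)  # give up instead of hanging forever
    res.raise_for_status()                    # raise requests.HTTPError on 4xx/5xx
    res.encoding = "utf-8"                    # assumption: the page is UTF-8 encoded
    return res.text
```

Calling `fetch_html(url)` with a news page URL needs network access and returns the page source as a string.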

3). Understand web pages

Write a simple HTML file that contains several tags, classes, and ids

    html_sample = ' \
    <html> \
    <body> \
    <h1 id="title">Hello</h1> \
    <a href="#" class="link"> This is link1</a>\
    <a href="# link2" class="link" qao=123> This is link2</a>\
    </body> \
    </html> '

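4). Use Beautiful Soup to parse the page;

- Use BeautifulSoup(html_sample,'html.parser') to parse the HTML above into a DOM tree

- Use select(selector) to locate data

- Find the HTML elements with a given tag

- Find the HTML elements with a given class name

- Find the HTML elements with a given id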

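    from bs4 import BeautifulSoup

    soups = BeautifulSoup(html_sample, 'html.parser')
    a1 = soups.a                  # the first <a> tag only
    a = soups.select('a')         # list of all <a> elements
    h = soups.select('h1')        # list of all <h1> elements
    t = soups.select('#title')    # elements whose id is "title"
    l = soups.select('.link')     # elements whose class is "link"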
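As a minimal sketch of what these selectors return, the example below rebuilds the sample page with a triple-quoted string (an equivalent form chosen here for readability) and reads the text and attributes of the matched elements. The variable names are illustrative only.

```python
from bs4 import BeautifulSoup

html_sample = """
<html>
<body>
<h1 id="title">Hello</h1>
<a href="#" class="link"> This is link1</a>
<a href="# link2" class="link" qao=123> This is link2</a>
</body>
</html>
"""

soup = BeautifulSoup(html_sample, "html.parser")

first_link = soup.a                    # attribute access gives the first <a> tag only
links = soup.select(".link")           # select() always returns a list of tags
title = soup.select("#title")[0].text  # so index into the list before reading .text

link_texts = [a.text.strip() for a in links]  # visible text of each link
link_hrefs = [a["href"] for a in links]       # attributes are read like dict keys
custom_attr = links[1].get("qao")             # .get() returns None if the attribute is missing

print(title)
print(link_texts)
print(link_hrefs)
print(custom_attr)
```

Because `select()` returns a list even for a unique id, forgetting the `[0]` index is a common source of `AttributeError` when reading `.text`.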

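3. Extract the title, publication time, publishing unit, author, click count, and content of one campus news article

For example, url = 'http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0323/11052.html'

The publication time must be a datetime, the click count must be numeric, and everything else must be a string.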

- Install the requests library: pip install requests

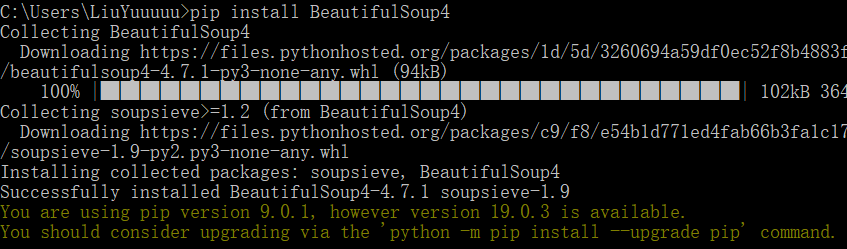

- Install the BeautifulSoup4 library: pip install BeautifulSoup4

- Import the modules:

    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime

- Use the requests library to fetch the page data

requests.get(url) fetches the HTML of the news page

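    url = "http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0323/11052.html"
    res = requests.get(url)
    type(res)                # inspect the response object (useful in an interactive session)
    res.encoding = 'utf-8'   # decode the body as UTF-8
    res.text                 # the page source as a string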

- Use Beautiful Soup to parse the page (title, publication time, publishing unit, and so on)

soups = BeautifulSoup(res.text, 'html.parser')

- Convert the time to a datetime

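    # The first two whitespace-separated tokens of .show-info hold the date and the time
    time1 = soups.select('.show-info')[0].text.split()[0].split(':')[1]   # date, after the label
    time2 = soups.select('.show-info')[0].text.split()[1]                 # time of day
    Time = time1 + ' ' + time2
    Time1 = datetime.strptime(Time, '%Y-%m-%d %H:%M:%S')                  # datetime object
    # Display string with Chinese unit characters filled in through str.format
    Time2 = datetime.strftime(Time1, '%Y{y}-%m{m}-%d{d} %H{H}:%M{M}:%S{S}').format(y='年', m='月', d='日', H='时', M='分', S='秒')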
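An alternative that does not depend on token positions is to search for the timestamp pattern directly. The sketch below assumes the info line looks like the hypothetical `info_text` sample, which is made up for illustration and may not match the real page.

```python
import re
from datetime import datetime

# Hypothetical sample of the text inside `.show-info`; the real page may differ.
info_text = "发布时间:2019-03-22 10:39:36 作者:张三 点击:99次"

# Find the first "YYYY-MM-DD HH:MM:SS" run anywhere in the text.
match = re.search(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}", info_text)
if match is None:
    raise ValueError("no timestamp found in the info text")

published = datetime.strptime(match.group(), "%Y-%m-%d %H:%M:%S")

print(type(published).__name__)
print(published.isoformat())
```

Keeping `published` as a `datetime` satisfies the type requirement; formatting it with `strftime` is only needed at display time.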

- Get the click count

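    clickUrl = "http://oa.gzcc.cn/api.php?op=count&id=11052&modelid=80"
    # Keep only what follows the last ".html" in the response
    frequency = requests.get(clickUrl).text.split('.html')[-1]
    # Strip the surrounding punctuation so that only the number remains
    sel = "();'"
    for i in sel:
        frequency = frequency.replace(i, '')
    frequency = int(frequency)   # the assignment asks for a numeric click count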
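The same extraction can be sketched with a regular expression, which also yields the numeric type the assignment asks for. The `sample_response` string is an assumed shape for the counter API's reply, written here for illustration; the real response may differ. `parse_click_count` is a hypothetical helper name.

```python
import re

# Assumed shape of the counter API response; treat it as a hypothetical sample.
sample_response = "$('#todaydowns').html('5');$('#hits').html('517');"


def parse_click_count(js_text):
    """Return the number inside the last .html('...') call as an int."""
    numbers = re.findall(r"\.html\('(\d+)'\)", js_text)
    if not numbers:
        raise ValueError("no click count found in response")
    return int(numbers[-1])


clicks = parse_click_count(sample_response)
print(clicks, type(clicks).__name__)
```

Raising an error when nothing matches makes a changed response format visible immediately instead of producing an empty string.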

- Get the other fields in the same way (editor, photographer, event, and so on)

    # Each field is taken by its position among the whitespace-separated tokens of .show-info
    editor = soups.select('.show-info')[0].text.split()[2]
    auditor = soups.select('.show-info')[0].text.split()[3]
    source = soups.select('.show-info')[0].text.split()[4]
    photographer = soups.select('.show-info')[0].text.split()[5]
    title1 = soups.title.text                          # text of the <title> tag
    matter1 = soups.select('.show-content')[0].text    # article body
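Positional indexing breaks if an article omits a field, for instance one without a photographer. One possible alternative, sketched below under the assumption that every field has the form "label:value" with a Chinese label, is to build a dictionary keyed by label. The `info_text` sample and the names in it are placeholders, and `parse_info` is a hypothetical helper.

```python
import re

# Hypothetical sample of the `.show-info` text; names are placeholders.
info_text = "发布时间:2019-03-22 10:39:36 作者:张三 审核:李四 来源:学校办 摄影:王五 点击:99次"

# A label is a run of Chinese characters followed by an ASCII or full-width colon.
LABEL = r"[\u4e00-\u9fff]+"
FIELD = re.compile(rf"({LABEL})[::](.*?)(?=\s+{LABEL}[::]|$)")


def parse_info(text):
    """Map each label to its value, whatever order the fields appear in."""
    return {label: value.strip() for label, value in FIELD.findall(text)}


fields = parse_info(info_text)
for label, value in fields.items():
    print(label, "->", value)
```

Looking up `fields.get("摄影")` then returns `None` for a missing field instead of silently picking up the wrong token.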

Complete code:

    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime

    # Fetch the news page
    url = "http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0323/11052.html"
    res = requests.get(url)
    res.encoding = 'utf-8'

    # Fetch the click count from the counter API
    clickUrl = "http://oa.gzcc.cn/api.php?op=count&id=11052&modelid=80"
    frequency = requests.get(clickUrl).text.split('.html')[-1]
    sel = "();'"
    for i in sel:
        frequency = frequency.replace(i, '')
    frequency = int(frequency)   # the assignment asks for a numeric click count

    # Parse the page and build the publication time
    soups = BeautifulSoup(res.text, 'html.parser')
    time1 = soups.select('.show-info')[0].text.split()[0].split(':')[1]
    time2 = soups.select('.show-info')[0].text.split()[1]
    Time = time1 + ' ' + time2
    Time1 = datetime.strptime(Time, '%Y-%m-%d %H:%M:%S')
    Time2 = datetime.strftime(Time1, '%Y{y}-%m{m}-%d{d} %H{H}:%M{M}:%S{S}').format(y='年', m='月', d='日', H='时', M='分', S='秒')

    # Remaining fields, title, and body
    editor = soups.select('.show-info')[0].text.split()[2]
    auditor = soups.select('.show-info')[0].text.split()[3]
    source = soups.select('.show-info')[0].text.split()[4]
    photographer = soups.select('.show-info')[0].text.split()[5]
    title1 = soups.title.text
    matter1 = soups.select('.show-content')[0].text

    print(title1)
    print('发布时间:'+Time2 + ' '+editor+' '+auditor+' '+source+' '+photographer+' '+'点击次数:'+str(frequency))
    print(matter1)

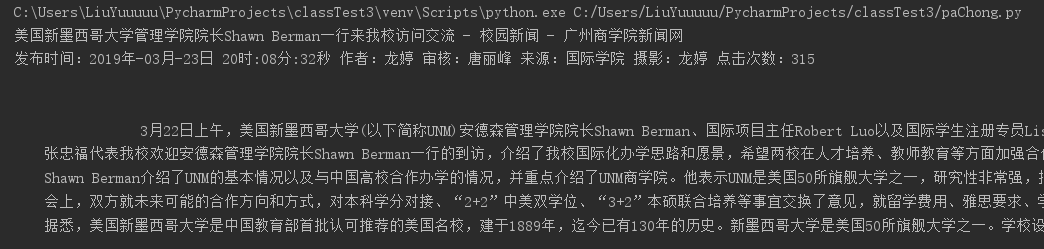

Output: