数据采集与融合技术实践第三次作业

第三次作业

作业①:

要求:

指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。使用scrapy框架分别实现单线程和多线程的方式爬取。

–务必控制总页数(学号尾数2位)、总下载的图片数量(尾数后3位)等限制爬取的措施。

输出信息:

将下载的Url信息在控制台输出,并将下载的图片存储在images子文件中,并给出截图。

Gitee文件夹链接:题目一

image_spider.py代码如下:

import scrapy

from ..items import ImageCrawlerItem

class image_spider(scrapy.Spider):

name = 'image_spider'

start_urls = ['http://www.weather.com.cn']

pages_crawled = 0 # 用于计算已爬取的页面数

images_crawled = 0 # 用于计算已爬取的图片数量

max_pages = 23 # 设置要爬取的最大页面数

max_images = 123 # 设置要爬取的最大图片数量

def parse(self, response):

# 使用 CSS 选择器来提取图片 URL

image_urls = response.css('img::attr(src)').getall()

for img_url in image_urls:

if img_url.startswith('http'):

item = ImageCrawlerItem()

item['image_urls'] = [img_url]

print(f"Downloading image: {img_url}") # 使用 print 输出信息到控制台

self.images_crawled += 1 # 增加已爬取的图片数量

yield item

# 检查是否已达到页面和图片数量限制

if self.pages_crawled < self.max_pages and self.images_crawled < self.max_images:

# 继续爬取下一页(假定有下一页链接)

next_page_url = "" # 用下一页的URL替换

if next_page_url:

self.pages_crawled += 1 # 增加已爬取的页面数

yield response.follow(next_page_url, callback=self.parse)

settings.py代码如下:

IMAGES_STORE = 'images' # 图片将被存储在项目的 'images' 文件夹中

items.py代码如下:

class ImageCrawlerItem(scrapy.Item):

image_urls = scrapy.Field()

images = scrapy.Field()

在settings.py中添加以下内容实现多线程爬取:

CONCURRENT_REQUESTS = 12

run.py代码如下:

from scrapy import cmdline

cmdline.execute("scrapy crawl image_spider -s LOG_ENABLED=False".split())

输出结果:

images文件夹中:

心得体会:

通过这次作业,对于scrapy框架有了初步的理解,明白了如何设置item类和配置settings文件。

作业②:

要求:

熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

候选网站:东方财富网:https://www.eastmoney.com/

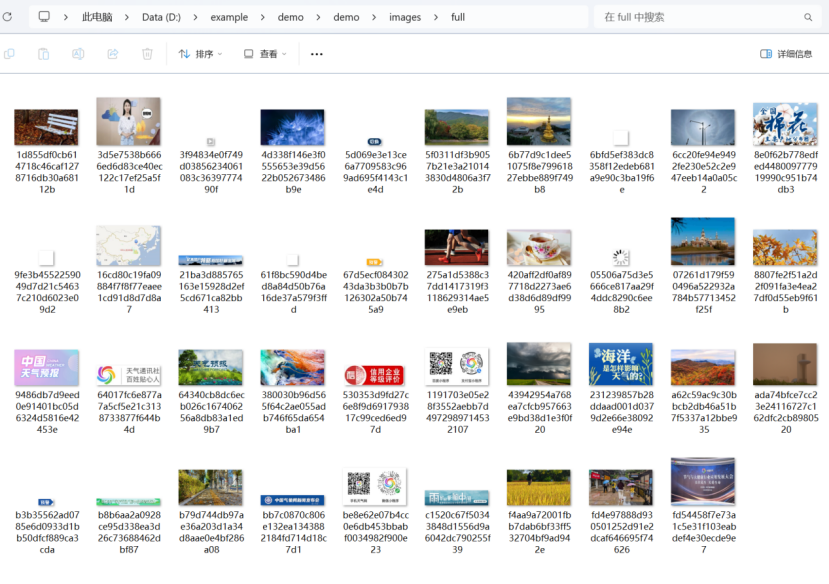

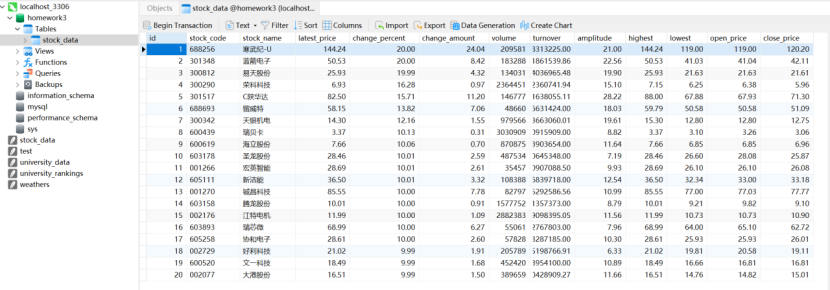

输出信息:MySQL数据库存储和输出格式如下:

表头英文命名例如:序号id,股票代码:bStockNo……

Gitee文件夹链接:题目二

stock_spider.py代码如下:

import scrapy

from ..items import StockItem

import re

class stock_spider(scrapy.Spider):

name = 'stock_spider'

start_urls = ['http://8.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112404366619889619232_1697723935903&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1697723935904']

def parse(self, response):

pat = "\"diff\":\[(.*?)\]"

data = re.compile(pat, re.S).findall(response.text)

datas = data[0].split("},")

# 解析JSON数组并将数据存储到数据库中

for item in datas:

# 使用字符串操作来提取键值对

stock_info = {}

pairs = item.split(',')

for pair in pairs:

key, value = pair.split(':')

key = key.strip('"')

value = value.strip('"')

stock_info[key] = value

# 提取需要的字段

stock_code = stock_info.get('f12', 'N/A')

stock_name = stock_info.get('f14', 'N/A')

latest_price = float(stock_info.get('f2', 0.0))

change_percent = float(stock_info.get('f3', 0.0))

change_amount = float(stock_info.get('f4', 0.0))

volume = int(stock_info.get('f5', 0))

turnover = float(stock_info.get('f6', 0.0))

amplitude = float(stock_info.get('f7', 0.0))

highest = float(stock_info.get('f15', 0.0))

lowest = float(stock_info.get('f16', 0.0))

open_price = float(stock_info.get('f17', 0.0))

close_price = float(stock_info.get('f18', 0.0))

item = StockItem()

item['stock_code'] = stock_code

item['stock_name'] = stock_name

item['latest_price'] = latest_price

item['change_percent'] = change_percent

item['change_amount'] = change_amount

item['volume'] = volume

item['turnover'] = turnover

item['amplitude'] = amplitude

item['highest'] = highest

item['lowest'] = lowest

item['open_price'] = open_price

item['close_price'] = close_price

yield item

def closed(self, reason):

# 数据存储完成后关闭数据库连接

self.conn.close()

items.py代码如下:

class StockItem(scrapy.Item):

stock_code = scrapy.Field()

stock_name = scrapy.Field()

latest_price = scrapy.Field()

change_percent = scrapy.Field()

change_amount = scrapy.Field()

volume = scrapy.Field()

turnover = scrapy.Field()

amplitude = scrapy.Field()

highest = scrapy.Field()

lowest = scrapy.Field()

open_price = scrapy.Field()

close_price = scrapy.Field()

pipelines.py代码如下:

import pymysql

from .settings import MYSQL_HOST, MYSQL_DB, MYSQL_USER, MYSQL_PASSWORD, MYSQL_PORT

class StockMySQLPipeline:

def __init__(self):

self.conn = pymysql.connect(

host=MYSQL_HOST,

db=MYSQL_DB,

user=MYSQL_USER,

password=MYSQL_PASSWORD,

port=MYSQL_PORT

)

self.cursor = self.conn.cursor()

def process_item(self, item, spider):

# 将Item数据插入到MySQL数据库

insert_sql = """

INSERT INTO stock_data (stock_code, stock_name, latest_price, change_percent, change_amount, volume, turnover, amplitude, highest, lowest, open_price, close_price)

VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)

"""

values = (

item['stock_code'],

item['stock_name'],

item['latest_price'],

item['change_percent'],

item['change_amount'],

item['volume'],

item['turnover'],

item['amplitude'],

item['highest'],

item['lowest'],

item['open_price'],

item['close_price']

)

try:

self.cursor.execute(insert_sql, values)

self.conn.commit()

except Exception as e:

self.conn.rollback()

self.cursor.close()

raise e

def close_spider(self, spider):

self.cursor.close()

settings.py代码如下:

ITEM_PIPELINES = {

'demo.pipelines.StockMySQLPipeline': 300, # 自定义的Pipeline

}

# MySQL数据库配置

MYSQL_HOST = 'localhost'

MYSQL_DB = 'homework3'

MYSQL_USER = 'root'

MYSQL_PASSWORD = 'yx20021207'

MYSQL_PORT = 3306

run.py代码如下:

from scrapy import cmdline

cmdline.execute("scrapy crawl stock_spider -s LOG_ENABLED=False".split())

创建表:

CREATE TABLE stock_data (

id INT AUTO_INCREMENT PRIMARY KEY,

stock_code VARCHAR(10) NOT NULL,

stock_name VARCHAR(255) NOT NULL,

latest_price DECIMAL(10, 2),

change_percent DECIMAL(5, 2),

change_amount DECIMAL(10, 2),

volume INT,

turnover DECIMAL(15, 2),

amplitude DECIMAL(5, 2),

highest DECIMAL(10, 2),

lowest DECIMAL(10, 2),

open_price DECIMAL(10, 2),

close_price DECIMAL(10, 2)

);

输出结果(通过navicat查看):

心得体会:

通过该题,明白了如何通过配置settings.py连接本地mysql数据库,并将数据存入数据库中,一开始没有先行在数据库中创建相应的表导致报错,后发现问题通过create table语句创建解决问题。

作业③:

要求:

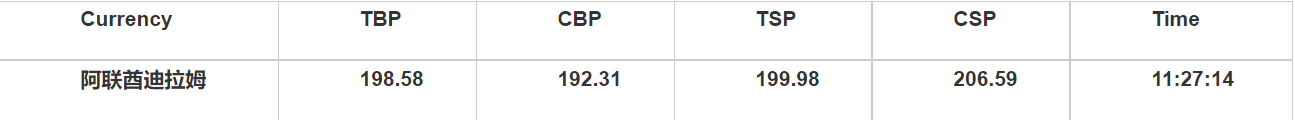

熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:中国银行网:https://www.boc.cn/sourcedb/whpj/

输出信息:

Gitee文件夹链接:题目三

Currency.py代码如下:

import scrapy

from ..items import CurrencyItem

class currency_spider(scrapy.Spider):

name = "currency"

count = 0

def start_requests(self):

url ='https://www.boc.cn/sourcedb/whpj/'

yield scrapy.Request(url=url,callback=self.parse)

def parse(self, response):

data=response.body.decode()

selector = scrapy.Selector(text=data)

# 使用xpath先定位到tr,然后再对tr下的目录进行处理

datas = selector.xpath("//table[@cellpadding='0'][@align='left'][@cellspacing='0'][@width='100%']/tr")

# 1:排除掉第一个tr:标题

for text in datas[1:]:

self.count += 1

item = CurrencyItem()

item["count"] = self.count

# 获取货币种类

item["Currency"] = text.xpath("./td[1]/text()").extract_first()

item["TSP"] = text.xpath("./td[2]/text()").extract_first()

item["CSP"] = text.xpath("./td[3]/text()").extract_first()

item["TBP"] = text.xpath("./td[4]/text()").extract_first()

item["CBP"] = text.xpath("./td[5]/text()").extract_first()

item["Time"] = text.xpath("./td[8]/text()").extract_first()

yield item

items.py代码如下:

class CurrencyItem(scrapy.Item):

count=scrapy.Field()

Currency=scrapy.Field()

TSP=scrapy.Field()

CSP=scrapy.Field()

TBP=scrapy.Field()

CBP=scrapy.Field()

Time=scrapy.Field()

settings.py代码如下

ITEM_PIPELINES = {

'demo.pipelines.currencyMySQLPipeline': 300, # 自定义的Pipeline

}

# MySQL数据库配置

MYSQL_HOST = 'localhost'

MYSQL_DB = 'homework3'

MYSQL_USER = 'root'

MYSQL_PASSWORD = 'yx20021207'

MYSQL_PORT = 3306

pipelines.py代码如下:

import pymysql

from .settings import MYSQL_HOST, MYSQL_DB, MYSQL_USER, MYSQL_PASSWORD, MYSQL_PORT

class currencyMySQLPipeline:

def __init__(self):

self.conn = pymysql.connect(

host=MYSQL_HOST,

db=MYSQL_DB,

user=MYSQL_USER,

password=MYSQL_PASSWORD,

port=MYSQL_PORT

)

self.cursor = self.conn.cursor()

def process_item(self, item, spider):

# 将Item数据插入到MySQL数据库

insert_sql = """

INSERT INTO currency_data (id,Currency, TBP, CBP, TSP, CSP, Time)

VALUES (%s ,%s, %s, %s, %s, %s, %s)

"""

values = (

item['count'],

item['Currency'],

item['TBP'],

item['CBP'],

item['TSP'],

item['CSP'],

item['Time']

)

try:

self.cursor.execute(insert_sql, values)

self.conn.commit()

except Exception as e:

self.conn.rollback()

self.cursor.close()

raise e

def close_spider(self, spider):

self.cursor.close()

run.py代码如下:

from scrapy import cmdline

cmdline.execute("scrapy crawl currency -s LOG_ENABLED=False".split())

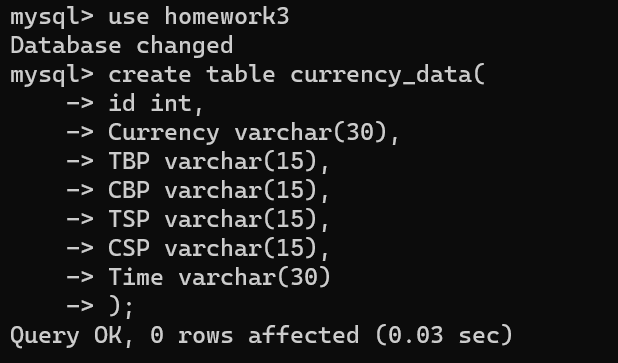

创建表:

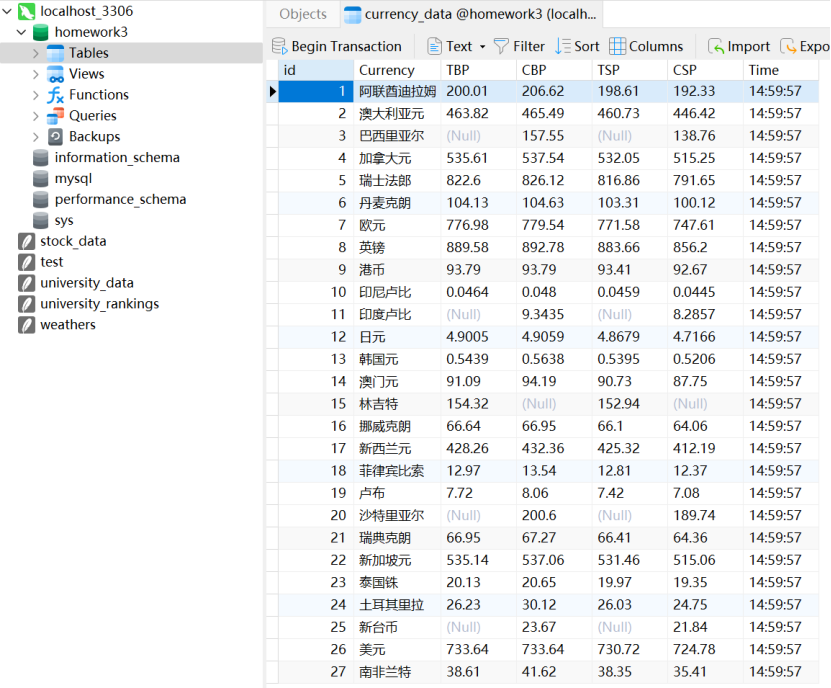

输出结果(通过navicat查看):

心得体会:

通过本题,对xpath定位元素有了更深的理解,一开始爬取数据时多爬了一行无用数据,后通过调整遍历解决该问题,对于自定义pipeline类将数据存入数据库有了一定的了解,对于scrapy框架更加熟悉。

浙公网安备 33010602011771号

浙公网安备 33010602011771号