Test environment:

- PHP version: 7.0.10

- MySQL version: 5.7.14

Test case: insert 2,000 rows into the database in a loop

    public function test_syn($pc){
        // $pc = trim(I('pc'));
        // Cast to int so the value cannot inject SQL when it is concatenated below.
        $pc = (int)$pc;
        $model = M('','');
        $model -> startTrans();
        // Step 1: increment the counter row inside the transaction.
        try{
            $sql = 'update d_fhditem set num = num +1 where id= 1365';
            $res = $model -> execute($sql);
        }catch(\Exception $e){
            $res = false;
        }
        if($res === false){
            $model -> rollback();
            echo 'Update failed<br>';
            die;
        }
        // Step 2: log the number of this request.
        try{
            $sql = 'insert into check_thread_log(`pc`) values('.$pc.')';
            $res = $model -> execute($sql);
        }catch(\Exception $e){
            $res = false;
        }
        if($res === false){
            $model -> rollback();
            echo 'Insert failed<br>';
            die;
        }
        $res = $model -> commit();
        if($res === false){
            $model -> rollback();
            echo 'Commit failed';
        }
        //echo 'Test succeeded'.$pc.'<br>';
    }
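For comparison, here is a minimal sketch of the same two-step transaction written with plain PDO and a prepared statement, so the `pc` value is bound rather than concatenated. It is not the ThinkPHP code used in the tests: the function name `testSynPdo` is hypothetical, and an in-memory SQLite database stands in for MySQL only so that the snippet runs on its own (this assumes the `pdo_sqlite` extension is available).

```php
<?php
// Assumption: SQLite in memory replaces the MySQL tables from the test case.
$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE d_fhditem (id INTEGER PRIMARY KEY, num INTEGER)');
$pdo->exec('CREATE TABLE check_thread_log (pc INTEGER)');
$pdo->exec('INSERT INTO d_fhditem (id, num) VALUES (1365, 0)');

// Hypothetical equivalent of test_syn(): returns true on commit, false on rollback.
function testSynPdo(PDO $pdo, $pc)
{
    $pdo->beginTransaction();
    try {
        // Step 1: increment the counter row.
        $pdo->exec('UPDATE d_fhditem SET num = num + 1 WHERE id = 1365');

        // Step 2: insert the log row with a bound parameter.
        $stmt = $pdo->prepare('INSERT INTO check_thread_log (pc) VALUES (:pc)');
        $stmt->execute(array(':pc' => (int)$pc));

        $pdo->commit();
        return true;
    } catch (Exception $e) {
        // Undo both statements if either one (or the commit) failed.
        if ($pdo->inTransaction()) {
            $pdo->rollBack();
        }
        return false;
    }
}

// Usage: three sequential calls, as in the loop-based test.
for ($i = 0; $i < 3; $i++) {
    testSynPdo($pdo, $i);
}
```

Returning a boolean instead of calling `die` lets the caller decide what to do with a failed request, which matters when the function is called in a loop.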

Test 1: create 2,000 curl handles at once and run them all simultaneously

    public function checkThread3(){
        $t = microtime(true);
        $row = 2000;
        $i = 0;
        $arr_handle = array();
        // Create one curl handle per request.
        while($i < $row){
            $res = curl_init();
            $url = 'http://localhost/InTimeCommnuicate/index.php/Home/WebstockApi/test_syn/pc/'.$i;
            curl_setopt($res, CURLOPT_URL , $url);
            curl_setopt($res, CURLOPT_RETURNTRANSFER, 1);
            curl_setopt($res, CURLOPT_HEADER, 0);
            $arr_handle[] = $res;
            $i++;
        }
        $cmi = curl_multi_init();
        // Add all 2,000 handles
        foreach($arr_handle as $k => $v){
            curl_multi_add_handle($cmi, $v);
        }
        $still_running = null;
        $j = 0; // number of polling iterations
        do{
            usleep(10000);
            $res = curl_multi_exec($cmi, $still_running);
            $j++;
        }while($still_running > 0);
        // Detach and free every handle.
        foreach($arr_handle as $v){
            curl_multi_remove_handle($cmi, $v);
            curl_close($v);
        }
        curl_multi_close($cmi);
        $t1 = microtime(true);
        echo '<hr>Concurrent execution time: '.($t1 - $t).'<br>';
    }

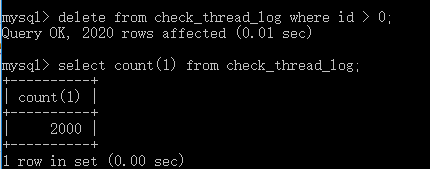

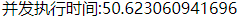

Run result:

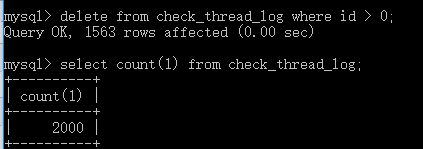

MySQL query:

Summary: the results show that when too many requests run at the same time, some of the inserts are lost.
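The test above only measures the total time, so it cannot say which requests were lost. One possible way to find out is to read the per-transfer results with `curl_multi_info_read()`. The sketch below is an assumption about how that could look, not part of the original test; the helper name `runAndCollectFailures` is hypothetical.

```php
<?php
// Hypothetical helper (not from the original test code): runs every handle
// and returns the transfers that failed at the curl or HTTP level.
function runAndCollectFailures(array $handles)
{
    $cmi = curl_multi_init();
    foreach ($handles as $h) {
        curl_multi_add_handle($cmi, $h);
    }

    $failures = array();
    $still_running = 0;
    do {
        curl_multi_exec($cmi, $still_running);

        // Each finished transfer, successful or not, yields one message.
        while (($info = curl_multi_info_read($cmi)) !== false) {
            $ch = $info['handle'];
            $code = curl_getinfo($ch, CURLINFO_HTTP_CODE);
            if ($info['result'] !== CURLE_OK || $code >= 400) {
                $failures[] = array(
                    'url' => curl_getinfo($ch, CURLINFO_EFFECTIVE_URL),
                    'error' => curl_strerror($info['result']),
                    'http_code' => $code,
                );
            }
        }

        // Wait up to 1 s for socket activity; fall back to a short sleep
        // if select reports an error.
        if ($still_running > 0 && curl_multi_select($cmi, 1.0) === -1) {
            usleep(10000);
        }
    } while ($still_running > 0);

    foreach ($handles as $h) {
        curl_multi_remove_handle($cmi, $h);
    }
    curl_multi_close($cmi);
    return $failures;
}
```

Passing `$arr_handle` to this helper and printing the returned array would show whether the missing rows correspond to refused connections, timeouts, or HTTP error responses.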

Test 2: a partial improvement to the program that keeps the number of concurrent requests at or below 400

    public function checkThread(){
        $t = microtime(true);
        $row = 2000;
        $i = 0;
        $arr_handle = array();
        // Create one curl handle per request.
        while($i < $row){
            $res = curl_init();
            $url = 'http://localhost/InTimeCommnuicate/index.php/Home/WebstockApi/test_syn/pc/'.$i;
            curl_setopt($res, CURLOPT_URL , $url);
            curl_setopt($res, CURLOPT_RETURNTRANSFER, 1);
            curl_setopt($res, CURLOPT_HEADER, 0);
            $arr_handle[] = $res;
            $i++;
        }
        $cmi = curl_multi_init();
        // Maximum number of concurrent handles
        $maxRunHandle = 400;
        // Number of handles added so far
        $thisAddHandle = 0;
        // Total number of handles to run
        $maxRow = count($arr_handle);
        // Add the first 400 handles
        foreach($arr_handle as $k => $v){
            if($k >= $maxRunHandle) break;
            curl_multi_add_handle($cmi, $v);
            $thisAddHandle++;
        }
        // Start at the number of handles already queued so the first pass does not add a 401st.
        $still_running = $thisAddHandle;
        $j = 1;
        do{
            // When fewer than 400 handles are running and some are still waiting,
            // add one more (at most one handle is added per iteration).
            if($still_running < $maxRunHandle && $thisAddHandle < $maxRow){
                curl_multi_add_handle($cmi, $arr_handle[$thisAddHandle]);
                $thisAddHandle++;
            }
            usleep(10000);
            $res = curl_multi_exec($cmi, $still_running);
            // echo 'Iteration '.$j.': '.$still_running.' handles running concurrently<br>';
            $j++;
        // Keep looping while transfers are running or handles remain to be added.
        }while($still_running > 0 || $thisAddHandle < $maxRow);
        // Detach and free every handle.
        foreach($arr_handle as $v){
            curl_multi_remove_handle($cmi, $v);
            curl_close($v);
        }
        curl_multi_close($cmi);
        $t1 = microtime(true);
        echo '<hr>Concurrent execution time: '.($t1 - $t).'<br>';
    }

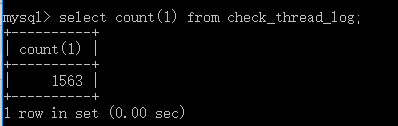

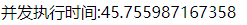

Run result:

MySQL query:

Summary: this run is faster than Test 1, and no data is lost.

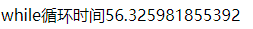
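The loop in Test 2 polls every 10 ms and adds at most one new handle per pass. A variation on the same idea, shown here only as a sketch, refills the window as soon as a transfer finishes and waits with `curl_multi_select()` instead of a fixed sleep. The function name `runWithLimit` and its return format are hypothetical, and this version was not part of the measurements above.

```php
<?php
// Hypothetical rolling-window runner: keeps at most $limit transfers in flight.
function runWithLimit(array $urls, $limit)
{
    $cmi = curl_multi_init();
    $total = count($urls);
    $next = 0;      // index of the next URL to start
    $inFlight = 0;  // handles currently attached to the multi handle
    $done = 0;      // transfers finished (successfully or not)
    $peak = 0;      // highest number of handles in flight at once
    $still_running = 0;

    while ($done < $total) {
        // Top up the window until the limit is reached or no URLs remain.
        while ($inFlight < $limit && $next < $total) {
            $ch = curl_init($urls[$next++]);
            curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
            curl_setopt($ch, CURLOPT_HEADER, 0);
            curl_multi_add_handle($cmi, $ch);
            $inFlight++;
        }
        $peak = max($peak, $inFlight);

        curl_multi_exec($cmi, $still_running);

        // Free every finished handle so its slot can be reused.
        while (($info = curl_multi_info_read($cmi)) !== false) {
            curl_multi_remove_handle($cmi, $info['handle']);
            curl_close($info['handle']);
            $inFlight--;
            $done++;
        }

        // Wait up to 1 s for socket activity before the next pass.
        if ($done < $total && curl_multi_select($cmi, 1.0) === -1) {
            usleep(10000);
        }
    }

    curl_multi_close($cmi);
    return array('done' => $done, 'peak' => $peak);
}
```

Creating each handle only when its slot opens also avoids holding 2,000 idle handles in memory. Whether this is faster than the version measured above would need to be tested in the same environment.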

Test 3: insert the 2,000 rows directly, without using the curl functions

    public function checkThread2(){
        $t = microtime(true);
        $row = 2000;
        $i = 0;
        // Call test_syn() sequentially in the same process, with no HTTP requests.
        while($row > $i){
            $this -> test_syn($i);
            $i++;
        }
        $t1 = microtime(true);
        echo '<hr>while loop time: '.($t1 - $t).'<br>';
    }

Result:

MySQL query: