Connecting Spark to a MySQL Database


Contents

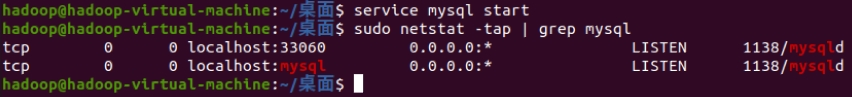

1. Install, start, and check the MySQL service

sudo service mysql start

sudo netstat -tap | grep mysql

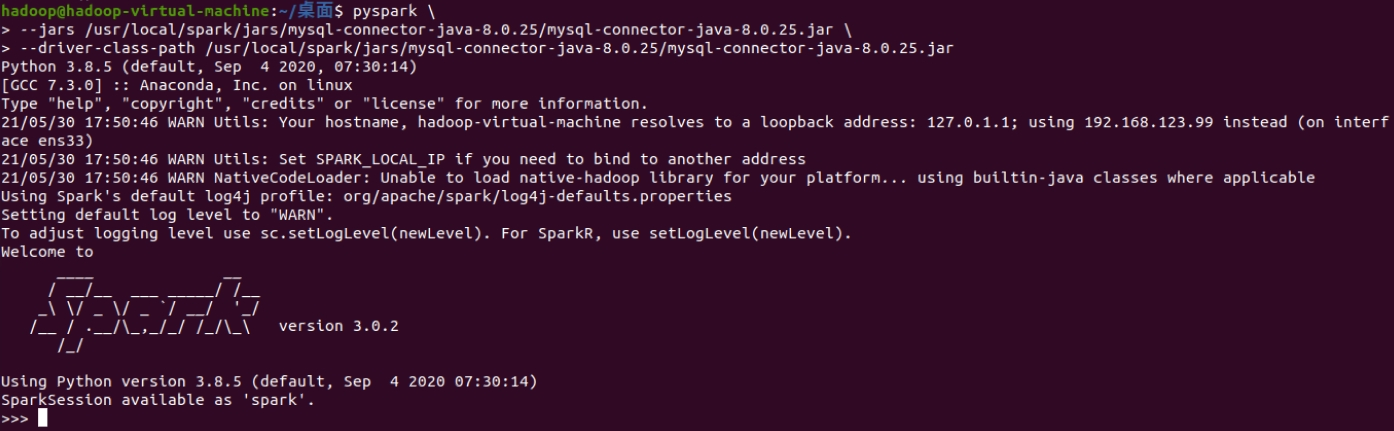
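If `netstat` is not available, you can confirm that the server accepts connections by opening a TCP connection to it. The sketch below assumes MySQL listens on its default port 3306 on localhost. `is_port_listening` is a hypothetical helper. A successful connection only shows that something is listening on that port, not that it is MySQL.

```python
import socket


def is_port_listening(host: str = "127.0.0.1", port: int = 3306, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds.

    Assumption: MySQL uses its default port 3306. A True result only means
    some process accepts connections there, not necessarily MySQL.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    print("MySQL port open:", is_port_listening())
```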

2. Start pyspark with the MySQL JDBC driver

pyspark \
  --jars /usr/local/spark/jars/mysql-connector-java-8.0.25/mysql-connector-java-8.0.25.jar \
  --driver-class-path /usr/local/spark/jars/mysql-connector-java-8.0.25/mysql-connector-java-8.0.25.jar

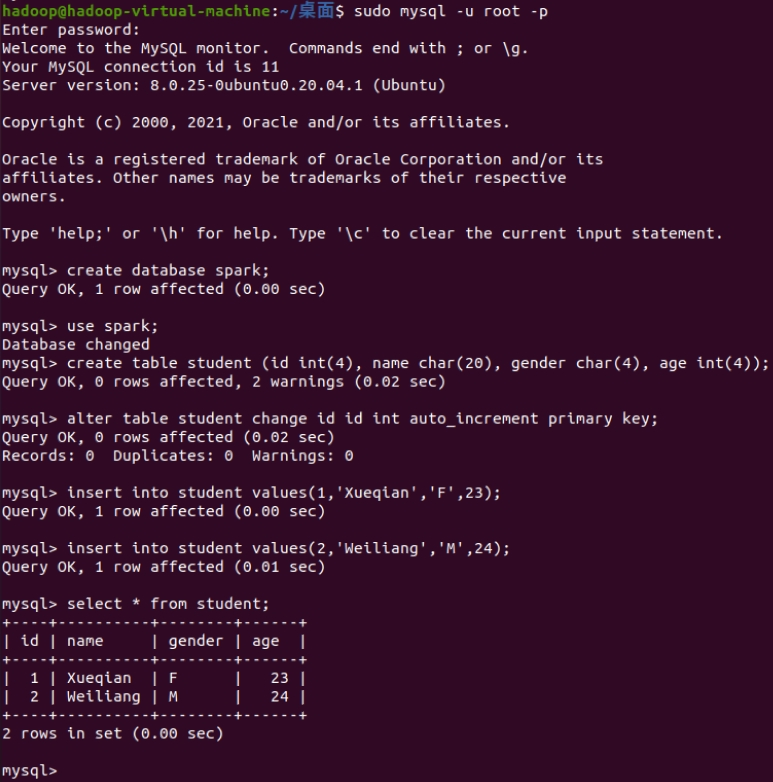
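The same jar path appears twice in the command, so it helps to check that the file exists before launching the shell. The minimal sketch below does that check and builds the same argument list as the command above. `pyspark_command` is a hypothetical helper, and the jar path is copied from the command. Adjust it if your connector is stored somewhere else.

```python
import shlex
from pathlib import Path

# Path copied from the pyspark command above; an assumption about your install.
JAR = "/usr/local/spark/jars/mysql-connector-java-8.0.25/mysql-connector-java-8.0.25.jar"


def pyspark_command(jar: str) -> list[str]:
    """Build the pyspark argv that passes the MySQL JDBC jar via both
    --jars and --driver-class-path, as in the command above."""
    if not Path(jar).is_file():
        raise FileNotFoundError(f"JDBC driver jar not found: {jar}")
    return ["pyspark", "--jars", jar, "--driver-class-path", jar]


if __name__ == "__main__":
    try:
        # Print a shell-quoted command line that can be pasted into a terminal.
        print(shlex.join(pyspark_command(JAR)))
    except FileNotFoundError as err:
        print(err)
```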

3. Start the MySQL shell and create the database spark and the table student

sudo mysql -u root -p

mysql> create database spark;

mysql> use spark;

mysql> create table student (id int(4), name char(20), gender char(4), age int(4));

mysql> alter table student change id id int auto_increment primary key;

mysql> insert into student values(1,'Xueqian','F',23);

mysql> insert into student values(2,'Weiliang','M',24);

mysql> select * from student;

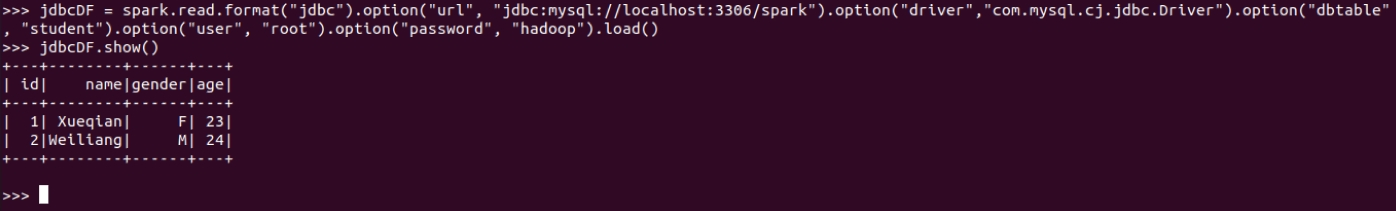
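The `alter table` step matters later. With `auto_increment`, an insert that omits `id` receives the next id from the database. This is what happens when Spark writes rows without an `id` column in step 5. The sketch below illustrates the behavior with Python's built-in `sqlite3` module as a stand-in, chosen only because it needs no server. SQLite's `INTEGER PRIMARY KEY` replaces MySQL's `int auto_increment primary key`, so the DDL here is not MySQL syntax.

```python
import sqlite3

# SQLite stand-in (assumption): "INTEGER PRIMARY KEY" plays the role of
# MySQL's "int auto_increment primary key"; both assign the next id when
# the id column is omitted from an INSERT.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE student (id INTEGER PRIMARY KEY, name CHAR(20), gender CHAR(4), age INT)"
)
conn.execute("INSERT INTO student VALUES (1, 'Xueqian', 'F', 23)")
conn.execute("INSERT INTO student VALUES (2, 'Weiliang', 'M', 24)")
# Omit id, as the Spark write in step 5 does; the database fills it in.
conn.execute("INSERT INTO student (name, gender, age) VALUES ('Rongcheng', 'M', 26)")

rows = conn.execute("SELECT * FROM student ORDER BY id").fetchall()
for row in rows:
    print(row)
conn.close()
```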

4. Read data from MySQL into Spark

>>> jdbcDF = spark.read.format("jdbc").option("url", "jdbc:mysql://localhost:3306/spark").option("driver","com.mysql.cj.jdbc.Driver").option("dbtable", "student").option("user", "root").option("password", "hadoop").load()

>>> jdbcDF.show()

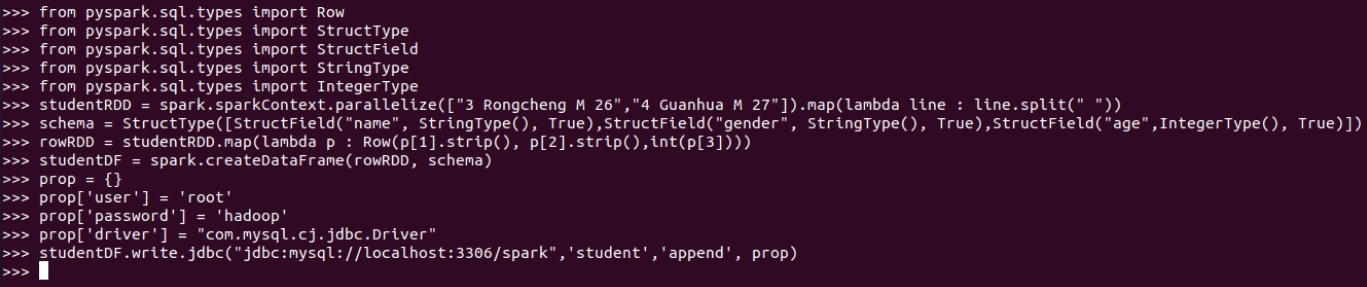
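The chained `.option(...)` calls can also be collected in a dictionary. This keeps the connection settings in one place and lets you reuse the same values for reading and writing. `mysql_jdbc_options` in the sketch below is a hypothetical helper, and its values are the ones used above.

```python
def mysql_jdbc_options(host: str, port: int, database: str, table: str,
                       user: str, password: str) -> dict[str, str]:
    """Collect the JDBC option keys used with spark.read.format("jdbc") above."""
    return {
        "url": f"jdbc:mysql://{host}:{port}/{database}",
        "driver": "com.mysql.cj.jdbc.Driver",
        "dbtable": table,
        "user": user,
        "password": password,
    }


opts = mysql_jdbc_options("localhost", 3306, "spark", "student", "root", "hadoop")
print(opts["url"])  # jdbc:mysql://localhost:3306/spark
# In the pyspark shell the dict could then be passed in one call:
#   jdbcDF = spark.read.format("jdbc").options(**opts).load()
```

Passing the dict through `DataFrameReader.options(**opts)` has the same effect as the chained calls. In practice, read the password from an environment variable or a config file rather than hard-coding it.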

5. Write data from Spark to MySQL

>>> from pyspark.sql import Row

>>> from pyspark.sql.types import StructType, StructField, StringType, IntegerType

>>> studentRDD = spark.sparkContext.parallelize(["3 Rongcheng M 26","4 Guanhua M 27"]).map(lambda line : line.split(" "))

# Next, define the schema (no id column: MySQL fills it in via auto_increment)

>>> schema = StructType([StructField("name", StringType(), True),StructField("gender", StringType(), True),StructField("age",IntegerType(), True)])

>>> rowRDD = studentRDD.map(lambda p : Row(p[1].strip(), p[2].strip(),int(p[3])))

# Apply the schema to the Row objects, i.e. match each field of the data to a column of the schema

>>> studentDF = spark.createDataFrame(rowRDD, schema)

>>> prop = {}

>>> prop['user'] = 'root'

>>> prop['password'] = 'hadoop'

>>> prop['driver'] = "com.mysql.cj.jdbc.Driver"

>>> studentDF.write.jdbc("jdbc:mysql://localhost:3306/spark",'student','append', prop)

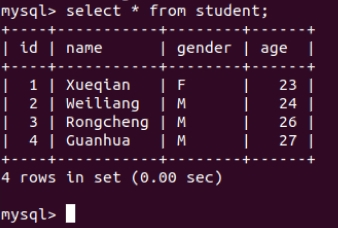

mysql> select * from student;
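The two `map` calls in step 5 split each string and keep fields 1 to 3 in schema order (name, gender, age). The leading id is dropped. The plain-Python sketch below reproduces that transformation so you can check it without a Spark session. `Student` and `parse_student` are hypothetical names, not part of PySpark.

```python
from typing import NamedTuple


class Student(NamedTuple):
    """Field order mirrors the StructType in step 5: name, gender, age (no id)."""
    name: str
    gender: str
    age: int


def parse_student(line: str) -> Student:
    """Turn "3 Rongcheng M 26" into Student('Rongcheng', 'M', 26).

    The leading id is discarded because the MySQL id column is auto_increment.
    """
    fields = line.split(" ")
    return Student(fields[1].strip(), fields[2].strip(), int(fields[3]))


raw = ["3 Rongcheng M 26", "4 Guanhua M 27"]
rows = [parse_student(line) for line in raw]
for row in rows:
    print(row)
```

Because the ids in the source strings are discarded, the ids of the written rows come from MySQL's auto_increment counter, not from the input data.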