Spark Environment Installation

Package download page:

http://archive.apache.org/dist/spark/spark-2.1.1/

Cluster environment:

master 192.168.1.99

slave1 192.168.1.100

slave2 192.168.1.101
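The hostnames master, slave1, and slave2 are used in the later steps (the slaves file, scp, and the spark:// URL), so every node has to be able to resolve them. The original does not say how this is done; the sketch below assumes /etc/hosts rather than DNS. It writes the three mappings to a scratch file (hosts.spark, a hypothetical name) so they can be reviewed before being appended to /etc/hosts on each node.

```shell
# Assumption: hostnames are resolved through /etc/hosts on every node.
# hosts.spark is a hypothetical scratch file; nothing system-wide is modified here.
hosts_file="${TMPDIR:-/tmp}/hosts.spark"
cat > "$hosts_file" <<'EOF'
192.168.1.99 master
192.168.1.100 slave1
192.168.1.101 slave2
EOF
cat "$hosts_file"
```

After review, the file could be appended as root on each node, for example with `cat "$hosts_file" >> /etc/hosts`.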

Download the installation packages:

# Master

wget http://archive.apache.org/dist/spark/spark-2.1.1/spark-2.1.1-bin-hadoop2.7.tgz -P /usr/local/src

wget https://downloads.lightbend.com/scala/2.11.8/scala-2.11.8.tgz -P /usr/local/src

cd /usr/local/src

tar -zxvf spark-2.1.1-bin-hadoop2.7.tgz

tar -zxvf scala-2.11.8.tgz

mv spark-2.1.1-bin-hadoop2.7 /usr/local/spark

mv scala-2.11.8 /usr/local/scala
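The two download URLs follow a fixed pattern, so the version numbers can be factored out when scripting the download. This is a small sketch only: spark_url and scala_url are hypothetical helper names, and the patterns are copied from the two URLs above, so other versions are not guaranteed to exist at these locations.

```shell
# Hypothetical helpers: build the download URLs from version numbers.
spark_url() {  # $1 = Spark version, $2 = Hadoop profile
  echo "http://archive.apache.org/dist/spark/spark-$1/spark-$1-bin-hadoop$2.tgz"
}

scala_url() {  # $1 = Scala version
  echo "https://downloads.lightbend.com/scala/$1/scala-$1.tgz"
}

# Print the two URLs used in this guide.
spark_url 2.1.1 2.7
scala_url 2.11.8
```

A download would then look like `wget "$(spark_url 2.1.1 2.7)" -P /usr/local/src`.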

Edit the configuration files:

cd /usr/local/spark/conf

vim spark-env.sh

export SCALA_HOME=/usr/local/scala

export JAVA_HOME=/usr/local/jdk1.8.0_181

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

SPARK_MASTER_IP=master

SPARK_LOCAL_DIRS=/usr/local/spark

SPARK_DRIVER_MEMORY=1G

vim slaves

slave1

slave2
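The two files can also be written without an interactive editor, which helps when the setup has to be repeated. A minimal sketch, assuming the same values as above; write_spark_conf is a hypothetical helper that takes the target conf directory as its only argument.

```shell
# Hypothetical helper: write spark-env.sh and slaves into the directory given as $1.
write_spark_conf() {
  conf_dir="$1"
  mkdir -p "$conf_dir"
  # The quoted 'EOF' keeps $HADOOP_HOME literal, so it is expanded
  # later, when Spark sources spark-env.sh.
  cat > "$conf_dir/spark-env.sh" <<'EOF'
export SCALA_HOME=/usr/local/scala
export JAVA_HOME=/usr/local/jdk1.8.0_181
export HADOOP_HOME=/usr/local/hadoop
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
SPARK_MASTER_IP=master
SPARK_LOCAL_DIRS=/usr/local/spark
SPARK_DRIVER_MEMORY=1G
EOF
  # One worker hostname per line.
  printf '%s\n' slave1 slave2 > "$conf_dir/slaves"
}
```

For this guide the call would be `write_spark_conf /usr/local/spark/conf`.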

Configure environment variables:

# On master, slave1, and slave2

vim ~/.bashrc

export SPARK_HOME=/usr/local/spark

export PATH=$PATH:$SPARK_HOME/bin

# Reload the environment variables

source ~/.bashrc
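If this step is scripted instead of edited by hand, running it twice should not duplicate the entries. The sketch below shows one way to do that; append_once is a hypothetical helper, not part of Spark or the shell.

```shell
# Hypothetical helper: append a line to a file only if it is not already there.
append_once() {  # $1 = file, $2 = exact line to add
  touch "$1"
  # -x matches whole lines only, -F treats the line as a fixed string.
  grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}
```

Usage on each node: `append_once ~/.bashrc 'export SPARK_HOME=/usr/local/spark'` followed by `append_once ~/.bashrc 'export PATH=$PATH:$SPARK_HOME/bin'`. The single quotes keep the variables unexpanded until the file is sourced.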

Copy the installation to the other nodes:

scp -r /usr/local/spark root@slave1:/usr/local/spark

scp -r /usr/local/spark root@slave2:/usr/local/spark

scp -r /usr/local/scala root@slave1:/usr/local/scala

scp -r /usr/local/scala root@slave2:/usr/local/scala
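With more workers, the copy commands are easier to generate than to type. The following sketch prints the same four scp commands in the same order; copy_cmds is a hypothetical helper, and it only prints the commands so they can be checked first.

```shell
# Hypothetical helper: print one scp command per directory and worker.
# Nothing is copied until the output is piped to `sh`.
copy_cmds() {  # arguments: worker hostnames
  for dir in /usr/local/spark /usr/local/scala; do
    for host in "$@"; do
      echo "scp -r $dir root@$host:$dir"
    done
  done
}

copy_cmds slave1 slave2
```

Once the printed commands look right, `copy_cmds slave1 slave2 | sh` runs them.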

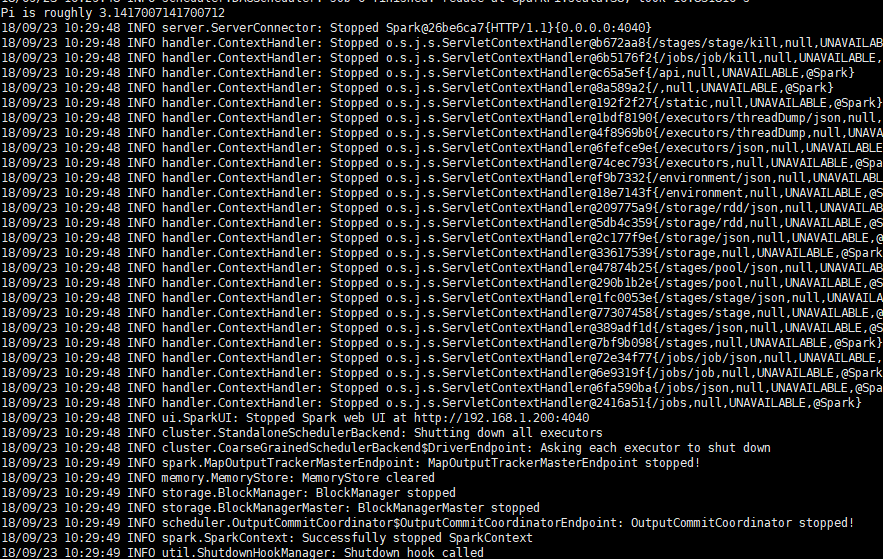

Start the cluster:

/usr/local/spark/sbin/start-all.sh

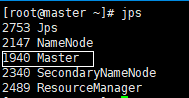

Cluster status:

#Master

#slave1

#slave2

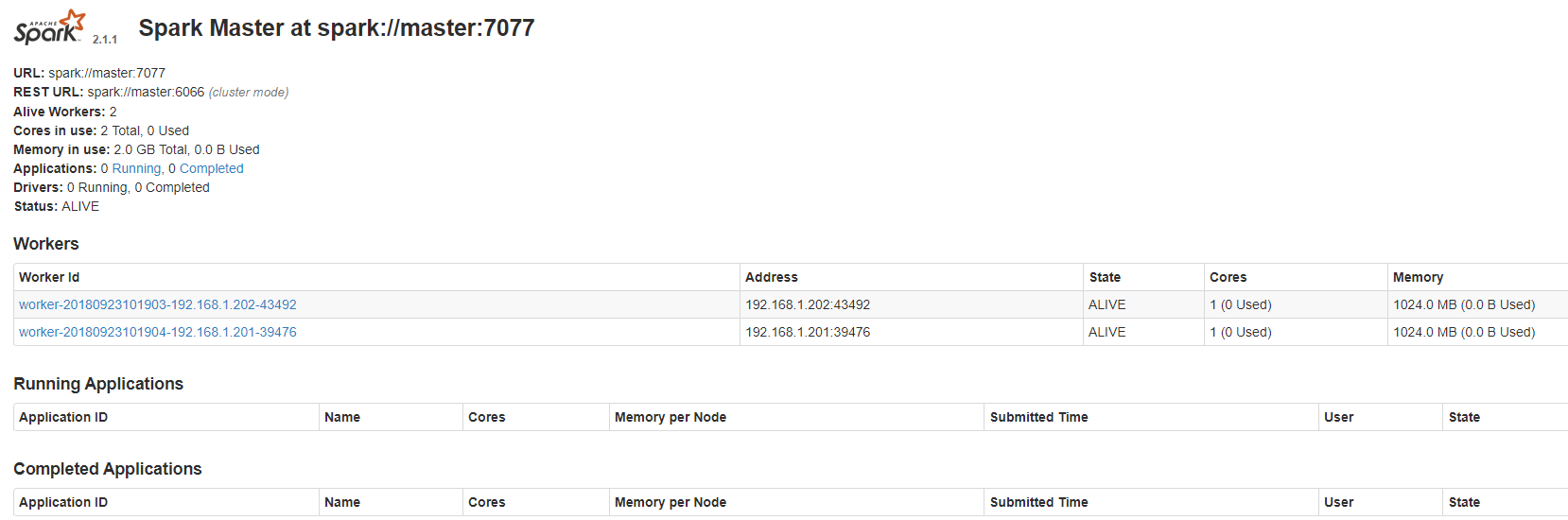
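The original does not spell out the command used for this check. One common approach, assumed here, is the JDK's jps tool: after start-all.sh, a standalone cluster runs a Master process on the master node and a Worker process on each node listed in the slaves file. The helper below, has_daemon (a hypothetical name), reads jps-style output and reports whether a given daemon is present.

```shell
# Hypothetical helper: succeed if jps-style output on stdin lists the daemon named in $1.
# jps prints one "<pid> <main class name>" pair per line.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

On the master this could be used as `jps | has_daemon Master && echo "Master is running"`, and on each worker as `jps | has_daemon Worker && echo "Worker is running"`.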

Monitoring web UI:

http://master:8080

Verification:

# Local mode

run-example --master local[2] SparkPi 10

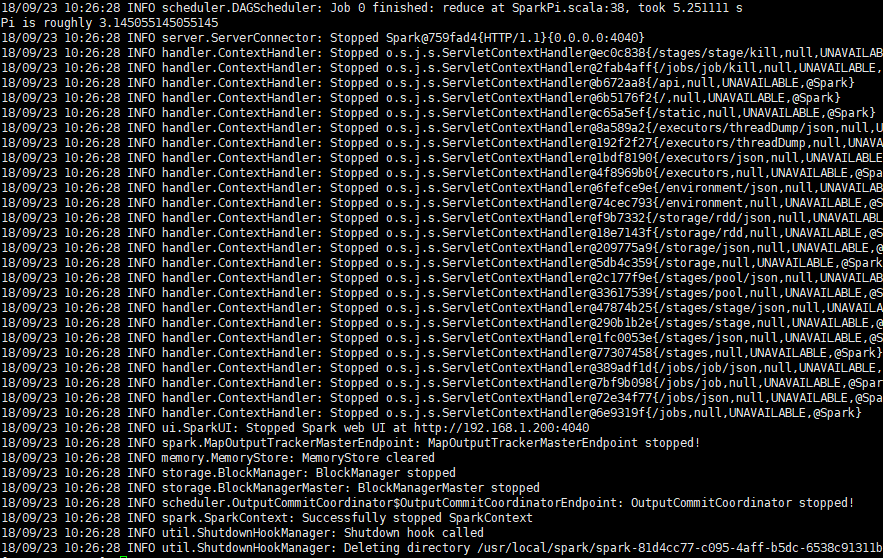

# Cluster (Standalone)

spark-submit --class org.apache.spark.examples.SparkPi --master spark://master:7077 /usr/local/spark/examples/jars/spark-examples_2.11-2.1.1.jar 100
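SparkPi reports its estimate on standard output as a single line beginning with "Pi is roughly", while Spark's log messages go to standard error by default. A small sketch for pulling out just the number; pi_value is a hypothetical helper, and it assumes the driver runs on the submitting machine (the default client deploy mode), since in cluster deploy mode the line ends up in the driver's log instead.

```shell
# Hypothetical helper: print only the number from SparkPi's result line on stdin.
pi_value() {
  sed -n 's/^Pi is roughly //p'
}
```

Usage: append `2>/dev/null | pi_value` to the spark-submit command above.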

# Cluster

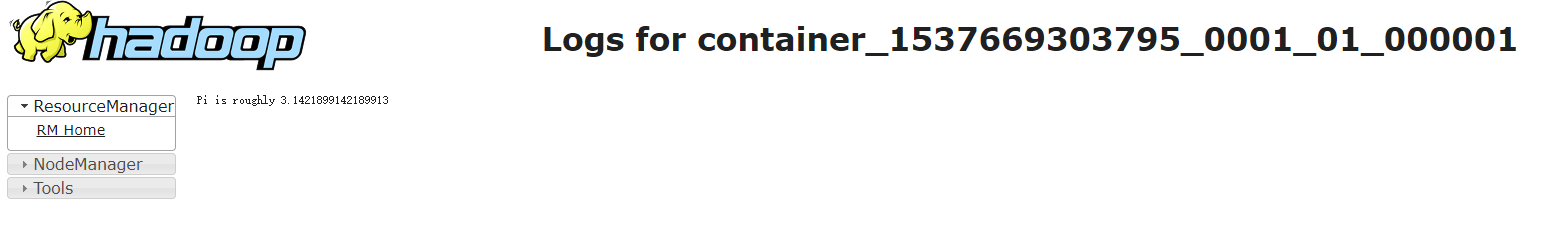

Spark on Yarn

spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster /usr/local/spark/examples/jars/spark-examples_2.11-2.1.1.jar 100