BigQuery nginx log alerts

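Requirement: from the nginx logs on k8s, find requests whose status code is 500 or above; when a group of such requests numbers more than 10, send a DingTalk alert.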
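The rule can be sketched in plain Python before moving it into SQL. This is only an illustration: `find_alerts` and the sample entries are hypothetical, and it assumes "status 500 or above" (the script's SQL uses `>= 500`) and grouping by domain, path, and status as that SQL does.

```python
from collections import Counter


def find_alerts(entries, min_status=500, min_count=10):
    """Return (vhost, path, status, count) for every group that breaches the rule."""
    # Count only entries whose status is at or above the threshold,
    # grouped by (vhost, path, status).
    counts = Counter(
        (e["vhost"], e["path"], e["status"])
        for e in entries
        if e["status"] >= min_status
    )
    # Keep groups with strictly more than min_count hits.
    return [
        (vhost, path, status, n)
        for (vhost, path, status), n in sorted(counts.items())
        if n > min_count
    ]


# Hypothetical sample: 11 x 502 (alerts), 50 x 200 (ignored), 10 x 500 (not above 10).
sample = (
    [{"vhost": "a.example.com", "path": "/api", "status": 502}] * 11
    + [{"vhost": "a.example.com", "path": "/ok", "status": 200}] * 50
    + [{"vhost": "b.example.com", "path": "/x", "status": 500}] * 10
)
print(find_alerts(sample))
```

Note that "more than 10" is strict, so a group with exactly 10 hits does not alert.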

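(1) On GCP, the nginx logs all land in the Logging service. A log router (sink) streams them into BigQuery; a script then runs once a minute, fetches the logs from the last 5 minutes, and sends a DingTalk message if any matching rows exist: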

Python script:

#!/usr/bin/env python

import base64
import datetime
import hashlib
import hmac
import json
import os
import time
import urllib.parse

import requests
from google.cloud import bigquery as bq

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "./gcloud_keys.json"

client = bq.Client()

URL = "https://oapi.dingtalk.com/robot/send?access_token=xxxxx"
secret = "SEC8735cbca2xxxxxx"

QUERY = """
SELECT CC.*
FROM (
    SELECT ma.jsonpayload.vhost, ma.jsonpayload.path, ma.jsonpayload.status, count(*) cnt
    FROM `xxxxxx.dc_logs.stdout_*` ma
    WHERE datetime(ma.timestamp, '+00:00') >= DATE_ADD(datetime(CURRENT_TIMESTAMP(), '+00:00'), interval -4 MINUTE)
      AND ma.jsonpayload.status >= 500
      AND ma.jsonpayload.vhost <> 'nats.dcfx.co.id'
    GROUP BY 1, 2, 3
) CC
WHERE CC.CNT > 10
"""


def get_message():
    webhook = get_webhook(1)
    query_job = client.query(QUERY)  # API request
    res = query_job.result()  # Waits for query to finish
    headers = {"Content-Type": "application/json"}
    # Send one alert per (vhost, path, status) group returned by the query.
    # If the query returns no rows, nothing is sent.
    for row in res:
        url = str(row.vhost)
        path = str(row.path)
        cnt = str(row.cnt)
        code = str(int(row.status))
        print(url)
        data = {
            "msgtype": "markdown",
            "markdown": {
                "title": "NGINX log alert" + "....",
                "text": "<font color=#FF0000 size=3>Requests in the last 5 minutes:</font> "
                        + "\n\nDomain: " + url
                        + "\n\n>Path: " + path
                        + " \n\n> Status code: " + code
                        + "\n\n>Request count: " + cnt,
            },
            "at": {"atMobiles": [123456], "isAtAll": False},
        }
        requests.post(url=webhook, data=json.dumps(data), headers=headers)
        print(data)


def get_timestamp_sign():
    # DingTalk signing: HMAC-SHA256 over "timestamp\nsecret", keyed with the secret,
    # then base64-encoded and URL-encoded.
    timestamp = str(round(time.time() * 1000))
    secret_enc = secret.encode("utf-8")
    string_to_sign = "{}\n{}".format(timestamp, secret)
    string_to_sign_enc = string_to_sign.encode("utf-8")
    hmac_code = hmac.new(secret_enc, string_to_sign_enc, digestmod=hashlib.sha256).digest()
    sign = urllib.parse.quote_plus(base64.b64encode(hmac_code))
    return (timestamp, sign)


def get_signed_url():
    timestamp, sign = get_timestamp_sign()
    webhook = URL + "&timestamp=" + timestamp + "&sign=" + sign
    return webhook


def get_webhook(mode):
    if mode == 0:
        # keyword only
        webhook = URL
    elif mode == 1 or mode == 2:
        # keyword + signing, or keyword + signing + IP
        webhook = get_signed_url()
    else:
        webhook = ""
        print("error! mode: ", mode, " webhook : ", webhook)
    return webhook


if __name__ == "__main__":
    start_time = datetime.datetime.now()
    get_message()
    end_time = datetime.datetime.now()
    time_cost = end_time - start_time
    print("Script run time: " + str(time_cost).split(".")[0])
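The signing step is the part of the script that is easiest to get wrong, so it can help to isolate it. The following is a minimal sketch of the same computation with the timestamp passed in explicitly so the result is reproducible; `sign_webhook` and the `SECexample` secret are hypothetical names, not part of the original script.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def sign_webhook(base_url, secret, timestamp_ms=None):
    """Append timestamp and sign query parameters to a DingTalk robot URL."""
    if timestamp_ms is None:
        timestamp_ms = round(time.time() * 1000)  # milliseconds since the epoch
    timestamp = str(timestamp_ms)
    # HMAC-SHA256 over "timestamp\nsecret", keyed with the secret.
    string_to_sign = "{}\n{}".format(timestamp, secret).encode("utf-8")
    digest = hmac.new(secret.encode("utf-8"), string_to_sign, digestmod=hashlib.sha256).digest()
    # Base64-encode, then URL-encode so '+', '/' and '=' survive in a query string.
    sign = urllib.parse.quote_plus(base64.b64encode(digest))
    return "{}&timestamp={}&sign={}".format(base_url, timestamp, sign)


signed = sign_webhook(
    "https://oapi.dingtalk.com/robot/send?access_token=xxxxx",
    "SECexample",  # placeholder secret for illustration only
    timestamp_ms=1700000000000,
)
print(signed)
```

Passing a fixed `timestamp_ms` is only for testing; in production the current time should be used, since the signature is tied to the timestamp it was computed from.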

(2) Build it into a Docker image:

root@dba-ops:~/bigquery_alert# tree

.

├── Dockerfile

├── bq_alert.py

├── gcloud_keys.json

└── requirements.txt

0 directories, 4 files

root@dba-ops:~/bigquery_alert# cat Dockerfile

FROM python:3.8

#creates work dir

WORKDIR /app

#copy python script to the container folder app

COPY bq_alert.py /app/bq_alert.py

COPY ./gcloud_keys.json /app/gcloud_keys.json

COPY ./requirements.txt /app/requirements.txt

RUN chmod +x /app/bq_alert.py

RUN pip install --no-cache-dir -r requirements.txt

#run the alert script when the container starts

ENTRYPOINT ["python", "/app/bq_alert.py"]

(3) k8s CronJob YAML:

# batch/v1beta1 was removed in Kubernetes 1.25; use batch/v1 on 1.21 and later
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: bq-alert
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      backoffLimit: 5
      template:
        spec:
          containers:
          - name: bq-alert
            image: dockerhub.xxxxx-internal.com/ops/xxx
            imagePullPolicy: IfNotPresent
            # overrides the image ENTRYPOINT; must point at the script copied in by the Dockerfile
            command: [/app/bq_alert.py]
          imagePullSecrets:
          - name: dockerhub
          restartPolicy: OnFailure

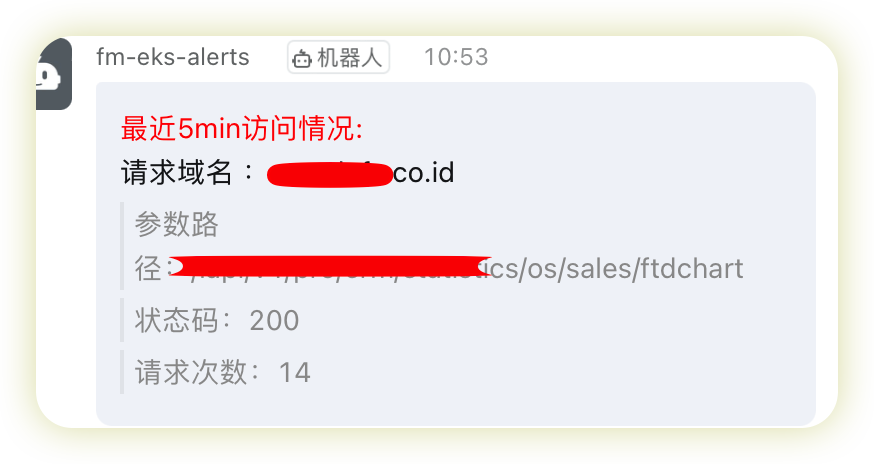

(4)最后运行正常,告警如下,我先测试200的,可以根据实际需求修改上面的脚本里面的sql:
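Since the thresholds are the part most likely to change (for example, testing with status 200), one option is to build the SQL from parameters instead of editing the string by hand. This is a sketch under stated assumptions, not the author's query: `build_query` is a hypothetical helper, it swaps in `TIMESTAMP_SUB` for the time filter (assuming `timestamp` is a TIMESTAMP column, as in Logging exports), and it omits the script's vhost exclusion.

```python
def build_query(table, window_minutes=5, min_status=500, min_count=10):
    """Build the alert SQL for a given table, time window, and thresholds."""
    # Coerce to int so only plain integers are interpolated into the SQL text.
    window_minutes = int(window_minutes)
    min_status = int(min_status)
    min_count = int(min_count)
    return f"""
SELECT vhost, path, status, cnt
FROM (
  SELECT ma.jsonpayload.vhost AS vhost,
         ma.jsonpayload.path AS path,
         ma.jsonpayload.status AS status,
         COUNT(*) AS cnt
  FROM `{table}` ma
  WHERE ma.timestamp >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL {window_minutes} MINUTE)
    AND ma.jsonpayload.status >= {min_status}
  GROUP BY 1, 2, 3
)
WHERE cnt > {min_count}
""".strip()


# Production rule: status >= 500, more than 10 hits in the last 5 minutes.
print(build_query("xxxxxx.dc_logs.stdout_*"))

# Test rule: lower the status threshold to 200 to confirm the alert path works.
test_sql = build_query("xxxxxx.dc_logs.stdout_*", min_status=200)
```

The table name is interpolated as-is, so it should come from trusted configuration only, never from user input.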

业余经济爱好者
