Hive入门操作

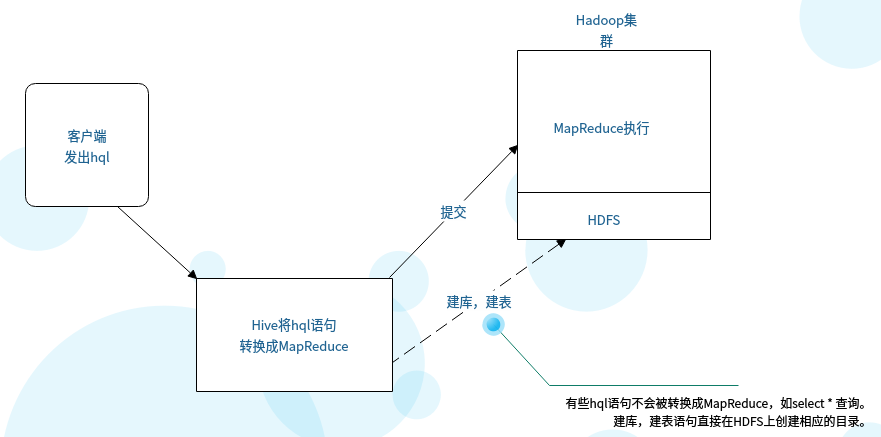

Hive是基于Hadoop的一个数据仓库工具,可以将结构化的数据文件映射为一张数据库表,并提供类SQL查询功能。本文描述了HIve的一些基本操作,如有错误之处还请指出。

常用语法

#显示相关信息

show tables;

show databases;

show partitions;

show functions;

desc extended table_name;

desc formatted table_name;

#创建库

create database test_db;

#删除库

drop database 库名;

#删除表

drop table 表名;

#重命名表名

ALTER TABLE table_name RENAME TO new_table_name;

#清空表数据

truncate table 表名;

建表语句

CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name

[(col_name data_type [COMMENT col_comment], ...)]

[COMMENT table_comment]

[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]

[CLUSTERED BY (col_name, col_name, ...)

[SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]

[ROW FORMAT row_format]

[STORED AS file_format]

[LOCATION hdfs_path]

创建内部表

create table if not exists my_tb(id int,name string)

row format delimited fields terminated by ',';

创建外部表

#创建外部表要指明表的数据所在路径

create table if not exists my_ex_tb(id int,name string)

row format delimited fields terminated by ','

location 'hdfs://192.168.38.3:9000/externdb/my_ex_tb/';

在删除表的时候,内部表的元数据和数据会被一起删除,而外部表只删除元数据,不删除数据。

加载数据到表目录下

#在表的最后插入或覆盖整个表的数据(into/overwrite)

load data local inpath '/root/1.txt' into(overwrite) table my_ex_tb;

创建分区表

create table if not exists my_par_tb(id int,name string)

partitioned by(country string)

row format delimited fields terminated by ',';

load data local inpath '/root/1.txt' into table my_par_tb partition(country='China');

load data local inpath '/root/1.txt.us' into table my_par_tb partition(country='US');

#1.txt中的数据

1,张三

2,李四

3,王五

#1.txt.us中的数据

1,张三

2,李四

3,王五

#select * from my_par_tb显示的数据

1 张三 China

2 李四 China

3 王五 China

1 张三 US

2 李四 US

3 王五 US

#查某个分区里的数据

select * from my_par_tb where country='China'

1 张三 China

2 李四 China

3 王五 China

添加删除分区

#添加分区

alter table my_par_tb add partition(country='Eng') partition(country='Ame');

#删除分区

alter table my_par_tb drop partition(country='Eng') partition(country='Ame');

#显示表中的分区

show partitions my_par_tb;

country=China

country=US

创建分桶表

create table if not exists my_buck_tb(id int,name string)

clustered by(id) sorted by(id)

into 4 buckets

row format delimited fields terminated by ',';

#指定开启分桶

set hive.enforce.bucketing=true;

#分了几个桶就设置几个reduce,将从其他表中查出来多个文件,分表放入到多个桶里。

set mapreduce.job.reduces=4;

#从my_tb表中查出数据插入到分桶表里

insert into table my_buck_tb

#指定map输出的数据根据id去分区,排序(cluster by等价于distribute by+sort by的效果)

select id,name from my_tb cluster by(id);

保存查询结果

默认情况下查询结果显示在屏幕上,可以将查询结果保存到表里。

#将查询结果保存到一张新创建的表中

create table tmp_tb as select * from my_tb;

#将查询结果保存到一张已经存在的表中

insert into table tmp_tb select * from my_tb;

#将查询结果保存到指定目录下(本地或hdfs上)

#本地

insert overwrite local directory '/root/out_tb/'

select * from my_tb;

#hdfs

insert overwrite directory '/out_tb/'

select * from my_tb;

join操作

a表数据:

1,张三

2,李四

3,c

4,a

5,e

6,r

b表数据:

1,绿间

3,青峰

4,黑子

9,红发

建表:

create table a(id int,name string)

row format delimited fields terminated by ',';

create table b(id int,name string)

row format delimited fields terminated by ',';

导入数据:

load data local inpath '/root/a.txt' into table a;

load data local inpath '/root/b.txt' into table b;

#内连接(交集)

select * from a inner join b on a.id=b.id;

+-------+---------+-------+---------+--+

| a.id | a.name | b.id | b.name |

+-------+---------+-------+---------+--+

| 1 | 张三 | 1 | 绿间 |

| 3 | c | 3 | 青峰 |

| 4 | a | 4 | 黑子 |

+-------+---------+-------+---------+--+

#左连接

select * from a left join b on a.id=b.id;

+-------+---------+-------+---------+--+

| a.id | a.name | b.id | b.name |

+-------+---------+-------+---------+--+

| 1 | 张三 | 1 | 绿间 |

| 2 | 李四 | NULL | NULL |

| 3 | c | 3 | 青峰 |

| 4 | a | 4 | 黑子 |

| 5 | e | NULL | NULL |

| 6 | r | NULL | NULL |

+-------+---------+-------+---------+--+

#右连接

select * from a right join b on a.id=b.id;

+-------+---------+-------+---------+--+

| a.id | a.name | b.id | b.name |

+-------+---------+-------+---------+--+

| 1 | 张三 | 1 | 绿间 |

| 3 | c | 3 | 青峰 |

| 4 | a | 4 | 黑子 |

| NULL | NULL | 9 | 红发 |

+-------+---------+-------+---------+--+

#全连接

select * from a full outer join b on a.id=b.id;

+-------+---------+-------+---------+--+

| a.id | a.name | b.id | b.name |

+-------+---------+-------+---------+--+

| 1 | 张三 | 1 | 绿间 |

| 2 | 李四 | NULL | NULL |

| 3 | c | 3 | 青峰 |

| 4 | a | 4 | 黑子 |

| 5 | e | NULL | NULL |

| 6 | r | NULL | NULL |

| NULL | NULL | 9 | 红发 |

+-------+---------+-------+---------+--+

#左半连接(内连接的结果中只取左边的表的数据)

select * from a left semi join b on a.id = b.id;

+-------+---------+--+

| a.id | a.name |

+-------+---------+--+

| 1 | 张三 |

| 3 | c |

| 4 | a |

+-------+---------+--+

select查询语句

SELECT [ALL | DISTINCT] select_expr, select_expr, ...

FROM table_name[..join..on(a.id=b.id)]

[WHERE where_condition]

[GROUP BY col_list [HAVING condition]]

[CLUSTER BY col_list

| [DISTRIBUTE BY col_list] [SORT BY| ORDER BY col_list]

]

[LIMIT number]

#指定map输出的数据根据id去分区,排序(cluster by等价于distribute by+sort by的效果)

select id,name from my_tb cluster by(id);

自定义函数

hive内置函数

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF

pom文件

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.xiaojie.mm</groupId>

<artifactId>my_hive</artifactId>

<version>0.0.1-SNAPSHOT</version>

<properties>

<hadoop.version>2.6.5</hadoop.version>

<hive.version>1.2.1</hive.version>

</properties>

<dependencies>

<!-- Hadoop -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.5</version>

</dependency>

<!-- Hive -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>1.2.1</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>1.2.1</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-pdk</artifactId>

<version>0.10.0</version>

</dependency>

<dependency>

<groupId>javax.jdo</groupId>

<artifactId>jdo2-api</artifactId>

<version>2.3-eb</version>

</dependency>

<dependency>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

<version>1.1.1</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.7</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.7</version>

<scope>system</scope>

<systemPath>/home/miao/apps/install/jdk1.7.0_45/lib/tools.jar</systemPath>

</dependency>

</dependencies>

</project>

自定义将大写转为小写的方法

package com.xiaojie.mm;

import org.apache.hadoop.hive.ql.exec.UDF;

public class ToLower extends UDF{

// 重载该方法

public String evaluate(String field) {

return field.toLowerCase();

}

}

导出jar包,并放到hive所在的机器上

scp tolower.jar mini1:/root/apps/

hive客户端添加自定义函数

#第一步

add JAR /root/apps/tolower.jar;

#第二步 引号里是自定义方法的全名(临时方法,只在该回话窗口有效)

create temporary function tolower as 'com.xiaojie.mm.ToLower';

#第三步使用

select * from a;

+-------+---------+--+

| a.id | a.name |

+-------+---------+--+

| 7 | AAAAA |

| 1 | 张三 |

| 2 | 李四 |

| 3 | c |

| 4 | a |

| 5 | e |

| 6 | r |

+-------+---------+--+

select id,tolower(name) from a;

+-----+--------+--+

| id | _c1 |

+-----+--------+--+

| 7 | aaaaa |

| 1 | 张三 |

| 2 | 李四 |

| 3 | c |

| 4 | a |

| 5 | e |

| 6 | r |

+-----+--------+--+

自定义获取手机归属地

package com.xiaojie.mm;

import java.util.HashMap;

import org.apache.hadoop.hive.ql.exec.UDF;

public class GetProvince extends UDF{

public static HashMap<String,String> provinceMap = new HashMap<String,String>();

static {

provinceMap.put("183", "hangzhou");

provinceMap.put("186", "nanjing");

provinceMap.put("187", "suzhou");

provinceMap.put("188", "ningbo");

}

public String evaluate(int phonenumber) {

String phone_num = String.valueOf(phonenumber);

#取手机号码前三位

String phone = phone_num.substring(0, 3);

return provinceMap.get(phone)==null?"未知":provinceMap.get(phone);

}

}

原数据:

+----------------------+---------------------+--+

| flow_province.phone | flow_province.flow |

+----------------------+---------------------+--+

| 1837878 | 12m |

| 1868989 | 13m |

| 1878989 | 14m |

| 1889898 | 15m |

| 1897867 | 16m |

| 1832323 | 78m |

| 1858767 | 88m |

| 1862343 | 99m |

| 1893454 | 77m |

+----------------------+---------------------+--+

调用自定义方法后:

select phone,getpro(phone),flow from flow_province;

+----------+-----------+-------+--+

| phone | _c1 | flow |

+----------+-----------+-------+--+

| 1837878 | hangzhou | 12m |

| 1868989 | nanjing | 13m |

| 1878989 | suzhou | 14m |

| 1889898 | ningbo | 15m |

| 1897867 | 未知 | 16m |

| 1832323 | hangzhou | 78m |

| 1858767 | 未知 | 88m |

| 1862343 | nanjing | 99m |

| 1893454 | 未知 | 77m |

+----------+-----------+-------+--+

自定义解析json格式的数据

#创建表

create table json_tb(line string);

#导入数据

load data local inpath '/root/test_data/a.json' into table json_tb;

#显示原数据

select line from my_tb limit 10;

+----------------------------------------------------------------+--+

| json_tb.line |

+----------------------------------------------------------------+--+

| {"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"} |

| {"movie":"661","rate":"3","timeStamp":"978302109","uid":"1"} |

| {"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"} |

| {"movie":"3408","rate":"4","timeStamp":"978300275","uid":"1"} |

| {"movie":"2355","rate":"5","timeStamp":"978824291","uid":"1"} |

| {"movie":"1197","rate":"3","timeStamp":"978302268","uid":"1"} |

| {"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"} |

| {"movie":"2804","rate":"5","timeStamp":"978300719","uid":"1"} |

| {"movie":"594","rate":"4","timeStamp":"978302268","uid":"1"} |

| {"movie":"919","rate":"4","timeStamp":"978301368","uid":"1"} |

+----------------------------------------------------------------+--+

#自定义函数

package com.xiaojie.mm;

import org.apache.hadoop.hive.ql.exec.UDF;

import parquet.org.codehaus.jackson.map.ObjectMapper;

public class JsonParse extends UDF{

public String evaluate(String jsonLine) {

ObjectMapper objectMapper = new ObjectMapper();

try {

MovieBean bean = objectMapper.readValue(jsonLine, MovieBean.class);

return bean.toString();

}catch(Exception e){

}

return "";

}

}

package com.xiaojie.mm;

public class MovieBean {

// 电影id

private String movie;

// 电影评分

private String rate;

// 评分时间

private String timeStamp;

// 用户id

private String uid;

public String getMovie() {

return movie;

}

public void setMovie(String movie) {

this.movie = movie;

}

public String getRate() {

return rate;

}

public void setRate(String rate) {

this.rate = rate;

}

public String getTimeStamp() {

return timeStamp;

}

public void setTimeStamp(String timeStamp) {

this.timeStamp = timeStamp;

}

public String getUid() {

return uid;

}

public void setUid(String uid) {

this.uid = uid;

}

@Override

public String toString() {

return this.movie + "\t" +this.rate + "\t" + this.timeStamp + "\t" + this.uid;

}

}

#打jar包上传到hive所在机器,创建函数

add JAR /root/test_data/json_parse.jar;

create temporary function json_parse as 'com.xiaojie.mm.JsonParse';

#使用自定义的json解析函数

select json_parse(line) from json_tb limit 10;

+---------------------+--+

| _c0 |

+---------------------+--+

| 1193 5 978300760 1 |

| 661 3 978302109 1 |

| 914 3 978301968 1 |

| 3408 4 978300275 1 |

| 2355 5 978824291 1 |

| 1197 3 978302268 1 |

| 1287 5 978302039 1 |

| 2804 5 978300719 1 |

| 594 4 978302268 1 |

| 919 4 978301368 1 |

+---------------------+--+

#将json解析的数据保存到一张新创建的表里

create table json_parse_tb as

select split(json_parse(line),'\t')[0] as movieid,

split(json_parse(line),'\t')[1] as rate,

split(json_parse(line),'\t')[2] as time,

split(json_parse(line),'\t')[3] as userid

from json_tb limit 100;

#内置json函数

select get_json_object(line,'$.movie') as moiveid,

get_json_object(line,'$.rate') as rate,

get_json_object(line,'$.timeStamp') as time,

get_json_object(line,'$.uid') as userid

from json_tb limit 10;

Transform(调用自定义脚本)

Hive的 TRANSFORM 关键字提供了在SQL中调用自写脚本的功能,适合实现Hive中没有的功能又不想写UDF的情况。

自定义python脚本(vim time_parse.py)

#!/bin/python

import sys

import datetime

for line in sys.stdin:

line = line.strip()

movieid, rate, unixtime,userid = line.split('\t')

weekday = datetime.datetime.fromtimestamp(float(unixtime)).isoweekday()

print '\t'.join([movieid, rate, str(weekday),userid])

将py文件导入到hive的工作目录下

add file time_parse.py

使用transform调用自定义的py代码

create TABLE json_parse_time_tb as

SELECT

#根据transform括号中的参数,将json_parse_tb表的对应数据取出

TRANSFORM (movieid, rate, time, userid)

USING 'python time_parse.py'

AS (movieid, rate, weekday,userid)

FROM json_parse_tb;

查看新表数据

select * from json_parse_time_tb;

+-----------------------------+--------------------------+-----------------------------+----------------------------+--+

| json_parse_time_tb.movieid | json_parse_time_tb.rate | json_parse_time_tb.weekday | json_parse_time_tb.userid |

+-----------------------------+--------------------------+-----------------------------+----------------------------+--+

| 1690 | 3 | 1 | 2 |

| 589 | 4 | 1 | 2 |

| 3471 | 5 | 1 | 2 |

| 1834 | 4 | 1 | 2 |

| 2490 | 3 | 1 | 2 |

| 2278 | 3 | 1 | 2 |

| 110 | 5 | 1 | 2 |

| 3257 | 3 | 1 | 2 |

| 3256 | 2 | 1 | 2 |

| 3255 | 4 | 1 | 2 |

+-----------------------------+--------------------------+-----------------------------+----------------------------+--+

案例

原数据(用户名,月份,点击量)

A,2015-01,5

A,2015-01,15

B,2015-01,5

A,2015-01,8

B,2015-01,25

A,2015-01,5

A,2015-02,4

A,2015-02,6

B,2015-02,10

B,2015-02,5

求每个人每个月的点击量,以及点击量累计

第一步:创建表,导入数据

#建表

create table click_tb(username string,month string,click int)

row format delimited fields terminated by ',';

#导入数据

load data local inpath ‘/root/test_data/click.txt’ into click_tb;

第二步:求每个用户每个月的点击量

select username,month,sum(click) as click_count from click_tb group by username,month;

+-----------+----------+--------------+--+

| username | month | click_count |

+-----------+----------+--------------+--+

| A | 2015-01 | 33 |

| A | 2015-02 | 10 |

| B | 2015-01 | 30 |

| B | 2015-02 | 15 |

+-----------+----------+--------------+--+

第三步:自己和自己内连接(求交集)

select * from

(select username,month,sum(click) as click_count from click_tb group by username,month) A

inner join

(select username,month,sum(click) as click_count from click_tb group by username,month) B

on

A.username=B.username;

+-------------+----------+----------------+-------------+----------+----------------+--+

| a.username | a.month | a.click_count | b.username | b.month | b.click_count |

+-------------+----------+----------------+-------------+----------+----------------+--+

| A | 2015-01 | 33 | A | 2015-01 | 33 |

| A | 2015-01 | 33 | A | 2015-02 | 10 |

| A | 2015-02 | 10 | A | 2015-01 | 33 |

| A | 2015-02 | 10 | A | 2015-02 | 10 |

| B | 2015-01 | 30 | B | 2015-01 | 30 |

| B | 2015-01 | 30 | B | 2015-02 | 15 |

| B | 2015-02 | 15 | B | 2015-01 | 30 |

| B | 2015-02 | 15 | B | 2015-02 | 15 |

+-------------+----------+----------------+-------------+----------+----------------+--+

第四步:求出最终所需结果

select a.username,a.month,min(a.click_count) as click_count,sum(b.click_count) as sum_count from

(select username,month,sum(click) as click_count from click_tb group by username,month) a

inner join

(select username,month,sum(click) as click_count from click_tb group by username,month) b

on

A.username=B.username

where b.month<=a.month

group by a.username,a.month

order by a.username,a.month;

+-------------+----------+--------------+------------+--+

| a.username | a.month | click_count | sum_count |

+-------------+----------+--------------+------------+--+

| A | 2015-01 | 33 | 33 |

| A | 2015-02 | 10 | 43 |

| B | 2015-01 | 30 | 30 |

| B | 2015-02 | 15 | 45 |

+-------------+----------+--------------+------------+--+

作者:py小杰

本文版权归作者和博客园共有,欢迎转载,但未经作者同意必须保留此段声明,且在文章页面明显位置给出原文连接,否则保留追究法律责任的权利。

浙公网安备 33010602011771号

浙公网安备 33010602011771号