Python Web Scraping Study Notes (Part 2)

GET + passing parameters as a dictionary:

Code:

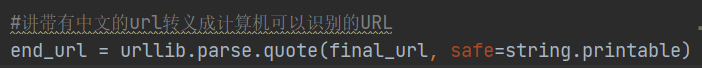

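    import urllib.request
    import urllib.parse
    import string


    def get_params():
        # End the base URL at "?" and let urlencode() supply every key=value pair
        # (a trailing "wd=" here would produce "wd=wd=...")
        url = "http://www.baidu.com/s?"
        params = {
            "wd": "中文",
            "key": "zhang",
            "value": "san",
        }
        # urlencode() builds the query string and percent-encodes the Chinese text
        str_params = urllib.parse.urlencode(params)
        print(str_params)
        final_url = url + str_params
        # Escape any non-ASCII characters left in the URL so it can be sent
        end_url = urllib.parse.quote(final_url, safe=string.printable)
        response = urllib.request.urlopen(end_url)
        data = response.read().decode("utf-8")
        print(data)


    get_params()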

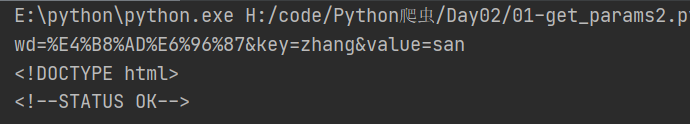

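Result:

GET parameters:

(1) Chinese characters raise an error:

urllib encodes the request URL as ASCII, which has no Chinese characters, so Chinese text in a URL must be percent-encoded first:

urllib.parse.quote(params, safe=string.printable)

(2) Passing parameters as a dictionary:

urllib.parse.urlencode()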
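
A minimal sketch of what the two helpers above produce. The sample values mirror the ones in the code earlier, and nothing is sent over the network:

```python
import string
import urllib.parse

# urlencode() turns a dict into a query string and percent-encodes each value
params = {"wd": "中文", "key": "zhang"}
query = urllib.parse.urlencode(params)
print(query)  # wd=%E4%B8%AD%E6%96%87&key=zhang

# quote() escapes a whole URL; safe=string.printable leaves every printable
# ASCII character (":", "/", "?", "=", ...) untouched and encodes only the rest
raw_url = "http://www.baidu.com/s?wd=中文"
encoded_url = urllib.parse.quote(raw_url, safe=string.printable)
print(encoded_url)  # http://www.baidu.com/s?wd=%E4%B8%AD%E6%96%87
```

In practice urlencode() alone is usually enough for query parameters; quote() is the fallback when the Chinese text is already embedded in a URL string.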

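Note:

POST requests:

urllib.request.urlopen(url, data=b"data for the server to receive")

Passing data turns the request into a POST; the value must be bytes, not str.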
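
As a rough sketch of preparing POST data, the snippet below builds the request object without sending it. The URL and form fields are hypothetical placeholders, not taken from a real service:

```python
import urllib.parse
import urllib.request

# Hypothetical endpoint and form fields, for illustration only
url = "http://example.com/login"
form = {"key": "zhang", "value": "san"}

# The body must be bytes, so encode the urlencoded string
body = urllib.parse.urlencode(form).encode("utf-8")
request = urllib.request.Request(url, data=body)

print(request.get_method())  # POST
print(request.data)          # b'key=zhang&value=san'
# urllib.request.urlopen(request) would then send it
```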

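header:

User-Agent:

(1) Simulate a request from a real browser (for example, batch searches on Baidu); copy the value from the browser's Inspect Element panel

(2) request.add_header() adds header data dynamically

(3) Response headers: response.headers

(4) Create a request object: urllib.request.Request(url)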
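
The request-side points above can be tried without touching the network. A minimal sketch, where the User-Agent value is a shortened placeholder rather than a full browser string:

```python
import urllib.request

url = "http://www.baidu.com/"
# Placeholder User-Agent, shortened for readability
user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Option A: pass the headers when the request object is created
by_dict = urllib.request.Request(url, headers={"User-Agent": user_agent})

# Option B: create the request first, then add the header dynamically
by_call = urllib.request.Request(url)
by_call.add_header("User-Agent", user_agent)

# Both routes leave the same headers on the request
print(by_dict.header_items() == by_call.header_items())  # True
```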

Test (response headers):

Code:

    import urllib.request


    def load_baidu():
        url = "http://www.baidu.com/"
        response = urllib.request.urlopen(url)
        print(response)
        # Response headers
        print(response.headers)


    load_baidu()

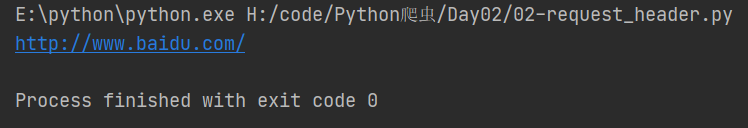

Output:

E:\python\python.exe H:/code/Python爬虫/Day02/02-request_header.py

<http.client.HTTPResponse object at 0x000001F64CC88CA0>

Bdpagetype: 1

Bdqid: 0x9829fa7c000a56cf

Cache-Control: private

Content-Type: text/html;charset=utf-8

Date: Tue, 26 Jan 2021 06:35:11 GMT

Expires: Tue, 26 Jan 2021 06:34:11 GMT

P3p: CP=" OTI DSP COR IVA OUR IND COM "

P3p: CP=" OTI DSP COR IVA OUR IND COM "

Server: BWS/1.1

Set-Cookie: BAIDUID=A276C955F91E3B32F4D56ADC1EE37C59:FG=1; expires=Thu, 31-Dec-37 23:55:55 GMT; max-age=2147483647; path=/; domain=.baidu.com

Set-Cookie: BIDUPSID=A276C955F91E3B32F4D56ADC1EE37C59; expires=Thu, 31-Dec-37 23:55:55 GMT; max-age=2147483647; path=/; domain=.baidu.com

Set-Cookie: PSTM=1611642911; expires=Thu, 31-Dec-37 23:55:55 GMT; max-age=2147483647; path=/; domain=.baidu.com

Set-Cookie: BAIDUID=A276C955F91E3B3283913B84A5B12CFA:FG=1; max-age=31536000; expires=Wed, 26-Jan-22 06:35:11 GMT; domain=.baidu.com; path=/; version=1; comment=bd

Set-Cookie: BDSVRTM=0; path=/

Set-Cookie: BD_HOME=1; path=/

Set-Cookie: H_PS_PSSID=33425_33507_33437_33257_33273_31253_33395_33398_33321_33265; path=/; domain=.baidu.com

Traceid: 1611642911060665933810964570178293749455

Vary: Accept-Encoding

Vary: Accept-Encoding

X-Ua-Compatible: IE=Edge,chrome=1

Connection: close

Transfer-Encoding: chunked

Process finished with exit code 0
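
response.headers is an http.client.HTTPMessage, so individual headers can be looked up by name. The sketch below parses a few lines copied from the output above instead of making a live request; building the message with email.message_from_string is an assumption made only to keep the example offline:

```python
import email
import http.client

# A few header lines copied from the output above
raw = (
    "Content-Type: text/html;charset=utf-8\n"
    "Server: BWS/1.1\n"
    "Set-Cookie: BDSVRTM=0; path=/\n"
    "Set-Cookie: BD_HOME=1; path=/\n"
    "\n"
)
# Build the same message type that response.headers uses
headers = email.message_from_string(raw, _class=http.client.HTTPMessage)

print(headers["Server"])              # BWS/1.1
print(headers["content-type"])        # lookups here ignore case
print(headers.get_content_charset())  # utf-8
print(headers.get_all("Set-Cookie"))  # every value of a repeated header
```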

Test (reading the request headers):

    import urllib.request


    def load_baidu():
        url = "http://www.baidu.com/"
        # Create the request object
        request = urllib.request.Request(url)
        # Send the request
        response = urllib.request.urlopen(request)
        # print(response)
        data = response.read().decode("utf-8")
        # Response headers
        # print(response.headers)
        # Headers set on the request object
        request_header = request.headers
        print(request_header)
        # Write as UTF-8 explicitly; the platform default encoding may not cover the page
        with open("02header.html", "w", encoding="utf-8") as f:
            f.write(data)


    load_baidu()

Test (adding request headers):

Code 1:

Method 1: read the headers yourself

Output 1:

E:\python\python.exe H:/code/Python爬虫/Day02/03-request_header_two.py

{'User-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36', '3ch0 - nu1l': 's1mpL3'}

Process finished with exit code 0

Code 2:

    import urllib.request


    def load_baidu():
        url = "http://www.baidu.com/"
        header = {
            # Browser version
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36",
            "3cH0 - Nu1L": "s1mpL3",
        }
        # Create the request object
        request = urllib.request.Request(url, headers=header)
        # Send the request (headers are not added here, because urlopen() has no parameter for them)
        response = urllib.request.urlopen(request)
        data = response.read().decode("utf-8")
        # Get the request headers (all of them)
        # request_headers = request.headers
        # print(request_headers)
        # Second way: print a single header by name
        request_headers = request.get_header("User-agent")
        print(request_headers)
        with open("02header.html", "w", encoding="utf-8") as f:
            f.write(data)


    load_baidu()

Output 2:

E:\python\python.exe H:/code/Python爬虫/Day02/03-request_header_two.py

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36

Process finished with exit code 0

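Note 1:

Comparing the two outputs:

The built-in get_header() returns only the value of the header you ask for, so the "3cH0 - Nu1L": "s1mpL3" entry from the dictionary does not appear.

Reading request.headers yourself returns every header.

Note 2:

In get_header(), only the first letter of the header name may be uppercase (the rest lowercase), as in "User-agent", because Request stores each header name in that capitalized form. With an all-lowercase name the return value is None.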
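
One way to avoid this pitfall is to normalize the name before looking it up. The helper below is a hypothetical convenience function (get_header_any_case is not part of urllib), and the User-Agent value is a placeholder:

```python
import urllib.request


def get_header_any_case(request, name, default=None):
    """Look up a request header regardless of how the caller cases the name."""
    # Request.add_header() stores names via str.capitalize(), so normalize
    # the lookup key the same way before calling get_header()
    return request.get_header(name.capitalize(), default)


request = urllib.request.Request(
    "http://www.baidu.com/",
    headers={"User-Agent": "demo-agent/1.0"},  # placeholder value
)
print(request.get_header("user-agent"))            # None
print(get_header_any_case(request, "user-agent"))  # demo-agent/1.0
print(get_header_any_case(request, "USER-AGENT"))  # demo-agent/1.0
```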

Code:

    import urllib.request


    def load_baidu():
        url = "http://www.baidu.com/"
        header = {
            # Browser version
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36",
            "3cH0 - Nu1L": "s1mpL3",
        }
        # Create the request object
        request = urllib.request.Request(url, headers=header)
        # Send the request (headers are not added here, because urlopen() has no parameter for them)
        response = urllib.request.urlopen(request)
        data = response.read().decode("utf-8")
        # Get the request headers (all of them)
        # request_headers = request.headers
        # print(request_headers)
        # Second way: print a single header, this time with an all-lowercase name
        request_headers = request.get_header("user-agent")
        print(request_headers)
        with open("02header.html", "w", encoding="utf-8") as f:
            f.write(data)


    load_baidu()

Output:

E:\python\python.exe H:/code/Python爬虫/Day02/03-request_header_two.py

None

Process finished with exit code 0

Test (adding header information dynamically):

Code:

    import urllib.request


    def load_baidu():
        url = "http://www.baidu.com/"
        # Not used below; the header is added dynamically instead
        header = {
            # Browser version
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36",
            "3cH0 - Nu1L": "s1mpL3",
        }
        # Create the request object
        request = urllib.request.Request(url)
        # Add header information dynamically
        request.add_header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36")
        # Send the request
        response = urllib.request.urlopen(request)
        # print(response)
        data = response.read().decode("utf-8")
        # Response headers
        # print(response.headers)
        # Get the request headers
        request_header = request.headers
        print(request_header)
        with open("02header.html", "w", encoding="utf-8") as f:
            f.write(data)


    load_baidu()

Output:

E:\python\python.exe H:/code/Python爬虫/Day02/03-request_header_two.py

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36

Process finished with exit code 0

Test (getting the full URL):

Code:

    import urllib.request


    def load_baidu():
        url = "http://www.baidu.com/"
        # Not used below; the header is added dynamically instead
        header = {
            # Browser version
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36",
            "3cH0 - Nu1L": "s1mpL3",
        }
        # Create the request object
        request = urllib.request.Request(url)
        # Add header information dynamically
        request.add_header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.104 Safari/537.36")
        # Send the request
        response = urllib.request.urlopen(request)
        # print(response)
        data = response.read().decode("utf-8")
        # Get the full URL
        final_url = request.get_full_url()
        print(final_url)


    load_baidu()

Output:

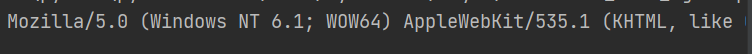

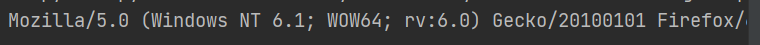
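
Besides get_full_url(), a Request object also exposes the parts of the URL it parsed. A small offline sketch, using a sample search URL chosen only for illustration:

```python
import urllib.request

# Sample URL for illustration; no request is sent
request = urllib.request.Request("http://www.baidu.com/s?wd=python")

print(request.get_full_url())  # http://www.baidu.com/s?wd=python
print(request.type)            # http
print(request.host)            # www.baidu.com
print(request.selector)        # /s?wd=python
```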

Test (random User-Agent):

This needs several User-Agent strings (search online for a User-Agent list).

Code:

    import urllib.request
    import random


    def load_baidu():
        url = "http://www.baidu.com"
        user_agent_list = [
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1",
            "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",
            "Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",
        ]
        # Each request can appear to come from a different browser
        random_user_agent = random.choice(user_agent_list)
        request = urllib.request.Request(url)
        # Add the matching request header (User-Agent)
        request.add_header("User-Agent", random_user_agent)
        # Send the request
        response = urllib.request.urlopen(request)
        # Print the User-Agent that was set on the request
        print(request.get_header("User-agent"))


    load_baidu()

Output:

The printed User-Agent varies from run to run.
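
If the same trick is needed for many URLs, it can be wrapped in a small helper. This is only a sketch: build_request and USER_AGENTS are hypothetical names, and the strings are a subset of the list in the code above:

```python
import random
import urllib.request

# Example User-Agent strings taken from the list in the code above
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",
    "Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",
]


def build_request(url, user_agents=USER_AGENTS):
    """Return a Request carrying a randomly chosen User-Agent."""
    request = urllib.request.Request(url)
    request.add_header("User-Agent", random.choice(user_agents))
    return request


# Each call may pick a different User-Agent; nothing is sent here
for _ in range(3):
    print(build_request("http://www.baidu.com").get_header("User-agent"))
```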

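IP proxies:

(1) Free IPs: short-lived, with a high failure rate

(2) Paid IPs: some of these stop working too

Proxy types:

Transparent proxy:

The target server knows our real IP

Anonymous proxy:

The target server does not know our real IP, but knows we are using a proxy

Elite (high-anonymity) proxy:

The target server knows neither our real IP nor that a proxy is in use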

handler:

The built-in urlopen() has no parameter for adding a proxy

So create the matching handler:

- Proxy handler: ProxyHandler

- Build an opener from the ProxyHandler: build_opener()

- opener.open(url) then sends the request
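
The three steps above can be sketched as follows. The proxy address is a placeholder from a documentation-only IP range, so the final open() call is left as a comment:

```python
import urllib.request

# Placeholder proxy address; replace it with a real proxy before sending
proxy = {"http": "203.0.113.10:8080"}

# 1. Proxy handler
proxy_handler = urllib.request.ProxyHandler(proxy)
# 2. Opener built from that handler
opener = urllib.request.build_opener(proxy_handler)
# 3. opener.open(url) would now route http:// requests through the proxy

print(proxy_handler.proxies)
print(type(opener).__name__)  # OpenerDirector
```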

Test (HTTPHandler):

Code:

    import urllib.request


    def handler_opener():
        # urlopen() has no option for adding a proxy, so we build that ourselves
        # SSL (Secure Sockets Layer) relies on digital certificates from a third-party CA
        # http: port 80
        # https: port 443
        # Why urlopen() can fetch data:
        # (1) it has handlers
        # (2) it sends the request through its own opener
        url = "https://www.cnblogs.com/3cH0-Nu1L/"
        # Create our own handler
        handler = urllib.request.HTTPHandler()
        # Create our own opener
        opener = urllib.request.build_opener(handler)
        # Send the request with the opener we created
        response = opener.open(url)
        data = response.read()
        print(response)
        print(data)


    handler_opener()

Output:

Note:

HTTPHandler() cannot add a proxy

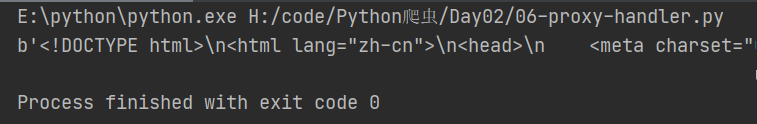

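Test (using a proxy IP: free IP):

Code:

    import urllib.request


    def create_proxy_handler():
        url = "https://www.cnblogs.com/3cH0-Nu1L/"
        # Add the proxy
        proxy = {
            # Free proxies need no credentials, just host:port
            "http": "104.131.109.66:8080",
            # The URL above is https, so it needs an "https" entry to go through the proxy
            "https": "104.131.109.66:8080",
        }
        # Proxy handler
        proxy_handler = urllib.request.ProxyHandler(proxy)
        # Create our own opener
        opener = urllib.request.build_opener(proxy_handler)
        # Send the request through the proxy IP
        data = opener.open(url).read()
        print(data)


    create_proxy_handler()

Output: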

Test (trying several proxies in turn):

Code:

    import urllib.request


    def proxy_user():
        proxy_list = [
            {"http": "104.131.109.66:8080"},
            {"http": "88.198.24.108:8080"},
            {"http": "96.113.165.182:3128"},
            {"http": "117.185.17.151:80"},
            {"http": "112.30.164.18:80"},
        ]
        for proxy in proxy_list:
            print(proxy)
            # Create a handler from the current proxy
            proxy_handler = urllib.request.ProxyHandler(proxy)
            # Create the opener
            opener = urllib.request.build_opener(proxy_handler)
            try:
                opener.open("http://www.baidu.com", timeout=1)
                # Printed when the request through this proxy succeeds
                print("s1mpL3")
            except Exception as e:
                # A dead or slow proxy ends up here
                print(e)


    proxy_user()

Output:

E:\python\python.exe H:/code/Python爬虫/Day02/07-random-user-proxy.py

{'http': '104.131.109.66:8080'}

s1mpL3

{'http': '88.198.24.108:8080'}

<urlopen error timed out>

{'http': '96.113.165.182:3128'}

s1mpL3

{'http': '117.185.17.151:80'}

s1mpL3

{'http': '112.30.164.18:80'}

<urlopen error timed out>

Process finished with exit code 0
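
The loop above tries every proxy in order. If the goal is instead to pick one proxy at random per request, a helper along these lines may work. random_proxy_opener and PROXY_LIST are hypothetical names, and the addresses are placeholders from a documentation-only range:

```python
import random
import urllib.request

# Placeholder addresses; substitute proxies that actually work
PROXY_LIST = [
    {"http": "203.0.113.10:8080"},
    {"http": "203.0.113.11:3128"},
]


def random_proxy_opener(proxy_list=PROXY_LIST):
    """Pick one proxy at random and return (proxy, opener)."""
    proxy = random.choice(proxy_list)
    handler = urllib.request.ProxyHandler(proxy)
    return proxy, urllib.request.build_opener(handler)


proxy, opener = random_proxy_opener()
print(proxy)
# opener.open("http://www.baidu.com", timeout=1) would send the request;
# keep it inside try/except, since free proxies fail often
```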

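Test (using a proxy IP: paid IP):

Code:

    import urllib.request


    # Sending through a paid proxy
    # 1. Carry the username and password with the request
    # 2. Send through a handler that can pass the proxy's authentication
    def money_proxy_user():
        # 1. Proxy IP, with the credentials embedded as username:password@host:port
        money_proxy = {
            "http": "username:passwd@192.168.12.1:8080"
        }
        # 2. Proxy handler
        proxy_handler = urllib.request.ProxyHandler(money_proxy)
        # 3. Build the opener from the handler
        opener = urllib.request.build_opener(proxy_handler)
        # 4. Send the request with open()
        opener.open("http://www.baidu.com/")


    money_proxy_user()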

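Second approach (password manager):

    import urllib.request


    # Sending through a paid proxy
    # 1. Carry the username and password with the request
    # 2. Send through a handler that can pass the proxy's authentication
    def money_proxy_user():
        # Second way to send through a paid proxy
        user_name = "abcname"
        passwd = "123456"
        proxy_money = "123.158.62.120:8080"
        # 2. Create a password manager and add the username and password
        password_manager = urllib.request.HTTPPasswordMgrWithDefaultRealm()
        # add_password() takes a URI, which is a broader concept than a URL
        # URL: uniform resource locator
        password_manager.add_password(None, proxy_money, user_name, passwd)
        # 3. Create the handler that authenticates against the proxy
        handler_auth_proxy = urllib.request.ProxyBasicAuthHandler(password_manager)
        # Route requests through the proxy; the auth handler alone does not do this
        proxy_handler = urllib.request.ProxyHandler({"http": proxy_money})
        # 4. Build the opener from the handlers
        opener_auth = urllib.request.build_opener(proxy_handler, handler_auth_proxy)
        # 5. Send the request
        response = opener_auth.open("http://www.baidu.com")
        print(response.read())


    money_proxy_user()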

Auth (HTTP authentication):

Used when scraping data from your own site for analysis; the process is similar to using a paid proxy IP.

Test:

Code:

    import urllib.request


    def auth_neiwang():
        # 1. Username and password
        user = "admin"
        password = "admin123"
        nei_url = "http://192.168.179.66"
        # 2. Create the password manager
        pwd_manager = urllib.request.HTTPPasswordMgrWithDefaultRealm()
        pwd_manager.add_password(None, nei_url, user, password)
        # 3. Create the authentication handler
        auth_handler = urllib.request.HTTPBasicAuthHandler(pwd_manager)
        # 4. Build the opener and send the request
        opener = urllib.request.build_opener(auth_handler)
        response = opener.open(nei_url)
        print(response)


    auth_neiwang()
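
For context, HTTPBasicAuthHandler responds to the server's 401 challenge by resending the request with an Authorization header. A minimal sketch of how that header value is formed under HTTP Basic authentication, reusing the sample credentials from the code above:

```python
import base64

user = "admin"
password = "admin123"

# Basic authentication sends "user:password", base64-encoded, in a header
token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
auth_header = f"Basic {token}"
print("Authorization:", auth_header)
```

Base64 is an encoding, not encryption, so Basic authentication over plain http exposes the password to anyone who can read the traffic.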


Basic usage of headers and handlers