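Hive UDF IP Resolution (Part 2): A Custom UDF Backed by the GeoIP2 Database

Mapping an IP address to a geographic region comes up often in development, so this post records how to write a custom Hive UDF that resolves IPs.

The region database used here is MaxMind's GeoIP2 database, which comes in a free edition (GeoLite2-City.mmdb) and a paid edition (GeoIP2-City.mmdb). The programming interface is the same for both editions.

Development environment: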

hive-2.3.0

hadoop 2.7.3

jdk 1.8

1. Create a Maven project named regionParse and add the following dependencies

<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>2.3.0</version>
</dependency>
<dependency>
    <groupId>com.maxmind.db</groupId>
    <artifactId>maxmind-db</artifactId>
    <version>1.2.2</version>
</dependency>
<dependency>
    <groupId>com.maxmind.geoip2</groupId>
    <artifactId>geoip2</artifactId>
    <version>2.11.0</version>
</dependency>

2.1 Create IP2Region.java, extending UDF (Chinese version)

package com.gw.udf;

import java.io.File;
import java.io.IOException;
import java.net.InetAddress;

import org.apache.hadoop.hive.ql.exec.UDF;

import com.gw.util.Constants;
import com.gw.util.geoip2.GWDatabaseReader; // rewritten version of com.maxmind.geoip2.DatabaseReader
import com.maxmind.geoip2.model.CityResponse;
import com.maxmind.geoip2.record.City;
import com.maxmind.geoip2.record.Country;
import com.maxmind.geoip2.record.Subdivision;

public class IP2Region extends UDF {

    private static GWDatabaseReader reader = null;

    // Load the database once per JVM from the classpath root.
    static {
        String path = IP2Region.class.getResource("/").getFile();
        try {
            System.out.println(path + Constants.geoIPFile);
            File database = new File(path + Constants.geoIPFile);
            reader = new GWDatabaseReader.Builder(database).build();
        } catch (IOException e) {
            // Keep the class loadable; evaluate() returns null while reader is unset.
            System.err.println("Failed to load " + Constants.geoIPFile + ": " + e.getMessage());
        }
    }

    /**
     * @param ip IP address string
     * @param i  0 = country, 1 = province, 2 = city
     * @return the region name, or null if it cannot be resolved
     */
    public static String evaluate(String ip, int i) {
        if (ip == null || reader == null) {
            return null;
        }
        String region = null;
        try {
            InetAddress ipAddress = InetAddress.getByName(ip);
            CityResponse response = reader.city(ipAddress);
            Country country = response.getCountry();
            Subdivision subdivision = response.getMostSpecificSubdivision();
            City city = response.getCity();
            if (country != null && i == 0) {
                region = country.getNames().get("zh-CN") != null
                        ? country.getNames().get("zh-CN")
                        : country.getNames().get("en");
            } else if (subdivision != null && i == 1) {
                // Prefer the unified province name keyed by ISO code.
                region = Constants.provinceCN.get(subdivision.getIsoCode()) != null
                        ? Constants.provinceCN.get(subdivision.getIsoCode())
                        : subdivision.getNames().get("en");
            } else if (city != null && i == 2) {
                String cityName = city.getNames().get("zh-CN");
                // Strip a trailing "市" only when it really is the last character.
                if (cityName != null && cityName.length() > 1 && cityName.endsWith("市")) {
                    cityName = cityName.substring(0, cityName.length() - 1);
                }
                region = cityName != null ? cityName : city.getNames().get("en");
            }
            // Latitude and longitude are available via response.getLocation(); not needed for now.
        } catch (Exception e) {
            // An IP that cannot be resolved yields null instead of failing the query.
        }
        return region;
    }

    public static void main(String[] args) {
        String ip = "223.104.6.25";
        System.out.println(evaluate(ip, 0) + ":" + evaluate(ip, 1) + ":" + evaluate(ip, 2));
    }
}
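The city-level naming rule (prefer the zh-CN name without a trailing 市, otherwise fall back to the English name) can be separated from the database lookup and checked on its own. The following is a minimal sketch using only the JDK. The class `CityNamePicker` and its method `pick` are hypothetical names introduced here for illustration; they are not part of this project or of the geoip2 library, and a plain `Map<String, String>` stands in for the map that `getNames()` returns.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper (not in the original project): isolates the city-name rule.
final class CityNamePicker {

    private static final String CITY_SUFFIX = "市";

    private CityNamePicker() {
    }

    // Prefers the zh-CN name with a trailing "市" removed; falls back to "en".
    // Returns null when neither locale is present.
    static String pick(Map<String, String> names) {
        if (names == null) {
            return null;
        }
        String zh = names.get("zh-CN");
        if (zh == null) {
            return names.get("en");
        }
        // Strip the suffix only when something remains afterwards.
        if (zh.length() > CITY_SUFFIX.length() && zh.endsWith(CITY_SUFFIX)) {
            return zh.substring(0, zh.length() - CITY_SUFFIX.length());
        }
        return zh;
    }

    public static void main(String[] args) {
        Map<String, String> names = new HashMap<>();
        names.put("en", "Shijiazhuang");
        System.out.println(pick(names)); // only an English name is available
        names.put("zh-CN", "石家庄市");
        System.out.println(pick(names)); // zh-CN name with the suffix removed
    }
}
```

Keeping the rule in a pure function like this makes it testable without loading the .mmdb file.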

2.2 Create IP2RegionEN.java, extending UDF (English version)

package com.gw.udf;

import java.io.File;
import java.io.IOException;
import java.net.InetAddress;

import org.apache.hadoop.hive.ql.exec.UDF;

import com.gw.util.Constants;
import com.gw.util.geoip2.GWDatabaseReader;
import com.maxmind.geoip2.model.CityResponse;
import com.maxmind.geoip2.record.City;
import com.maxmind.geoip2.record.Country;
import com.maxmind.geoip2.record.Subdivision;

public class IP2RegionEN extends UDF {

    private static GWDatabaseReader reader = null;

    // Load the database once per JVM from the classpath root.
    static {
        String path = IP2RegionEN.class.getResource("/").getFile();
        try {
            System.out.println(path + Constants.geoIPFile);
            File database = new File(path + Constants.geoIPFile);
            reader = new GWDatabaseReader.Builder(database).build();
        } catch (IOException e) {
            // Keep the class loadable; evaluate() returns null while reader is unset.
            System.err.println("Failed to load " + Constants.geoIPFile + ": " + e.getMessage());
        }
    }

    /**
     * @param ip IP address string
     * @param i  0 = country, 1 = province, 2 = city
     * @return the English region name, or null if it cannot be resolved
     */
    public static String evaluate(String ip, int i) {
        if (ip == null || reader == null) {
            return null;
        }
        String region = null;
        try {
            InetAddress ipAddress = InetAddress.getByName(ip);
            CityResponse response = reader.city(ipAddress);
            Country country = response.getCountry();
            Subdivision subdivision = response.getMostSpecificSubdivision();
            City city = response.getCity();
            if (country != null && i == 0) {
                region = country.getNames().get("en");
            } else if (subdivision != null && i == 1) {
                region = subdivision.getNames().get("en");
            } else if (city != null && i == 2) {
                region = city.getNames().get("en");
            }
            // Latitude and longitude are available via response.getLocation(); not needed for now.
        } catch (Exception e) {
            // An IP that cannot be resolved yields null instead of failing the query.
        }
        return region;
    }

    public static void main(String[] args) {
        String ip = "223.104.6.25";
        System.out.println(evaluate(ip, 0) + ":" + evaluate(ip, 1) + ":" + evaluate(ip, 2));
    }
}
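One thing to keep in mind with both UDFs: `InetAddress.getByName` accepts host names as well as IP literals, so a dirty value in the ip column could trigger a DNS lookup for every such row. A cheap format check before the call avoids that. The sketch below is one possible guard, assuming the column holds IPv4 addresses only (IPv6 literals would need separate handling); `Ipv4Literal` and `isValid` are hypothetical names, not part of the original project.

```java
import java.util.regex.Pattern;

// Hypothetical guard (not in the original project): accepts only dotted-quad IPv4 literals.
final class Ipv4Literal {

    // One octet in the range 0-255, without leading zeros.
    private static final String OCTET = "(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)";

    private static final Pattern DOTTED_QUAD =
            Pattern.compile(OCTET + "(\\." + OCTET + "){3}");

    private Ipv4Literal() {
    }

    // Returns true only when the whole string is a well-formed IPv4 literal.
    static boolean isValid(String s) {
        return s != null && DOTTED_QUAD.matcher(s).matches();
    }

    public static void main(String[] args) {
        System.out.println(isValid("223.104.6.25"));
        System.out.println(isValid("example.com"));
    }
}
```

In `evaluate`, such a check would sit before `InetAddress.getByName(ip)` and return null for anything that is not a literal.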

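3. In the free database, some Chinese province names come back in abbreviated form. For example, Fujian (福建) is shown simply as 闽, which is an easy trap to fall into.

For consistency, I defined a conversion class keyed by ISO code, Constants.java, so that provinces are displayed uniformly.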

package com.gw.util;

import java.util.HashMap;
import java.util.Map;

public class Constants {

    public static final String geoIPFile = "GeoLite2-City.mmdb";
    public static final String geollFile = "geo.csv";

    // Subdivision ISO code -> unified Chinese province name
    public static final Map<String, String> provinceCN = new HashMap<String, String>();

    static {
        provinceCN.put("AH", "安徽");
        provinceCN.put("BJ", "北京");
        provinceCN.put("CQ", "重庆");
        provinceCN.put("FJ", "福建");
        provinceCN.put("GD", "广东");
        provinceCN.put("GS", "甘肃");
        provinceCN.put("GX", "广西");
        provinceCN.put("GZ", "贵州");
        provinceCN.put("HA", "河南");
        provinceCN.put("HB", "湖北");
        provinceCN.put("HE", "河北");
        provinceCN.put("HI", "海南");
        provinceCN.put("HK", "香港");
        provinceCN.put("HL", "黑龙江");
        provinceCN.put("HN", "湖南");
        provinceCN.put("JL", "吉林");
        provinceCN.put("JS", "江苏");
        provinceCN.put("JX", "江西");
        provinceCN.put("LN", "辽宁");
        provinceCN.put("MO", "澳门");
        provinceCN.put("NM", "内蒙古");
        provinceCN.put("NX", "宁夏");
        provinceCN.put("QH", "青海");
        provinceCN.put("SC", "四川");
        provinceCN.put("SD", "山东");
        provinceCN.put("SH", "上海");
        provinceCN.put("SN", "陕西");
        provinceCN.put("SX", "山西");
        provinceCN.put("TJ", "天津");
        provinceCN.put("TW", "台湾");
        provinceCN.put("XJ", "新疆");
        provinceCN.put("XZ", "西藏");
        provinceCN.put("YN", "云南");
        provinceCN.put("ZJ", "浙江");
    }
}
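The province lookup follows a simple pattern: translate by ISO code when the code is known, otherwise keep whatever English name the database supplied. Here is a small standalone sketch of that pattern with only two sample entries. `ProvinceNames` and `display` are hypothetical names used for illustration; wrapping the map in `Collections.unmodifiableMap` is an optional hardening step, not something the original class does.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch (not in the original project): ISO-code lookup with a fallback.
final class ProvinceNames {

    // Read-only view so callers cannot alter the shared table.
    private static final Map<String, String> BY_ISO_CODE;

    static {
        Map<String, String> m = new HashMap<>();
        m.put("FJ", "福建");
        m.put("ZJ", "浙江");
        BY_ISO_CODE = Collections.unmodifiableMap(m);
    }

    private ProvinceNames() {
    }

    // Returns the unified Chinese name for a known ISO code, else the given fallback.
    static String display(String isoCode, String fallbackName) {
        String name = BY_ISO_CODE.get(isoCode);
        return name != null ? name : fallbackName;
    }

    public static void main(String[] args) {
        System.out.println(display("FJ", "Fujian"));
        System.out.println(display("CA", "California"));
    }
}
```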

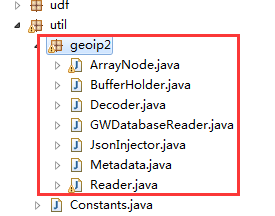

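4. During testing, the stock geoip2 jar turned out to conflict with other jars in the Hive installation, so I also modified part of the geoip2 source code.

Finally, compile and package the project, then copy the jar into Hive's external_lib (the folder where all custom jars are kept).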

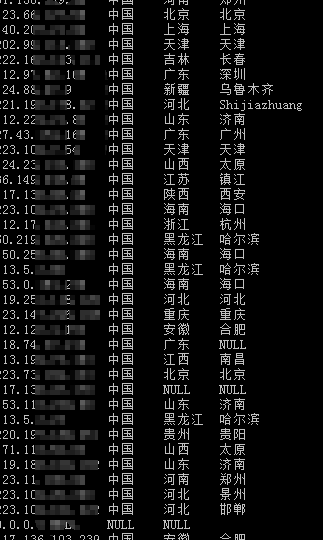

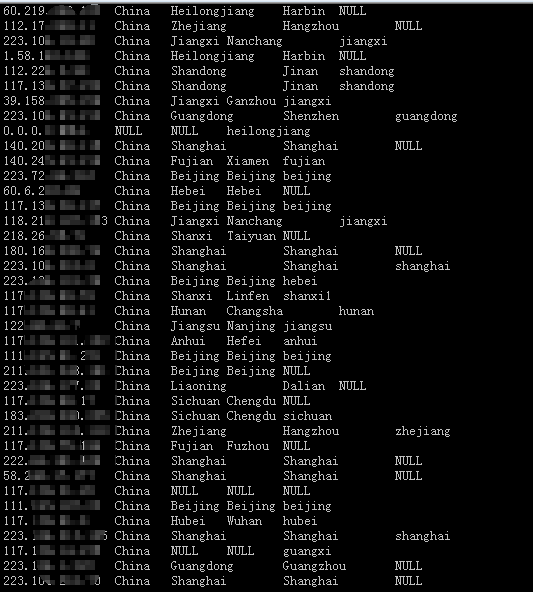

5. Test results:

ip2region(ip,0) returns the country

ip2region(ip,1) returns the province

ip2region(ip,2) returns the city

select ip,ip2region(ip,0),ip2region(ip,1),ip2region(ip,2) from xxx_table where ip is not null limit 300;

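The results show that some cities come back in pinyin (Shijiazhuang). The free database has its rough edges, but it is good enough for now.

Chinese version output:

English version output:

Finally, here are the complete deployment notes:

1. Copy the database files into the $HIVE_HOME/conf directory on Hive's path

When the city data in the IP database is updated later, just replace the files in this directory.

The province files are fixed and do not need to be modified.

There are three database files: geoCN.csv, geoEN.csv, and GeoLite2-City.mmdb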
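The UDFs locate the .mmdb file by asking for the classpath root with `getResource("/")` and appending the file name, which is why the file has to sit in a directory that is on Hive's classpath. `Class.getResource` returns null when nothing matches, and `URL.getFile()` does not decode percent-escapes such as `%20`, so a slightly more defensive lookup may be worth considering. The sketch below is one way to do it with the JDK alone; `ClasspathFileLocator` and `find` are hypothetical names, not part of the original project.

```java
import java.io.File;
import java.net.URISyntaxException;
import java.net.URL;
import java.util.Optional;

// Hypothetical helper (not in the original project): resolves a file from the classpath root.
final class ClasspathFileLocator {

    private ClasspathFileLocator() {
    }

    // Returns the file if it exists as a plain file on the classpath, else empty.
    static Optional<File> find(String fileName) {
        URL url = ClasspathFileLocator.class.getResource("/" + fileName);
        if (url == null || !"file".equals(url.getProtocol())) {
            // Missing, or packed inside a jar where java.io.File cannot reach it.
            return Optional.empty();
        }
        try {
            // new File(URI) decodes escaped characters in the path.
            return Optional.of(new File(url.toURI()));
        } catch (URISyntaxException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        Optional<File> db = find("GeoLite2-City.mmdb");
        System.out.println(db.map(File::getAbsolutePath).orElse("database file not on classpath"));
    }
}
```

With a lookup like this, a missing database file produces an explicit message at start-up rather than a null path or a failed static initializer.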

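2. Delete geoip2-0.4.0.jar and maxminddb-0.2.0.jar from the $HIVE_HOME/lib/ directory

Put maxmind-db-1.2.2.jar and geoip2-2.11.0.jar there in their place.

3. Copy the compiled regionalParse-0.0.1-SNAPSHOT.jar into the external_lib directory.

4. Edit the .hiverc file and add the following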

add jar /usr/local/hive/external_lib/regionalParse-0.0.1-SNAPSHOT.jar;

create temporary function ll2province as 'com.gw.udf.LL2Province';

create temporary function ll2provinceen as 'com.gw.udf.LL2ProvinceEN';

create temporary function indexof as 'com.gw.udf.IndexOf';

create temporary function ip2region as 'com.gw.udf.IP2Region';

create temporary function ip2regionen as 'com.gw.udf.IP2RegionEN';

5. Restart HiveServer2

#!/bin/sh

nohup $HIVE_HOME/bin/hive --service hiveserver2 &

6. How to test:

Chinese test:

select ip,ip2region(ip,0),ip2region(ip,1),ip2region(ip,2),

ll2province(substring(coordinator, 0, indexof(coordinator, ":")), substring(coordinator, indexof(coordinator, ":") + 2))

from xxx where ip is not null limit 10;

English test:

select ip,ip2regionen(ip,0),ip2regionen(ip,1),ip2regionen(ip,2),

ll2provinceen(substring(coordinator, 0, indexof(coordinator, ":")), substring(coordinator, indexof(coordinator, ":") + 2))

from xxx where ip is not null limit 10;
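Both test queries cut the coordinator column into the part before the ":" and the part after it, then pass the two halves to ll2province / ll2provinceen. The IndexOf UDF itself is not shown in this post; the `+ 2` offset in the second substring call is consistent with an index that counts from 0, as Java's `String.indexOf` does, combined with Hive's 1-based substring, but that is an inference. Under that assumption, the equivalent split in plain Java looks roughly like this (`CoordinatorSplit` is a hypothetical name):

```java
// Hypothetical sketch (not in the original project): splits "a:b" the way the queries do.
final class CoordinatorSplit {

    private CoordinatorSplit() {
    }

    // Returns {before, after} around the first ':', or null if there is no ':'.
    static String[] split(String coordinator) {
        if (coordinator == null) {
            return null;
        }
        int idx = coordinator.indexOf(':'); // 0-based position of the separator
        if (idx < 0) {
            return null;
        }
        return new String[] {
            coordinator.substring(0, idx),
            coordinator.substring(idx + 1)
        };
    }

    public static void main(String[] args) {
        String[] parts = split("30.27:120.15");
        System.out.println(parts[0] + " | " + parts[1]);
    }
}
```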

This article comes from cnblogs (博客园), by 硅谷工具人. When reposting, please credit the original link: https://www.cnblogs.com/30go/p/8650584.html

Zhejiang Public Network Security Record No. 33010602011771