Web Crawler Assignment

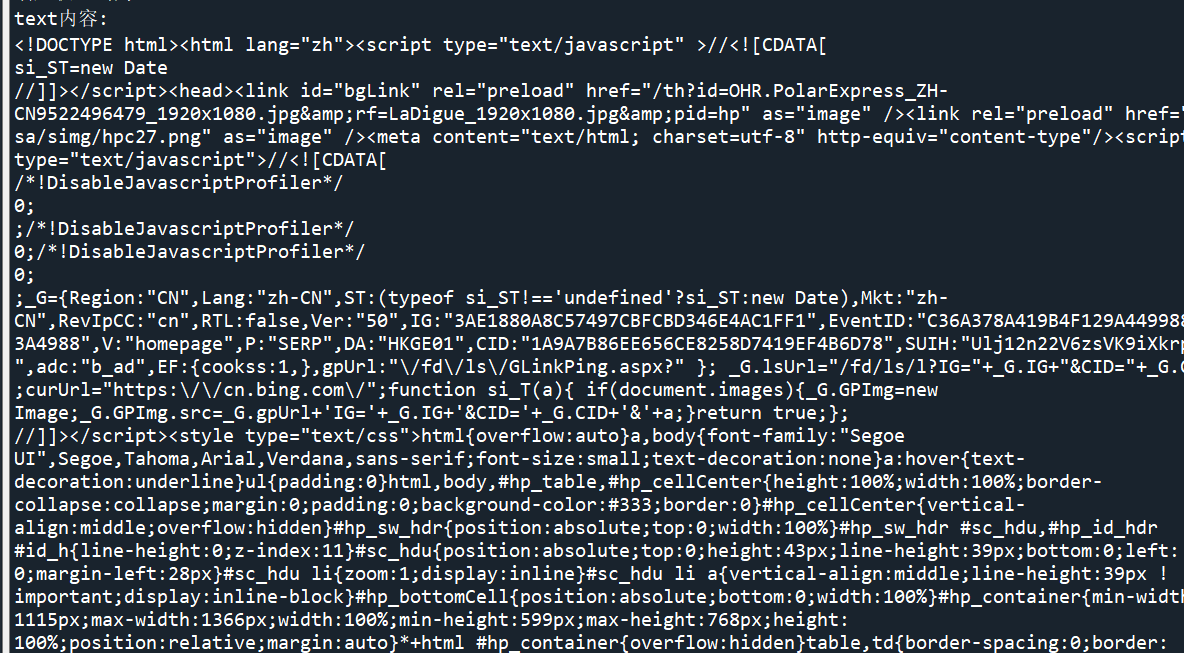

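    import requests

    url = 'https://cn.bing.com/'
    # Request headers
    headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400'}


    def Access(url, headers=headers):
        """Fetch url and return the response, or None if the request fails."""
        try:
            r = requests.get(url, timeout=30, headers=headers)
            r.raise_for_status()
            status = r.status_code
            print('Response status code: {}'.format(status))
            return r
        except requests.RequestException:
            print('Request failed')
            return None


    # Visit the page 20 times and report what each response contains
    for i in range(1, 21):
        print('Attempt {}'.format(i))
        r = Access(url)
        if r is None:
            continue  # nothing to print for a failed request
        text = r.text        # body decoded to a string
        content = r.content  # raw body as bytes
        print('text content:\n{}'.format(text))
        print('Length of the page content returned by the text attribute: {}'.format(len(text)))
        print('Length of the page content returned by the content attribute: {}'.format(len(content)))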
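The two lengths printed above usually differ because `content` holds the raw bytes of the body, while `text` is that body decoded into a string. A minimal offline sketch of the difference, using a hypothetical sample string rather than a real Bing response and assuming UTF-8 encoding:

```python
# Hypothetical sample standing in for a response body; it is not fetched from Bing.
sample_text = "<title>必应</title>"

# Simulate the text/content relationship by encoding the string ourselves
# (assumption: the body is UTF-8 encoded).
sample_content = sample_text.encode("utf-8")

text_length = len(sample_text)        # counts characters
content_length = len(sample_content)  # counts bytes

print(text_length, content_length)
```

Each Chinese character counts once in the string but takes three bytes in UTF-8, so a page with non-ASCII text has a larger `content` length than `text` length.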

Below is a simple HTML page. Keep it as a string and complete the tasks that follow. (Good)

    <!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>菜鸟教程(runoob.com)</title>
    </head>
    <body>
    <h1>我的第一个标题</h1>
    <p id=first>我的第一个段落。</p>
    </body>
    <table border="1">
    <tr>
    <td>row 1, cell 1</td>
    <td>row 1, cell 2</td>
    </tr>
    <tr>
    <td>row 2, cell 1</td>
    <td>row 2, cell 2</td>
    </tr>
    </table>
    </html>

    from bs4 import BeautifulSoup
    import re

    # Name the parser explicitly so the result does not depend on which parsers are installed
    soup = BeautifulSoup('''<!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>菜鸟教程(runoob.com)</title>
    </head>
    <body>
    <h1>好好学习</h1>
    <p id="first">天天向上</p>
    </body>
    <table border="1">
    <tr>
    <td>row 1, cell 1</td>
    <td>row 1, cell 2</td>
    </tr>
    <tr>
    <td>row 2, cell 1</td>
    <td>row 2, cell 2</td>
    </tr>
    </table>
    </html>''', 'html.parser')

    print("head tag:\n", soup.head, "\nLast two digits of student ID: 39")
    print("body tag:\n", soup.body)
    print("Tag objects with id 'first':\n", soup.find_all(id="first"))

    st = soup.text
    # Match runs of CJK Unified Ideographs (U+4E00 to U+9FFF)
    pp = re.findall(r'[\u4e00-\u9fff]+', st)
    print("Chinese characters in the HTML page:")
    print(pp)
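The Chinese-character extraction step can also be tried on its own, without BeautifulSoup. This is a minimal sketch: the helper name `extract_chinese` and the sample string are hypothetical, and it assumes that "Chinese characters" means the CJK Unified Ideographs block (U+4E00 to U+9FFF).

```python
import re

# Assumption: "Chinese characters" means the CJK Unified Ideographs block.
CHINESE_RUN = re.compile(r"[\u4e00-\u9fff]+")


def extract_chinese(text):
    """Return every maximal run of Chinese characters found in text."""
    return CHINESE_RUN.findall(text)


# Hypothetical sample mixing the page's Chinese text with ASCII content.
sample = "菜鸟教程(runoob.com) 好好学习 row 1, cell 1 天天向上"
print(extract_chinese(sample))
```

Using the greedy `+` quantifier keeps each phrase together; a lazy `+?` would return the characters one at a time.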

Crawl the university rankings (student IDs ending in 9 or 0 crawl the 2019 rankings).

    import csv
    import os

    import requests
    from bs4 import BeautifulSoup

    allUniv = []


    def getHTMLText(url):
        """Return the page text, or an empty string if the request fails."""
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            return ""


    def fillUnivList(soup):
        """Collect the cell text of every table row into allUniv."""
        data = soup.find_all('tr')
        for tr in data:
            ltd = tr.find_all('td')
            if len(ltd) == 0:
                continue
            singleUniv = []
            for td in ltd:
                # get_text() avoids the None that td.string returns for cells with nested tags
                singleUniv.append(td.get_text(strip=True))
            allUniv.append(singleUniv)


    def printUnivList(num):
        print("{:^4}{:^10}{:^5}{:^8}{:^10}".format("Rank", "University", "Province", "Total score", "Training scale"))
        # Never index past the rows that were actually scraped
        for i in range(min(num, len(allUniv))):
            u = allUniv[i]
            print("{:^4}{:^10}{:^5}{:^8}{:^10}".format(u[0], u[1], u[2], u[3], u[6]))


    '''def write_csv_file(path, head, data):
        try:
            with open(path, 'w', newline='') as csv_file:
                writer = csv.writer(csv_file, dialect='excel')
                if head is not None:
                    writer.writerow(head)
                for row in data:
                    writer.writerow(row)
                print("Write a CSV file to path %s Successful." % path)
        except Exception as e:
            print("Write an CSV file to path: %s, Case: %s" % (path, e))'''


    def writercsv(save_road, num, title):
        """Write the first num rows to the CSV file; the header row is written only for a new file."""
        file_exists = os.path.isfile(save_road)
        with open(save_road, 'a' if file_exists else 'w', newline='') as f:
            csv_write = csv.writer(f, dialect='excel')
            if not file_exists:
                csv_write.writerow(title)
            for u in allUniv[:num]:
                csv_write.writerow(u)


    title = ["Rank", "University", "Province/City", "Total score", "Student quality", "Training outcome", "Research scale", "Research quality", "Top achievements", "Top talent", "Technology services", "Industry-academia-research cooperation", "Achievement commercialization"]
    # Raw string so the backslashes in the Windows path are taken literally
    save_road = r"F:\python\csvData.csv"


    def main():
        url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2019.html'
        html = getHTMLText(url)
        soup = BeautifulSoup(html, "html.parser")
        fillUnivList(soup)
        printUnivList(10)
        writercsv(save_road, 10, title)


    main()
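The CSV-writing step can be checked without touching the network or the `F:` drive. The sketch below is only an illustration of the "header once, then append" idea: `append_rows`, the column names, and the rows are hypothetical demo values, and the file is written to a temporary directory with UTF-8 encoding as an assumption.

```python
import csv
import os
import tempfile


def append_rows(path, header, rows):
    """Append rows to a CSV file, writing the header only for a new file."""
    is_new_file = not os.path.isfile(path)
    # Mode 'a' creates the file when it does not exist yet.
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new_file:
            writer.writerow(header)
        writer.writerows(rows)


# Hypothetical demo data written to a temporary directory.
demo_dir = tempfile.mkdtemp()
demo_path = os.path.join(demo_dir, "demo.csv")
append_rows(demo_path, ["rank", "name"], [["1", "University A"]])
append_rows(demo_path, ["rank", "name"], [["2", "University B"]])

# Read the file back to confirm the header appears exactly once.
with open(demo_path, newline="", encoding="utf-8") as f:
    demo_rows = list(csv.reader(f))
print(demo_rows)
```

Calling the function twice shows why the existence check matters: without it, every run would add another header row in the middle of the data.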