爬虫大作业

利用Python爬取微博数据生成词云图

import re from lxml import etree f = open('info.txt' ,'rb').read().decode('utf-8').replace('\\','') repost = re.findall('<div class="WB_text W_f14" node-type="feed_list_content" nick-name="UNIQ-王一博">(.*?)</div>', f) dongtai = re.findall('<div class="WB_text W_f14" node-type="feed_list_content" >(.*?)</div>', f) for i in repost: selector = etree.HTML(i) text = selector.xpath('string()') file = open('weico.txt', 'a+', encoding='utf-8') file.write(re.sub('\s+', '', text)) for j in dongtai: selector = etree.HTML(j) text1 = selector.xpath('string()') file = open('weico.txt', 'a+', encoding='utf-8') file.write(re.sub('\s+', '', text1))

import requests import re header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36', 'Cookie': 'SINAGLOBAL=5542715244496.104.1504275191526; SCF=AhTamjvKXKQ6KxI4fPK4OCo3IcJW7WJ3bzHYaxiXbv6jeaSCC-kZD4k1uL2yYVm2T4vbdkdILuCFmdacFfopvWQ.; SUHB=0OMV2fGIiDZRi3; SUB=_2AkMttnCgdcPxrAFVn_Edy2jraIhH-jyeYxlWAn7uJhMyOhgv7lhQqSVutBF-XLeJtSfazwW1-LaF7O_swC9yVA0q; SUBP=0033WrSXqPxfM72wWs9jqgMF55529P9D9WFzxiHi1Fma1.SV9rcCiS_p5JpVF020SoMES0q01Ke4; login_sid_t=d2e992ebac9ccd5b74acb6ce967b2943; _s_tentry=www.baidu.com; Apache=3225744868884.1685.1526545586367; ULV=1526545586373:11:2:1:3225744868884.1685.1526545586367:1525350236568; cross_origin_proto=SSL; UOR=blog.csdn.net,widget.weibo.com,www.baidu.com',} Url = "https://weibo.com/u/5492443184?refer_flag=1001030101_&is_all=1#_rnd1526811587161" def parse_page(): resp = requests.get(Url, headers=header) txt_content = resp.content.decode("utf-8").replace('\\','') html = re.findall("<script>FM.view(.*?)</script>", txt_content) for info in html: f = open('info.txt', 'a+', encoding='utf-8') f.write(info.replace('(','').replace(')','')) f.close() if __name__ == '__main__': parse_page()

# -*- coding: utf-8 -*- import jieba from wordcloud import WordCloud, STOPWORDS text = '' f = open('weico.txt','rb').read().decode('utf-8') text += ' '.join(jieba.lcut(f)) wc = WordCloud( width=1920, height=1080, margin=2, background_color='white', # 设置背景颜色 font_path='C:\Windows\Fonts\STZHONGS.TTF', # 若是有中文的话,这句代码必须添加,不然会出现方框,不出现汉字 max_words=200, # 设置最大现实的字数 stopwords=STOPWORDS, # 设置停用词 max_font_size=150, # 设置字体最大值 random_state=42 # 设置有多少种随机生成状态,即有多少种配色方案 ) wc.generate_from_text(text) wc.to_file('weico.jpg')

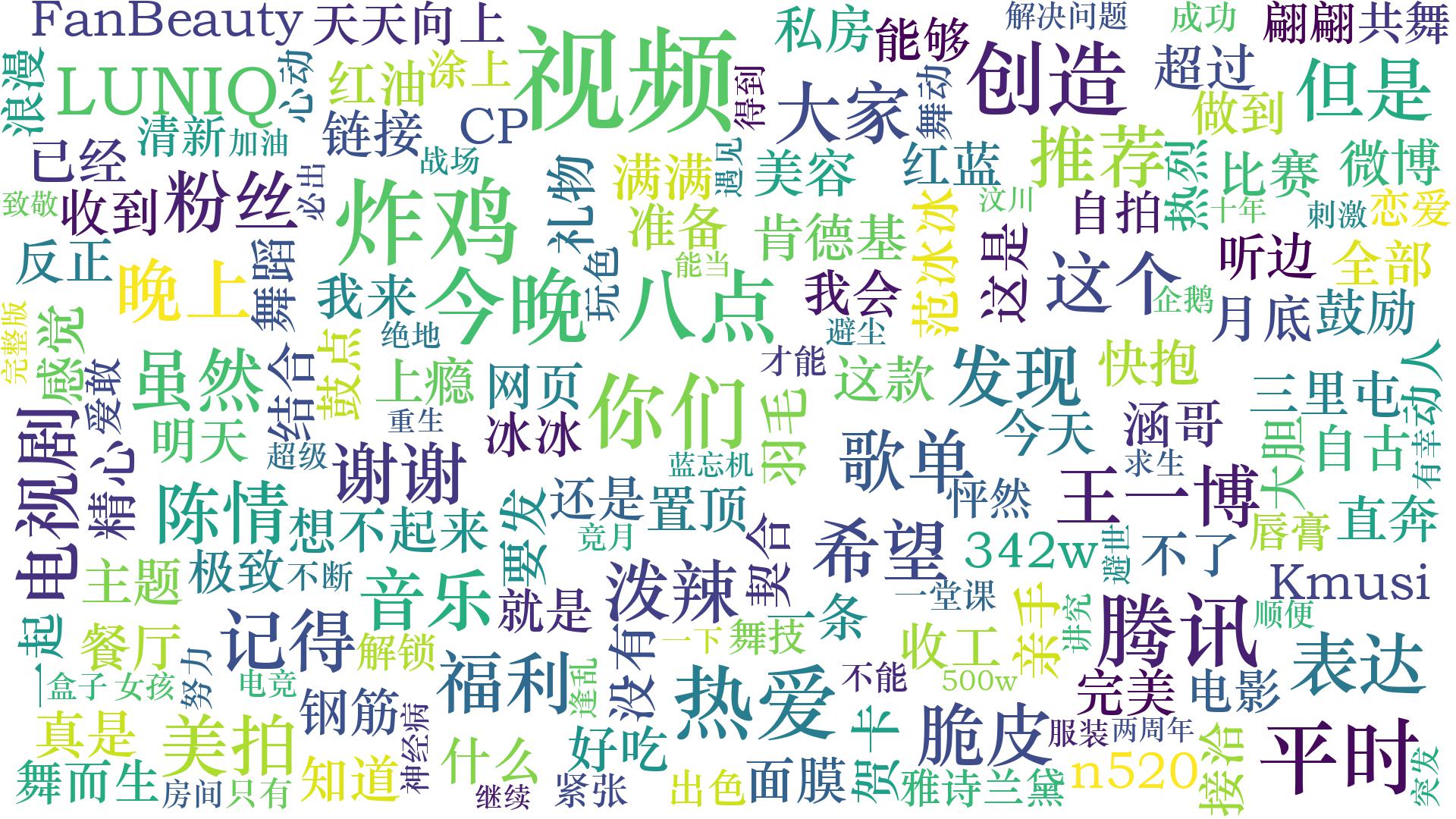

运行结果