Using Hadoop

1. Write the Java code

package edu.jmi.hdfsclient;

import java.io.IOException;

import java.net.Socket;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

/**
 * Minimal HDFS client: upload, download, and a connectivity check.
 */

public class AppTest

{

public static void main(String[] args) throws Exception

{

upload();

}

public static void download() throws IOException {

Configuration conf = new Configuration();

conf.set("fs.defaultFS","hdfs://192.168.159.134:9000");

FileSystem fs = FileSystem.newInstance(conf);

fs.copyToLocalFile(new Path("/start-all.sh"),new Path("e://"));

}

public static void upload() throws IOException {

Configuration conf = new Configuration();

conf.set("fs.defaultFS","hdfs://192.168.159.134:9000");

FileSystem fs = FileSystem.get(conf);

Path src=new Path("d://my.txt");

Path dest=new Path("/");

fs.copyFromLocalFile(src,dest);

FileStatus[] fileStatus = fs.listStatus(dest);

for(FileStatus file:fileStatus){

System.out.println(file.getPath());

}

System.out.println("Upload succeeded");

}

public static void test()throws Exception{

Socket socket=new Socket("192.168.159.134",9000);

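// Check whether the NameNode port is reachable. A refused connection usually means the
// server's firewall is on and blocking outside access; turning the firewall off fixes it.
System.out.println(socket);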

}

}

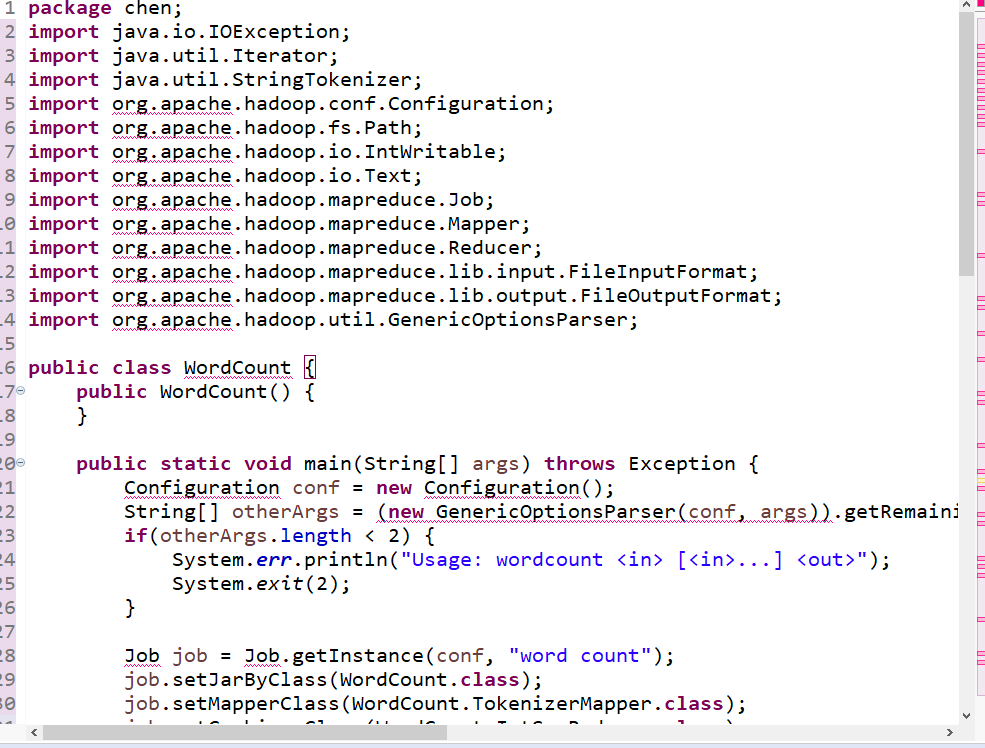
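
The `test()` method above opens a socket with no timeout and never closes it. As a rough sketch of a slightly more defensive connectivity check, the snippet below uses only the JDK. The class name `PortCheck`, the method `isReachable`, and the 2-second timeout are illustrative choices, not part of the original code. The host and port are the ones used for `fs.defaultFS` above.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        // try-with-resources closes the socket even when connect() fails
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or no route: print the reason and report unreachable
            System.err.println("Cannot reach " + host + ":" + port + " (" + e + ")");
            return false;
        }
    }

    public static void main(String[] args) {
        // Address taken from the fs.defaultFS setting above; adjust it to your cluster
        boolean ok = isReachable("192.168.159.134", 9000, 2000);
        System.out.println(ok ? "NameNode port is reachable" : "NameNode port is not reachable");
    }
}
```

Running this before `upload()` or `download()` helps separate network problems (firewall, wrong address, NameNode not started) from errors in the HDFS client code itself.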

2. Write the map and reduce functions