Learning Rate

Source: China University MOOC (中国大学MOOC), Cao Jian (曹健), *TensorFlow Notes* (《TensorFlow笔记》)

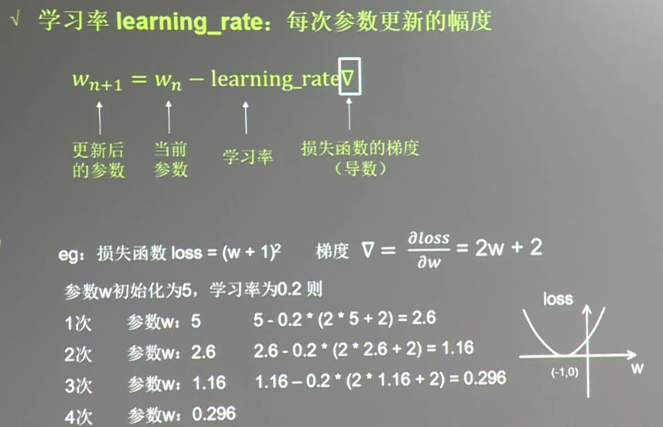

Learning rate: the step size of each parameter update, i.e. how far a parameter moves each time it is updated.

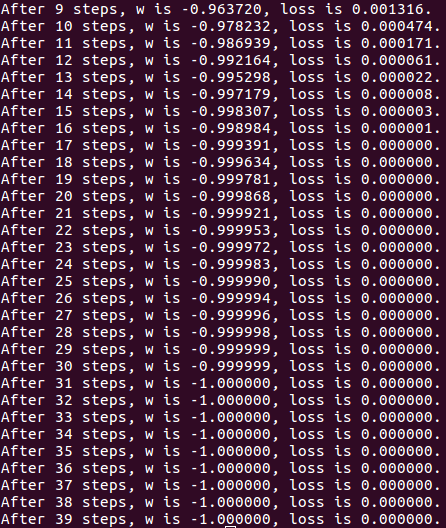
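A short worked derivation, added for illustration: in plain gradient descent a parameter moves against its gradient, scaled by the learning rate. The symbol $\eta$ for the learning rate is notation introduced here, not taken from the course.

$$
w_{n+1} = w_n - \eta \left.\frac{\partial\,\mathrm{loss}}{\partial w}\right|_{w = w_n}
$$

For the loss used in the code that follows, $\mathrm{loss} = (w+1)^2$, the derivative is $2(w+1)$, so with $\eta = 0.2$:

$$
w_{n+1} = w_n - 0.4\,(w_n + 1) \quad\Longrightarrow\quad w_{n+1} + 1 = 0.6\,(w_n + 1)
$$

Starting from $w_0 = 5$, this gives $w_n = 6 \cdot 0.6^{n} - 1$, so $w_1 = 2.6$, $w_2 = 1.16$, and $w_n \to -1$, where the loss reaches its minimum of 0.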

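```python
# coding: utf-8
# Loss function: loss = (w + 1)^2, with w initialized to the constant 5.
# Backpropagation searches for the optimal w, i.e. the w that minimizes loss.
import tensorflow.compat.v1 as tf  # TF 1.x graph API; also runs under TF 2.x

tf.disable_v2_behavior()  # use graphs and sessions instead of eager execution

# Parameter to optimize, initialized to 5
w = tf.Variable(tf.constant(5, dtype=tf.float32))
# Loss function
loss = tf.square(w + 1)
# Backpropagation method: gradient descent with learning rate 0.2
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

# Create a session and train for 40 steps
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    for i in range(40):
        sess.run(train_step)
        w_val = sess.run(w)
        loss_val = sess.run(loss)
        print("After %s steps: w is %f, loss is %f." % (i, w_val, loss_val))
```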
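To see what `GradientDescentOptimizer(0.2)` does without TensorFlow, here is a minimal plain-Python sketch that applies the same update `w = w - learning_rate * grad` by hand to loss = (w + 1)^2. The function name `gradient_descent` is hypothetical, and the extra learning rates 1.0 and 0.001 are arbitrary values chosen only to contrast with 0.2.

```python
def gradient_descent(w0, learning_rate, steps):
    """Minimize loss(w) = (w + 1)**2 with plain gradient descent.

    Returns a list of (w, loss) pairs recorded after each update.
    """
    w = w0
    history = []
    for _ in range(steps):
        grad = 2 * (w + 1)              # derivative of (w + 1)^2 with respect to w
        w = w - learning_rate * grad    # step against the gradient, scaled by the learning rate
        history.append((w, (w + 1) ** 2))
    return history


if __name__ == "__main__":
    # Compare the course's learning rate (0.2) with two illustrative values
    for lr in (0.2, 1.0, 0.001):
        w, loss = gradient_descent(5.0, lr, 40)[-1]
        print(f"lr={lr}: after 40 steps w={w:.6f}, loss={loss:.6f}")
```

With these values, 0.2 drives w very close to -1 within 40 steps, 1.0 makes w bounce between 5 and -7 without ever converging, and 0.001 only moves w from 5 to about 4.54.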
