Kubernetes Installation, Part 2

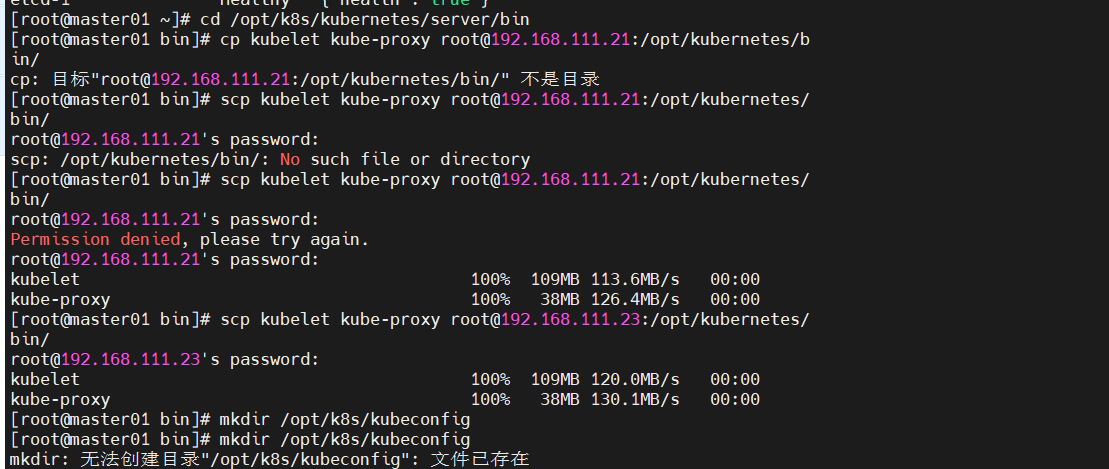

[root@master01 ~]# cd /opt/k8s/kubernetes/server/bin

[root@master01 bin]# scp kubelet kube-proxy root@192.168.111.21:/opt/kubernetes/

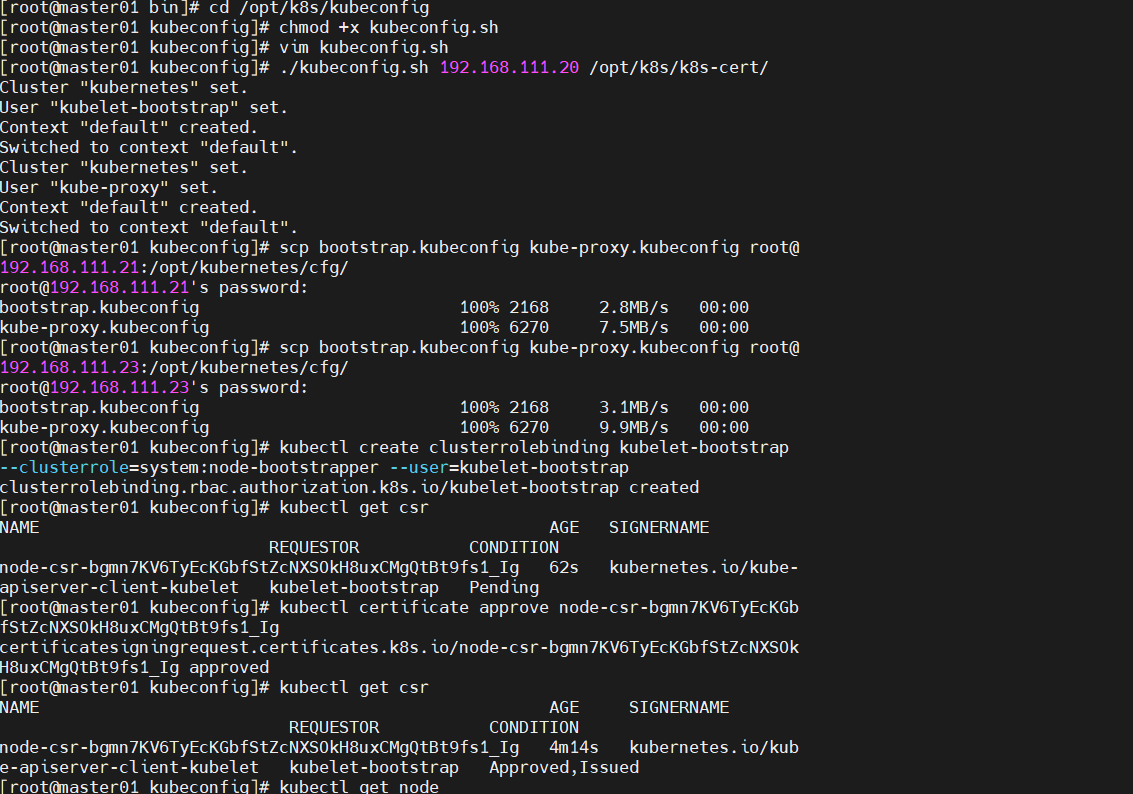

[root@master01 bin]# cd /opt/k8s/kubeconfig

[root@master01 kubeconfig]# chmod +x kubeconfig.sh

[root@master01 kubeconfig]# vim kubeconfig.sh

[root@master01 kubeconfig]# ./kubeconfig.sh 192.168.111.20 /opt/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".
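The contents of `kubeconfig.sh` are not shown in this transcript. The four messages above are what `kubectl config set-cluster`, `set-credentials`, `set-context`, and `use-context` print, so the bootstrap half of the script presumably looks something like the sketch below. The function name, the `ca.pem` file name, port 6443, the token argument, and the `KUBECTL` override are all assumptions made for illustration, not the author's actual script.

```shell
# Hypothetical sketch of the bootstrap half of a kubeconfig.sh-style script.
# Assumptions: CA file is <cert dir>/ca.pem, the API server listens on 6443,
# and the bootstrap token is passed as the third argument.
# Set KUBECTL=echo for a dry run that only prints the commands.
make_bootstrap_kubeconfig() {
  # $1 = API server IP, $2 = certificate directory, $3 = bootstrap token
  kc_server="https://$1:6443"
  kc_file="bootstrap.kubeconfig"

  # Register the cluster endpoint and embed the CA certificate.
  ${KUBECTL:-kubectl} config set-cluster kubernetes \
    --certificate-authority="$2/ca.pem" \
    --embed-certs=true \
    --server="$kc_server" \
    --kubeconfig="$kc_file"

  # Register the bootstrap user with its token.
  ${KUBECTL:-kubectl} config set-credentials kubelet-bootstrap \
    --token="$3" \
    --kubeconfig="$kc_file"

  # Tie cluster and user together in a context named "default".
  ${KUBECTL:-kubectl} config set-context default \
    --cluster=kubernetes \
    --user=kubelet-bootstrap \
    --kubeconfig="$kc_file"

  # Make that context the active one.
  ${KUBECTL:-kubectl} config use-context default --kubeconfig="$kc_file"
}
```

Running it with `KUBECTL=echo` first is a cheap way to check the generated flags before touching a real kubeconfig file.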

[root@master01 kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.111.21:/opt/kubernetes/cfg/

root@192.168.111.21's password:

bootstrap.kubeconfig 100% 2168 2.8MB/s 00:00

kube-proxy.kubeconfig 100% 6270 7.5MB/s 00:00

[root@master01 kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.111.23:/opt/kubernetes/cfg/

root@192.168.111.23's password:

bootstrap.kubeconfig 100% 2168 3.1MB/s 00:00

kube-proxy.kubeconfig 100% 6270 9.9MB/s 00:00

[root@master01 kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

[root@master01 kubeconfig]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-bgmn7KV6TyEcKGbfStZcNXSOkH8uxCMgQtBt9fs1_Ig 62s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

[root@master01 kubeconfig]# kubectl certificate approve node-csr-bgmn7KV6TyEcKGbfStZcNXSOkH8uxCMgQtBt9fs1_Ig

certificatesigningrequest.certificates.k8s.io/node-csr-bgmn7KV6TyEcKGbfStZcNXSOkH8uxCMgQtBt9fs1_Ig approved

[root@master01 kubeconfig]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION
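Copying the long CSR name by hand is error-prone. As a minimal sketch, a small filter can pull the pending request names out of the `kubectl get csr` table. The function name `pending_csrs` is made up for this example, and it assumes the default table layout in which CONDITION is the last column.

```shell
# Print the NAME of every CSR whose CONDITION (last column) is exactly "Pending".
# Reads the table printed by `kubectl get csr` on stdin and skips the header row.
pending_csrs() {
  awk '$1 != "NAME" && $NF == "Pending" { print $1 }'
}

# Possible usage on the master (not executed here):
#   kubectl get csr | pending_csrs | xargs -r -n1 kubectl certificate approve
```

The `xargs -r` flag (GNU xargs) keeps `kubectl certificate approve` from running when nothing is pending.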

[root@master01 kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.111.21 NotReady <none> 64s v1.20.11

[root@master01 kubeconfig]# cd /opt/k8s

[root@master01 k8s]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@master01 k8s]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-5t8xq 0/1 Init:0/1 0 11s

[root@master01 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.111.21 NotReady <none> 7m37s v1.20.11

[root@master01 k8s]# vim calico.yaml

[root@master01 k8s]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-5t8xq 1/1 Running 0 7m12s

[root@master01 k8s]# vim calico.yaml

[root@master01 k8s]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-5t8xq 1/1 Running 0 13m

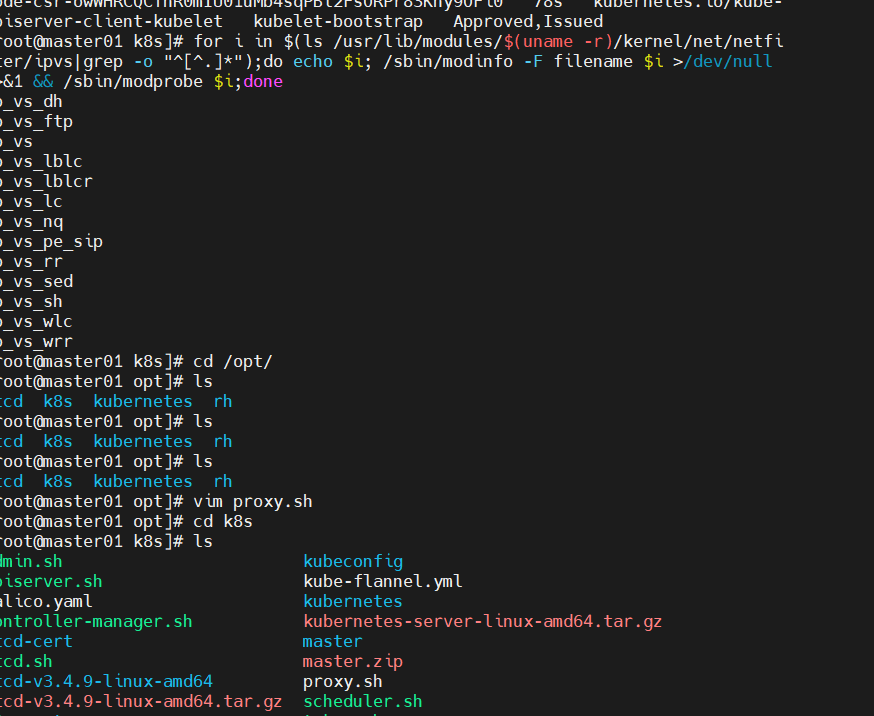

[root@master01 k8s]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-bgmn7KV6TyEcKGbfStZcNXSOkH8uxCMgQtBt9fs1_Ig 31m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-owWHRCQCfhR0mIU01uMb4sqPBl2FsORPr83Khy9OFi0 27s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

[root@master01 k8s]# kubectl certificate approve node-csr-owWHRCQCfhR0mIU01uMb4sqPBl2FsORPr83Khy9OFi0

certificatesigningrequest.certificates.k8s.io/node-csr-owWHRCQCfhR0mIU01uMb4sqPBl2FsORPr83Khy9OFi0 approved

[root@master01 k8s]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-bgmn7KV6TyEcKGbfStZcNXSOkH8uxCMgQtBt9fs1_Ig 32m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-owWHRCQCfhR0mIU01uMb4sqPBl2FsORPr83Khy9OFi0 78s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

[root@master01 k8s]# for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

ip_vs_dh

ip_vs_ftp

ip_vs

ip_vs_lblc

ip_vs_lblcr

ip_vs_lc

ip_vs_nq

ip_vs_pe_sip

ip_vs_rr

ip_vs_sed

ip_vs_sh

ip_vs_wlc

ip_vs_wrr
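The one-liner above lists the IPVS module files, cuts each name at the first dot, and loads every module that `modinfo` can resolve. The same logic can be sketched as two small functions that are easier to read and reuse on each node. The function names are made up for this example, and the directory is the one used in the transcript.

```shell
# Turn module file names into module names by dropping everything from the
# first dot onward, e.g. "ip_vs_rr.ko.xz" -> "ip_vs_rr". Reads names on stdin.
ipvs_module_names() {
  while IFS= read -r ipvs_file; do
    if [ -n "$ipvs_file" ]; then
      printf '%s\n' "${ipvs_file%%.*}"
    fi
  done
}

# Load every IPVS module that modinfo can resolve for the running kernel.
# Needs root; nothing is loaded until this function is called.
load_ipvs_modules() {
  ipvs_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
  ls "$ipvs_dir" | ipvs_module_names | while IFS= read -r ipvs_mod; do
    echo "$ipvs_mod"
    if /sbin/modinfo -F filename "$ipvs_mod" >/dev/null 2>&1; then
      /sbin/modprobe "$ipvs_mod"
    fi
  done
}
```

Calling `load_ipvs_modules` as root should behave like the original loop, printing each module name as it goes.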

[root@master01 k8s]# cd /opt/

[root@master01 opt]# ls

etcd k8s kubernetes rh

[root@master01 opt]# vim proxy.sh

[root@master01 opt]# cd k8s

[root@master01 k8s]# ls

admin.sh kubeconfig

apiserver.sh kube-flannel.yml

calico.yaml kubernetes

controller-manager.sh kubernetes-server-linux-amd64.tar.gz

etcd-cert master

etcd.sh master.zip

etcd-v3.4.9-linux-amd64 proxy.sh

etcd-v3.4.9-linux-amd64.tar.gz scheduler.sh

k8s-cert token.sh

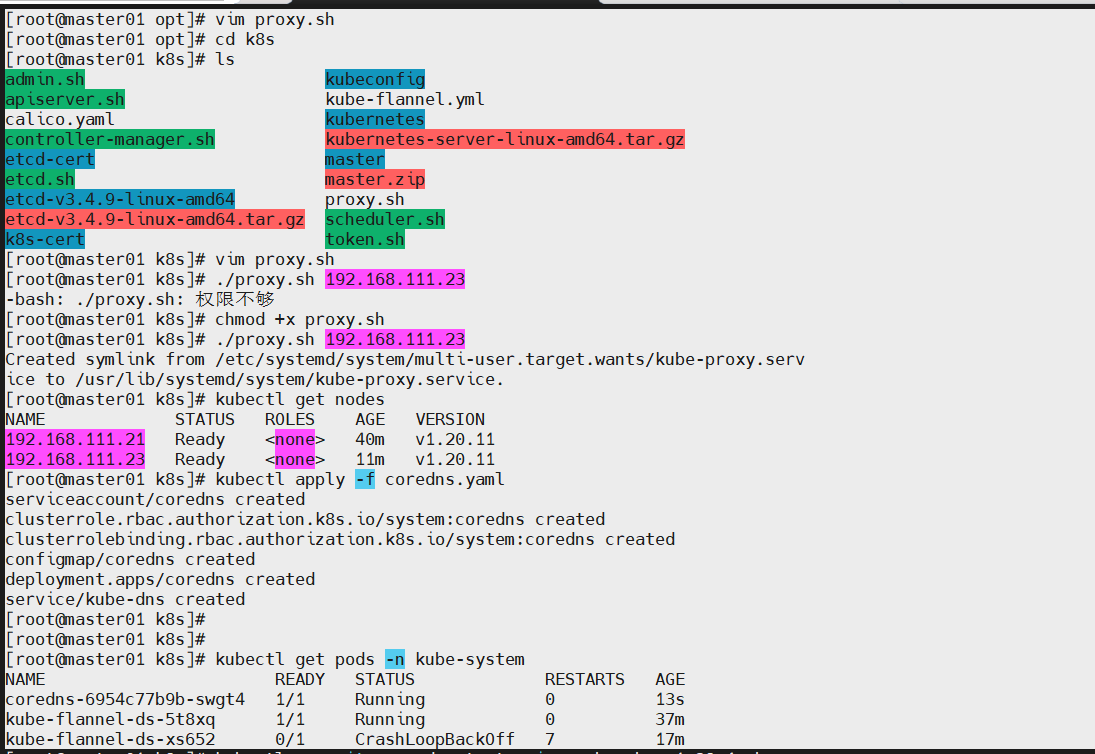

[root@master01 k8s]# vim proxy.sh

[root@master01 k8s]# ./proxy.sh 192.168.111.23

-bash: ./proxy.sh: Permission denied

[root@master01 k8s]# chmod +x proxy.sh

[root@master01 k8s]# ./proxy.sh 192.168.111.23

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@master01 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.111.21 Ready <none> 40m v1.20.11

192.168.111.23 Ready <none> 11m v1.20.11
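Both nodes now report `Ready`. For repeated checks, a minimal sketch of a filter that lists any node whose STATUS is not `Ready` might look like this. The function name `not_ready_nodes` is made up for this example, and it assumes the default `kubectl get nodes` table layout.

```shell
# Print the NAME of every node whose STATUS (second column) is not Ready.
# "Ready,SchedulingDisabled" still counts as Ready; "NotReady" does not.
# Reads the table printed by `kubectl get nodes` on stdin.
not_ready_nodes() {
  awk '$1 != "NAME" && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# Possible usage on the master (not executed here):
#   kubectl get nodes | not_ready_nodes
```

Empty output means every listed node is Ready.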

[root@master01 k8s]# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

[root@master01 k8s]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6954c77b9b-swgt4 1/1 Running 0 13s

kube-flannel-ds-5t8xq 1/1 Running 0 37m

kube-flannel-ds-xs652 0/1 CrashLoopBackOff 7 17m
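The second flannel pod is in `CrashLoopBackOff` here. As a minimal sketch, a filter like the one below can pick such pods out of the listing so they can be inspected with `kubectl logs` or `kubectl describe pod`. The function name `unhealthy_pods` is made up for this example, and it assumes the default table layout with STATUS in the third column.

```shell
# Print "NAME STATUS" for every pod that is neither Running nor Completed.
# Reads the table printed by `kubectl get pods` on stdin.
unhealthy_pods() {
  awk '$1 != "NAME" && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Possible usage on the master (not executed here):
#   kubectl get pods -n kube-system | unhealthy_pods
#   kubectl logs -n kube-system <pod name printed above>
```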

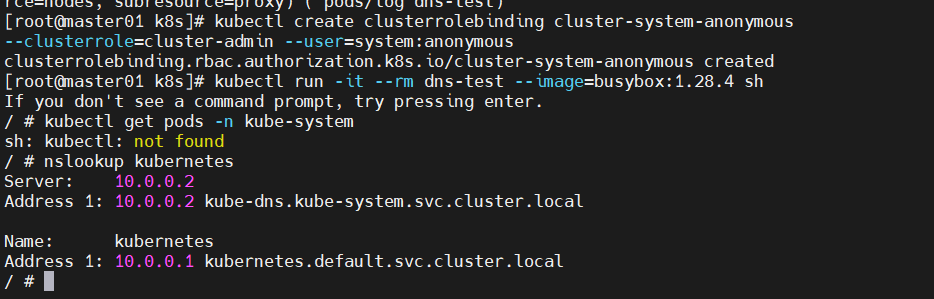

[root@master01 k8s]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

[root@master01 k8s]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # kubectl get pods -n kube-system

sh: kubectl: not found

/ # nslookup kubernetes

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

/ #

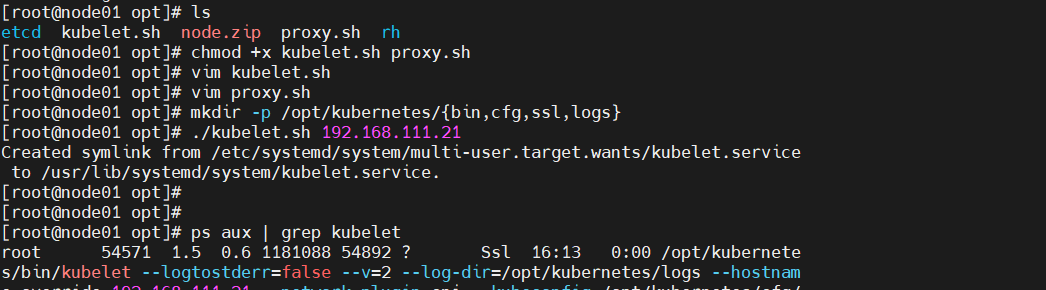

[root@node01 opt]# chmod +x kubelet.sh proxy.sh

[root@node01 opt]# vim kubelet.sh

[root@node01 opt]# vim proxy.sh

[root@node01 opt]# mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

[root@node01 opt]# ./kubelet.sh 192.168.111.21

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node01 opt]# ps aux | grep kubelet

root 54571 1.5 0.6 1181088 54892 ? Ssl 16:13 0:00 /opt/kubernetes/bin/kubelet --logtostderr=false --v=2 --log-dir=/opt/kubernetes/logs --hostnam

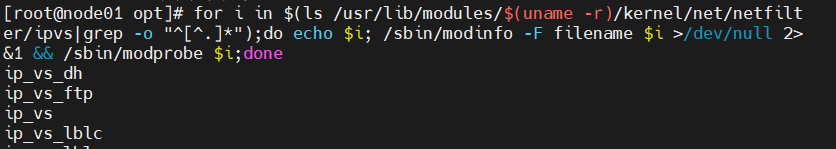

[root@node01 opt]# for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

ip_vs_dh

ip_vs_ftp

ip_vs

[root@node01 opt]# cd /opt/

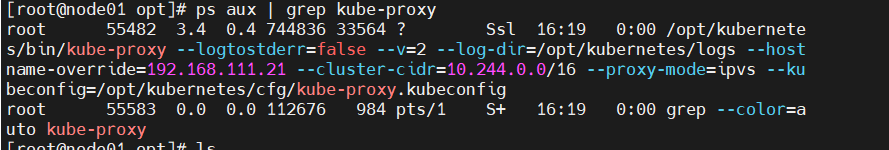

[root@node01 opt]# ./proxy.sh 192.168.111.21

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@node01 opt]# ps aux | grep kube-proxy

root 55482 3.4 0.4 744836 33564 ? S

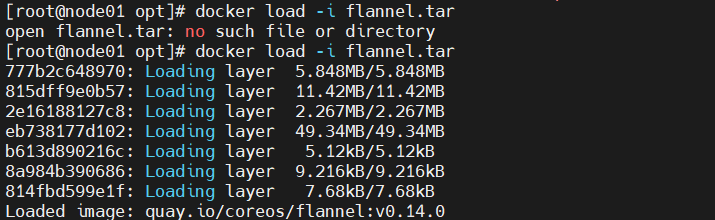

[root@node01 opt]# docker load -i flannel.tar

777b2c648970: Loading layer 5.848MB/5.848MB

815dff9e0b57: Loading layer 11.42MB/11.42MB

2e16188127c8: Loading layer 2.267MB/2.267MB

eb738177d102: Loading layer 49.34MB/49.34MB

b613d890216c: Loading layer 5.12kB/5.12kB

[root@node01 opt]# kubectl get csr

bash: kubectl: command not found...

[root@node01 opt]# docker load -i coredns.tar

225df95e717c: Loading layer 336.4kB/336.4kB

96d17b0b58a7: Loading layer 45.02MB/45.02MB

Loaded image: k8s.gcr.io/coredns:1.7.0

[root@node01 opt]# cd /opt/k8s

-bash: cd: /opt/k8s: No such file or directory

[root@node01 opt]# mkdir k8s

[root@node01 opt]# ls

cni coredns.tar k8s node.zip

cni-plugins-linux-amd64-v0.8.6.tgz etcd kubelet.sh proxy.sh

containerd flannel.tar kubernetes rh

[root@node01 opt]# cd k8s

[root@node01 k8s]# kubectl apply -f coredns.yaml

bash: kubectl: command not found...

[root@node01 k8s]# cd /opt

[root@node01 opt]# ls

cni coredns.tar kubelet.sh proxy.sh

cni-plugins-linux-amd64-v0.8.6.tgz etcd kubernetes rh

containerd flannel.tar node.zip

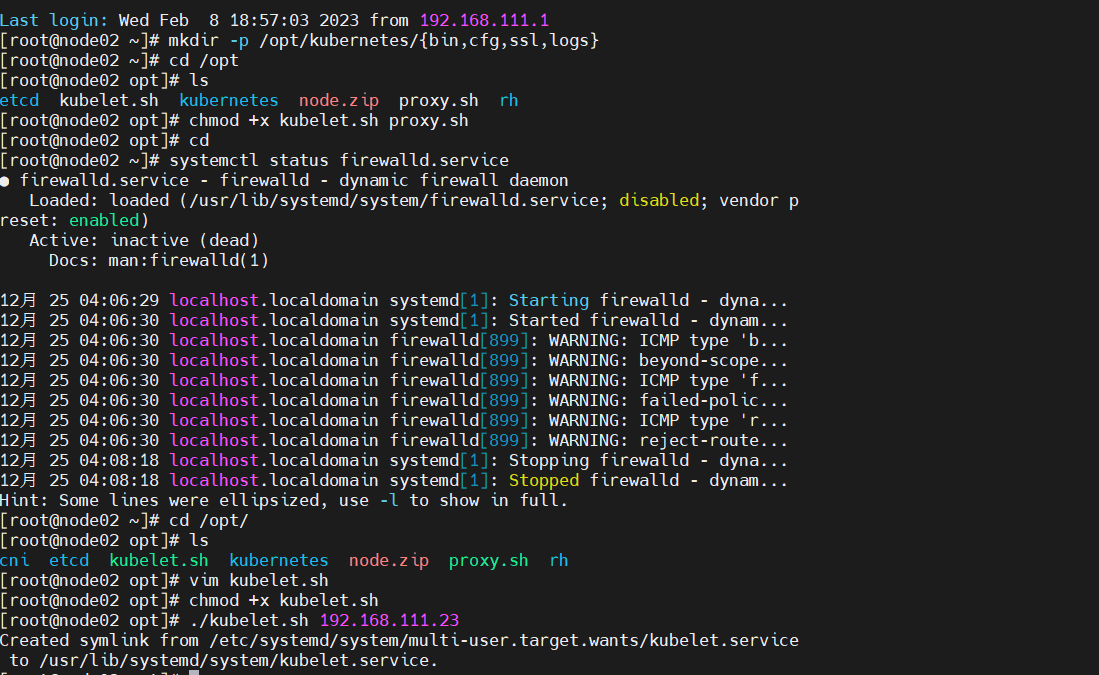

[root@node02 ~]# mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

[root@node02 ~]# cd /opt

[root@node02 opt]# ls

etcd kubelet.sh kubernetes node.zip proxy.sh rh

[root@node02 opt]# chmod +x kubelet.sh proxy.sh

[root@node02 opt]# cd

[root@node02 ~]# systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

Dec 25 04:06:29 localhost.localdomain systemd[1]: Starting firewalld - dyna...

Dec 25 04:06:30 localhost.localdomain systemd[1]: Started firewalld - dynam...

Dec 25 04:06:30 localhost.localdomain firewalld[899]: WARNING: ICMP type 'b...

Dec 25 04:06:30 localhost.localdomain firewalld[899]: WARNING: beyond-scope...

Dec 25 04:06:30 localhost.localdomain firewalld[899]: WARNING: ICMP type 'f...

Dec 25 04:06:30 localhost.localdomain firewalld[899]: WARNING: failed-polic...

Dec 25 04:06:30 localhost.localdomain firewalld[899]: WARNING: ICMP type 'r...

Dec 25 04:06:30 localhost.localdomain firewalld[899]: WARNING: reject-route...

Dec 25 04:08:18 localhost.localdomain systemd[1]: Stopping firewalld - dyna...

Dec 25 04:08:18 localhost.localdomain systemd[1]: Stopped firewalld - dynam...

Hint: Some lines were ellipsized, use -l to show in full.

[root@node02 ~]# cd /opt/

[root@node02 opt]# ls

cni etcd kubelet.sh kubernetes node.zip proxy.sh rh

[root@node02 opt]# vim kubelet.sh

[root@node02 opt]# chmod +x kubelet.sh

[root@node02 opt]# ./kubelet.sh 192.168.111.23

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
