(转)Lucene中文分词图解

本文记录Lucene+Paoding的使用方法图解:

一、下载Lucene(官网:http://archive.apache.org/dist/lucene/java/)本文中使用的是:2.9.4,下载后解压,Lucene所需要的基本jar文件如下列表:

lucene-core-2.9.4.jar Lucene核心jar

lucene-analyzers-2.9.4.jar Lucene分词jar

lucene-highlighter-2.9.4.jar Lucene高亮显示jar

二、由于Lucene中的中文分词实现不了我们所需要的功能,所以,需要下载第三方包(疱丁解牛)(官网:http://code.google.com/p/paoding/ )最新版本为:paoding-analysis-2.0.4-beta.zip 下载解压后,Lucene使用'疱丁'所需要的jar文件如下列表:

paoding-analysis.jar Lucene针对中文分词需要jar

commons-logging.jar 日志文件

{PADODING_HOME}/dic 疱丁解牛词典目录(PAODING_HOME:代表解压后的paoding目录)

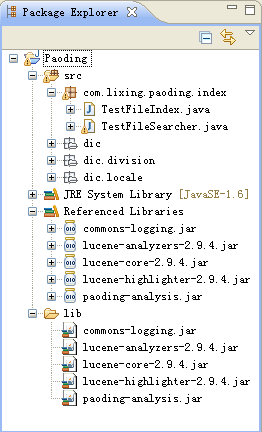

三、打开Eclipse并创建一个Java Project(项目名称和项目所在的路径不能包含空格),本例中Project Name:Paoding

1_1:在Paoding Project 创建一个Folder--lib(用于存放所有的jar),把前面所说的jar文件拷贝到lib目录下,并把lib下所有的jar添加到项目ClassPath下.

1_2:拷贝{PAODING_HOME}/dic目录 至 Paoding项目/src下整个项目结构图如下:

四、创建TestFileIndex.java类,实现功能是:把d:\data\*.txt所有文件读入内存中,并写入索引目录(d:\luceneindex)下

TestFileIndex.java

package com.lixing.paoding.index;

import java.io.BufferedReader;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import net.paoding.analysis.analyzer.PaodingAnalyzer;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class TestFileIndex {

public static void main(String[] args) throws Exception {

String dataDir="d:/data";

String indexDir="d:/luceneindex";

File[] files=new File(dataDir).listFiles();

System.out.println(files.length);

Analyzer analyzer=new PaodingAnalyzer();

Directory dir=FSDirectory.open(new File(indexDir));

IndexWriter writer=new IndexWriter(dir, analyzer, IndexWriter.MaxFieldLength.UNLIMITED);

for(int i=0;i<files.length;i++){

StringBuffer strBuffer=new StringBuffer();

String line="";

FileInputStream is=new FileInputStream(files[i].getCanonicalPath());

BufferedReader reader=new BufferedReader(new InputStreamReader(is,"gb2312"));

line=reader.readLine();

while(line != null){

strBuffer.append(line);

strBuffer.append("\n");

line=reader.readLine();

}

Document doc=new Document();

doc.add(new Field("fileName", files[i].getName(), Field.Store.YES, Field.Index.ANALYZED));

doc.add(new Field("contents", strBuffer.toString(), Field.Store.YES, Field.Index.ANALYZED));

writer.addDocument(doc);

reader.close();

is.close();

}

writer.optimize();

writer.close();

dir.close();

System.out.println("ok");

}

}

import java.io.BufferedReader;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import net.paoding.analysis.analyzer.PaodingAnalyzer;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class TestFileIndex {

public static void main(String[] args) throws Exception {

String dataDir="d:/data";

String indexDir="d:/luceneindex";

File[] files=new File(dataDir).listFiles();

System.out.println(files.length);

Analyzer analyzer=new PaodingAnalyzer();

Directory dir=FSDirectory.open(new File(indexDir));

IndexWriter writer=new IndexWriter(dir, analyzer, IndexWriter.MaxFieldLength.UNLIMITED);

for(int i=0;i<files.length;i++){

StringBuffer strBuffer=new StringBuffer();

String line="";

FileInputStream is=new FileInputStream(files[i].getCanonicalPath());

BufferedReader reader=new BufferedReader(new InputStreamReader(is,"gb2312"));

line=reader.readLine();

while(line != null){

strBuffer.append(line);

strBuffer.append("\n");

line=reader.readLine();

}

Document doc=new Document();

doc.add(new Field("fileName", files[i].getName(), Field.Store.YES, Field.Index.ANALYZED));

doc.add(new Field("contents", strBuffer.toString(), Field.Store.YES, Field.Index.ANALYZED));

writer.addDocument(doc);

reader.close();

is.close();

}

writer.optimize();

writer.close();

dir.close();

System.out.println("ok");

}

}

五、创建TestFileSearcher.java,实现在的功能是:读取索引中的内容:

TestFileSearcerh.java

package com.lixing.paoding.index;

import java.io.File;

import net.paoding.analysis.analyzer.PaodingAnalyzer;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.Version;

public class TestFileSearcher {

public static void main(String[] args) throws Exception {

String indexDir = "d:/luceneindex";

Analyzer analyzer = new PaodingAnalyzer();

Directory dir = FSDirectory.open(new File(indexDir));

IndexSearcher searcher = new IndexSearcher(dir, true);

QueryParser parser = new QueryParser(Version.LUCENE_29, "contents",analyzer);

Query query = parser.parse("呼救");

//Term term=new Term("fileName", "大学");

//TermQuery query=new TermQuery(term);

TopDocs docs=searcher.search(query, 1000);

ScoreDoc[] hits=docs.scoreDocs;

System.out.println(hits.length);

for(int i=0;i<hits.length;i++){

Document doc=searcher.doc(hits[i].doc);

System.out.print(doc.get("fileName")+"--:\n");

System.out.println(doc.get("contents")+"\n");

}

searcher.close();

dir.close();

}

}

import java.io.File;

import net.paoding.analysis.analyzer.PaodingAnalyzer;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.Version;

public class TestFileSearcher {

public static void main(String[] args) throws Exception {

String indexDir = "d:/luceneindex";

Analyzer analyzer = new PaodingAnalyzer();

Directory dir = FSDirectory.open(new File(indexDir));

IndexSearcher searcher = new IndexSearcher(dir, true);

QueryParser parser = new QueryParser(Version.LUCENE_29, "contents",analyzer);

Query query = parser.parse("呼救");

//Term term=new Term("fileName", "大学");

//TermQuery query=new TermQuery(term);

TopDocs docs=searcher.search(query, 1000);

ScoreDoc[] hits=docs.scoreDocs;

System.out.println(hits.length);

for(int i=0;i<hits.length;i++){

Document doc=searcher.doc(hits[i].doc);

System.out.print(doc.get("fileName")+"--:\n");

System.out.println(doc.get("contents")+"\n");

}

searcher.close();

dir.close();

}

}

本文出自 “李新博客” 博客,请务必保留此出处http://kinglixing.blog.51cto.com/3421535/702663