Structuring and Saving Data

1. Save the body text of a news article to a text file.

       soup = BeautifulSoup(res.text, 'html.parser')
       content = soup.select('.show-content')[0].text
       with open('news.txt', 'w', encoding='utf-8') as f:
           f.write(content)

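2. Structure the news data as a list of dictionaries:

- The details of a single news item --> a dictionary, news

- All news items on one list page --> a list, built with newsls.append(news)

- All news items from all list pages --> one combined list, built with newstotal.extend(newsls)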
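The three levels above can be sketched without touching the network. This is only an illustration: the helper names `build_news` and `collect_page` and the sample rows are hypothetical stand-ins for the scraped values, not part of the assignment.

```python
# Hypothetical sample rows standing in for scraped list pages (not real data).
pages = [
    [('Title A', 'Office X', 120), ('Title B', 'Office Y', 45)],
    [('Title C', 'Office X', 300)],
]


def build_news(title, source, clickcount):
    """Return the details of one news item as a dictionary."""
    return {'title': title, 'source': source, 'clickcount': clickcount}


def collect_page(rows):
    """Return the list of news dictionaries for one list page."""
    newsls = []
    for title, source, clickcount in rows:
        news = build_news(title, source, clickcount)
        newsls.append(news)  # append adds one dictionary to the page list
    return newsls


newstotal = []
for rows in pages:
    newstotal.extend(collect_page(rows))  # extend merges a page list into the total

print(len(newstotal))
```

Using `append` for single items and `extend` for whole page lists keeps `newstotal` flat, which is the shape `pandas.DataFrame` expects.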

3. Install pandas, then create a DataFrame object df with pandas.DataFrame(newstotal).

4. Use df to save the extracted data to a CSV or Excel file.
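Steps 3 and 4 might look like the following minimal sketch. The records and the output path are made up for illustration. The Excel call is left as a comment because it needs the optional openpyxl package.

```python
import os
import tempfile

import pandas

# Hypothetical records in the list-of-dictionaries shape described above.
newstotal = [
    {'title': 'Title A', 'source': 'Office X', 'clickcount': 120},
    {'title': 'Title B', 'source': 'Office Y', 'clickcount': 45},
    {'title': 'Title C', 'source': 'Office X', 'clickcount': 300},
]

# Each dictionary becomes a row; the keys become the column names.
df = pandas.DataFrame(newstotal)

# Write to a temporary location so the example does not clutter the working directory.
out_path = os.path.join(tempfile.gettempdir(), 'news.csv')
df.to_csv(out_path, index=False, encoding='utf-8-sig')
# df.to_excel('news.xlsx', index=False)  # Excel output; requires openpyxl

# Read the file back to confirm that the round trip preserves the table.
restored = pandas.read_csv(out_path, encoding='utf-8-sig')
print(restored.shape)
```

The `utf-8-sig` encoding is a choice made here so that spreadsheet programs detect the encoding of Chinese text; plain `utf-8` also works for pandas itself.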

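5. Analyze the data with the functions and methods that pandas provides:

- Extract the first 6 rows of the click count, title, and source columns.

- Extract the news published by '学校综合办' with a click count above 3000.

- Extract the news published by '国际学院' or '学生工作处'.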
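The three queries above can be sketched on a small in-memory table. The rows are invented for illustration, and the column names `clickcount`, `title`, and `source` are assumed to match the keys used when the dictionaries were built.

```python
import pandas

# Invented rows; only the source names come from the task description.
df = pandas.DataFrame([
    {'title': 'News 1', 'source': '学校综合办', 'clickcount': 3500},
    {'title': 'News 2', 'source': '国际学院', 'clickcount': 800},
    {'title': 'News 3', 'source': '学生工作处', 'clickcount': 1200},
    {'title': 'News 4', 'source': '学校综合办', 'clickcount': 2100},
    {'title': 'News 5', 'source': '国际学院', 'clickcount': 3200},
    {'title': 'News 6', 'source': '学校综合办', 'clickcount': 3001},
    {'title': 'News 7', 'source': '学生工作处', 'clickcount': 90},
])

# First 6 rows of three selected columns.
first_six = df[['clickcount', 'title', 'source']].head(6)

# Boolean masks are combined with &; each condition needs its own parentheses.
popular = df[(df['source'] == '学校综合办') & (df['clickcount'] > 3000)]

# isin matches any of several values in one column.
two_offices = df[df['source'].isin(['国际学院', '学生工作处'])]

print(first_six)
print(popular)
print(two_offices)
```

`isin` is used for the last query because "published by A and B" means a row qualifies when its source is either one, which `&` on two equality tests would never satisfy.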

Complete script:

    import re

    import pandas
    import requests
    from bs4 import BeautifulSoup

    homepage = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    # newsurl = 'http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0411/9205.html'

    # Labels in the .show-info line mapped to dictionary keys.
    INFO_LABELS = {
        '发布时间': 'distributetime',
        '作者': 'author',
        '审核': 'trial',
        '来源': 'source',
        '摄影': 'photograph',
    }


    def get_click_count(click_url):
        """Return the click count reported by the counter API as an int."""
        res = requests.get(click_url)
        res.encoding = 'utf-8'
        text = BeautifulSoup(res.text, 'html.parser').text
        # The response contains a fragment of the form ('#hits').html('1234');
        return int(re.search(r"\('#hits'\)\.html\('(\d+)'\)", text).group(1))


    def get_news_detail(news_url):
        """Fetch one news page and return the fields of its .show-info line."""
        res = requests.get(news_url)
        res.encoding = 'utf-8'
        soup = BeautifulSoup(res.text, 'html.parser')
        info = soup.select('.show-info')[0].text
        detail = {}
        for label, key in INFO_LABELS.items():
            # Accept an ASCII or a full-width colon after the label.
            match = re.search(label + r'[::](\S*)', info)
            detail[key] = match.group(1) if match else ''
        return detail


    def get_list_page(list_url):
        """Return a list of dictionaries, one per news item on a list page."""
        res = requests.get(list_url)
        res.encoding = 'utf-8'
        soup = BeautifulSoup(res.text, 'html.parser')
        newsls = []
        for item in soup.select('.news-list')[0].select('li'):
            news_url = item.a.attrs['href']
            # Take the numeric id from the URL; rstrip('.html') strips characters, not a suffix.
            news_id = re.search(r'(\d+)\.html', news_url).group(1)
            click_url = 'http://oa.gzcc.cn/api.php?op=count&id=' + news_id + '&modelid=80'
            news = get_news_detail(news_url)  # one request per news page
            news['title'] = item.select('.news-list-title')[0].text
            news['describe'] = item.select('.news-list-description')[0].text
            news['clickcount'] = get_click_count(click_url)
            newsls.append(news)  # a new dictionary for every news item
        return newsls


    res = requests.get(homepage)  # returns a Response object
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    newscount = int(soup.select('.a1')[0].text.split('条')[0])
    newspages = newscount // 10 + 1  # number of list pages, assuming 10 items per page

    newstotal = []
    for i in range(2, 6):  # list pages 2 to 5 only
        page = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
        newstotal.extend(get_list_page(page))

    df = pandas.DataFrame(newstotal)
    print(df)
    df.to_excel('text.xlsx')  # requires openpyxl
    print(df[['clickcount', 'title', 'source']].head(6))
    popular = df[(df['clickcount'] > 3000) & (df['source'] == '学校综合办')]
    print(popular)

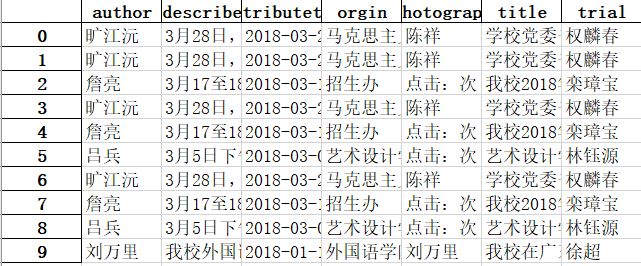

The screenshot is as follows: