hadoop-3.3.0 + hbase-2.2.4 伪分布式模式部署

一、下载hadoop-3.3.0-aarch64.tar.gz解压到/home/hadoop-3.3.0 目录

二、设置环境变量 /etc/profile 文件末尾添加红色内容:

if [ -n "${BASH_VERSION-}" ] ; then if [ -f /etc/bashrc ] ; then # Bash login shells run only /etc/profile # Bash non-login shells run only /etc/bashrc # Check for double sourcing is done in /etc/bashrc. . /etc/bashrc fi fi export JAVA_HOME=/usr/java/jdk-11.0.4 export JRE_HOME=${JAVA_HOME}/jre export HADOOP_HOME=/home/hadoop-3.3.0 export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

保存文件后,执行 #source /etc/profile 使文件生效。

三、设置 /home/hadoop-3.3.0/etc/hadoop/hadoop-env.sh 文件的JAVA_HOME参数:

# The java implementation to use. By default, this environment # variable is REQUIRED on ALL platforms except OS X! export JAVA_HOME=/usr/java/jdk-11.0.4 # Location of Hadoop. By default, Hadoop will attempt to determine # this location based upon its execution path. # export HADOOP_HOME=

四、设置/home/hadoop-3.3.0/etc/hadoop 下的hdfs-site.xml 、core-site.xml、mapred-site.xml、yarn-site.xml、start-dfs.sh、stop-dfs.sh、start-yarn.sh、stop-yarn.sh 文件

hdfs-site.xml :

<?xml version="1.0" encoding="UTF-8"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. --> <!-- Put site-specific property overrides in this file. --> <configuration> <property> <name>dfs.replication</name> <value>1</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>/home/hadoop-3.3.0/namenode/name</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>/home/hadoop-3.3.0/datanode/data</value> </property> </configuration>

core-site.xml :

<?xml version="1.0" encoding="UTF-8"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. --> <!-- Put site-specific property overrides in this file. --> <configuration> <property> <name>fs.defaultFS</name> <value>hdfs://iZwz974yt1dail4ihlqh6fZ{主机hostname}:9000</value> </property> <property> <name>hadoop.tmp.dir</name> <value>/home/hadoop-3.3.0/tmp</value> </property> </configuration>

mapred-site.xml:

<?xml version="1.0"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. --> <!-- Put site-specific property overrides in this file. --> <configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration>

yarn-site.xml:

<?xml version="1.0"?> <!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. --> <configuration> <!-- Site specific YARN configuration properties --> <property> <name>yarn.resourcemanager.hostname</name> <value>iZwz974yt1dail4ihlqh6fZ{主机hostname}</value> </property> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.nodemanager.env-whitelist</name> <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value> </property> </configuration>

start-dfs.sh、stop-dfs.sh:

#!/usr/bin/env bash HDFS_DATANODE_USER=root HDFS_DATANODE_SECURE_USER=hdfs HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root # Licensed to the Apache Software Foundation (ASF) under one or more # contributor license agreements. See the NOTICE file distributed with # this work for additional information regarding copyright ownership.

start-yarn.sh、stop-yarn.sh:

#!/usr/bin/env bash YARN_RESOURCEMANAGER_USER=root HDFS_DATANODE_SECURE_USER=yarn YARN_NODEMANAGER_USER=root # Licensed to the Apache Software Foundation (ASF) under one or more # contributor license agreements. See the NOTICE file distributed with # this work for additional information regarding copyright ownership.

五、配置/etc/ssh/sshd_config 文件:

# $OpenBSD: sshd_config,v 1.103 2018/04/09 20:41:22 tj Exp $ # This is the sshd server system-wide configuration file. See # sshd_config(5) for more information. # This sshd was compiled with PATH=/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin # The strategy used for options in the default sshd_config shipped with # OpenSSH is to specify options with their default value where # possible, but leave them commented. Uncommented options override the # default value. # If you want to change the port on a SELinux system, you have to tell # SELinux about this change. # semanage port -a -t ssh_port_t -p tcp #PORTNUMBER # #Port 22 #AddressFamily any #ListenAddress 0.0.0.0 #ListenAddress :: HostKey /etc/ssh/ssh_host_rsa_key HostKey /etc/ssh/ssh_host_ecdsa_key HostKey /etc/ssh/ssh_host_ed25519_key # Ciphers and keying #RekeyLimit default none # System-wide Crypto policy: # This system is following system-wide crypto policy. The changes to # Ciphers, MACs, KexAlgoritms and GSSAPIKexAlgorithsm will not have any # effect here. They will be overridden by command-line options passed on # the server start up. # To opt out, uncomment a line with redefinition of CRYPTO_POLICY= # variable in /etc/sysconfig/sshd to overwrite the policy. # For more information, see manual page for update-crypto-policies(8). # Logging #SyslogFacility AUTH #LogLevel INFO # Authentication: #LoginGraceTime 2m #StrictModes yes #MaxAuthTries 6 #MaxSessions 10 PubkeyAuthentication yes # The default is to check both .ssh/authorized_keys and .ssh/authorized_keys2 # but this is overridden so installations will only check .ssh/authorized_keys AuthorizedKeysFile .ssh/authorized_keys #AuthorizedPrincipalsFile none #AuthorizedKeysCommand none #AuthorizedKeysCommandUser nobody # For this to work you will also need host keys in /etc/ssh/ssh_known_hosts #HostbasedAuthentication no # Change to yes if you don't trust ~/.ssh/known_hosts for # HostbasedAuthentication #IgnoreUserKnownHosts no # Don't read the user's ~/.rhosts and ~/.shosts files #IgnoreRhosts yes # To disable tunneled clear text passwords, change to no here! #PermitEmptyPasswords no # Change to no to disable s/key passwords #ChallengeResponseAuthentication yes ChallengeResponseAuthentication no # Kerberos options #KerberosAuthentication no #KerberosOrLocalPasswd yes #KerberosTicketCleanup yes #KerberosGetAFSToken no #KerberosUseKuserok yes # GSSAPI options GSSAPIAuthentication yes GSSAPICleanupCredentials no #GSSAPIStrictAcceptorCheck yes #GSSAPIKeyExchange no #GSSAPIEnablek5users no # Set this to 'yes' to enable PAM authentication, account processing, # and session processing. If this is enabled, PAM authentication will # be allowed through the ChallengeResponseAuthentication and # PAM authentication via ChallengeResponseAuthentication may bypass # the setting of "PermitRootLogin without-password". # If you just want the PAM account and session checks to run without # and ChallengeResponseAuthentication to 'no'. # WARNING: 'UsePAM no' is not supported in Fedora and may cause several # problems. UsePAM yes #AllowAgentForwarding yes #AllowTcpForwarding yes #GatewayPorts no X11Forwarding yes #X11DisplayOffset 10 #X11UseLocalhost yes #PermitTTY yes # It is recommended to use pam_motd in /etc/pam.d/sshd instead of PrintMotd, # as it is more configurable and versatile than the built-in version. PrintMotd no #PrintLastLog yes #TCPKeepAlive yes #PermitUserEnvironment no #Compression delayed #ClientAliveInterval 0 #ClientAliveCountMax 3 #UseDNS no #PidFile /var/run/sshd.pid #MaxStartups 10:30:100 #PermitTunnel no #ChrootDirectory none #VersionAddendum none # no default banner path #Banner none # Accept locale-related environment variables AcceptEnv LANG LC_CTYPE LC_NUMERIC LC_TIME LC_COLLATE LC_MONETARY LC_MESSAGES AcceptEnv LC_PAPER LC_NAME LC_ADDRESS LC_TELEPHONE LC_MEASUREMENT AcceptEnv LC_IDENTIFICATION LC_ALL LANGUAGE AcceptEnv XMODIFIERS # override default of no subsystems Subsystem sftp /usr/libexec/openssh/sftp-server # Example of overriding settings on a per-user basis #Match User anoncvs # X11Forwarding no # AllowTcpForwarding no # PermitTTY no # ForceCommand cvs server UseDNS no AddressFamily inet SyslogFacility AUTHPRIV PermitRootLogin yes PasswordAuthentication yes

执行 service sshd reload 使生效。

六、对namenode进行格式化,执行命令:

# hdfs namenode -format

七、启动: 进入 /home/hadoop-3.3.0/sbin 目录执行:

#./start-all.sh

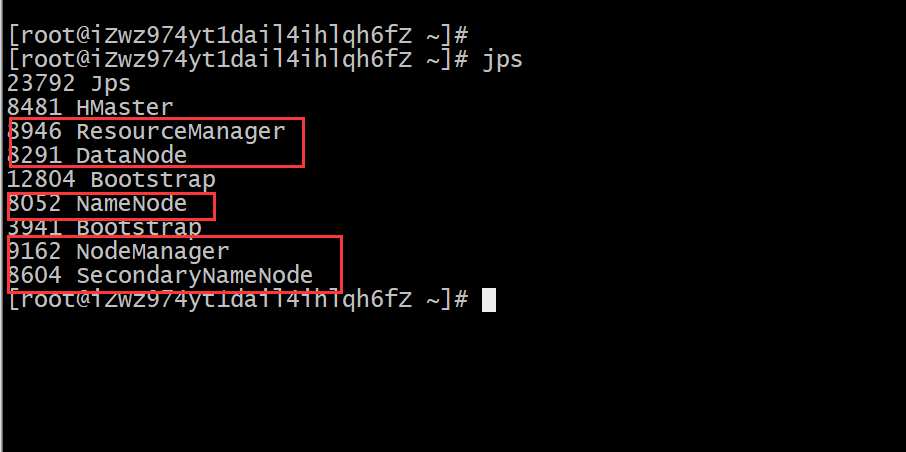

jps命令看到以下五个进程,表示启动成功:

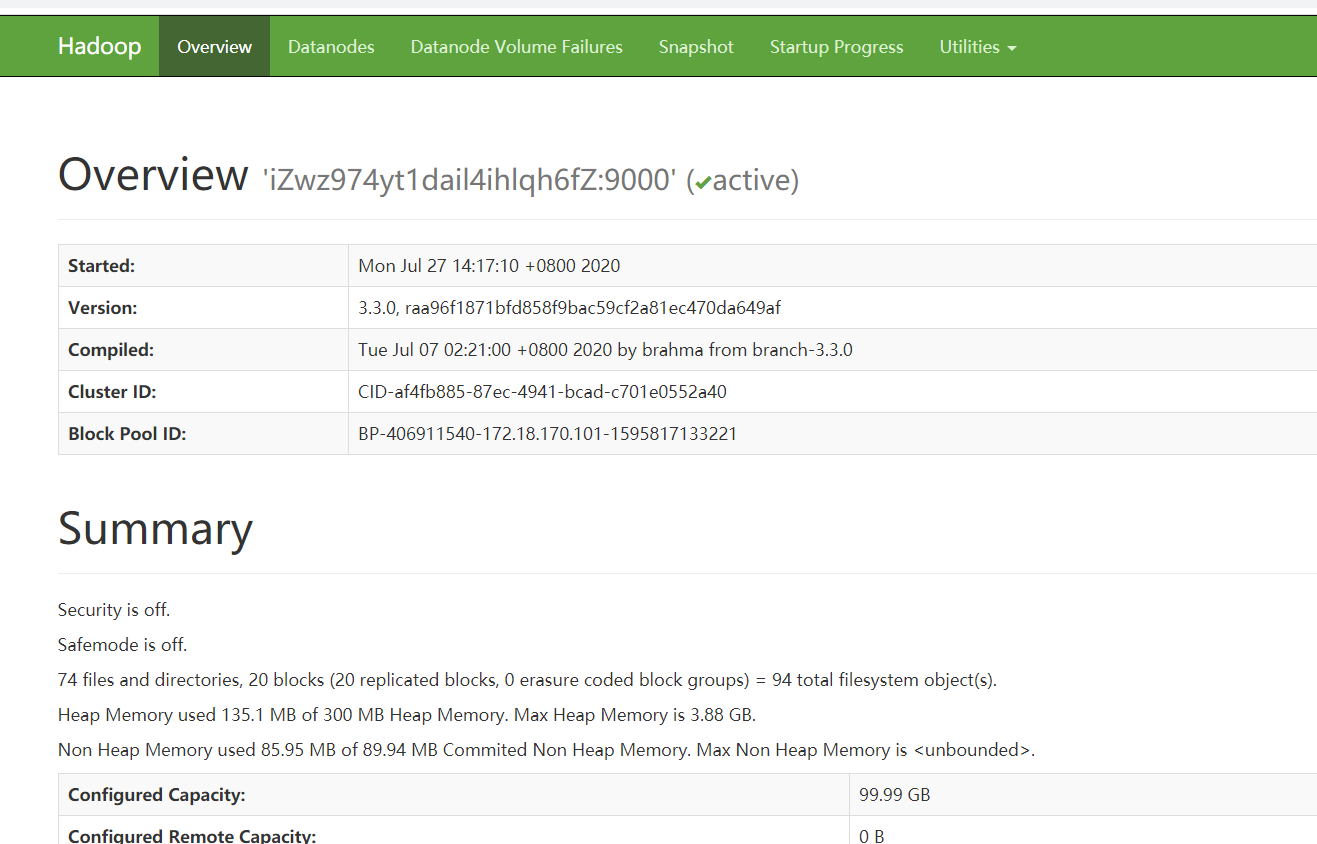

访问hadoop web页面: http://主机IP:9870/

相关问题: 启动过程出现 Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).错误:

解决办法,复制导入公钥就可以了,SSH链接需要使用公钥认证:

切换到ssh目录:cd ~/.ssh/

创建密钥:ssh-keygen -t rsa -P ""

将公钥追加到authorized_keys文件中去:cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

八、与hbase集成

8.1 复制hdfs-site.xml和core-site.xml 文件到 /home/hbase-2.2.4/conf 目录下;

8.2 确保hbase-site.xml的hbase.rootdir项配置与core-site.xml的fs.defaultFS对应

<property> <name>hbase.rootdir</name> <value>hdfs://iZwz974yt1dail4ihlqh6fZ:9000/hbase</value> <description> </description> </property>

<property> <name>fs.defaultFS</name> <value>hdfs://iZwz974yt1dail4ihlqh6fZ:9000</value> </property>

浙公网安备 33010602011771号

浙公网安备 33010602011771号