Prometheus

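What is Prometheus?

Prometheus is an open-source system monitoring and alerting framework built around its own time series database (TSDB). Its design was inspired by Google's Borgmon, much as Kubernetes grew out of Google's Borg.

Prometheus was originally built at SoundCloud by former Google engineers and then open-sourced (official site: https://prometheus.io). It joined the Cloud Native Computing Foundation (CNCF) in 2016 as the foundation's second hosted project after Kubernetes, and it is often described as second only to Kubernetes in popularity among CNCF projects.

Prometheus features

- A multi-dimensional data model: time series are identified by a metric name and a set of key/value pairs (labels).

- PromQL, a flexible language for querying and aggregating the data.

- No dependency on external storage: Prometheus is itself a time series database with local storage, it can integrate with remote storage systems, and each Prometheus server is autonomous.

- Applications expose a metrics endpoint and Prometheus collects the data with an HTTP pull model; short-lived jobs can push data through the Pushgateway instead.

- Targets are found through dynamic service discovery or static configuration.

- Multiple graphing and dashboarding options; it pairs especially well with Grafana.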
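To make the data model concrete: one time series is a metric name plus a set of key/value labels, and each sample adds a value. The sketch below parses a single line of the text exposition format into those parts. The sample metric and the helper name `parse_sample` are illustrative assumptions, and the parser only handles simple lines (no timestamps, no escaped quotes inside label values).

```python
import re

# metric_name{label="value",...} value   (the label block is optional)
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_sample(line):
    """Split one exposition-format line into (name, labels, value)."""
    match = SAMPLE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not a simple sample line: {line!r}")
    name, raw_labels, raw_value = match.groups()
    labels = dict(LABEL_RE.findall(raw_labels or ""))
    return name, labels, float(raw_value)


# Hypothetical sample, not taken from a real scrape.
name, labels, value = parse_sample(
    'http_requests_total{method="POST",handler="/api"} 1027'
)
print(name, labels, value)
```

Two lines with the same metric name but different label values are distinct time series, which is what lets PromQL filter and aggregate by label.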

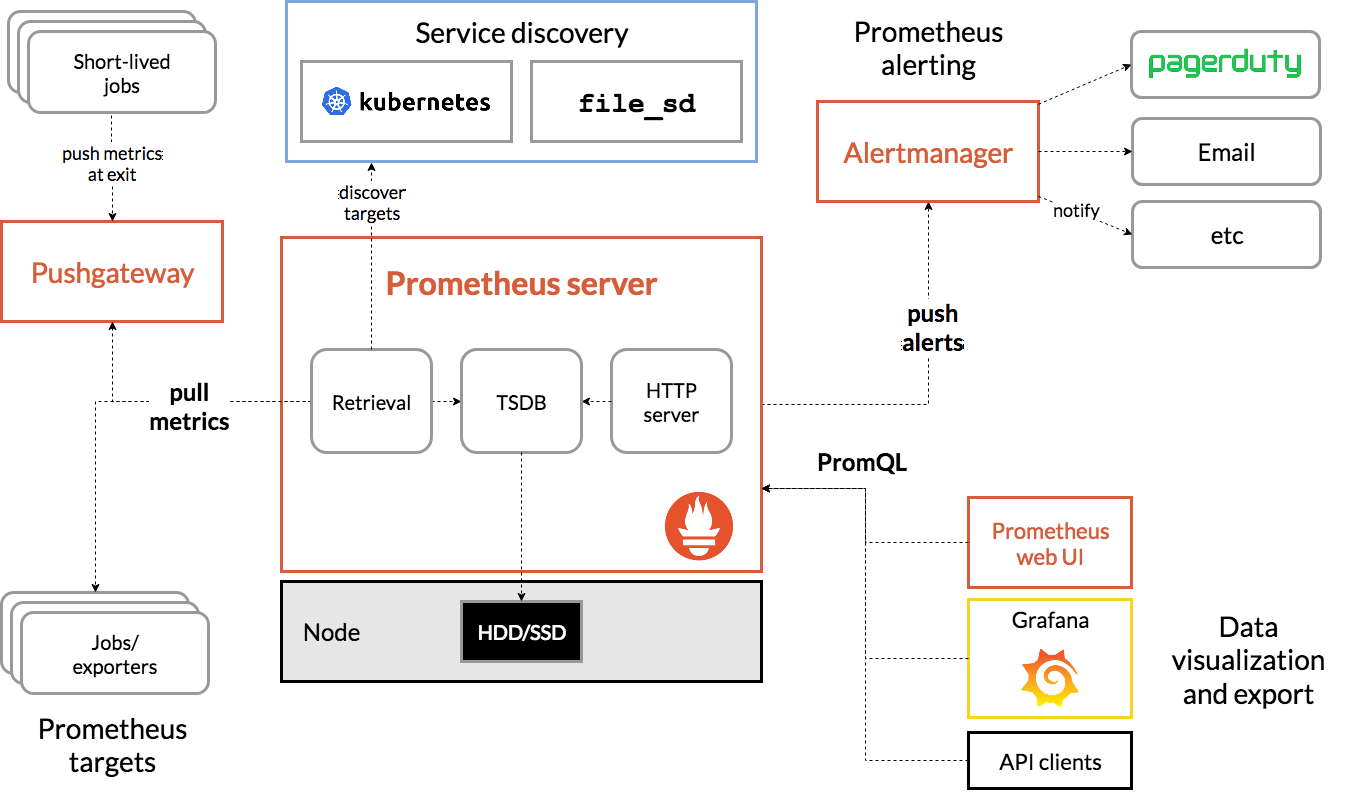

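Prometheus architecture and ecosystem components

- Prometheus Server: the core component of the ecosystem. It scrapes and stores time series data, serves queries, and manages the alerting rule configuration.

- Alertmanager: the alerting component. Prometheus Server sends alerts to Alertmanager, which routes them to the configured people or groups. Alertmanager can notify through channels such as email, webhooks, and WeChat; others such as DingTalk or SMS are typically reached through webhook integrations.

- Grafana: visualizes the data and makes it easier to query and observe.

- Push Gateway: Prometheus normally pulls data, but some monitored jobs are short-lived and their data would be lost if it is not scraped in time. Clients can push such data to the Push Gateway, and Prometheus then pulls it from there.

- Exporter: collects monitoring data. For example, node_exporter collects host metrics and mysqld_exporter collects MySQL metrics. The Exporter then exposes an endpoint such as /metrics, from which Prometheus scrapes the data.

- PromQL: not really a component of Prometheus, but the query language used to retrieve the data.

- Service Discovery: automatically discovers monitoring targets. Commonly used mechanisms are Kubernetes, Consul, Eureka, and file-based discovery.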
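The pull model described above means an Exporter is, at its core, an HTTP server that answers GET /metrics with plain text. The following is a minimal sketch using only the Python standard library; real exporters normally use an official client library instead. The metric name `demo_uptime_seconds` and the use of a random local port are assumptions made for the demo.

```python
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

START = time.time()


def render_metrics():
    """Render one gauge in the Prometheus text exposition format."""
    uptime = time.time() - START
    return (
        "# HELP demo_uptime_seconds Seconds since the exporter started.\n"
        "# TYPE demo_uptime_seconds gauge\n"
        f"demo_uptime_seconds {uptime:.3f}\n"
    )


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics().encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


# Port 0 asks the OS for any free port; a real exporter uses a fixed one.
server = HTTPServer(("127.0.0.1", 0), MetricsHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Act as Prometheus would: pull the metrics over HTTP.
# The empty ProxyHandler bypasses any configured proxy for this local call.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
url = f"http://127.0.0.1:{server.server_port}/metrics"
with opener.open(url, timeout=5) as response:
    scraped = response.read().decode("utf-8")
server.shutdown()
print(scraped)
```

Prometheus repeats that GET on every scrape interval, so the handler only has to report the current value; storing history is the server's job.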

Installing Prometheus

kube-prometheus project: https://github.com/prometheus-operator/kube-prometheus/

Quick start guide: https://prometheus-operator.dev/docs/prologue/quick-start/

git clone -b release-0.8 https://github.com/prometheus-operator/kube-prometheus.git

Install the Prometheus Operator

cd kube-prometheus/manifests/

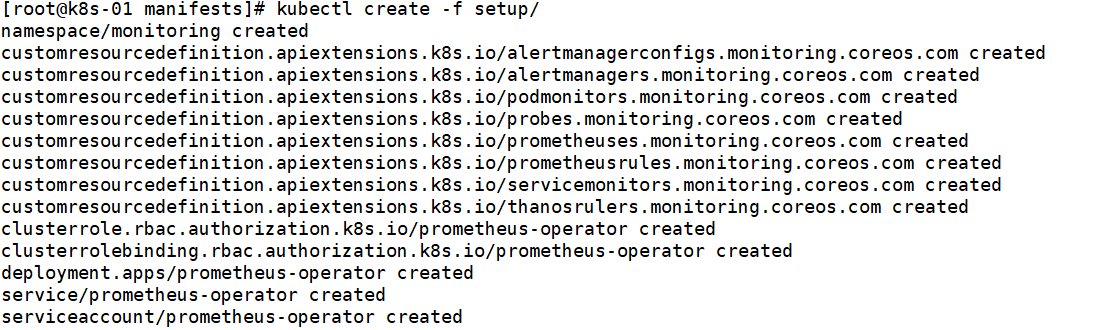

kubectl create -f setup/

- Check the Operator status

kubectl get pod -n monitoring

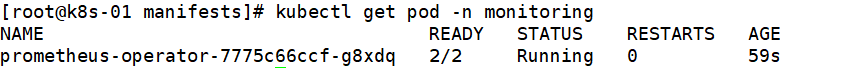

Install Prometheus

kubectl create -f .

- Check the Prometheus status

kubectl get pod -n monitoring

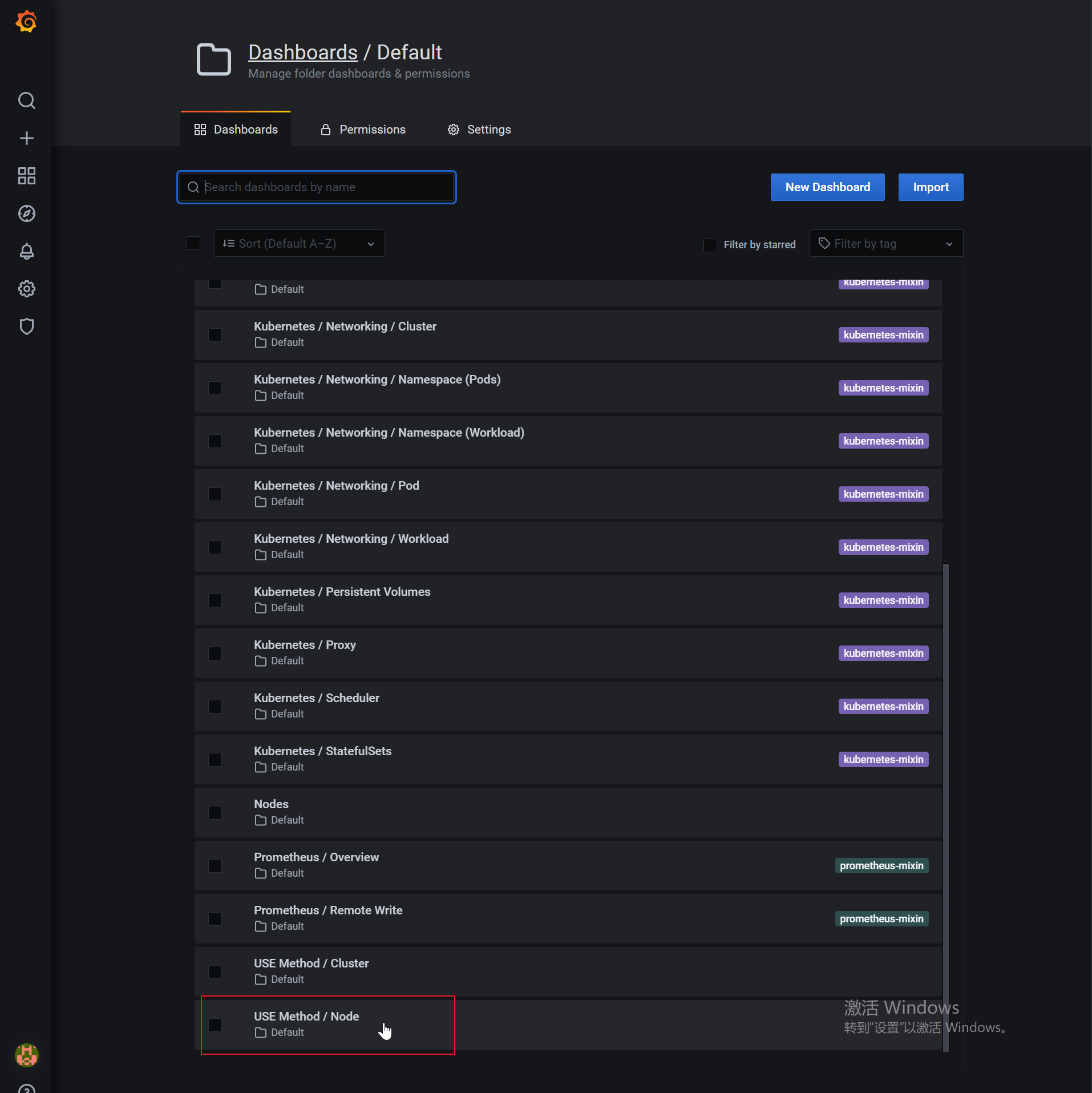

访问Grafana

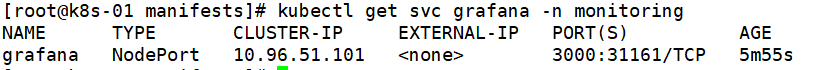

- 将Grafana的Service改成NodePort类型

kubectl edit svc grafana -n monitoring

kubectl get svc grafana -n monitoring

Grafana is then reachable at any node IP on the assigned NodePort (31161 in this example).

The default Grafana login is admin/admin.

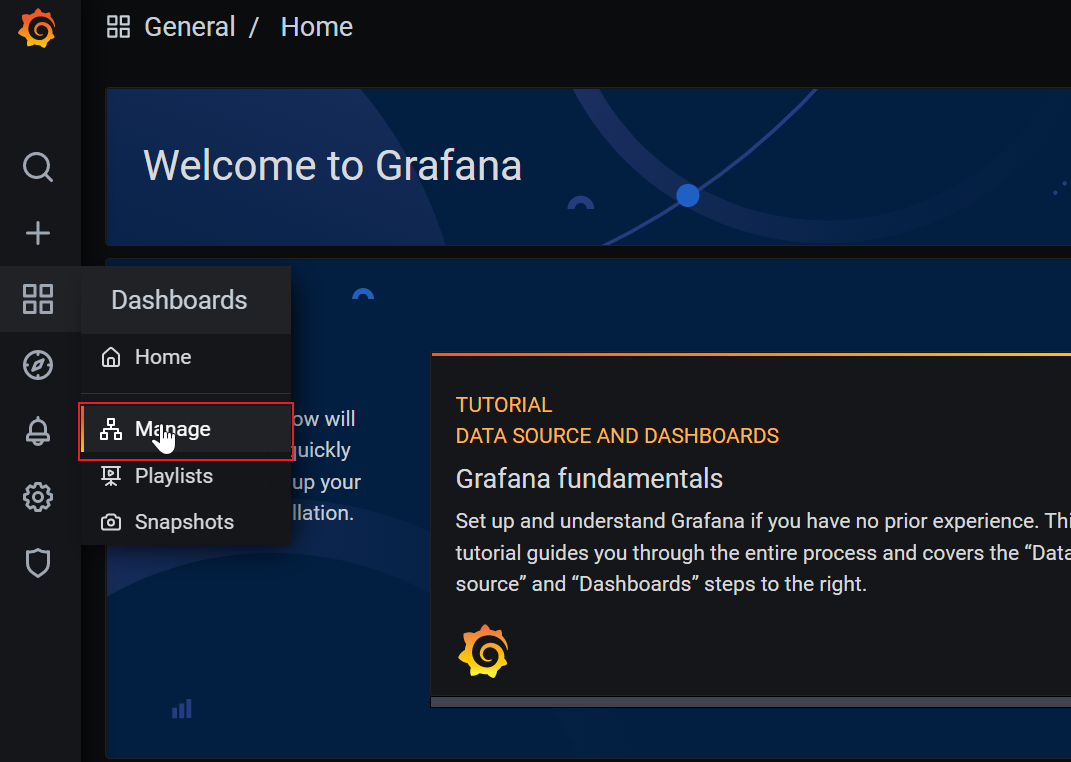

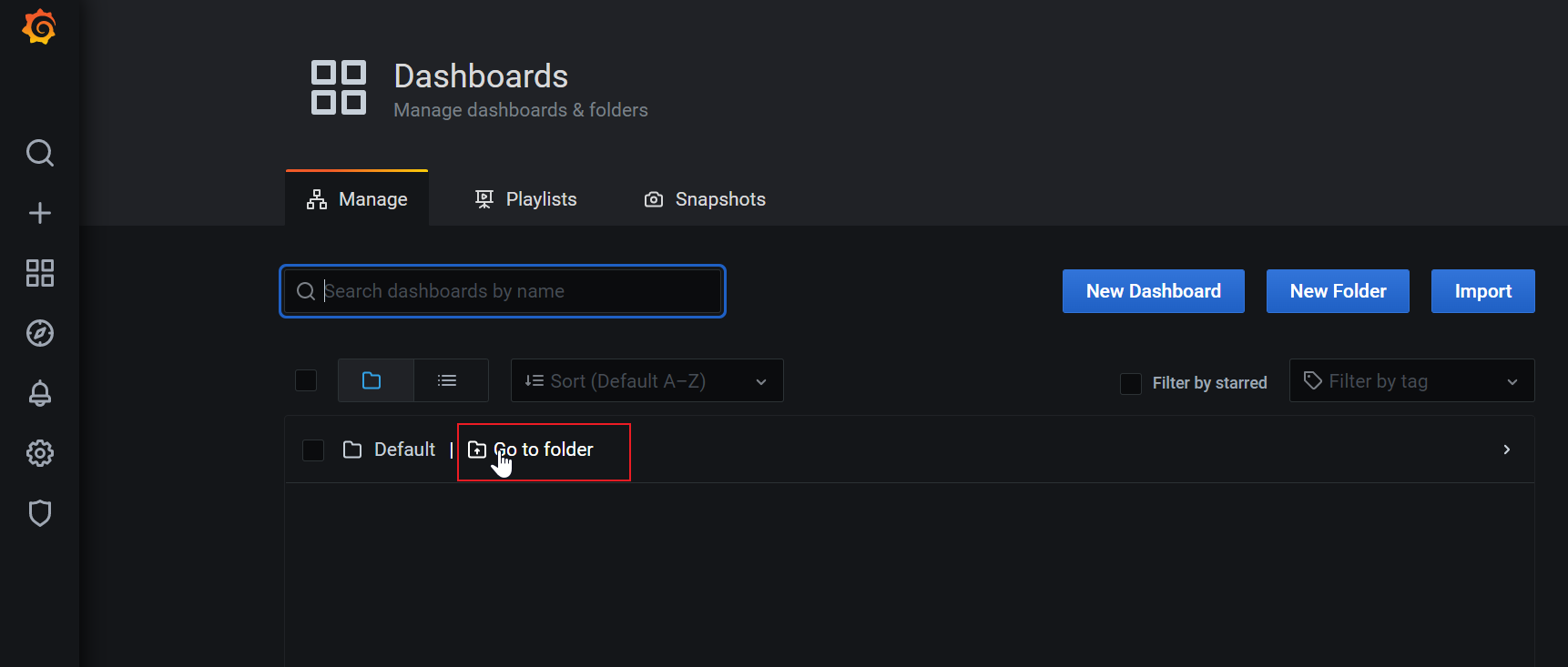

查看Node指标

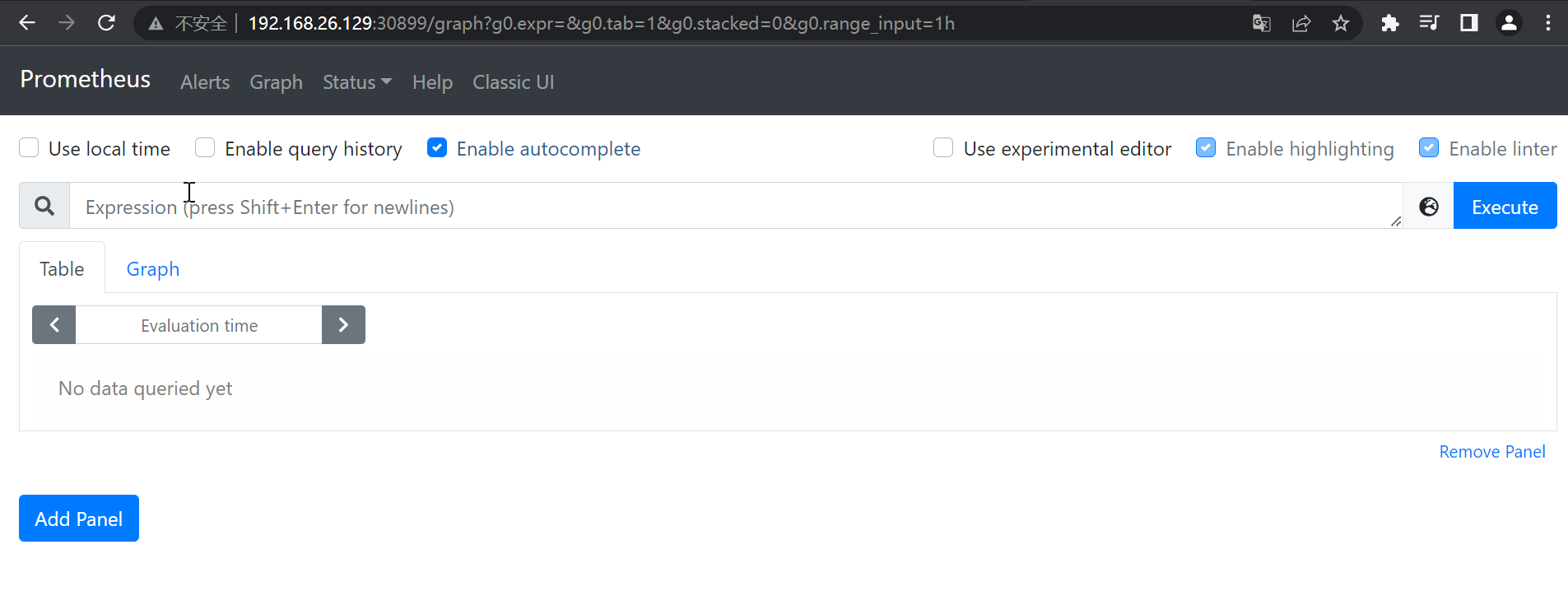

访问Prometheus的Web UI

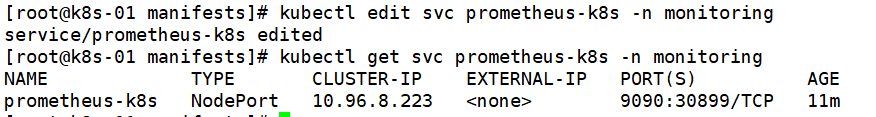

修改Prometheus Service为NodePort

kubectl edit svc prometheus-k8s -n monitoring

kubectl get svc prometheus-k8s -n monitoring

The Prometheus Web UI is then reachable at any node IP on the assigned NodePort (30899 in this example).

Common Prometheus Exporters

https://prometheus.io/docs/instrumenting/exporters/

| Category | Exporter |
| --- | --- |
| Databases | MySQL Exporter, Redis Exporter, MongoDB Exporter, MSSQL Exporter |
| Hardware | Apcupsd Exporter, IoT Edison Exporter, IPMI Exporter, Node Exporter |
| Message queues | Beanstalkd Exporter, Kafka Exporter, NSQ Exporter, RabbitMQ Exporter |
| Storage | Ceph Exporter, Gluster Exporter, HDFS Exporter, ScaleIO Exporter |
| HTTP services | Apache Exporter, HAProxy Exporter, Nginx Exporter |
| API services | AWS ECS Exporter, Docker Cloud Exporter, Docker Hub Exporter, GitHub Exporter |
| Logging | Fluentd Exporter, Grok Exporter |
| Monitoring systems | Collectd Exporter, Graphite Exporter, InfluxDB Exporter, Nagios Exporter, SNMP Exporter |
| Other | Blackbox Exporter, JIRA Exporter, Jenkins Exporter, Confluence Exporter |

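Creating service discovery with a ServiceMonitor

Reference: https://help.aliyun.com/document_detail/260895.html

Prometheus monitoring supports custom service discovery through the ServiceMonitor CRD. With a ServiceMonitor you define the namespaces in which targets are discovered and use matchLabels to select the Services to monitor.

Demo

You can download the demo project and follow the complete process of creating service discovery with a ServiceMonitor.

Deploy the demo project to a Kubernetes cluster

Create the Deployment from the following manifest: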

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: go-prometheus-app
      namespace: default
      labels:
        app: demo-prometheus
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: demo-prometheus
      template:
        metadata:
          labels:
            app: demo-prometheus
        spec:
          containers:
            - name: prometheus-application
              image: registry.cn-hangzhou.aliyuncs.com/0x00000/prometheus-application:v0.0.1
              ports:
                - containerPort: 2112

Create the Service from the following manifest:

    apiVersion: v1
    kind: Service
    metadata:
      name: go-prometheus-app-svc
      namespace: default
      labels:
        app: prometheus-discovery
    spec:
      selector:
        app: demo-prometheus
      ports:
        - protocol: TCP
          port: 80
          targetPort: 2112
          name: metrics

Create the ServiceMonitor

An example ServiceMonitor manifest:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: prometheus-application
      namespace: default
    spec:
      endpoints:
        - interval: 15s
          path: /metrics
          port: metrics
      namespaceSelector:
        matchNames:
          - default
      selector:
        matchLabels:
          app: prometheus-discovery

The sections of this YAML file have the following meaning:

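The name and namespace under metadata are the key metadata that identify the ServiceMonitor.

The endpoints under spec are the service endpoints, that is, the addresses from which Prometheus scrapes metrics. endpoints is an array, so several endpoints can be defined at once. Each endpoint in this example uses three fields:

- interval: how often Prometheus scrapes this endpoint, given as a duration. This example uses 15s.

- path: the path Prometheus scrapes. This example uses /metrics.

- port: the port to scrape, given as the port name set on the Service created in the previous step. This example uses metrics.

The namespaceSelector under spec limits where Services are discovered. It has two mutually exclusive fields:

- any: can only be set to true. When set, Services matching the selector are watched in all namespaces.

- matchNames: an array listing the namespaces to watch. For example, to watch only Services in the default and kube-system namespaces, set matchNames as follows: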

    namespaceSelector:
      matchNames:
        - default
        - kube-system

The selector under spec chooses which Services to monitor.

The Service used in this example carries the label app: prometheus-discovery, so the selector is set as follows:

    selector:
      matchLabels:
        app: prometheus-discovery

Apply the ServiceMonitor

cd prometheus-application

kubectl apply -f manifests/service-monitor.yaml

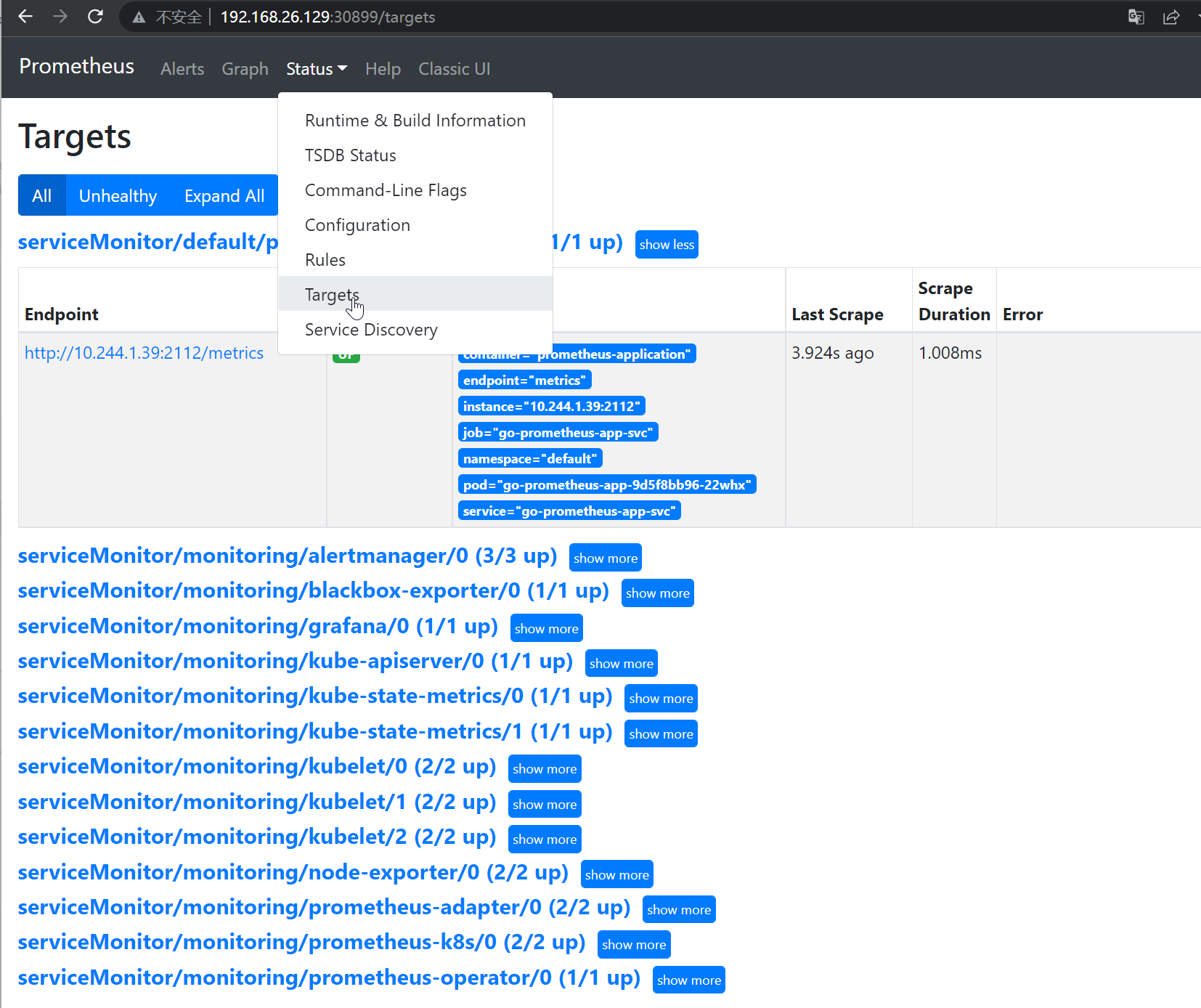

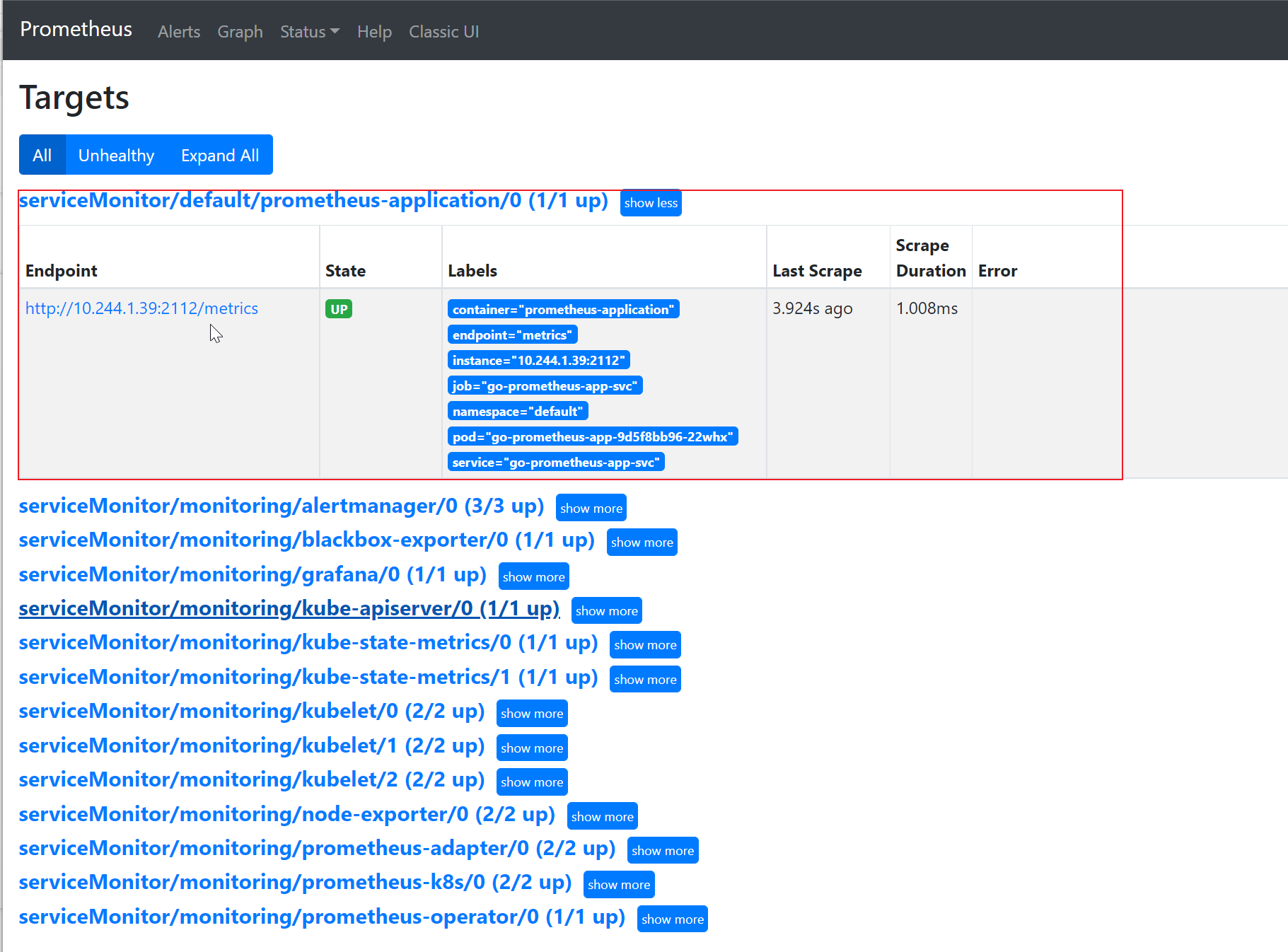

验证ServiceMonitor

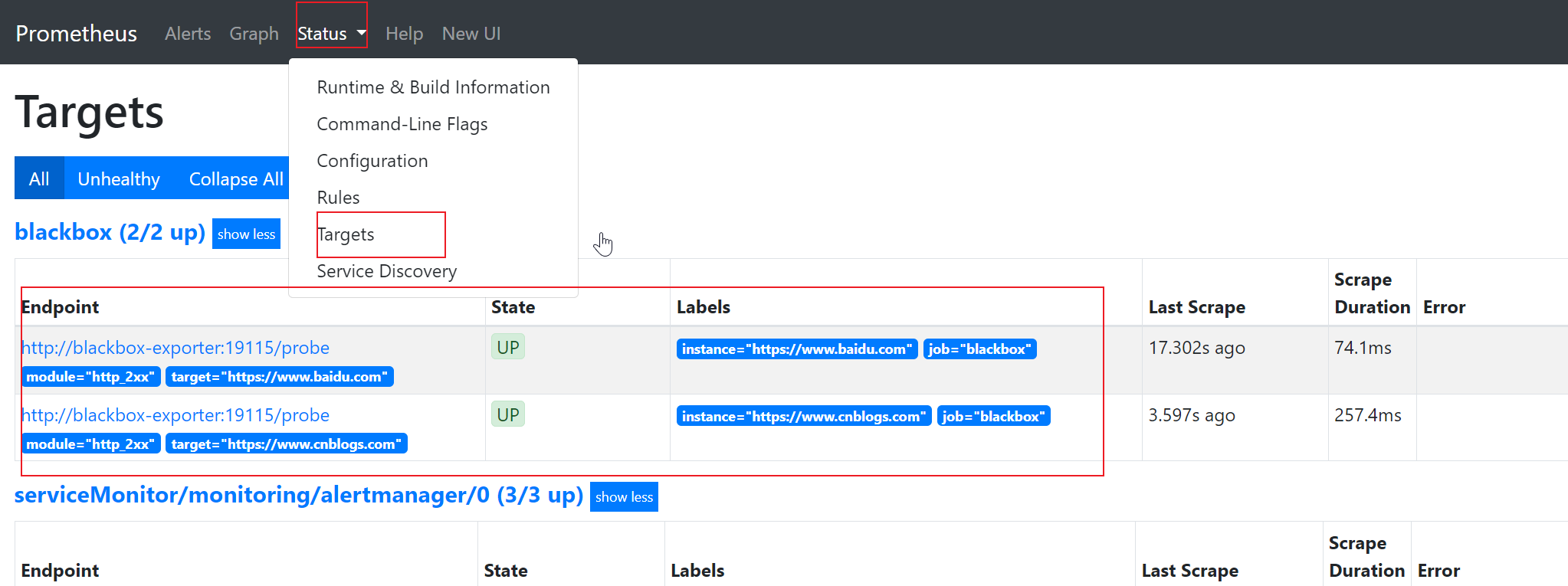

打开Prometheus Web UI,在Status下Targets页签,查看{namespace}/{serviceMonitorName}/x的Target。
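The selection rules described in this section (namespaceSelector plus selector.matchLabels) can be sketched as a small function. This is a simplified model for illustration, not the operator's implementation: the dictionaries flatten the Kubernetes objects, `selects` is a hypothetical helper, and the fallback to the ServiceMonitor's own namespace when namespaceSelector is empty is stated here as an assumption about the operator's default.

```python
def selects(service_monitor, service):
    """Return True if the ServiceMonitor would pick up the given Service."""
    spec = service_monitor["spec"]
    ns_selector = spec.get("namespaceSelector", {})

    # any: true matches every namespace; otherwise the Service namespace must
    # be listed in matchNames. With neither set, this sketch assumes only the
    # ServiceMonitor's own namespace is considered.
    if ns_selector.get("any"):
        namespace_ok = True
    elif ns_selector.get("matchNames"):
        namespace_ok = service["namespace"] in ns_selector["matchNames"]
    else:
        namespace_ok = service["namespace"] == service_monitor["namespace"]

    # matchLabels: every listed label must be present on the Service.
    wanted = spec.get("selector", {}).get("matchLabels", {})
    labels_ok = all(service["labels"].get(k) == v for k, v in wanted.items())
    return namespace_ok and labels_ok


monitor = {
    "namespace": "default",
    "spec": {
        "namespaceSelector": {"matchNames": ["default"]},
        "selector": {"matchLabels": {"app": "prometheus-discovery"}},
    },
}
demo_service = {"namespace": "default",
                "labels": {"app": "prometheus-discovery"}}
other_namespace = {"namespace": "kube-system",
                   "labels": {"app": "prometheus-discovery"}}

print(selects(monitor, demo_service))     # Service from this walkthrough
print(selects(monitor, other_namespace))  # same label, namespace not listed
```

Note that the selector matches the labels of the Service (app: prometheus-discovery), not the labels of the Pods behind it (app: demo-prometheus); if no target shows up, a mismatch here is a likely cause.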

Monitoring non-cloud-native applications with Exporters

Deploy a test workload

First, deploy MySQL to the Kubernetes cluster:

    kubectl create deploy mysql --image=mysql:5.7.40

    # Set the root password
    kubectl set env deploy/mysql MYSQL_ROOT_PASSWORD=mysql

    # Check the Pod status
    kubectl get pod -l app=mysql

    # Create a Service
    kubectl expose deploy mysql --port 3306

    # Check the Service
    kubectl get svc -l app=mysql

    # Log in to MySQL (replace the Pod name with the one in your cluster)
    kubectl exec -ti mysql-ffc49bdb9-vvbss -- bash
    mysql -uroot -pmysql

    # Inside the mysql client, create a user for the exporter
    CREATE USER 'exporter'@'%' IDENTIFIED BY 'exporter' WITH MAX_USER_CONNECTIONS 3;
    GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'%';

Configure mysqld-exporter to collect MySQL metrics

mysqld-exporter.yaml

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mysqld-exporter
      namespace: monitoring
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mysqld-exporter
      template:
        metadata:
          labels:
            app: mysqld-exporter
        spec:
          containers:
            - name: mysqld-exporter
              image: prom/mysqld-exporter
              env:
                - name: DATA_SOURCE_NAME
                  value: "exporter:exporter@(mysql.default:3306)/"
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 9104
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysqld-exporter
      namespace: monitoring
      labels:
        app: mysqld-exporter
    spec:
      type: ClusterIP
      selector:
        app: mysqld-exporter
      ports:
        - name: api
          port: 9104
          protocol: TCP

Change the DATA_SOURCE_NAME value exporter:exporter@(mysql.default:3306)/ to match your own user, password, and MySQL address. Recent mysqld-exporter releases reportedly no longer read DATA_SOURCE_NAME (from 0.15.0 on), so pin an earlier image tag if the exporter fails to connect with this manifest.

kubectl apply -f mysqld-exporter.yaml

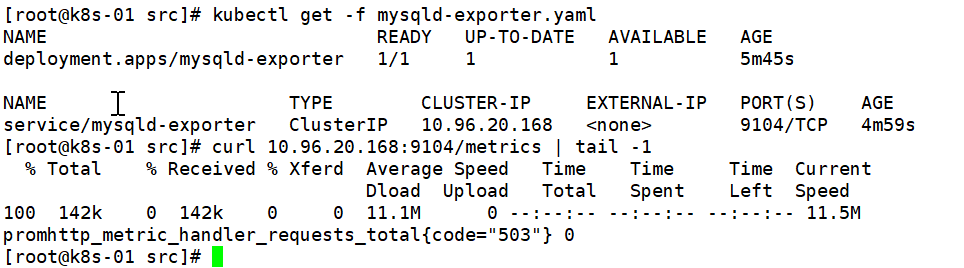

kubectl get -f mysqld-exporter.yaml

Use the Service's cluster IP to check that metrics are being served (10.96.20.168 is the address from this example):

curl 10.96.20.168:9104/metrics | tail -1

配置ServiceMonitor和Grafana

- 配置ServiceMonitor

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: mysqld-exporter

namespace: monitoring

labels:

app: mysqld-exporter

namespace: monitoring

spec:

jobLabel: app

endpoints:

- port: api

interval: 30s

scheme: http

selector:

matchLabels:

app: mysqld-exporter

namespaceSelector:

matchNames:

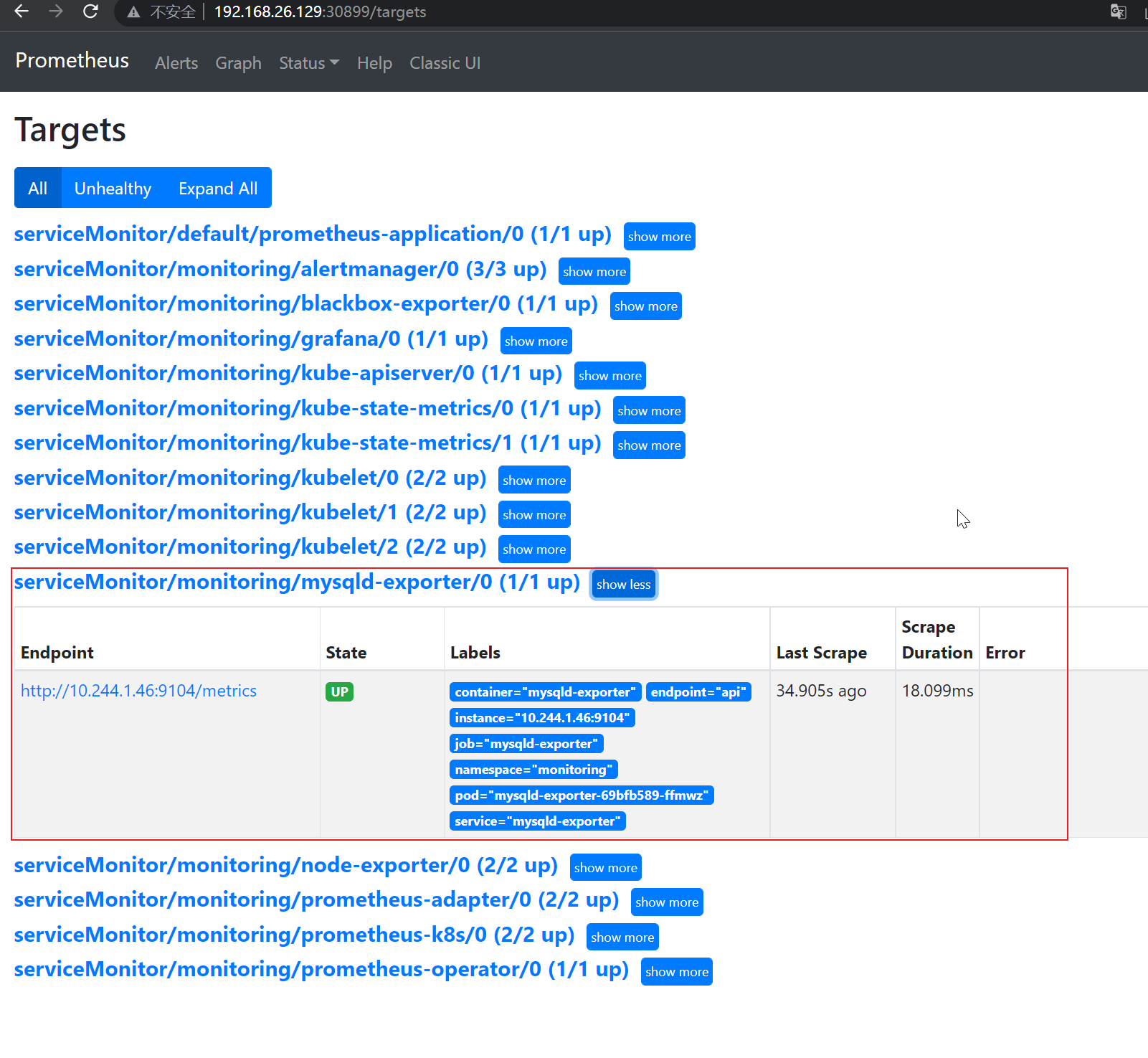

- monitoring在Prometheus Web UI查看该监控

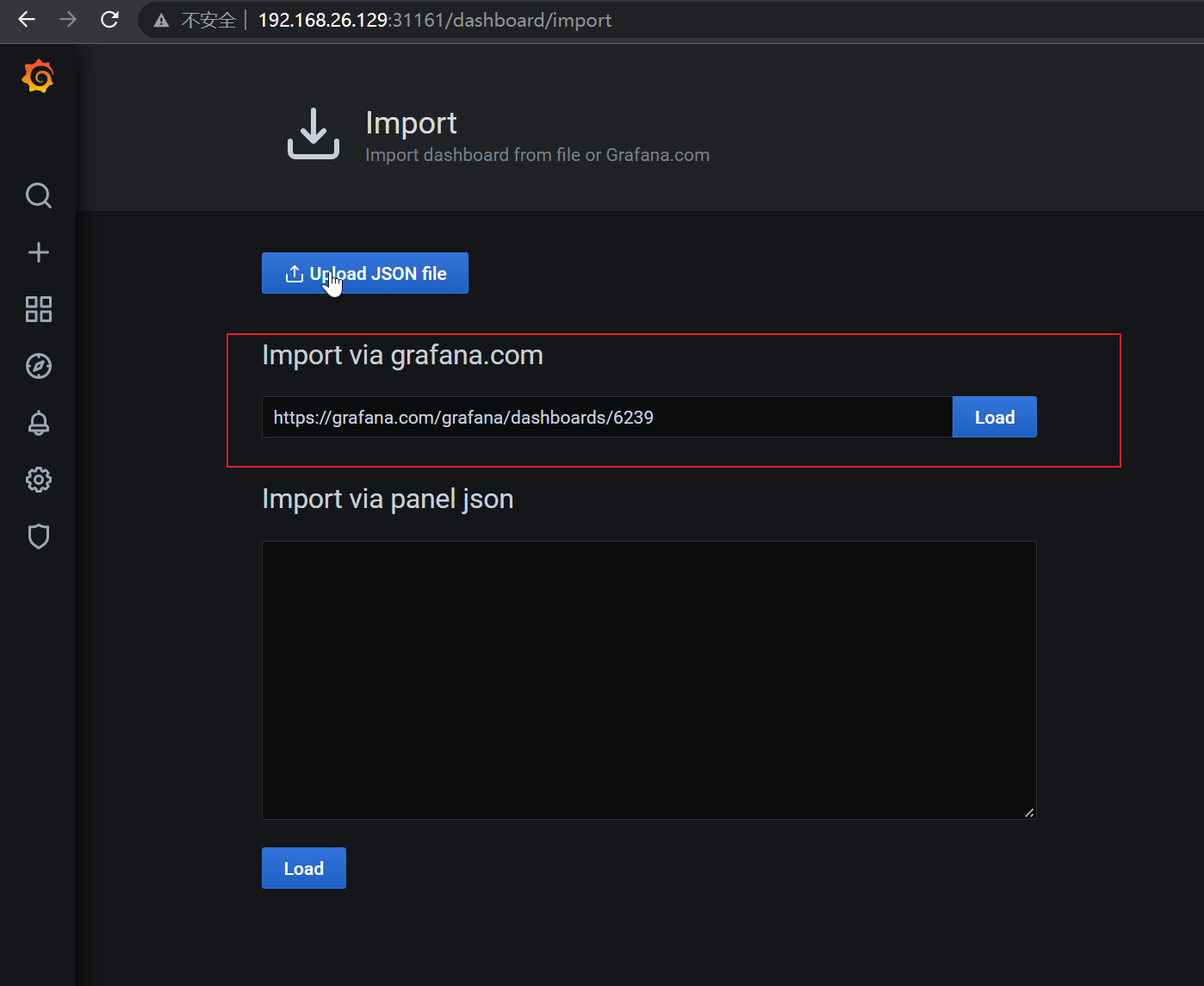

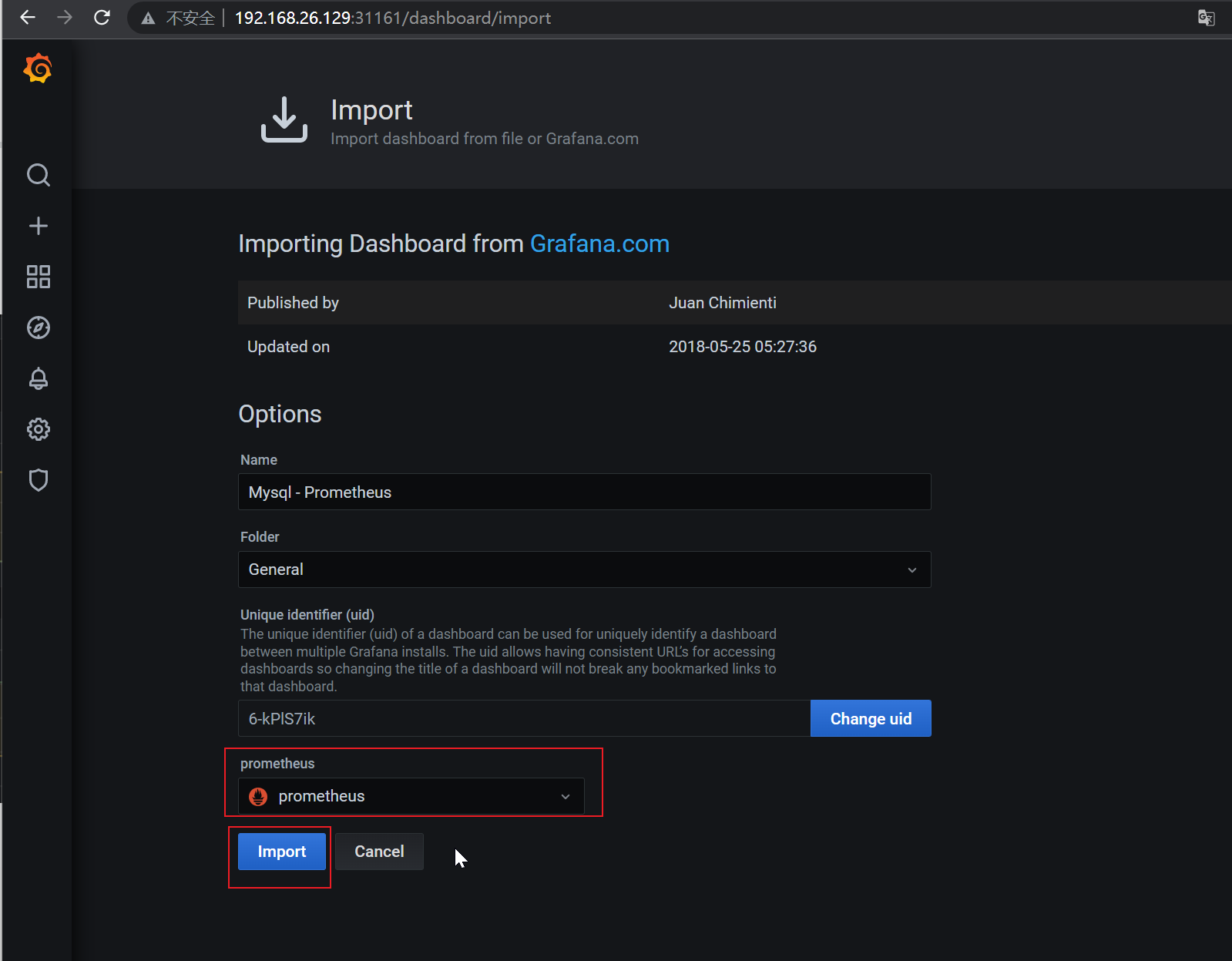

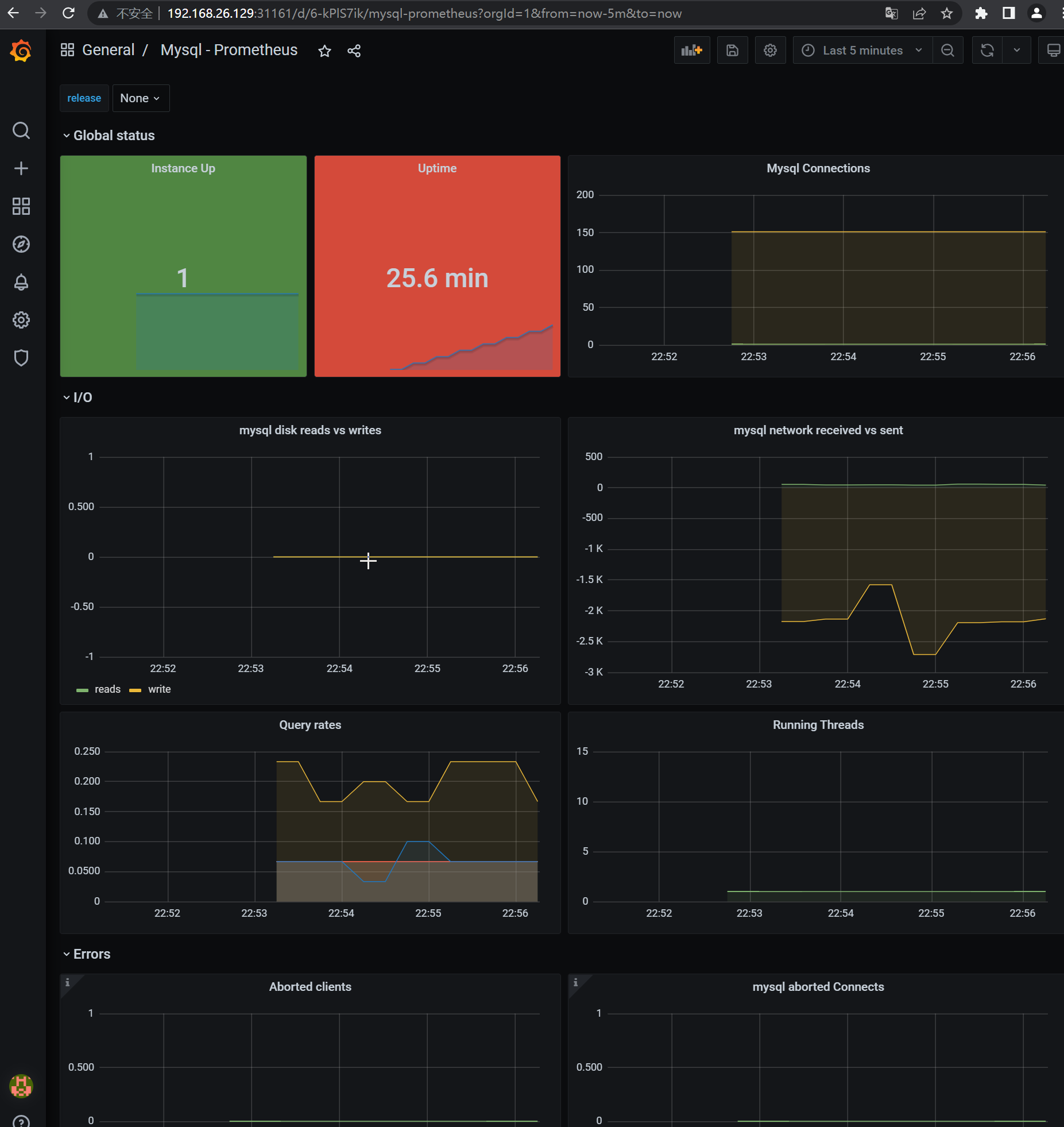

- Configure the Grafana dashboard

Import the dashboard from https://grafana.com/grafana/dashboards/6239

Blackbox Exporter

Official documentation: https://github.com/prometheus/blackbox_exporter

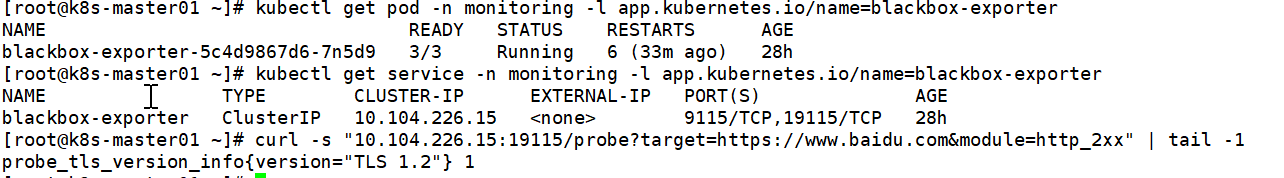

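Recent kube-prometheus releases already deploy the Blackbox Exporter. Check with:

kubectl get pod -n monitoring -l app.kubernetes.io/name=blackbox-exporter

Access the Blackbox Exporter through its Service and pass parameters in the query string:

kubectl get service -n monitoring -l app.kubernetes.io/name=blackbox-exporter

curl -s "10.104.226.15:19115/probe?target=https://www.baidu.com&module=http_2xx" | tail -1

Here probe is the endpoint path, target is the address to check, and module selects which module performs the probe. The available modules are defined in the exporter's ConfigMap:

kubectl get configmap -n monitoring blackbox-exporter-configuration -oyaml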
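The probe request above is an ordinary HTTP GET with two query parameters, so it can be assembled programmatically. The sketch below builds the same URL with proper escaping and reads the `probe_success` metric out of a response body. The helper names and the sample response text are illustrative assumptions; the exporter address is the one from this example.

```python
from urllib.parse import urlencode


def probe_url(exporter, target, module="http_2xx"):
    """Build the /probe URL for one target and one module."""
    query = urlencode({"target": target, "module": module})
    return f"http://{exporter}/probe?{query}"


def probe_succeeded(metrics_text):
    """Return True if the response reports probe_success 1."""
    for line in metrics_text.splitlines():
        if line.startswith("probe_success "):
            return float(line.split()[1]) == 1.0
    return False


url = probe_url("10.104.226.15:19115", "https://www.baidu.com")
print(url)

# Hypothetical excerpt of a probe response, for illustration only.
sample_response = "probe_http_status_code 200\nprobe_success 1\n"
print(probe_succeeded(sample_response))
```

Escaping the target matters once it contains its own query string; unescaped, an `&` inside the target would be read as the start of another parameter of the probe request.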

For more ways to use it, see the Prometheus static configuration section below.

Prometheus静态配置

首先创建一个空文件prometheus-additional.yaml, 然后通过该文件创建一个Secret,那么这个Secret既可以作为Promethus的静态配置:

touch prometheus-additional.yaml

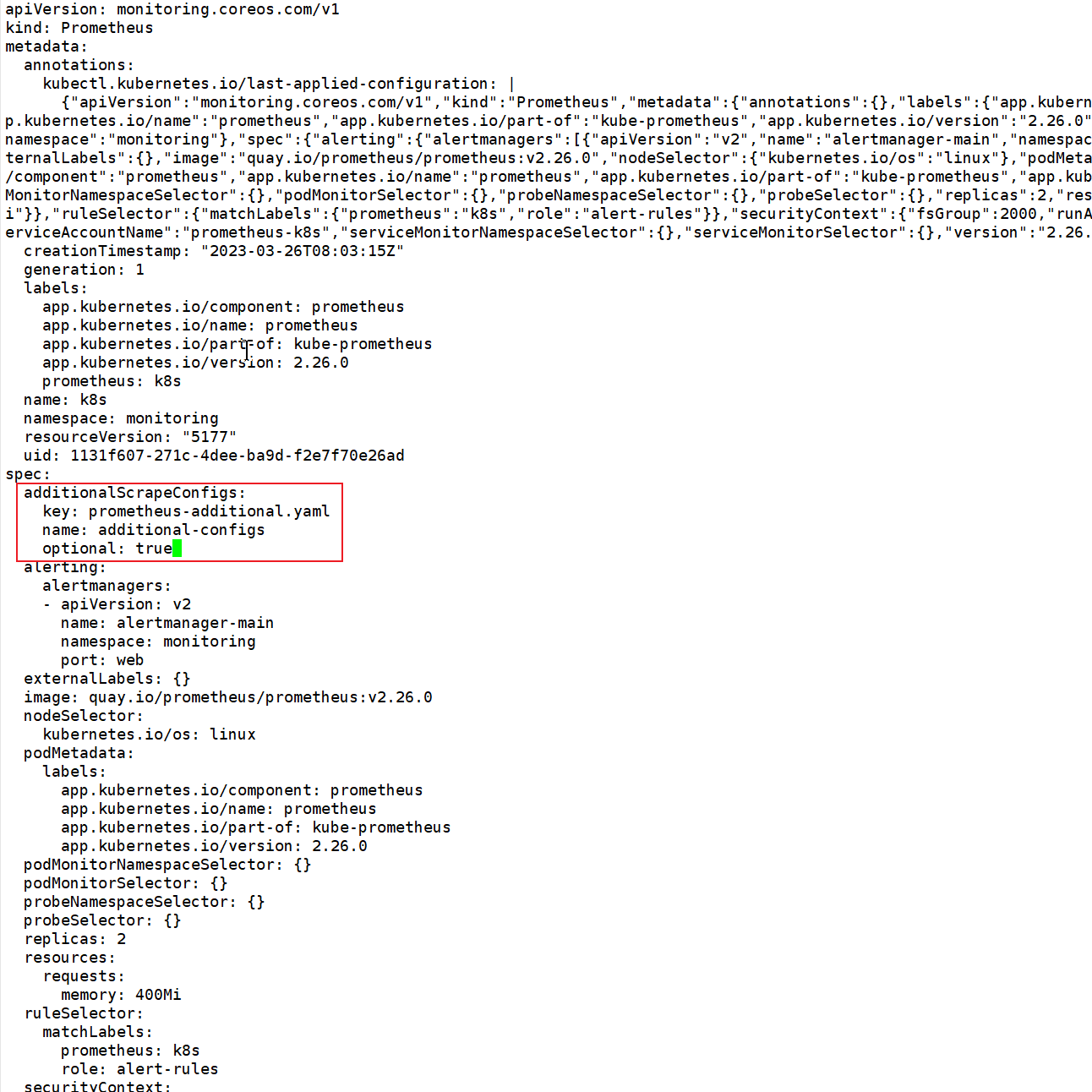

kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring创建完成Secret后,需要编辑Prometheus配置:

kubectl get prometheus -n monitoring

kubectl edit prometheus k8s -n monitoring

Add the following under spec:

    additionalScrapeConfigs:
      key: prometheus-additional.yaml
      name: additional-configs
      optional: true

Save the change and it takes effect.

Blackbox Exporter monitoring example

Edit prometheus-additional.yaml and add the following static configuration:

    - job_name: 'blackbox'
      metrics_path: /probe
      params:
        module: [http_2xx]
      static_configs:
        - targets:
            - https://www.baidu.com
            - https://www.cnblogs.com
      relabel_configs:
        - source_labels: [__address__]
          target_label: __param_target
        - source_labels: [__param_target]
          target_label: instance
        - target_label: __address__
          replacement: blackbox-exporter:19115

- targets: the addresses to probe; change them to your own targets

- params: which module performs the probe

- replacement: the address of the Blackbox Exporter
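The three relabel_configs steps are easier to follow when traced on a single target. The sketch below replays them on a plain dictionary of labels and then shows the URL Prometheus ends up scraping. It models only these three rules, not Prometheus's general relabeling engine, and the function names are hypothetical.

```python
from urllib.parse import urlencode


def apply_blackbox_relabeling(target,
                              exporter_address="blackbox-exporter:19115"):
    """Replay the three relabel rules for one configured target."""
    # Before relabeling, __address__ holds the configured target.
    labels = {"__address__": target}
    # Rule 1: copy the target into the ?target= URL parameter.
    labels["__param_target"] = labels["__address__"]
    # Rule 2: keep the probed address visible as the instance label.
    labels["instance"] = labels["__param_target"]
    # Rule 3: send the actual scrape to the Blackbox Exporter.
    labels["__address__"] = exporter_address
    return labels


def scrape_url(labels, metrics_path="/probe", module="http_2xx"):
    """Show the request that results from the relabeled labels."""
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return f"http://{labels['__address__']}{metrics_path}?{query}"


relabeled = apply_blackbox_relabeling("https://www.baidu.com")
print(relabeled)
print(scrape_url(relabeled))
```

The order matters: the target has to be copied out of `__address__` before the last rule overwrites it with the exporter address.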

Update the configuration

kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml --dry-run=client -oyaml | kubectl replace -f - -n monitoring

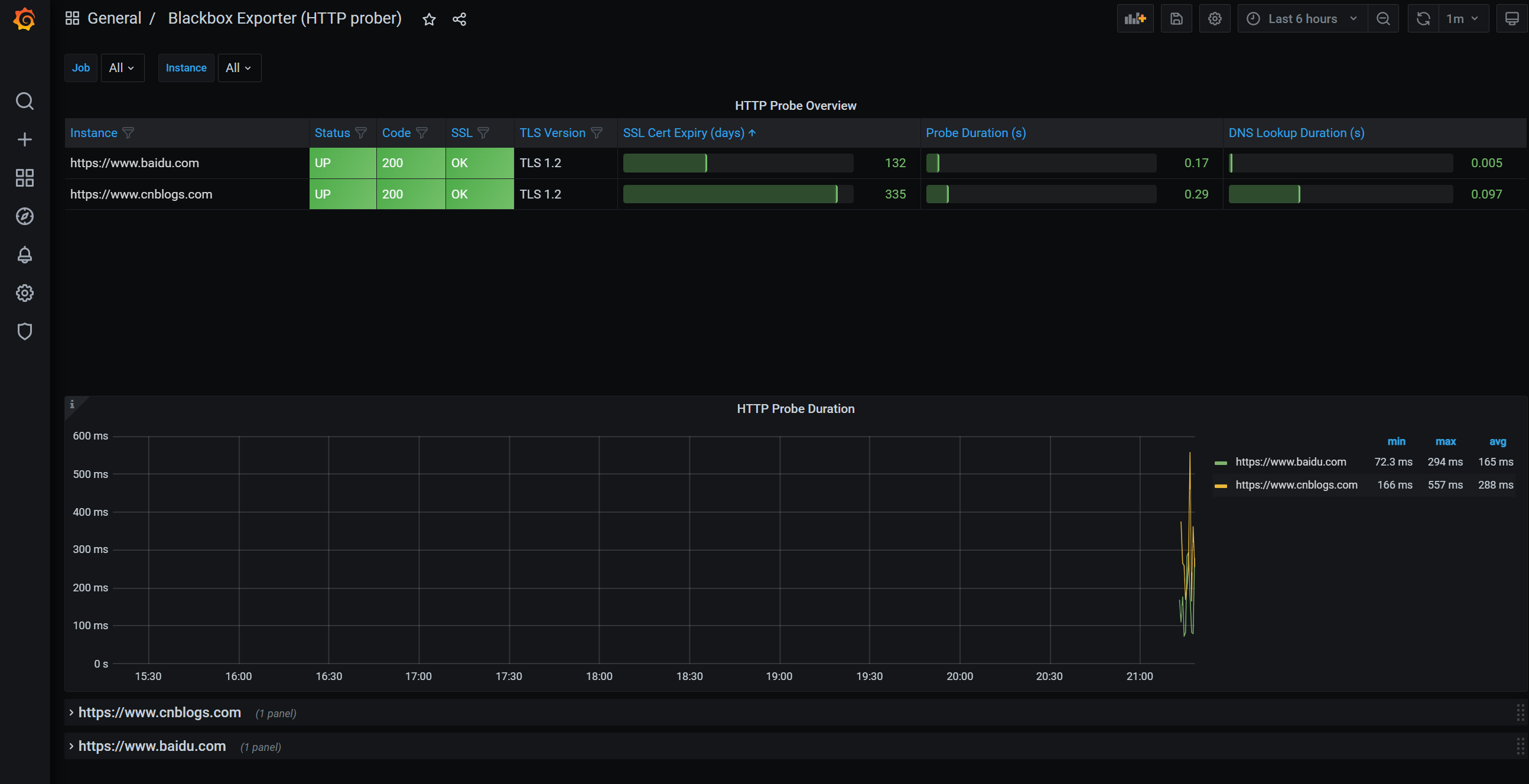

- Add a Grafana dashboard

https://grafana.com/grafana/dashboards/13659

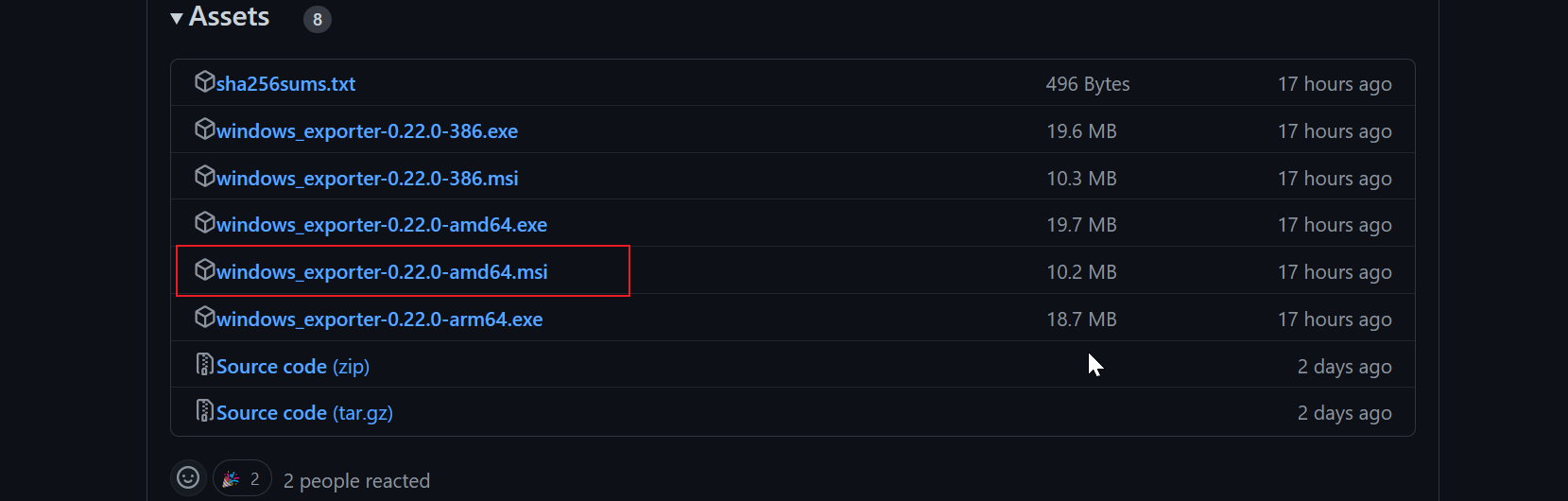

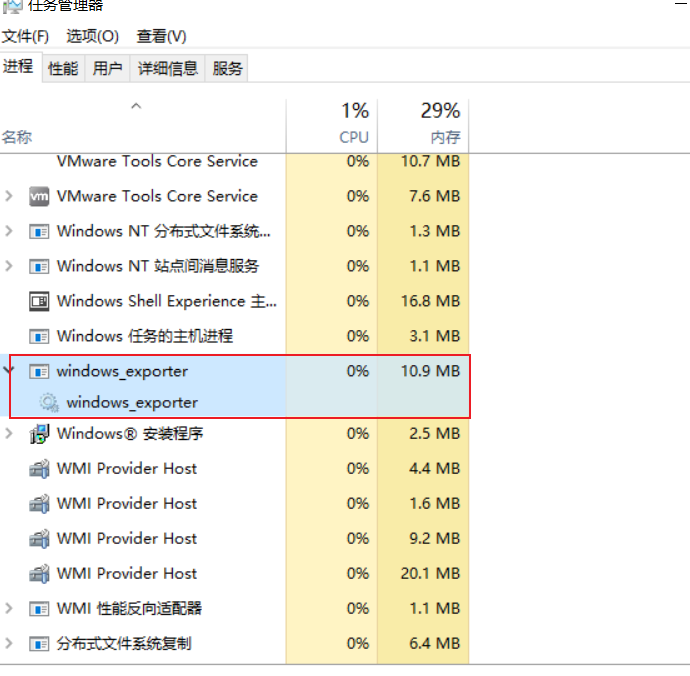

Monitoring an external Windows host with Prometheus

Windows Exporter: https://github.com/prometheus-community/windows_exporter

Add the following to the static configuration file:

    - job_name: 'WindowsServer2016'
      static_configs:
        - targets:
            - "192.168.216.128:9182"
          labels:
            server_type: "windows"
      relabel_configs:
        - source_labels: [__address__]
          target_label: instance

Import the Grafana dashboard template: https://grafana.com/grafana/dashboards/12566

Alertmanager alerting

https://prometheus.io/docs/alerting/latest/configuration/

https://github.com/prometheus/alertmanager/blob/main/doc/examples/simple.yml

https://github.com/dotbalo/k8s/blob/master/prometheus-operator/alertmanager.yaml

Alertmanager configuration file

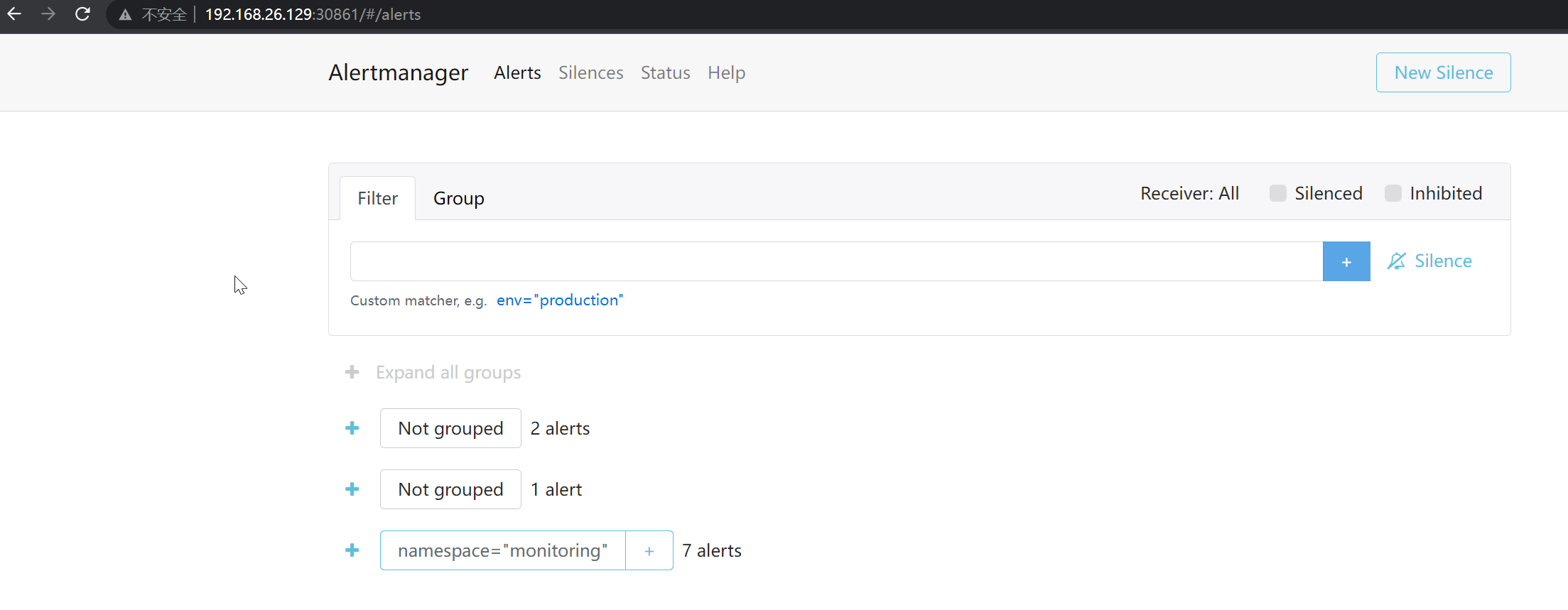

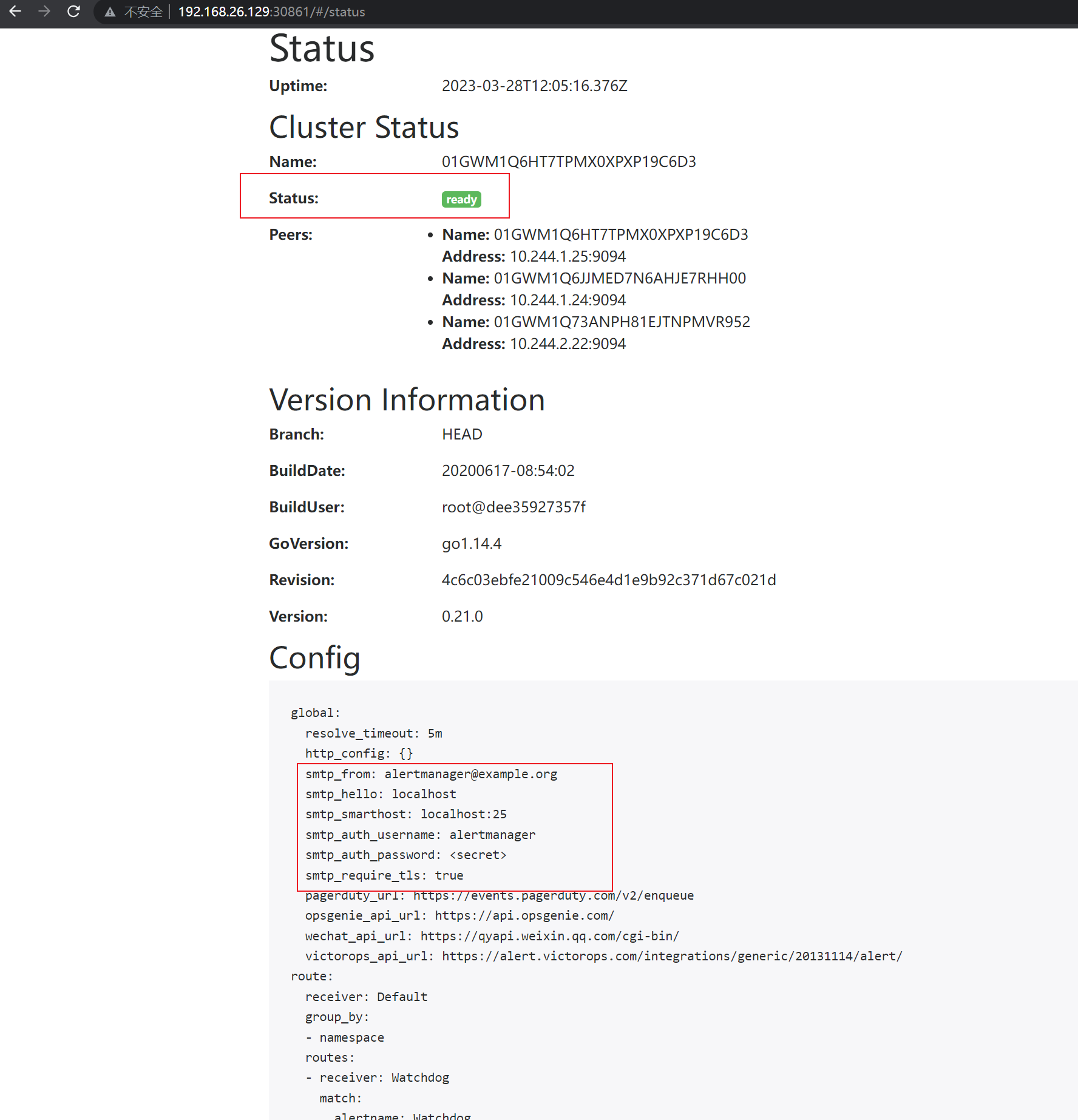

- Alertmanager dashboard

Expose the Service through a NodePort:

kubectl edit svc alertmanager-main -n monitoring

    global:
      # SMTP server and sender used for email notifications
      smtp_smarthost: 'localhost:25'
      smtp_from: 'alertmanager@example.org'
      smtp_auth_username: 'alertmanager'
      smtp_auth_password: 'password'

    # Notification templates
    templates:
      - '/etc/alertmanager/template/*.tmpl'

    # Root route
    route:
      # Labels by which incoming alerts are grouped. For example, alerts with
      # cluster=A and alertname=LatencyHigh are batched into one group.
      group_by: ['alertname', 'cluster', 'service']
      # When a new group of alerts appears, wait group_wait before sending the
      # first notification, so that alerts firing in quick succession are
      # merged into a single notification.
      group_wait: 30s
      # After the first notification for a group, wait group_interval before
      # notifying about new alerts added to that group.
      group_interval: 5m
      # If a notification was sent successfully and the alert is still not
      # resolved after repeat_interval, send the notification again.
      repeat_interval: 12h
      # Default receiver: alerts that match no child route are sent here.
      receiver: team-X-mails
      # Child routes inherit the parent's settings and can override them.
      routes:
        - matchers:
            - service=~"foo1|foo2|baz"
          receiver: team-X-mails
          routes:
            - matchers:
                - severity="critical"
              receiver: team-X-pager
        - matchers:
            - service="files"
          receiver: team-Y-mails
          routes:
            - matchers:
                - severity="critical"
              receiver: team-Y-pager
        - matchers:
            - service="database"
          receiver: team-DB-pager
          group_by: [alertname, cluster, database]
          routes:
            - matchers:
                - owner="team-X"
              receiver: team-X-pager
              continue: true
            - matchers:
                - owner="team-Y"
              receiver: team-Y-pager

    # Inhibition rule: mute warning alerts while a critical alert with the
    # same alertname, cluster and service is firing.
    inhibit_rules:
      - source_matchers: [severity="critical"]
        target_matchers: [severity="warning"]
        equal: [alertname, cluster, service]

    # Receivers
    receivers:
      - name: 'team-X-mails'
        email_configs:
          - to: 'team-X+alerts@example.org'
      - name: 'team-X-pager'
        email_configs:
          - to: 'team-X+alerts-critical@example.org'
        pagerduty_configs:
          - service_key: <team-X-key>
      - name: 'team-Y-mails'
        email_configs:
          - to: 'team-Y+alerts@example.org'
      - name: 'team-Y-pager'
        pagerduty_configs:
          - service_key: <team-Y-key>
      - name: 'team-DB-pager'
        pagerduty_configs:
          - service_key: <team-DB-key>
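How an alert travels through the routing tree can be sketched in a few lines. The walk below is a simplified model of the documented behaviour: child routes are tried in order, the first matching child wins unless it sets `continue: true`, and a route handles the alert itself when none of its children match. Only the `=` and `=~` operators are modelled, the tree is a trimmed copy of the configuration above with the first matcher written as a regular expression, and the tuple-based matcher format is an assumption of this sketch.

```python
import re


def matches(matchers, labels):
    """Check (label, operator, value) matchers against an alert's labels."""
    for name, op, value in matchers:
        actual = labels.get(name, "")
        if op == "=":
            ok = actual == value
        elif op == "=~":
            # Regex matchers are anchored: the whole value must match.
            ok = re.fullmatch(value, actual) is not None
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


def resolve(route, labels):
    """Return the receivers an alert reaches within this route subtree."""
    receivers = []
    for child in route.get("routes", []):
        if matches(child["matchers"], labels):
            receivers.extend(resolve(child, labels))
            # Stop at the first matching child unless it sets continue: true.
            if not child.get("continue", False):
                break
    # No child matched, so the current route handles the alert itself.
    return receivers or [route["receiver"]]


ROOT = {
    "receiver": "team-X-mails",
    "routes": [
        {"matchers": [("service", "=~", "foo1|foo2|baz")],
         "receiver": "team-X-mails",
         "routes": [{"matchers": [("severity", "=", "critical")],
                     "receiver": "team-X-pager"}]},
        {"matchers": [("service", "=", "database")],
         "receiver": "team-DB-pager",
         "routes": [
             {"matchers": [("owner", "=", "team-X")],
              "receiver": "team-X-pager", "continue": True},
             {"matchers": [("owner", "=", "team-Y")],
              "receiver": "team-Y-pager"},
         ]},
    ],
}

print(resolve(ROOT, {"service": "foo1", "severity": "critical"}))
print(resolve(ROOT, {"service": "database", "owner": "team-Z"}))
```

An alert with labels that match no child route at all falls through to the root receiver, which is why the root route must always name one.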

Alertmanager email notifications

Official documentation: https://prometheus.io/docs/alerting/latest/configuration/#email_config

Edit kube-prometheus/manifests/alertmanager-secret.yaml:

    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        alertmanager: main
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.21.0
      name: alertmanager-main
      namespace: monitoring
    stringData:
      alertmanager.yaml: |-
        "global":
          "resolve_timeout": "5m"
          # SMTP server and sender used for email notifications
          smtp_smarthost: 'localhost:25'
          smtp_from: 'alertmanager@example.org'
          smtp_auth_username: 'alertmanager'
          smtp_auth_password: 'password'
        "inhibit_rules":
        - "equal":
          - "namespace"
          - "alertname"
          "source_match":
            "severity": "critical"
          "target_match_re":
            "severity": "warning|info"
        - "equal":
          - "namespace"
          - "alertname"
          "source_match":
            "severity": "warning"
          "target_match_re":
            "severity": "info"
        "receivers":
        - "name": "Default"
          # email_configs: notify by email
          email_configs:
          # to: recipient address
          - to: "team-X+alerts@example.org"
            # send_resolved: also notify when the alert is resolved
            send_resolved: true
        - "name": "Watchdog"
        - "name": "Critical"
        "route":
          "group_by":
          - "namespace"
          "group_interval": "5m"
          "group_wait": "30s"
          "receiver": "Default"
          "repeat_interval": "12h"
          "routes":
          - "match":
              "alertname": "Watchdog"
            "receiver": "Watchdog"
          - "match":
              "severity": "critical"
            "receiver": "Critical"
    type: Opaque

- Load the configuration

kubectl replace -f alertmanager-secret.yaml

kubectl get svc -n monitoring alertmanager-main
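The two inhibit_rules in this Secret decide which alerts are muted while a more severe one is firing. The sketch below models that check: an alert is inhibited when it matches a rule's target side and some other firing alert matches the source side with identical values for every label in `equal`. It is a simplified illustration with hypothetical alert data, not Alertmanager's implementation.

```python
import re

# The two rules from the Secret above, written as Python data.
RULES = [
    {"source_match": {"severity": "critical"},
     "target_match_re": {"severity": "warning|info"},
     "equal": ["namespace", "alertname"]},
    {"source_match": {"severity": "warning"},
     "target_match_re": {"severity": "info"},
     "equal": ["namespace", "alertname"]},
]


def is_inhibited(alert, firing, rules=RULES):
    """Return True if another firing alert inhibits the given alert."""
    for rule in rules:
        is_target = all(
            re.fullmatch(pattern, alert.get(label, "")) is not None
            for label, pattern in rule["target_match_re"].items()
        )
        if not is_target:
            continue
        for source in firing:
            if source is alert:
                continue
            is_source = all(source.get(label) == value
                            for label, value in rule["source_match"].items())
            same_scope = all(source.get(label, "") == alert.get(label, "")
                             for label in rule["equal"])
            if is_source and same_scope:
                return True
    return False


# Hypothetical alerts, for illustration only.
critical = {"alertname": "DiskFull", "namespace": "prod",
            "severity": "critical"}
warning = {"alertname": "DiskFull", "namespace": "prod",
           "severity": "warning"}
elsewhere = {"alertname": "DiskFull", "namespace": "dev",
             "severity": "warning"}
firing = [critical, warning, elsewhere]

for alert in firing:
    print(alert["namespace"], alert["severity"], is_inhibited(alert, firing))
```

The `equal` list is what keeps the rule narrow: a critical alert in one namespace does not silence warnings in another.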

Alertmanager WeChat Work notifications

Official documentation: https://prometheus.io/docs/alerting/latest/configuration/#wechat_config

Add the WeChat Work settings to the same alertmanager-secret.yaml:

    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        alertmanager: main
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.21.0
      name: alertmanager-main
      namespace: monitoring
    stringData:
      alertmanager.yaml: |-
        "global":
          "resolve_timeout": "5m"
          smtp_smarthost: 'localhost:25'
          smtp_from: 'alertmanager@example.org'
          smtp_auth_username: 'alertmanager'
          smtp_auth_password: 'password'
          # wechat_api_url: WeChat Work API endpoint
          wechat_api_url: "https://qyapi.weixin.qq.com/cgi-bin/"
          # wechat_api_corp_id: corporate ID
          wechat_api_corp_id: "xxxxxx"
        "inhibit_rules":
        - "equal":
          - "namespace"
          - "alertname"
          "source_match":
            "severity": "critical"
          "target_match_re":
            "severity": "warning|info"
        - "equal":
          - "namespace"
          - "alertname"
          "source_match":
            "severity": "warning"
          "target_match_re":
            "severity": "info"
        "receivers":
        - "name": "Default"
          email_configs:
          - to: "team-X+alerts@example.org"
            send_resolved: true
        - "name": "Watchdog"
          # WeChat Work notification settings
          wechat_configs:
          - send_resolved: true
            # Department/group ID
            to_party: 1
            to_user: '@all'
            agent_id: 100001
            api_secret: "secretxxxxxxxx"
        - "name": "Critical"
        "route":
          "group_by":
          - "namespace"
          "group_interval": "5m"
          "group_wait": "30s"
          "receiver": "Default"
          "repeat_interval": "12h"
          "routes":
          - "match":
              "alertname": "Watchdog"
            "receiver": "Watchdog"
          - "match":
              "severity": "critical"
            "receiver": "Critical"
    type: Opaque

- Load the configuration

kubectl replace -f alertmanager-secret.yaml

kubectl get svc -n monitoring alertmanager-main

Custom alert templates

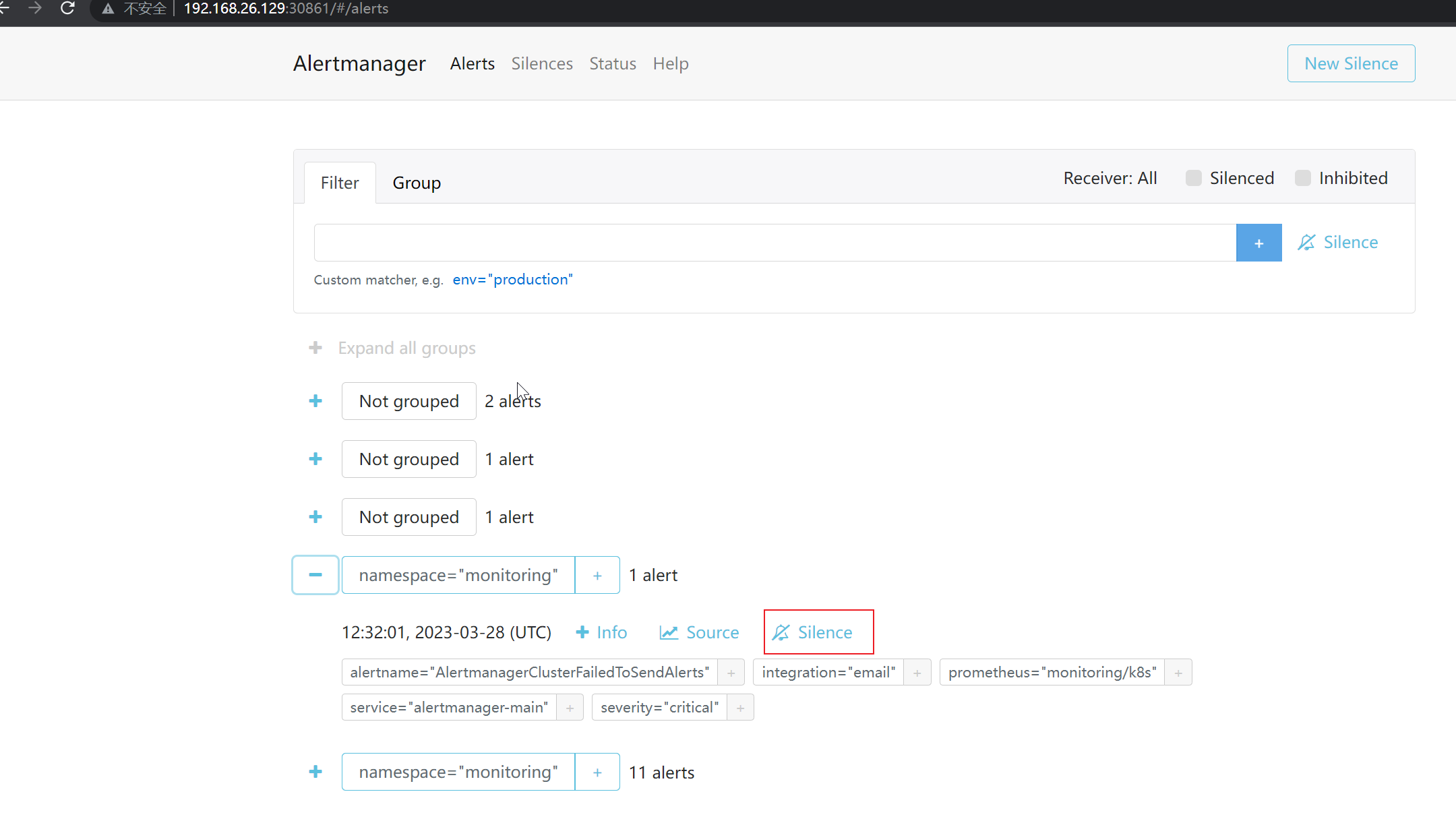

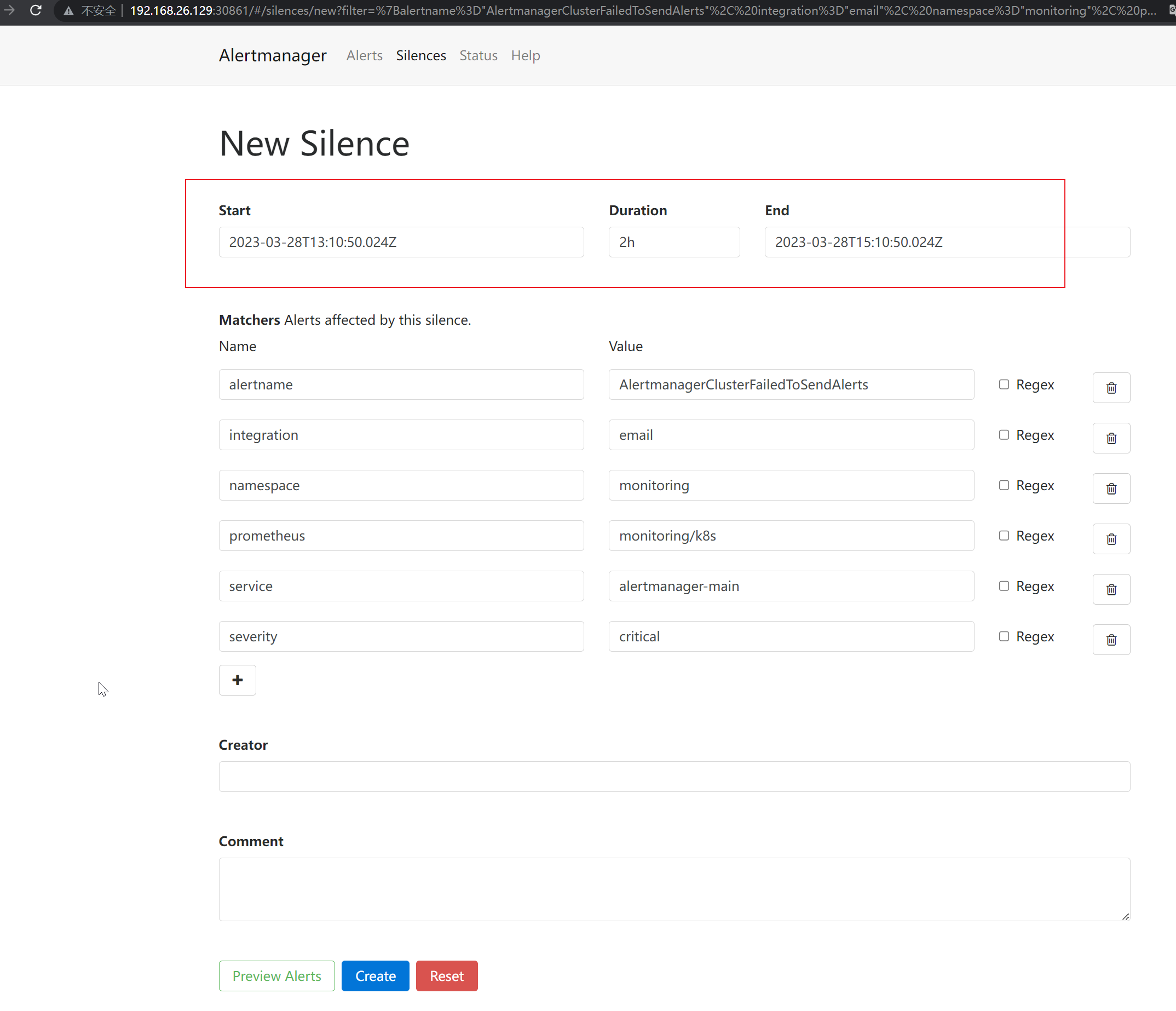

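Scheduled maintenance with Silences

Developing a Prometheus Exporter

- Gauges: values that can go up or down

- Counters: values that only increase

- Histograms: observations counted into buckets

- Summaries: observations summarized as quantiles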
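The metric types listed above differ mainly in what operations they allow and how they are rendered. The sketch below models a counter, a gauge, and a histogram with plain Python classes and prints them in the text exposition format. It is a teaching model under stated assumptions, not a client library: the class design and metric names are hypothetical, and summaries are left out because quantile estimation needs more machinery than fits here.

```python
import math


class Counter:
    """A value that only ever increases (for example, requests served)."""

    def __init__(self, name):
        self.name, self.value = name, 0.0

    def inc(self, amount=1.0):
        if amount < 0:
            raise ValueError("counters can only increase")
        self.value += amount

    def render(self):
        return [f"{self.name} {self.value}"]


class Gauge:
    """A value that can go up or down (for example, queue length)."""

    def __init__(self, name):
        self.name, self.value = name, 0.0

    def set(self, value):
        self.value = float(value)

    def render(self):
        return [f"{self.name} {self.value}"]


class Histogram:
    """Observations counted into cumulative buckets, plus sum and count."""

    def __init__(self, name, buckets):
        self.name = name
        self.bounds = sorted(buckets) + [math.inf]
        self.counts = [0] * len(self.bounds)
        self.total = 0.0

    def observe(self, value):
        self.total += value
        # Buckets are cumulative: an observation is counted in every
        # bucket whose upper bound is greater than or equal to it.
        for i, bound in enumerate(self.bounds):
            if value <= bound:
                self.counts[i] += 1

    def render(self):
        lines = []
        for bound, count in zip(self.bounds, self.counts):
            le = "+Inf" if bound == math.inf else str(bound)
            lines.append(f'{self.name}_bucket{{le="{le}"}} {count}')
        lines.append(f"{self.name}_sum {self.total}")
        lines.append(f"{self.name}_count {self.counts[-1]}")
        return lines


requests = Counter("demo_requests_total")
requests.inc()
requests.inc(2)

queue = Gauge("demo_queue_length")
queue.set(7)
queue.set(4)

latency = Histogram("demo_latency_seconds", buckets=[0.1, 0.5, 1.0])
for seconds in (0.25, 0.5, 2.0):
    latency.observe(seconds)

for metric in (requests, queue, latency):
    print("\n".join(metric.render()))
```

Because histogram buckets are cumulative, the `+Inf` bucket always equals the total number of observations, which is why it doubles as the `_count` value.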