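Python multithreading memory overflow: ThreadPoolExecutor memory overflow

ThreadPoolExecutor memory overflow

Scenario 1:

While processing a large amount of data with ThreadPoolExecutor (a thread pool), memory usage kept growing until it overflowed,

and the machine froze and crashed.

Reproducing the scenario: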

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
import time
from memory_profiler import profile
import queue
from line_profiler import LineProfiler
from functools import wraps


class BoundThreadPoolExecutor(ThreadPoolExecutor):
    """
    Subclass of ThreadPoolExecutor that puts a bound on the work queue
    """

    def __init__(self, qsize: int = 0, *args, **kwargs):
        super(BoundThreadPoolExecutor, self).__init__(*args, **kwargs)
        # queue.Queue(0) is unbounded; a positive qsize makes put(), and therefore submit(), block when full
        self._work_queue = queue.Queue(qsize)


def timer(func):
    # Line-by-line profiling decorator: runs func once under LineProfiler and prints the stats
    @wraps(func)
    def decorator(*args, **kwargs):
        lp = LineProfiler()
        lp_wrap = lp(func)
        func_return = lp_wrap(*args, **kwargs)
        lp.print_stats()
        return func_return

    return decorator


def func(num):
    print(f"the {num} run...")
    time.sleep(0.5)
    return num * num


# @timer
@profile
def main():
    # with ThreadPoolExecutor(max_workers=2) as t:
    #     res = [t.submit(func, i) for i in range(100)]
    # pool = BoundThreadPoolExecutor(qsize=2, max_workers=2)
    pool = ThreadPoolExecutor(max_workers=2)
    for i in range(100):
        # func(i)
        pool.submit(func, i)
        # number of tasks still waiting in the queue (private attribute)
        print(pool._work_queue.qsize())
    pool.shutdown()


if __name__ == '__main__':
    main()
```

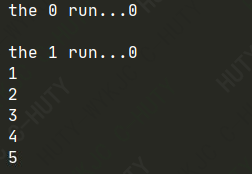

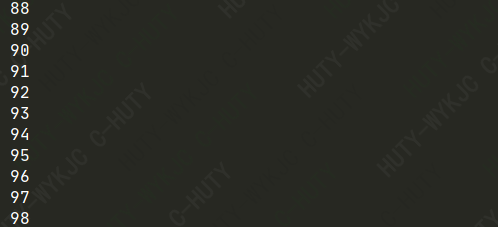

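Without a bound on the work queue, the process puts every submitted task into self._work_queue, whether or not a worker is free to run it.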
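To make the queue growth visible without the profiling tools, here is a minimal sketch that keeps both workers blocked on a `threading.Event` and then reads the queue length. The helpers `blocked_task` and `pending_after_submit` are hypothetical, and the code assumes CPython, where `_work_queue` is a private, undocumented attribute.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def blocked_task(gate: threading.Event, n: int) -> int:
    # Every task waits until the gate opens, so the workers cannot drain the queue
    gate.wait()
    return n * n


def pending_after_submit(num_tasks: int = 100, max_workers: int = 2) -> int:
    gate = threading.Event()
    pool = ThreadPoolExecutor(max_workers=max_workers)
    # submit() never blocks: all tasks are accepted immediately
    futures = [pool.submit(blocked_task, gate, i) for i in range(num_tasks)]
    # _work_queue is a CPython implementation detail, read here only for illustration
    pending = pool._work_queue.qsize()
    gate.set()  # let the workers finish
    pool.shutdown(wait=True)
    assert all(f.result() == i * i for i, f in enumerate(futures))
    return pending


print(pending_after_submit())
```

Each of the two workers can hold at most one task, so the printed count should be between 98 and 100: `submit()` returned for all 100 tasks although almost none of them could run yet.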

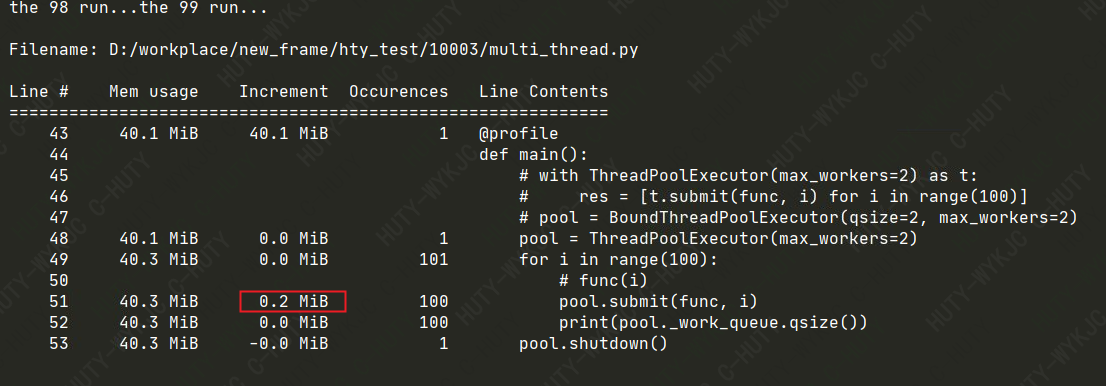

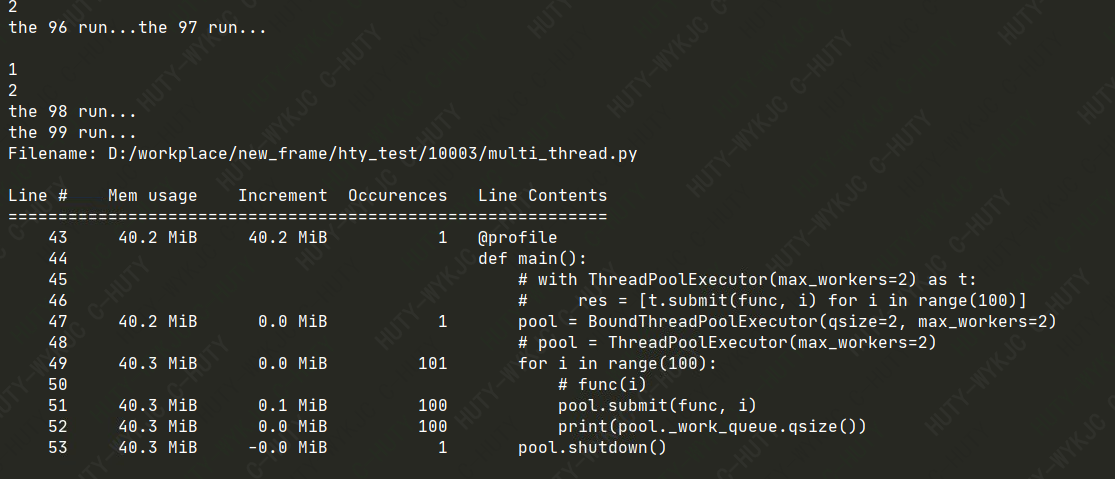

Subclass ThreadPoolExecutor and bound the queue size by setting self._work_queue = queue.Queue(qsize).

Comparison of results

Summary
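A minimal sketch of the bounded version, assuming CPython 3.7+ where the worker threads only use `put()`/`get()`/`get_nowait()` on `_work_queue`, all of which `queue.Queue` provides. `slow_square` and `max_pending` are hypothetical helpers that record the largest queue length seen while submitting. The default `qsize` is 0 here, because `queue.Queue(None)` would make `put()` raise `TypeError` when it compares `None > 0`.

```python
import queue
import time
from concurrent.futures import ThreadPoolExecutor


class BoundThreadPoolExecutor(ThreadPoolExecutor):
    """ThreadPoolExecutor whose work queue holds at most qsize pending tasks.

    Relies on the private _work_queue attribute (CPython implementation detail).
    """

    def __init__(self, qsize: int = 0, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # Queue.put() blocks while the queue is full, so submit() blocks too
        self._work_queue = queue.Queue(qsize)


def slow_square(n: int) -> int:
    time.sleep(0.01)
    return n * n


def max_pending(num_tasks: int = 50, qsize: int = 2) -> int:
    pool = BoundThreadPoolExecutor(qsize=qsize, max_workers=2)
    peak = 0
    futures = []
    for i in range(num_tasks):
        futures.append(pool.submit(slow_square, i))
        # Track the largest number of tasks waiting in the queue
        peak = max(peak, pool._work_queue.qsize())
    pool.shutdown(wait=True)
    assert [f.result() for f in futures] == [i * i for i in range(num_tasks)]
    return peak


print(max_pending())  # at most 2
```

The trade-off is that the submitting thread now waits whenever the queue is full, which is exactly what keeps memory flat.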

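The memory overflow happens because ThreadPoolExecutor uses an unbounded work queue. submit() puts every task into the queue without waiting for an idle worker thread,

so when tasks are submitted faster than the workers finish them, the pending tasks (and their arguments) pile up and memory consumption keeps growing.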
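Replacing `_work_queue` depends on CPython internals. A different technique, not the one used in this post, caps the number of in-flight tasks with `threading.BoundedSemaphore` and releases a slot from a `Future` done callback, using only public APIs. `BoundedSubmitter` is a hypothetical helper; this is a sketch under that assumption.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor


class BoundedSubmitter:
    """Hypothetical helper: limits queued + running tasks with a semaphore
    instead of replacing ThreadPoolExecutor's private _work_queue."""

    def __init__(self, executor: ThreadPoolExecutor, max_pending: int):
        self._executor = executor
        self._slots = threading.BoundedSemaphore(max_pending)

    def submit(self, fn, *args, **kwargs) -> Future:
        # Blocks once max_pending tasks are in flight
        self._slots.acquire()
        try:
            future = self._executor.submit(fn, *args, **kwargs)
        except BaseException:
            # submit() failed (e.g. executor already shut down): give the slot back
            self._slots.release()
            raise
        # Free the slot when the task finishes, fails or is cancelled
        future.add_done_callback(lambda _f: self._slots.release())
        return future


with ThreadPoolExecutor(max_workers=2) as pool:
    submitter = BoundedSubmitter(pool, max_pending=4)
    futures = [submitter.submit(pow, i, 2) for i in range(100)]
    print(sum(f.result() for f in futures))
```

Unlike the queue-size bound, this limit counts running tasks as well as queued ones, so `max_pending` should be at least `max_workers` to keep every thread busy.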
