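springboot-mybatis-plus basic project skeleton

This is only a minimal web skeleton. It provides unified exception handling and unified logging for API calls.

Project structure:

Note: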

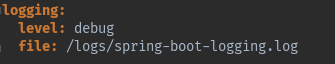

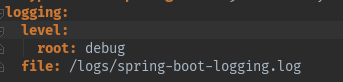

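Change the configuration as shown in the figure below; this makes the SQL statements print to the log output.

Source download: https://files.cnblogs.com/files/007sx/jarfk_v3.zip

The setup above is packaged as a jar and started from IDEA with the embedded Tomcat. To package the project as a war and run it on an external Tomcat, make the following changes:

1. Make the startup class JarfkApplication.java extend SpringBootServletInitializer and override the configure method: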

    package com.jarfk;

    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.boot.builder.SpringApplicationBuilder;
    import org.springframework.boot.web.support.SpringBootServletInitializer;

    @SpringBootApplication
    @MapperScan("com.jarfk.mapper*")
    public class JarfkApplication extends SpringBootServletInitializer {

        @Override
        protected SpringApplicationBuilder configure(SpringApplicationBuilder application) {
            return application.sources(JarfkApplication.class);
        }

        public static void main(String[] args) {
            SpringApplication.run(JarfkApplication.class, args);
        }
    }

2. In pom.xml:

    <groupId>com.jarfk</groupId>
    <artifactId>fack</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <packaging>jar</packaging>

Change to:

    <groupId>com.jarfk</groupId>
    <artifactId>fack</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <packaging>war</packaging>

Add:

    <!-- Add this when building a war: it tells Spring Boot that the Tomcat jars come from the external container and must not be packaged -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-tomcat</artifactId>
        <scope>provided</scope>
    </dependency>

Inside <plugins>, comment out:

    <!-- To build a jar and run it with java -jar **.jar, do not comment out spring-boot-maven-plugin -->
    <!--<plugin>-->
    <!--<groupId>org.springframework.boot</groupId>-->
    <!--<artifactId>spring-boot-maven-plugin</artifactId>-->
    <!--</plugin>-->

That completes the change.

To use custom log4j logging output, configure it as follows:

1. Add to pom.xml:

    <!-- log4j logging -->
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>1.7.21</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.21</version>
    </dependency>

2. Create log4j.properties under the resources directory with the following content:

    log4j.rootLogger=DEBUG, stdout

    ######################### logger ##############################
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%d [%t] %-5p %c - %m%n

    # Log level is DEBUG, logger name is extProfile
    log4j.logger.extProfile=DEBUG, extProfile
    # Write only to the dedicated file extProfile.log (additivity=false keeps these messages out of the root logger's appenders)
    log4j.additivity.extProfile=false
    log4j.appender.extProfile=org.apache.log4j.RollingFileAppender
    # Written to the logs folder under the Resin root directory; log4j creates the directory and file automatically
    log4j.appender.extProfile.File=logs/extProfile.log
    # Start a new file once the current one exceeds 20 MB
    log4j.appender.extProfile.MaxFileSize=20480KB
    log4j.appender.extProfile.MaxBackupIndex=10
    log4j.appender.extProfile.layout=org.apache.log4j.PatternLayout
    log4j.appender.extProfile.layout.ConversionPattern=%d [%t] %-5p %c - %m%n

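Those two steps are all that is needed. The configuration above applies to loggers of type org.apache.log4j.Logger.

To also configure loggers obtained through org.slf4j.LoggerFactory, do the following: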

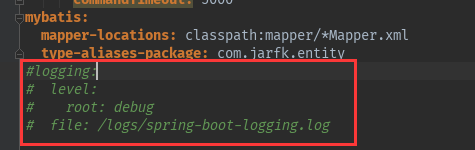

Comment out the logging settings in application.yml:

Then create logback.xml under the resources directory, as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration debug="false">
        <!-- Log file storage location. Do not use relative paths in the LogBack configuration. -->
        <property name="LOG_HOME" value="/spring-boot-log" />

        <!-- Console output -->
        <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
            <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
                <!-- Output format: %d is the date, %thread the thread name, %-5level the level left-aligned to 5 characters, %msg the log message, %n a line break -->
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern>
                <charset>GBK</charset>
            </encoder>
        </appender>

        <!-- Roll the log file daily -->
        <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <!-- File name pattern of the log files -->
                <FileNamePattern>${LOG_HOME}/web.log.%d{yyyy-MM-dd}.log</FileNamePattern>
                <!-- Number of days to keep log files -->
                <MaxHistory>30</MaxHistory>
            </rollingPolicy>
            <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
                <!-- Output format: %d is the date, %thread the thread name, %-5level the level left-aligned to 5 characters, %msg the log message, %n a line break -->
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern>
                <charset>UTF-8</charset>
            </encoder>
            <!-- Maximum size of a log file -->
            <triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">
                <MaxFileSize>10MB</MaxFileSize>
            </triggeringPolicy>
        </appender>

        <!-- Show parameters for Hibernate SQL (Hibernate-specific) -->
        <logger name="org.hibernate.type.descriptor.sql.BasicBinder" level="TRACE" />
        <logger name="org.hibernate.type.descriptor.sql.BasicExtractor" level="DEBUG" />
        <logger name="org.hibernate.SQL" level="DEBUG" />
        <logger name="org.hibernate.engine.QueryParameters" level="DEBUG" />
        <logger name="org.hibernate.engine.query.HQLQueryPlan" level="DEBUG" />

        <!-- MyBatis log configuration -->
        <logger name="org.apache.ibatis" level="TRACE"/>
        <logger name="java.sql.Connection" level="DEBUG"/>
        <logger name="java.sql.Statement" level="DEBUG"/>
        <logger name="java.sql.PreparedStatement" level="DEBUG"/>

        <!-- Log output level -->
        <root level="DEBUG">
            <appender-ref ref="STDOUT" />
            <appender-ref ref="FILE" />
        </root>

        <!-- Write logs to the database asynchronously -->
        <!--<appender name="DB" class="ch.qos.logback.classic.db.DBAppender">-->
        <!--<!–Write logs to the database asynchronously –>-->
        <!--<connectionSource class="ch.qos.logback.core.db.DriverManagerConnectionSource">-->
        <!--<!–Connection pool –>-->
        <!--<dataSource class="com.mchange.v2.c3p0.ComboPooledDataSource">-->
        <!--<driverClass>com.mysql.jdbc.Driver</driverClass>-->
        <!--<url>jdbc:mysql://127.0.0.1:3306/databaseName</url>-->
        <!--<user>root</user>-->
        <!--<password>root</password>-->
        <!--</dataSource>-->
        <!--</connectionSource>-->
        <!--</appender>-->
    </configuration>

If you need to obtain beans inside plain classes that Spring does not manage, create SpringAutoWriteUtil in a package that Spring Boot scans:

    package com.jarfk.util;

    import org.springframework.beans.BeansException;
    import org.springframework.context.ApplicationContext;
    import org.springframework.context.ApplicationContextAware;
    import org.springframework.stereotype.Component;

    /**
     * Utility class for obtaining beans.
     * Note: this class must be in a package that Spring Boot scans.
     * Created by Administrator on 2017/9/7.
     */
    @Component
    public class SpringAutoWriteUtil implements ApplicationContextAware {

        private static ApplicationContext applicationContext = null;

        @Override
        public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {
            if (SpringAutoWriteUtil.applicationContext == null) {
                SpringAutoWriteUtil.applicationContext = applicationContext;
            }
        }

        // Returns the stored applicationContext.
        private static ApplicationContext getApplicationContext() {
            return applicationContext;
        }

        // Looks up a bean by name.
        public static Object getBean(String name) {
            return getApplicationContext().getBean(name);
        }

        // Looks up a bean by class.
        public static <T> T getBean(Class<T> clazz) {
            return getApplicationContext().getBean(clazz);
        }

        // Looks up a bean by name and class.
        public static <T> T getBean(String name, Class<T> clazz) {
            return getApplicationContext().getBean(name, clazz);
        }
    }

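Usage: call the static methods from any class once the Spring context has started, for example SpringAutoWriteUtil.getBean(RedisClusterCache.class) for the RedisClusterCache bean defined later in this post.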

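Appendix: integrating Redis

1. Add two dependencies to pom.xml

    <!-- redis -->
    <dependency>
        <groupId>redis.clients</groupId>
        <artifactId>jedis</artifactId>
        <version>2.9.0</version>
    </dependency>
    <dependency>
        <groupId>org.springframework.data</groupId>
        <artifactId>spring-data-redis</artifactId>
        <version>1.8.1.RELEASE</version>
    </dependency>

2. Configure the Redis service in application.yml (the configuration class in step 3 reads spring.redis.cache.clusterNodes, spring.redis.cache.password and spring.redis.cache.commandTimeout)

3. Create the JedisClusterConfig configuration class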

    package com.jarfk.config;

    import org.apache.commons.pool2.impl.GenericObjectPoolConfig;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnClass;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import redis.clients.jedis.HostAndPort;
    import redis.clients.jedis.JedisCluster;

    import java.util.HashSet;
    import java.util.Set;

    /**
     * Created by Administrator on 2017/9/25 0025.
     */
    @Configuration
    @ConditionalOnClass({ JedisCluster.class })
    public class JedisClusterConfig {

        @Value("${spring.redis.cache.clusterNodes}")
        private String clusterNodes;

        @Value("${spring.redis.cache.password}")
        private String password;

        @Value("${spring.redis.cache.commandTimeout}")
        private Integer commandTimeout;

        @Bean
        public JedisCluster getJedisCluster() {
            String[] serverArray = clusterNodes.split(",");
            Set<HostAndPort> nodes = new HashSet<>();
            for (String ipPort : serverArray) {
                String[] ipPortPair = ipPort.split(":");
                nodes.add(new HostAndPort(ipPortPair[0].trim(), Integer.valueOf(ipPortPair[1].trim())));
            }
            return new JedisCluster(nodes, commandTimeout, commandTimeout, 2, password, new GenericObjectPoolConfig());
        }
    }
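The configuration class above expects spring.redis.cache.clusterNodes to be a comma-separated list of host:port pairs. The JDK-only sketch below mirrors that parsing step so the expected format can be checked without a Redis cluster. The class name ClusterNodesParser, the parse method, and the sample addresses are hypothetical and not part of the original project; InetSocketAddress stands in for the Jedis HostAndPort type.

```java
import java.net.InetSocketAddress;
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical helper, not part of the original project.
final class ClusterNodesParser {

    private ClusterNodesParser() {
    }

    // Splits "host1:port1,host2:port2" into unresolved socket addresses,
    // following the same split-and-trim steps as JedisClusterConfig.
    static Set<InetSocketAddress> parse(String clusterNodes) {
        Set<InetSocketAddress> nodes = new LinkedHashSet<>();
        for (String ipPort : clusterNodes.split(",")) {
            String[] ipPortPair = ipPort.split(":");
            if (ipPortPair.length != 2) {
                throw new IllegalArgumentException("Expected host:port but got: " + ipPort);
            }
            String host = ipPortPair[0].trim();
            int port = Integer.parseInt(ipPortPair[1].trim());
            // createUnresolved avoids a DNS lookup; only the format is checked here.
            nodes.add(InetSocketAddress.createUnresolved(host, port));
        }
        return nodes;
    }
}
```

Under these assumptions, a value such as 192.168.0.1:7000,192.168.0.2:7001 yields two nodes, while an entry without a port is rejected with an IllegalArgumentException instead of failing later with an array index error.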

4. Create the Redis service class RedisClusterCache (afterwards, injecting this class is all that is needed to work with the Redis database)

    package com.jarfk.util.redis;

    import com.jarfk.util.SerializerUtil;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;
    import redis.clients.jedis.JedisCluster;

    /**
     * Created by Administrator on 2017/9/25 0025.
     */
    @Component
    public class RedisClusterCache {

        @Autowired
        private JedisCluster jedisCluster;

        /**
         * Adds an entry to the cache.
         * @param key cache key
         * @param obj value to cache
         * @param <T> type of the value
         * @return the status reply from Redis
         * @throws Exception
         */
        public <T> String putCache(String key, T obj) throws Exception {
            final byte[] bkey = key.getBytes();
            final byte[] bvalue = SerializerUtil.serializeObj(obj);
            return jedisCluster.set(bkey, bvalue);
        }

        /**
         * Adds an entry to the cache with an expiry time.
         * @param key cache key
         * @param obj value to cache
         * @param expireTime expiry time in seconds
         * @param <T> type of the value
         * @return the status reply from Redis
         * @throws Exception
         */
        public <T> String putCacheWithExpireTime(String key, T obj, final int expireTime) throws Exception {
            final byte[] bkey = key.getBytes();
            final byte[] bvalue = SerializerUtil.serializeObj(obj);
            String result = jedisCluster.setex(bkey, expireTime, bvalue);
            return result;
        }

        /**
         * Reads a cached entry by key.
         * @param key cache key
         * @param <T> expected type of the value
         * @return the cached value
         * @throws Exception
         */
        public <T> T getCache(final String key) throws Exception {
            byte[] result = jedisCluster.get(key.getBytes());
            return (T) SerializerUtil.deserializeObj(result);
        }

        /**
         * Deletes a cached entry by key.
         * @param key cache key
         * @throws Exception
         */
        public void delCache(final String key) throws Exception {
            jedisCluster.del(key.getBytes());
        }
    }
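RedisClusterCache relies on com.jarfk.util.SerializerUtil, which is not listed in this post. A minimal sketch, assuming it uses standard JDK object serialization, might look like the following. Only the method names serializeObj and deserializeObj come from the code above; the signatures, the null handling, and the omission of the package declaration are assumptions.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;

// Sketch only: the real SerializerUtil in the project may differ.
final class SerializerUtil {

    private SerializerUtil() {
    }

    // Converts a Serializable object to bytes using JDK serialization.
    static byte[] serializeObj(Object obj) throws IOException {
        if (obj == null) {
            return null;
        }
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(obj);
        }
        return buffer.toByteArray();
    }

    // Restores an object from bytes; returns null when there are no bytes,
    // which is what a cache miss would produce.
    static Object deserializeObj(byte[] bytes) throws IOException, ClassNotFoundException {
        if (bytes == null) {
            return null;
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        }
    }
}
```

With this approach every cached value has to implement java.io.Serializable, otherwise serializeObj fails with a NotSerializableException.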

5. Additional: a distributed lock implemented on a Redis cluster:

http://www.cnblogs.com/007sx/p/7655057.html

Database table:

    CREATE TABLE `sys_user` (
      `id` bigint(20) NOT NULL AUTO_INCREMENT,
      `nickname` varchar(20) DEFAULT NULL COMMENT 'User nickname',
      `email` varchar(128) DEFAULT NULL COMMENT 'Email | login account',
      `pswd` varchar(32) DEFAULT NULL COMMENT 'Password',
      `salt` varchar(255) DEFAULT NULL COMMENT 'Salt',
      `create_time` datetime DEFAULT NULL COMMENT 'Creation time',
      `last_login_time` datetime DEFAULT NULL COMMENT 'Last login time',
      `status` bigint(1) DEFAULT '1' COMMENT '1: active, 0: login disabled',
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB AUTO_INCREMENT=16 DEFAULT CHARSET=utf8

Create the table yourself. It uses an auto-increment id, which you can reconfigure to suit your needs.
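For reference, a plain Java class mirroring the columns of sys_user could look like the sketch below. The class name SysUser, the field names, and the chosen Java types are assumptions derived from the column definitions; the project's actual entity is not shown in this post. In a MyBatis-Plus project the class would normally also carry table and id annotations (such as @TableName and @TableId), which are left out here so that the sketch compiles with the JDK alone.

```java
import java.util.Date;

// Hypothetical entity for the sys_user table; not taken from the original project.
class SysUser {
    private Long id;             // auto-increment primary key
    private String nickname;     // user nickname
    private String email;        // email, also used as the login account
    private String pswd;         // password as stored in the table
    private String salt;         // salt
    private Date createTime;     // maps to create_time
    private Date lastLoginTime;  // maps to last_login_time
    private Long status = 1L;    // 1: active, 0: login disabled (column default is 1)

    public Long getId() { return id; }
    public void setId(Long id) { this.id = id; }

    public String getNickname() { return nickname; }
    public void setNickname(String nickname) { this.nickname = nickname; }

    public String getEmail() { return email; }
    public void setEmail(String email) { this.email = email; }

    public String getPswd() { return pswd; }
    public void setPswd(String pswd) { this.pswd = pswd; }

    public String getSalt() { return salt; }
    public void setSalt(String salt) { this.salt = salt; }

    public Date getCreateTime() { return createTime; }
    public void setCreateTime(Date createTime) { this.createTime = createTime; }

    public Date getLastLoginTime() { return lastLoginTime; }
    public void setLastLoginTime(Date lastLoginTime) { this.lastLoginTime = lastLoginTime; }

    public Long getStatus() { return status; }
    public void setStatus(Long status) { this.status = status; }
}
```

The id field is left null for new objects so that the database can assign the auto-increment value on insert.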

