Python RabbitMQ Programming

The simplest queue communication

Sender

```python
#!/usr/bin/env python
import pika

credentials = pika.PlainCredentials("username", "password")
connection = pika.BlockingConnection(pika.ConnectionParameters(
    'localhost', credentials=credentials))
channel = connection.channel()  # open a channel over the AMQP connection

# Declare the queue
channel.queue_declare(queue='hello')

# In RabbitMQ a message can never be sent directly to the queue, it always needs to go through an exchange.
channel.basic_publish(exchange='',
                      routing_key='hello',
                      body='Hello World!')
print(" [x] Sent 'Hello World!'")
connection.close()
```

Receiver

```python
# _*_coding:utf-8_*_
__author__ = 'Alex Li'
import pika

credentials = pika.PlainCredentials("username", "password")
connection = pika.BlockingConnection(pika.ConnectionParameters(
    'localhost', credentials=credentials))
channel = connection.channel()  # open a channel over the AMQP connection

# You may ask why we declare the queue again; we have already declared it in our previous code.
# We could avoid that if we were sure that the queue already exists. For example if send.py program
# was run before. But we're not yet sure which program to run first. In such cases it's a good
# practice to repeat declaring the queue in both programs.
channel.queue_declare(queue='hello')


def callback(ch, method, properties, body):
    # Called each time a message is taken from the queue
    print(" [x] Received %r" % body)


# pika >= 1.0 signature; pika 0.x used basic_consume(callback, queue='hello', no_ack=True)
channel.basic_consume(queue='hello',
                      on_message_callback=callback,
                      auto_ack=True)

print(' [*] Waiting for messages. To exit press CTRL+C')
channel.start_consuming()
```

#Note: connecting to a remote RabbitMQ server requires configuring permissions.

#1. Create the user and set its password

```shell
rabbitmqctl add_user name pass
rabbitmqctl set_user_tags name administrator
```

#2. Set the permissions so the user can connect from outside

```shell
rabbitmqctl set_permissions -p / name ".*" ".*" ".*"
```

`set_permissions [-p vhost] {user} {conf} {write} {read}`

- `vhost`: The name of the virtual host to which to grant the user access, defaulting to `/`.
- `user`: The name of the user to grant access to the specified virtual host.
- `conf`: A regular expression matching resource names for which the user is granted configure permissions.
- `write`: A regular expression matching resource names for which the user is granted write permissions.
- `read`: A regular expression matching resource names for which the user is granted read permissions.

#3. Add the credentials to both the producer and the consumer

```python
credentials = pika.PlainCredentials("username", "password")
```
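When the producer and consumer connect to a remote server, the connection details can also be written as a single AMQP URI. The following is a minimal stdlib-only sketch; the helper name `build_amqp_url` and the sample host are illustrative assumptions, not part of the original setup. It shows that the default vhost `/` has to be percent-encoded as `%2F` inside the URI.

```python
from urllib.parse import quote


def build_amqp_url(user, password, host, port=5672, vhost="/"):
    """Hypothetical helper: build an AMQP URI for a remote RabbitMQ server.

    Each component is percent-encoded, so the default vhost "/" becomes
    "%2F" and special characters in the password (such as "@") are escaped.
    """
    return "amqp://%s:%s@%s:%d/%s" % (
        quote(user, safe=""),
        quote(password, safe=""),
        host,
        port,
        quote(vhost, safe=""),
    )


if __name__ == "__main__":
    # "name"/"pass" match the rabbitmqctl example above; the host is illustrative.
    url = build_amqp_url("name", "pass", "192.168.137.208")
    print(url)  # amqp://name:pass@192.168.137.208:5672/%2F
```

With pika installed, such a URL can be passed as `pika.BlockingConnection(pika.URLParameters(url))` instead of building `ConnectionParameters` and `PlainCredentials` separately.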

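```python
# Why declare the queue twice? Here "hello" is the queue name.
channel.queue_declare(queue='hello')
# This handles the case where the sender starts before the receiver: the sender creates the queue first.
# If the sender has already declared it, the receiver checks whether the queue exists; if it does,
# the receiver's declaration is effectively a no-op, and if it does not, the receiver creates the queue.
```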
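The reasoning behind declaring the queue on both sides can be illustrated with a toy in-memory model. This is a sketch under simplifying assumptions: the `ToyBroker` class is hypothetical and only mimics two behaviours, namely that declaring a queue that already exists changes nothing, and that a message published through the default exchange to a queue that does not exist yet is discarded.

```python
class ToyBroker:
    """Hypothetical in-memory stand-in for a broker; not a RabbitMQ client."""

    def __init__(self):
        self.queues = {}  # queue name -> list of pending message bodies

    def queue_declare(self, queue):
        """Create the queue if it is missing; return True only if it was created."""
        if queue in self.queues:
            return False  # already exists: the declaration is a no-op
        self.queues[queue] = []
        return True

    def basic_publish(self, routing_key, body):
        """Default-exchange publish: route to the queue named routing_key.

        Returns False when no such queue exists and the message is discarded.
        """
        if routing_key not in self.queues:
            return False
        self.queues[routing_key].append(body)
        return True


broker = ToyBroker()
# Publishing before anyone has declared the queue loses the message.
lost = broker.basic_publish("hello", "too early")
# The sender declares the queue and publishes, then the receiver declares it again.
broker.queue_declare("hello")
broker.basic_publish("hello", "Hello World!")
broker.queue_declare("hello")
print(lost, broker.queues)  # False {'hello': ['Hello World!']}
```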

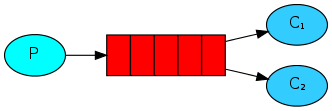

Distributing messages evenly (round-robin dispatching)

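In this mode, RabbitMQ by default dispatches the messages sent by the producer (P) to the consumers (C) one after another.

The messages go to the receivers in round-robin order: the first message to the first receiver, the second message to the second receiver, and so on.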
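The round-robin order can be modelled in a few lines of plain Python. This is only a sketch of the dispatch order (the function name `round_robin` and the consumer names are made up); the real broker also takes prefetch limits and acknowledgments into account.

```python
from itertools import cycle


def round_robin(messages, consumers):
    """Assign each message to the next consumer in turn."""
    assignment = {consumer: [] for consumer in consumers}
    # zip stops as soon as the messages run out, so cycle() never loops forever
    for consumer, message in zip(cycle(consumers), messages):
        assignment[consumer].append(message)
    return assignment


print(round_robin(["m1", "m2", "m3", "m4", "m5"], ["C1", "C2"]))
# {'C1': ['m1', 'm3', 'm5'], 'C2': ['m2', 'm4']}
```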

Sender

```python
import sys
import time

import pika

credentials = pika.PlainCredentials("username", "password")
connection = pika.BlockingConnection(pika.ConnectionParameters(
    'localhost', credentials=credentials))
channel = connection.channel()

# Declare the queue as durable so persistent messages can survive a broker restart
channel.queue_declare(queue='task_queue', durable=True)

# In RabbitMQ a message can never be sent directly to the queue, it always needs to go through an exchange.
message = ' '.join(sys.argv[1:]) or "Hello World! %s" % time.time()
channel.basic_publish(exchange='',
                      routing_key='task_queue',
                      body=message,
                      properties=pika.BasicProperties(
                          delivery_mode=2,  # make message persistent
                      ))
print(" [x] Sent %r" % message)
connection.close()
```

Receiver

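```python
# _*_coding:utf-8_*_
import time

import pika

credentials = pika.PlainCredentials("username", "password")
connection = pika.BlockingConnection(pika.ConnectionParameters(
    'localhost', credentials=credentials))
channel = connection.channel()

# Declare the queue here too, with the same arguments as the sender, in case this side starts first
channel.queue_declare(queue='task_queue', durable=True)


def callback(ch, method, properties, body):
    print(" [x] Received %r" % body)
    time.sleep(20)  # simulate a slow task
    print(" [x] Done")
    print("method.delivery_tag", method.delivery_tag)
    # Acknowledge manually once the work is done
    ch.basic_ack(delivery_tag=method.delivery_tag)


# auto_ack stays at its default (False) because the callback acks manually;
# combining auto_ack=True with basic_ack makes the broker close the channel
# with an "unknown delivery tag" error.
channel.basic_consume(queue='task_queue', on_message_callback=callback)

print(' [*] Waiting for messages. To exit press CTRL+C')
channel.start_consuming()
```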

Message acknowledgment

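Doing a task can take a few seconds. You may wonder what happens if one of the consumers starts a long task and dies with it only partly done. With our current code, once RabbitMQ delivers a message to the consumer, it immediately marks it for deletion. In this case, if you kill a worker, we lose the message it was just processing. We also lose all the messages that were dispatched to this particular worker but not yet handled.

But we don't want to lose any tasks. If a worker dies, we'd like the task to be delivered to another worker.

To make sure a message is never lost, RabbitMQ supports message acknowledgments. The consumer sends back an ack(nowledgement) to tell RabbitMQ that a particular message has been received and processed and that RabbitMQ is free to delete it.

If a consumer dies (its channel is closed, its connection is closed, or its TCP connection is lost) without sending an ack, RabbitMQ will understand that the message wasn't fully processed and will re-queue it. If other consumers are online at the same time, it will quickly redeliver the message to one of them. That way you can be sure that no message is lost, even if workers occasionally die.

RabbitMQ does not time out a message just because processing is slow; it redelivers the message when the consumer dies, so it is fine even if processing takes a very long time. (Recent RabbitMQ versions do enforce a delivery acknowledgement timeout, 30 minutes by default and configurable via `consumer_timeout`, to detect stuck consumers.)

Manual message acknowledgments are turned on by default. In the previous examples we explicitly turned them off via the auto_ack=True flag. It's time to remove this flag and have the worker send a proper acknowledgment once it is done with a task.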

```python
import time


def callback(ch, method, properties, body):
    print(" [x] Received %r" % (body,))
    time.sleep(body.count(b'.'))  # body is bytes in Python 3: one second per dot
    print(" [x] Done")
    ch.basic_ack(delivery_tag=method.delivery_tag)


channel.basic_consume(queue='hello', on_message_callback=callback)
```

Using this code we can be sure that even if you kill a worker using CTRL+C while it was processing a message, nothing will be lost. Soon after the worker dies, all unacknowledged messages will be redelivered.
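The redelivery rule can be sketched with a toy queue that tracks unacknowledged deliveries. This is a simplified model, not RabbitMQ's implementation: the `ToyAckQueue` class and the worker names are hypothetical, and this sketch simply puts requeued messages back at the head of the queue in their original order.

```python
from collections import deque


class ToyAckQueue:
    """Hypothetical model of a queue consumed with manual acknowledgments."""

    def __init__(self, messages):
        self.ready = deque(messages)  # waiting to be delivered
        self.unacked = {}             # delivery_tag -> (consumer, message)
        self.next_tag = 1

    def deliver(self, consumer):
        """Hand the next ready message to consumer; it stays unacked until ack()."""
        message = self.ready.popleft()
        tag = self.next_tag
        self.next_tag += 1
        self.unacked[tag] = (consumer, message)
        return tag, message

    def ack(self, tag):
        """Forget the message for good (raises KeyError for an unknown tag)."""
        del self.unacked[tag]

    def consumer_died(self, consumer):
        """Requeue every message the dead consumer never acknowledged."""
        tags = [t for t, (c, _) in self.unacked.items() if c == consumer]
        # Walk the tags newest-first so appendleft() restores the original order
        for tag in sorted(tags, reverse=True):
            _, message = self.unacked.pop(tag)
            self.ready.appendleft(message)


q = ToyAckQueue(["task1", "task2", "task3"])
tag_a, _ = q.deliver("worker_a")  # worker_a gets task1
tag_b, _ = q.deliver("worker_b")  # worker_b gets task2
q.ack(tag_b)                      # worker_b finishes and acks
q.consumer_died("worker_a")       # worker_a dies without acking task1
print(list(q.ready))              # ['task1', 'task3']
```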

Message durability

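We have learned how to make sure that the task isn't lost even if the consumer dies. But our tasks will still be lost if the RabbitMQ server stops.

When RabbitMQ quits or crashes, it forgets the queues and messages unless you tell it not to. Two things are required to make sure messages aren't lost: we need to mark both the queue and the messages as durable.

First, we need to make sure that RabbitMQ never loses our queue. To do so, we declare it as durable: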

```python
channel.queue_declare(queue='hello', durable=True)
```

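Although this command is correct by itself, it won't work in our setup. That's because we have already defined a queue called hello which is not durable. RabbitMQ doesn't allow you to redefine an existing queue with different parameters and returns an error to any program that tries to do so. But there is a quick workaround: let's declare a queue with a different name, for example task_queue: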
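Why redeclaring `hello` fails can be shown with another toy model. It is an assumption-laden sketch: the `ToyQueueRegistry` class is hypothetical and only tracks the `durable` flag, and it raises a Python `ValueError` where the real broker closes the channel with a `PRECONDITION_FAILED` error.

```python
class ToyQueueRegistry:
    """Hypothetical model: a queue keeps the arguments it was first declared with."""

    def __init__(self):
        self.queues = {}  # queue name -> {"durable": bool}

    def queue_declare(self, queue, durable=False):
        args = {"durable": durable}
        # setdefault stores args only if the queue is new, then returns the stored ones
        existing = self.queues.setdefault(queue, args)
        if existing != args:
            raise ValueError("queue %r already exists with arguments %r" % (queue, existing))
        return queue


registry = ToyQueueRegistry()
registry.queue_declare("hello")                     # created as non-durable
try:
    registry.queue_declare("hello", durable=True)  # same name, different arguments
except ValueError as exc:
    print("error:", exc)
registry.queue_declare("task_queue", durable=True)  # a new name works
```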

```python
channel.queue_declare(queue='task_queue', durable=True)
```

This queue_declare change needs to be applied to both the producer and the consumer code.

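At this point we're sure that the task_queue queue won't be lost even if RabbitMQ restarts. Now we need to mark our messages as persistent by supplying a delivery_mode property with a value of 2.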

```python
channel.basic_publish(exchange='',
                      routing_key="task_queue",
                      body=message,
                      properties=pika.BasicProperties(
                          delivery_mode=2,  # make message persistent
                      ))
```
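How the two conditions (durable queue and persistent message) combine can be expressed as a toy function. It is a simplified model of classic queues across a broker restart with a made-up input format; in particular it ignores the short window in which RabbitMQ may have accepted a persistent message but not yet written it to disk.

```python
def survive_restart(queues):
    """Return the messages still present after a restart (toy model).

    queues maps a queue name to (durable, [(body, delivery_mode), ...]).
    Only durable queues are recreated, and only persistent messages
    (delivery_mode=2) inside them are kept.
    """
    return {
        name: [body for body, mode in messages if mode == 2]
        for name, (durable, messages) in queues.items()
        if durable
    }


before = {
    "hello": (False, [("a", 2)]),                # non-durable queue: lost entirely
    "task_queue": (True, [("b", 1), ("c", 2)]),  # durable queue: only "c" is persistent
}
print(survive_restart(before))  # {'task_queue': ['c']}
```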

Load balancing

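If RabbitMQ simply dispatches messages to the consumers in order without considering their load, a consumer on a low-spec machine may pile up messages it cannot get through, while a consumer on a high-spec machine stays idle. To solve this, set prefetch_count=1 on each consumer. This tells RabbitMQ not to send the consumer a new message while it has not yet finished (acknowledged) the current one, so it relies on manual acknowledgments.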
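The effect of prefetch_count=1 can be estimated with a small simulation. This is a toy model under stated assumptions: every message is already waiting in the queue, `durations` (hypothetical seconds per message for each worker) are fixed, and when two workers are free at the same moment the lower-numbered one wins. Without a prefetch limit the messages are split round-robin up front; with prefetch_count=1 each message goes to whichever worker becomes free first.

```python
def finish_times(num_messages, durations, prefetch_one):
    """Return when each worker finishes its share of num_messages tasks."""
    busy_until = [0] * len(durations)
    for m in range(num_messages):
        if prefetch_one:
            # The next message goes to the worker that frees up first
            worker = min(range(len(durations)), key=lambda i: busy_until[i])
        else:
            # Plain round-robin, regardless of how busy each worker is
            worker = m % len(durations)
        busy_until[worker] += durations[worker]
    return busy_until


# A fast worker (1 s per task) and a slow worker (3 s per task), 6 tasks
print(finish_times(6, [1, 3], prefetch_one=False))  # [3, 9]
print(finish_times(6, [1, 3], prefetch_one=True))   # [4, 6]
```

In this toy run the last task finishes at 6 s instead of 9 s, because the fast worker picks up work that round-robin would have queued behind the slow one.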

Sender

```python
#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(
    pika.ConnectionParameters(host='localhost'))
channel = connection.channel()

channel.queue_declare(queue='task_queue', durable=True)

message = ' '.join(sys.argv[1:]) or "Hello World!"
channel.basic_publish(
    exchange='',
    routing_key='task_queue',
    body=message,
    properties=pika.BasicProperties(
        delivery_mode=2,  # make message persistent
    ))
print(" [x] Sent %r" % message)
connection.close()
```

Receiver

```python
#!/usr/bin/env python
import pika
import time

connection = pika.BlockingConnection(
    pika.ConnectionParameters(host='localhost'))
channel = connection.channel()

channel.queue_declare(queue='task_queue', durable=True)
print(' [*] Waiting for messages. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] Received %r" % body)
    time.sleep(body.count(b'.'))
    print(" [x] Done")
    ch.basic_ack(delivery_tag=method.delivery_tag)


# Do not send this consumer a new message until it has acked the previous one
channel.basic_qos(prefetch_count=1)
channel.basic_consume(queue='task_queue', on_message_callback=callback)

channel.start_consuming()
```

Publish/subscribe

The following is reproduced from: https://www.cnblogs.com/wangqiaomei/p/5715331.html

How publish/subscribe works:

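1) The difference between publish/subscribe and a simple message queue is that publish/subscribe delivers each message to all subscribers, whereas data in a message queue disappears once it has been consumed.

2) So when RabbitMQ implements publish/subscribe, it creates a queue for each subscriber, and when the publisher publishes a message, the message is placed into all the relevant queues.

3) An exchange can deliver a message to multiple queues! The exchange type determines which queues the message is sent to.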

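Applying publish/subscribe to monitoring:

The template is simply a script placed on the server.

Every 5 minutes, each client fetches the monitoring template from the server, collects data according to the template,

and then publishes the results to a Redis channel on the server.

When the server receives the data, it 1) processes the history records, 2) raises alerts, and 3) shows the monitoring information on a dashboard.

So three components on the server keep subscribing to the server-side channel (continuously receiving the clients' monitoring data).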

fanout: publish to all bound queues (broadcast)

exchange type = fanout

An exchange can deliver a message to multiple queues; type = fanout means that every queue bound to the exchange receives the message.
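A fanout exchange's routing rule is simple enough to model directly. The sketch below is hypothetical: `ToyFanoutExchange` and the `amq.gen-...` queue names are made up for illustration (although RabbitMQ does give server-named queues an `amq.gen-` prefix). It also shows a consequence of using temporary subscriber queues: a message published while no queue is bound goes nowhere.

```python
class ToyFanoutExchange:
    """Hypothetical model of a fanout exchange: every bound queue gets a copy."""

    def __init__(self):
        self.bound = []   # names of queues bound to this exchange
        self.queues = {}  # queue name -> list of received messages

    def queue_bind(self, queue):
        if queue not in self.bound:
            self.bound.append(queue)
            self.queues[queue] = []

    def publish(self, body, routing_key=""):
        """Copy body into every bound queue; routing_key is ignored by fanout."""
        for queue in self.bound:
            self.queues[queue].append(body)
        return len(self.bound)  # number of queues that received the message


logs = ToyFanoutExchange()
print(logs.publish("nobody is listening"))  # 0: no queue bound yet, message lost
logs.queue_bind("amq.gen-subscriber1")
logs.queue_bind("amq.gen-subscriber2")
print(logs.publish("info: Hello World!"))   # 2
print(logs.queues)
```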

Publisher:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys

# ########################### Publisher ###########################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

# pika >= 1.0 calls this argument exchange_type (older pika also accepted the deprecated type)
channel.exchange_declare(exchange='logs',
                         exchange_type='fanout')

message = ' '.join(sys.argv[1:]) or "info: Hello World!"
# A fanout exchange ignores the routing key, so it is left empty
channel.basic_publish(exchange='logs',
                      routing_key='',
                      body=message)
print(" [x] Sent %r" % message)
connection.close()
```

Subscriber:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika

# ########################### Subscriber ###########################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='logs',
                         exchange_type='fanout')

# Create a randomly named queue; exclusive=True deletes it when this connection closes
result = channel.queue_declare(queue='', exclusive=True)
queue_name = result.method.queue

# Bind the queue to the exchange
channel.queue_bind(exchange='logs',
                   queue=queue_name)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r" % body)


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()

'''
Running this file several times creates several randomly named queues, and all of them are bound to the exchange.

The publisher then only needs to send a message to the exchange: every queue bound to it receives the message, so every subscriber gets it.
'''
```

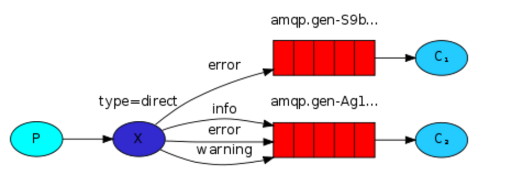

direct: send by keyword (exact match)

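A queue can also be bound with multiple keywords.

For example, bind three keywords to one randomly named queue,

then run the script again and bind only one keyword to another random queue.

Consumer: each run creates a new queue, and the queue's keywords are passed in as command-line arguments.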
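The exact-match rule of a direct exchange, including a queue bound with several keywords, can be sketched as a lookup over (queue, binding key) pairs. The function and queue names are hypothetical; the bindings mirror consumer 1 (info, warning, error) and consumer 2 (error only) from the easier-to-test version below.

```python
def route_direct(bindings, routing_key):
    """Return the queues whose binding key equals routing_key exactly.

    bindings is a list of (queue, binding_key) pairs; one queue may appear
    several times with different keys. A set is used so that each matching
    queue is listed once.
    """
    return sorted({queue for queue, key in bindings if key == routing_key})


bindings = [
    ("queue1", "info"), ("queue1", "warning"), ("queue1", "error"),
    ("queue2", "error"),
]
print(route_direct(bindings, "info"))   # ['queue1']
print(route_direct(bindings, "error"))  # ['queue1', 'queue2']
print(route_direct(bindings, "debug"))  # []
```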

```python
#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

result = channel.queue_declare(queue='', exclusive=True)
queue_name = result.method.queue

severities = sys.argv[1:]
if not severities:
    sys.stderr.write("Usage: %s [info] [warning] [error]\n" % sys.argv[0])
    sys.exit(1)

# Bind the same queue once per keyword
for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()
```

An easier-to-test version:

Consumer 1:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys

# ########################### Consumer 1 ###########################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

result = channel.queue_declare(queue='', exclusive=True)  # randomly named queue
queue_name = result.method.queue

severities = ["info", "warning", "error"]
if not severities:
    sys.stderr.write("Usage: %s [info] [warning] [error]\n" % sys.argv[0])
    sys.exit(1)

for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()
```

Consumer 2:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys

# ########################### Consumer 2 ###########################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

result = channel.queue_declare(queue='', exclusive=True)  # randomly named queue
queue_name = result.method.queue

severities = ["error"]
for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()
```

Producer:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys

# ############################## Producer ##############################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

severity = 'info'
message = 'Hello World!'
channel.basic_publish(exchange='direct_logs',
                      routing_key=severity,
                      body=message)
print(" [x] Sent %r:%r" % (severity, message))
connection.close()

'''
Run consumer 1 and consumer 2 at the same time, then change the producer's keyword and run the producer.

With severity = 'info' in the producer, consumer 1 receives the message and consumer 2 does not.

With severity = 'error' in the producer, both consumer 1 and consumer 2 receive the message.
'''
```

Wildcard matching

exchange type = topic

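With the topic type, a queue can be bound with several wildcard keywords. The sender then publishes data to the exchange, and the exchange matches the incoming routing key against the binding keys; when they match, the data is delivered to that queue.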

- # matches zero or more words (words are separated by dots)

- * matches exactly one word

| Routing key sent | Binding key of the queue | Result |
| --- | --- | --- |
| old.boy.python | old.* | no match |
| old.boy.python | old.# | match |
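The `*` and `#` wildcards can be checked with a small matcher. This is a sketch of the topic-exchange matching semantics written for illustration (the function name is hypothetical and it is not RabbitMQ's own implementation): in RabbitMQ the wildcards operate on dot-separated words, so both keys are split on dots and compared word by word.

```python
def topic_matches(binding_key, routing_key):
    """Return True if routing_key matches binding_key under topic rules.

    '*' matches exactly one word and '#' matches zero or more words.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    def match(p, w):
        if p == len(pattern):
            return w == len(words)  # both keys must be used up
        if pattern[p] == "#":
            # '#' either matches no more words, or swallows one word and tries again
            return match(p + 1, w) or (w < len(words) and match(p, w + 1))
        if w == len(words):
            return False
        if pattern[p] == "*" or pattern[p] == words[w]:
            return match(p + 1, w + 1)
        return False

    return match(0, 0)


print(topic_matches("old.*", "old.boy.python"))       # False
print(topic_matches("old.#", "old.boy.python"))       # True
print(topic_matches("*.orange.*", "new.orange.old"))  # True
print(topic_matches("*.orange.*", "neworangeold"))    # False
```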

Consumer:

```python
#!/usr/bin/env python
import pika
import sys

# ############################## Consumer ##############################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='topic_logs',
                         exchange_type='topic')

result = channel.queue_declare(queue='', exclusive=True)
queue_name = result.method.queue

# Must be a list: iterating over a plain string would bind each character
# ('*', '.', 'o', ...) as a separate binding key
binding_keys = ["*.orange.*"]

for binding_key in binding_keys:
    channel.queue_bind(exchange='topic_logs',
                       queue=queue_name,
                       routing_key=binding_key)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()
```

Producer:

```python
#!/usr/bin/env python
import pika
import sys

# ############################## Producer ##############################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='topic_logs',
                         exchange_type='topic')

# routing_key = 'abc.new.qiaomei.old'
routing_key = 'neworangeold'
message = 'Hello World!'
channel.basic_publish(exchange='topic_logs',
                      routing_key=routing_key,
                      body=message)
print(" [x] Sent %r:%r" % (routing_key, message))
connection.close()

'''
#.orange.# matches: new.orange.old; does not match: neworangeold
*.orange.* matches: new.orange.old; does not match: neworangeold
(neworangeold contains no dots, so it is a single word and can never equal "orange")
'''
```

A SaltStack-style implementation

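SaltStack uses ZeroMQ, which keeps messages in memory and is therefore faster; SaltStack builds its RPC on top of it.

OpenStack makes heavy use of RabbitMQ.

SaltStack has a master; with three queues, each of three clients takes the tasks from one queue.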

How SaltStack works:

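1) Send a command, for example ifconfig, that every machine in the nginx host group should run.

2) On the master we send the command to an exchange. There are 10 nginx servers in total, so 10 queues bound with the nginx keyword are created.

3) The master generates a queue whose name is an MD5 hash, and the exchange pushes the command together with this MD5 into the queues bound with the nginx keyword.

4) Each of the 10 nginx servers takes the message from its queue, runs the command, and sends its hostname and the result back into the MD5-named queue.

5) The master then becomes a consumer, takes the hostnames and results from that queue, and prints them to the terminal.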
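One detail worth noting in these steps: the master below derives the reply queue name from the MD5 of the group name alone, so every run reuses the same queue and could pick up leftover replies from an earlier command. A possible variation, sketched here with hypothetical helper names, mixes a random UUID into the hash so that each command gets its own reply queue, while keeping the `cmd,<queue>` message format the servers parse. RabbitMQ's own RPC tutorial addresses the same problem with an exclusive callback queue and a `correlation_id` property.

```python
import hashlib
import uuid


def make_command(cmd, group):
    """Build a 'cmd,<reply_queue>' body with a per-command reply queue name."""
    seed = "%s-%s" % (group, uuid.uuid4().hex)  # random part makes each name unique
    reply_queue = hashlib.md5(seed.encode("utf-8")).hexdigest()
    return "%s,%s" % (cmd, reply_queue), reply_queue


def parse_command(body):
    """Split a received body (bytes) into the command and the reply queue name."""
    cmd, reply_queue = body.decode().split(",", 1)
    return cmd, reply_queue


message, reply_queue = make_command("ifconfig", "nginx")
print(parse_command(message.encode()) == ("ifconfig", reply_queue))  # True
```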

Server 1:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys

# ########################### Consumer 1 ###########################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

result = channel.queue_declare(queue='', exclusive=True)  # randomly named queue
queue_name = result.method.queue

severities = ["nginx"]
if not severities:
    sys.stderr.write("Usage: %s [info] [warning] [error]\n" % sys.argv[0])
    sys.exit(1)

for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))
    # The message has the form "cmd,<reply queue name>"
    queue_md5 = body.decode().split(",")[1]
    hostname = 'nginx1'
    channel.queue_declare(queue=queue_md5)  # create the reply queue if it does not exist yet
    # The command is not actually executed here; a fixed result string is sent back
    channel.basic_publish(exchange='',
                          routing_key=queue_md5,  # with the default exchange, the routing key is the queue name
                          body='%s|cmd_result1' % hostname)  # content of the reply


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()
```

Server 2:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys

# ########################### Consumer 2 ###########################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

result = channel.queue_declare(queue='', exclusive=True)  # randomly named queue
queue_name = result.method.queue

severities = ["nginx"]
for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')


def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))
    # The message has the form "cmd,<reply queue name>"
    queue_md5 = body.decode().split(",")[1]
    hostname = 'nginx2'
    channel.queue_declare(queue=queue_md5)  # create the reply queue if it does not exist yet
    channel.basic_publish(exchange='',
                          routing_key=queue_md5,  # with the default exchange, the routing key is the queue name
                          body='%s|cmd_result2' % hostname)  # content of the reply


channel.basic_consume(queue=queue_name,
                      on_message_callback=callback,
                      auto_ack=True)
channel.start_consuming()
```

master:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
__author__ = 'WangQiaomei'

import pika
import sys
import hashlib

# ############################## Producer ##############################
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

severity = 'nginx'
# The MD5 of the group name is used as the name of the reply queue
m2 = hashlib.md5()
m2.update(severity.encode('utf-8'))
md5_security = m2.hexdigest()
print('md5_security:', md5_security)

message = 'cmd,%s' % md5_security
channel.basic_publish(exchange='direct_logs',
                      routing_key=severity,
                      body=message)
print(" [x] Sent %r:%r" % (severity, message))
connection.close()

#################################
# The master now becomes a consumer of the reply queue
connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.137.208'))
channel = connection.channel()

channel.queue_declare(queue=md5_security)  # create the queue if it does not exist yet


def callback(ch, method, properties, body):
    print(" [x] Received %r" % body)


channel.basic_consume(queue=md5_security,  # queue name
                      on_message_callback=callback,
                      auto_ack=True)  # no acknowledgments: messages are lost if the connection drops

print(' [*] Waiting for message. To exit press CTRL+C')
channel.start_consuming()
```

Output:

```
Server 1 and server 2 both print:
 [*] Waiting for logs. To exit press CTRL+C
 [x] 'nginx':b'cmd,ee434023cf89d7dfb21f63d64f0f9d74'

master prints:
md5_security: ee434023cf89d7dfb21f63d64f0f9d74
 [x] Sent 'nginx':'cmd,ee434023cf89d7dfb21f63d64f0f9d74'
 [*] Waiting for message. To exit press CTRL+C
 [x] Received b'nginx2|cmd_result2'
 [x] Received b'nginx1|cmd_result1'
```
