Spring Boot integration with Kafka

pom

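    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
        <!-- The version can be omitted when the Spring Boot parent/BOM manages it; otherwise set it explicitly, e.g. <version>2.2.7.RELEASE</version> -->
    </dependency>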

yml

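    spring:
      kafka:
        bootstrap-servers: 127.0.0.1:9092
        producer:
          # Number of times a message is resent after a send error.
          retries: 0
          # When several messages go to the same partition, the producer puts them into one batch.
          # This sets how much memory (in bytes) a single batch may use.
          batch-size: 16384
          # Size of the producer's memory buffer (in bytes).
          buffer-memory: 33554432
          # Key serializer
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          # Value serializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
          # acks=0  : the producer does not wait for any response from the broker before treating the write as done.
          # acks=1  : the producer gets a success response as soon as the partition leader has received the message.
          # acks=all: the producer gets a success response only after all in-sync replicas have received the message.
          acks: all
        consumer:
          # Default consumer group id.
          # Without a shared group, 10 consumers subscribed to one topic each receive all of its data,
          # so every message in the topic is consumed 10 times.
          # With a shared group id, the topic's messages are spread across the 10 consumers and each message is consumed once.
          group-id: group_id
          # Auto-commit interval. In Spring Boot 2.x this is a Duration and must use a format such as 1S, 1M, 2H, 5D.
          # (Only used when enable-auto-commit is true.)
          auto-commit-interval: 1S
          # What to do when a partition has no committed offset or the offset is invalid:
          # latest (default): start from the newest records, i.e. those produced after the consumer started.
          # earliest: start reading the partition from the beginning.
          auto-offset-reset: earliest
          # Whether offsets are committed automatically (default: true). To avoid duplicates and data loss,
          # set it to false and commit offsets manually.
          enable-auto-commit: false
          # Key deserializer
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          # Value deserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
        listener:
          # Number of threads (consumers) run by each listener container.
          concurrency: 5
          # The listener is responsible for acks: each call to acknowledge() commits the offset immediately.
          ack-mode: manual_immediate
          # Do not fail startup if a listened-to topic does not exist yet.
          missing-topics-fatal: false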
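Spring Boot translates most of these `spring.kafka.*` entries into native Kafka client properties. The sketch below uses only `java.util.Properties` and a hypothetical class `KafkaPropsFromYml`. It lists the native keys that the producer and consumer sections above roughly correspond to. For example, `batch-size` becomes `batch.size`, and the `1S` auto-commit interval becomes `auto.commit.interval.ms=1000`. The `listener.*` entries are Spring container settings with no native Kafka equivalent, so they are left out.

```java
import java.util.Properties;

// Hypothetical helper: the native Kafka client keys that the spring.kafka.* entries
// in the yml above map to. spring.kafka.listener.* is Spring-only and not listed.
class KafkaPropsFromYml {

    static Properties producerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", "127.0.0.1:9092"); // spring.kafka.bootstrap-servers
        p.setProperty("retries", "0");                         // producer.retries
        p.setProperty("batch.size", "16384");                  // producer.batch-size (bytes, 16 KiB)
        p.setProperty("buffer.memory", "33554432");            // producer.buffer-memory (bytes, 32 MiB)
        p.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("acks", "all");                          // producer.acks
        return p;
    }

    static Properties consumerProps() {
        Properties c = new Properties();
        c.setProperty("bootstrap.servers", "127.0.0.1:9092");
        c.setProperty("group.id", "group_id");                 // consumer.group-id
        c.setProperty("auto.commit.interval.ms", "1000");      // consumer.auto-commit-interval: 1S, in milliseconds
        c.setProperty("auto.offset.reset", "earliest");        // consumer.auto-offset-reset
        c.setProperty("enable.auto.commit", "false");          // consumer.enable-auto-commit
        c.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        c.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        return c;
    }

    public static void main(String[] args) {
        producerProps().list(System.out);
        consumerProps().list(System.out);
    }
}
```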

Basics: one-to-one, one-to-many, and a multi-consumer cluster

    package com.hcp.tools.kafaka;

    import org.apache.kafka.clients.admin.NewTopic;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.context.annotation.Bean;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ComsumerP {

        private static final String TOPIC = "tp";

        @Bean
        public NewTopic batchTopic() {
            // topic name, number of partitions, replication factor
            return new NewTopic(ComsumerP.TOPIC, 1, (short) 1);
        }

        @Autowired
        KafkaTemplate<String, Object> kafkaTemplate;

        String data = "{'userId':'1','name':'llf'}";

        @GetMapping("pd")
        public String sendMsg() {
            kafkaTemplate.send(ComsumerP.TOPIC, data);
            return "success";
        }

        // groupId here overrides spring.kafka.consumer.group-id from the yml ("group_id").
        // The yml sets ack-mode: manual_immediate, so each listener must call acknowledge() to commit its offset.
        @KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id")
        public void consumer(ConsumerRecord<?, ?> consumer, Acknowledgment ack) {
            System.out.println("Basic point-to-point, partition:" + consumer.partition() + ",offset:" + consumer.offset() + ",value:" + consumer.value());
            ack.acknowledge();
        }

        // A different group: receives its own copy of every message (one-to-many).
        @KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id2")
        public void consumer2(ConsumerRecord<?, ?> consumer, Acknowledgment ack) {
            System.out.println("One-to-many, partition:" + consumer.partition() + ",offset:" + consumer.offset() + ",value:" + consumer.value());
            ack.acknowledge();
        }

        // Same group as consumer(): the two share the topic's partitions (consumer cluster).
        @KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id")
        public void consumer3(ConsumerRecord<?, ?> consumer, Acknowledgment ack) {
            System.out.println("Multi-consumer cluster, partition:" + consumer.partition() + ",offset:" + consumer.offset() + ",value:" + consumer.value());
            ack.acknowledge();
        }
    }

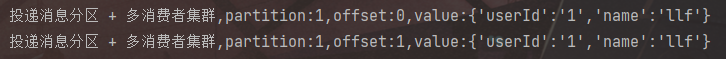
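In this first example the topic has a single partition. Within one consumer group a partition is owned by exactly one consumer. So only one of the two `group-id` listeners (`consumer` or `consumer3`) receives the messages, while `consumer2`, the only listener in `group-id2`, also receives every message. With `concurrency: 5` each listener actually starts five consumers, so `group-id` has ten members competing for one partition. Which member wins depends on the broker-side assignment. The simulation below uses a hypothetical class that only loosely models Kafka's range assignment strategy for one topic. It is not Kafka code, but it shows why the surplus consumers stay idle.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified simulation (not Kafka code) of how ONE consumer group splits the
// partitions of a single topic, modelled on the range strategy: every partition
// goes to exactly one member; members beyond the partition count get nothing.
class GroupAssignmentSketch {

    // consumers: group members, in the order the coordinator would sort them
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int perConsumer = numPartitions / consumers.size();
        int extra = numPartitions % consumers.size(); // the first `extra` members get one more
        int next = 0;
        for (int i = 0; i < consumers.size(); i++) {
            int count = perConsumer + (i < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                owned.add(next++);
            }
            result.put(consumers.get(i), owned);
        }
        return result;
    }

    public static void main(String[] args) {
        // Topic "tp" with 1 partition; two listeners share groupId "group-id"
        System.out.println(assign(List.of("consumer", "consumer3"), 1));
        // Another group ("group-id2") is assigned every partition again, independently
        System.out.println(assign(List.of("consumer2"), 1));
    }
}
```

Each group is assigned partitions independently. That is why separate groups each see every message, while members of the same group split them.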

Delivering messages to partitions + a multi-consumer cluster

package com.hcp.tools.kafaka;

import org.apache.kafka.clients.admin.NewTopic;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.context.annotation.Bean;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.stereotype.Component;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class ComsumerP {

private static final String TOPIC = "tp";

@Bean

public NewTopic batchTopic() {

// 主题 分区数 备份数

return new NewTopic(ComsumerP.TOPIC, 2, (short) 1);

}

@Autowired

KafkaTemplate<String,Object> kafkaTemplate;

String data = "{'userId':'1','name':'llf'}";

@GetMapping("pd")

public String sendMsg(){

kafkaTemplate.send(ComsumerP.TOPIC,data);

return "success";

}

@KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id") //这个groupId是在yml中配置的

public void consumer(ConsumerRecord<?, ?> consumer) {

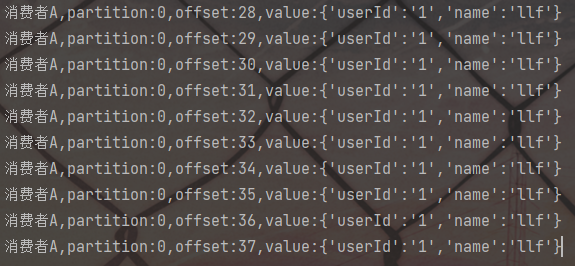

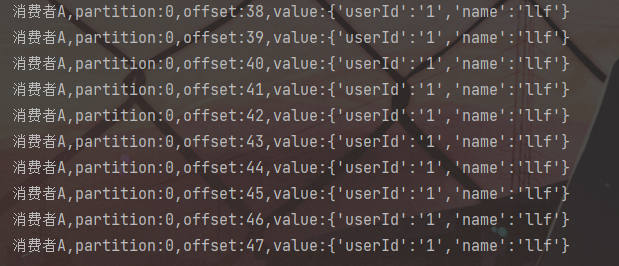

System.out.println("消费者A,partition:" + consumer.partition()+",offset:"+consumer.offset()+",value:"+consumer.value());

}

@KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id") //这个groupId是在yml中配置的

public void consumer2(ConsumerRecord<?, ?> consumer) {

System.out.println("投递消息分区 + 多消费者集群,partition:" + consumer.partition()+",offset:"+consumer.offset()+",value:"+consumer.value());

}

}
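The messages in this section are sent without a key, so the producer picks the partition itself. Kafka producers before 2.4 spread keyless records round-robin across the available partitions. From 2.4 on, the default "sticky" partitioner fills one partition per batch before switching, so the exact spread can differ. The sketch below uses a hypothetical class and models only the simpler round-robin idea, starting from 0, whereas the real client starts from a random value.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Simplified sketch of pre-Kafka-2.4 round-robin partition choice for records
// without a key. Not the real client code; the sticky partitioner (2.4+) differs.
class KeylessRoundRobinSketch {
    private final AtomicInteger counter = new AtomicInteger(0);
    private final int numPartitions;

    KeylessRoundRobinSketch(int numPartitions) {
        this.numPartitions = numPartitions;
    }

    int nextPartition() {
        // floorMod keeps the result non-negative even after the counter overflows
        return Math.floorMod(counter.getAndIncrement(), numPartitions);
    }

    public static void main(String[] args) {
        KeylessRoundRobinSketch partitioner = new KeylessRoundRobinSketch(2);
        for (int i = 0; i < 4; i++) {
            System.out.println("message " + i + " -> partition " + partitioner.nextPartition());
        }
    }
}
```

With two partitions and two consumers in `group-id`, messages alternate between the partitions. Each consumer therefore sees roughly half of them.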

Message ordering consistency in a multi-consumer cluster

    package com.hcp.tools.kafaka;

    import org.apache.kafka.clients.admin.NewTopic;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.context.annotation.Bean;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ComsumerP {

        private static final String TOPIC = "tp";

        @Bean
        public NewTopic batchTopic() {
            // topic name, number of partitions, replication factor
            return new NewTopic(ComsumerP.TOPIC, 2, (short) 1);
        }

        @Autowired
        KafkaTemplate<String, Object> kafkaTemplate;

        String data = "{'userId':'1','name':'llf'}";

        @GetMapping("pd")
        public String sendMsg() {
            // All 10 messages share the key "ComsumerP", so they go to the same partition and keep their order
            for (int i = 0; i < 10; i++) {
                kafkaTemplate.send(ComsumerP.TOPIC, "ComsumerP", data);
            }
            return "success";
        }

        // Both listeners are in "group-id" (overriding the yml's group-id); only the owner of that partition sees the messages
        @KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id")
        public void consumer(ConsumerRecord<?, ?> consumer, Acknowledgment ack) {
            System.out.println("Consumer A, partition:" + consumer.partition() + ",offset:" + consumer.offset() + ",value:" + consumer.value());
            ack.acknowledge(); // required because the yml sets ack-mode: manual_immediate
        }

        @KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id")
        public void consumer2(ConsumerRecord<?, ?> consumer, Acknowledgment ack) {
            System.out.println("Partitioned delivery + multi-consumer cluster, partition:" + consumer.partition() + ",offset:" + consumer.offset() + ",value:" + consumer.value());
            ack.acknowledge();
        }
    }
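Every message here is sent with the same key, `"ComsumerP"`. Kafka's default partitioner hashes a non-null key, so all ten messages land in one partition. They are then read, in order, by whichever consumer in the group owns that partition. Ordering is guaranteed only within a partition, not across the whole topic. The sketch below re-implements that key-to-partition mapping in plain Java for illustration: the murmur2 hash of the key bytes, made positive, modulo the partition count. The class name `KeyPartitionSketch` is hypothetical. The code mirrors the client's default behaviour but is not the Kafka partitioner itself, and a custom partitioner would behave differently.

```java
import java.nio.charset.StandardCharsets;

// Illustrative re-implementation of Kafka's default mapping for non-null keys:
// toPositive(murmur2(keyBytes)) % numPartitions. Hypothetical class, not Kafka code.
class KeyPartitionSketch {

    // murmur2 hash, following the version in org.apache.kafka.common.utils.Utils
    static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        switch (length % 4) { // mix in the remaining 1 to 3 bytes (intentional fall-through)
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
                break;
            default:
                break;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8); // StringSerializer uses UTF-8 by default
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions; // & 0x7fffffff = Kafka's toPositive
    }

    public static void main(String[] args) {
        for (int i = 0; i < 3; i++) {
            System.out.println("key ComsumerP -> partition " + partitionFor("ComsumerP", 2));
        }
    }
}
```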

Manually committing offsets + message retries

    package com.hcp.tools.kafaka;

    import org.apache.kafka.clients.admin.NewTopic;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.context.annotation.Bean;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
    import org.springframework.kafka.config.KafkaListenerContainerFactory;
    import org.springframework.kafka.core.ConsumerFactory;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.listener.ConcurrentMessageListenerContainer;
    import org.springframework.kafka.listener.ContainerProperties;
    import org.springframework.kafka.listener.SeekToCurrentErrorHandler;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.util.backoff.FixedBackOff;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ComsumerP {

        private static final String TOPIC = "tp";

        @Bean
        public NewTopic batchTopic() {
            // topic name, number of partitions, replication factor
            return new NewTopic(ComsumerP.TOPIC, 1, (short) 1);
        }

        // Listener container factory with manual offset commits.
        // Named kafkaListenerContainerFactory, it replaces Spring Boot's default factory,
        // so the spring.kafka.listener.* settings from the yml do not apply to it.
        @Bean
        public KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory(
                ConsumerFactory<String, String> consumerFactory) {
            ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
            factory.setConsumerFactory(consumerFactory);
            factory.getContainerProperties().setPollTimeout(1500);
            // Retry a record whose processing throws 3 times, 5 seconds apart (5000 = 5 s, 3 = 3 retries),
            // then pass it to the recoverer lambda, which discards it.
            // Note: this relies on manual offset commits (configured below); with auto-commit the offset is
            // committed as soon as the message is received, regardless of whether processing fails.
            // The (recoverer, BackOff) constructor requires spring-kafka 2.3+; from 2.8 SeekToCurrentErrorHandler is
            // deprecated in favour of DefaultErrorHandler with factory.setCommonErrorHandler(...).
            SeekToCurrentErrorHandler seekToCurrentErrorHandler = new SeekToCurrentErrorHandler((consumerRecord, e) -> {
                System.out.println("Failed to consume message, discarding it: " + consumerRecord + ", cause: " + e);
            }, new FixedBackOff(5000, 3));
            factory.setErrorHandler(seekToCurrentErrorHandler);
            // Commit offsets manually
            factory.getContainerProperties().setAckMode(ContainerProperties.AckMode.MANUAL);
            return factory;
        }

        @Autowired
        KafkaTemplate<String, Object> kafkaTemplate;

        String data = "{'userId':'1','name':'llf'}";

        @GetMapping("pd")
        public String sendMsg() {
            for (int i = 0; i < 10; i++) {
                kafkaTemplate.send(ComsumerP.TOPIC, "ComsumerP", data);
            }
            return "success";
        }

        // groupId here overrides spring.kafka.consumer.group-id from the yml
        @KafkaListener(topics = ComsumerP.TOPIC, groupId = "group-id")
        public void consumer(ConsumerRecord<?, ?> consumer, Acknowledgment ack) {
            System.out.println("Consumer A, partition:" + consumer.partition() + ",offset:" + consumer.offset() + ",value:" + consumer.value());
            // Commit only after successful processing; if this method throws, the error handler above retries the record
            ack.acknowledge();
        }
    }
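The error handler above retries a failing record three times at a fixed 5-second interval, then hands it to the recoverer lambda. With `FixedBackOff(5000, 3)` that means up to four deliveries in total. The simulation below is plain Java with no Spring or broker, in a hypothetical class `RetryThenCommitSketch`; the interval is a parameter, so it can be set to 0. It mimics that sequence together with manual commits: the committed offset advances only after a record has been processed or given up on. Whether spring-kafka commits a recovered record's offset right away depends on the version and error-handler settings, so treat that part of the sketch as an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Plain-Java simulation (no Spring, no broker) of "retry with a fixed back-off,
// then recover, then commit". Hypothetical class, for illustration only.
class RetryThenCommitSketch {

    final List<String> log = new ArrayList<>();
    // Next offset to read, as a Kafka offset commit would record it.
    int committedOffset = 0;

    // maxRetries mirrors FixedBackOff's maxAttempts: retries after the first delivery.
    void consume(List<String> records, Consumer<String> listener, long intervalMs, int maxRetries) {
        for (int offset = committedOffset; offset < records.size(); offset++) {
            String value = records.get(offset);
            boolean handled = false;
            for (int attempt = 1; attempt <= maxRetries + 1 && !handled; attempt++) {
                if (attempt > 1) {
                    sleep(intervalMs); // fixed back-off before each retry
                }
                try {
                    listener.accept(value);
                    handled = true;
                    log.add("offset " + offset + " attempt " + attempt + " ok");
                } catch (RuntimeException e) {
                    log.add("offset " + offset + " attempt " + attempt + " failed: " + e.getMessage());
                }
            }
            if (!handled) {
                // plays the role of the recoverer lambda passed to the error handler
                log.add("offset " + offset + " recovered (discarded)");
            }
            // plays the role of ack.acknowledge(): commit only once the record is dealt with
            committedOffset = offset + 1;
        }
    }

    private static void sleep(long ms) {
        try {
            Thread.sleep(ms);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        RetryThenCommitSketch sketch = new RetryThenCommitSketch();
        sketch.consume(List.of("ok-1", "poison", "ok-2"), value -> {
            if (value.equals("poison")) {
                throw new IllegalStateException("cannot process " + value);
            }
        }, 0, 3);
        sketch.log.forEach(System.out::println);
        System.out.println("committed offset: " + sketch.committedOffset);
    }
}
```

If the process stopped before a commit, consumption would resume from `committedOffset`, so a record may be processed again but is not silently lost. This is the trade-off behind disabling auto-commit in the yml.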

浙公网安备 33010602011771号 (Zhejiang public security network filing No. 33010602011771)
