sqoop2-1.99.5-cdh5.5.4.tar.gz的部署搭建

不多说,直接上干货!

首先,说下博主我,为什么,好端端的Sqoop1用的好好的,然后又安装和学习Sqoop2?

因为,在Cloudera Hue里的Sqoop,是需要Sqoop2。

HUE配置文件hue.ini 的sqoop模块详解(图文详解)(分HA集群)

Sqoop2安装简介

sqoop2的安装分为server端和client端。

server端:负责与hadoop集群通信进行数据的迁移,client端负责与用户和server交互。

client端:不用安装, 只需要将其安装包解压到集群中任何的机器上去,然后对其进行解压即可, 无需其他额外的配置。

Sqoop分client和server,server安装在Hadoop或Spark集群中的某个节点上,这个节点充当要连接sqoop的入口节点,

client端不需要安装hadoop。

本博文是个入门,即只在bigdatamaster上安装server端。当然你也可以假设认为server和client都在bigdatamaster上哈。

对于Sqoop和hive这样的组件,我一般都是安装在master节点,即在本博客里是bigdatamaster。

http://archive.cloudera.com/cdh5/cdh/5/sqoop2-1.99.5-cdh5.5.4.tar.gz

[hadoop@bigdatamaster app]$ cd sqoop [hadoop@bigdatamaster sqoop]$ pwd /home/hadoop/app/sqoop [hadoop@bigdatamaster sqoop]$ ll total 188 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 bin -rw-r--r-- 1 hadoop hadoop 51764 Apr 26 2016 CHANGELOG.txt drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 client drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 cloudera drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 common drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 common-test drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 connector drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 core drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 dev-support drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 dist drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 docs drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 execution -rw-r--r-- 1 hadoop hadoop 17253 Apr 26 2016 LICENSE.txt -rw-r--r-- 1 hadoop hadoop 166 Apr 26 2016 NOTICE.txt -rw-r--r-- 1 hadoop hadoop 30245 Apr 26 2016 pom.xml -rw-r--r-- 1 hadoop hadoop 1610 Apr 26 2016 README.txt drwxr-xr-x 5 hadoop hadoop 4096 Apr 26 2016 repository drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 security drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 server drwxr-xr-x 4 hadoop hadoop 4096 Apr 26 2016 shell drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 submission drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 test drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 tomcat drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 tools [hadoop@bigdatamaster sqoop]$

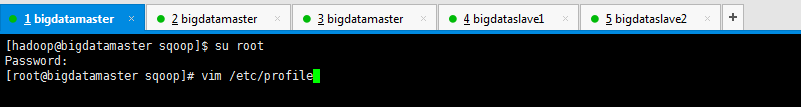

配置环境变量

[hadoop@bigdatamaster sqoop]$ su root

Password:

[root@bigdatamaster sqoop]# vim /etc/profile

#sqoop1 #export SQOOP_HOME=/home/hadoop/app/sqoop #export PATH=$PATH:$SQOOP_HOME/bin #sqoop2 export SQOOP_HOME=/home/hadoop/app/sqoop export PATH=$PATH:$SQOOP_HOME/bin export CATALINA_BASE=/home/hadoop/app/sqoop/server export LOGDIR=$SQOOP_HOME/logs/

对于Sqoop2的server端安装配置(说明)

- 解压软件包到一个目录下。(我一般是放在/home/hadoop/app下)

- 修改环境变

export SQOOP_HOME=/home/hadoop/app/sqoop (因为我是用的软链接)

export PATH=$PATH:$SQOOP_HOME/bin

export CATALINA_BASE=$SQOOP_HOME/server

export LOGDIR=$SQOOP_HOME/logs/ - 生效环境变量的配置

- source 配置文件名

-

修改sqoop配置:

vi server/conf/sqoop.properties

将org.apache.sqoop.submission.engine.mapreduce.configuration.directory后面hadoop的位置修改为自己安装的hadoop配置文件位置,我的为:/home/hadoop/app/hadoop/ -

修改sqoop读取hadoop的jar包的路径 vi /sqoop/server/conf/catalina.properties

将common.loader行后的/usr/lib/hadoop/lib/.jar改成自己的hadoop jar 包目录,我的为:

/home/hadoop/app/hadoop/share/hadoop/common/.jar,

/home/hadoop/app/hadoop/share/hadoop/common/lib/.jar,

/home/hadoop/app/hadoop/share/hadoop/hdfs/.jar,

/home/hadoop/app/hadoop/share/hadoop/hdfs/lib/.jar,

/home/hadoop/app/hadoop/share/hadoop/mapreduce/.jar,

/home/hadoop/app/hadoop/share/hadoop/mapreduce/lib/.jar,

/home/hadoop/app/hadoop/share/hadoop/tools/.jar,

/home/hadoop/app/hadoop/share/hadoop/tools/lib/.jar,

/home/hadoop/app/hadoop/share/hadoop/yarn/.jar,

/home/hadoop/app/hadoopshare/hadoop/yarn/lib/*.jar

注意: 在修改common.loader的过程中, 不能换行

本步骤的另外的一种方法是: 直接将上诉的包 拷贝到$SQOOP_HOME/server/lib文件夹内部- 将mysql的连接jar包拷贝的$SQOOP_HOME/lib文件夹中(lib文件夹需要自己创建)到此sqoop就基本配置完成可以直接运行.

下面是,对sqoop2的配置文件进行配置

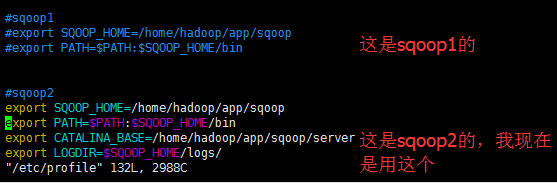

1、修改$SQOOP_HOME/server/conf/catalina.properties文件中的common.loader属性,在其后增加(写到一行):

$HADOOP_HOME/share/hadoop/common/*.jar, $HADOOP_HOME/share/hadoop/common/lib/*.jar, $HADOOP_HOME/share/hadoop/yarn/*.jar, $HADOOP_HOME/share/hadoop/hdfs/*.jar, $HADOOP_HOME,/share/hadoop/mapreduce/*.jar

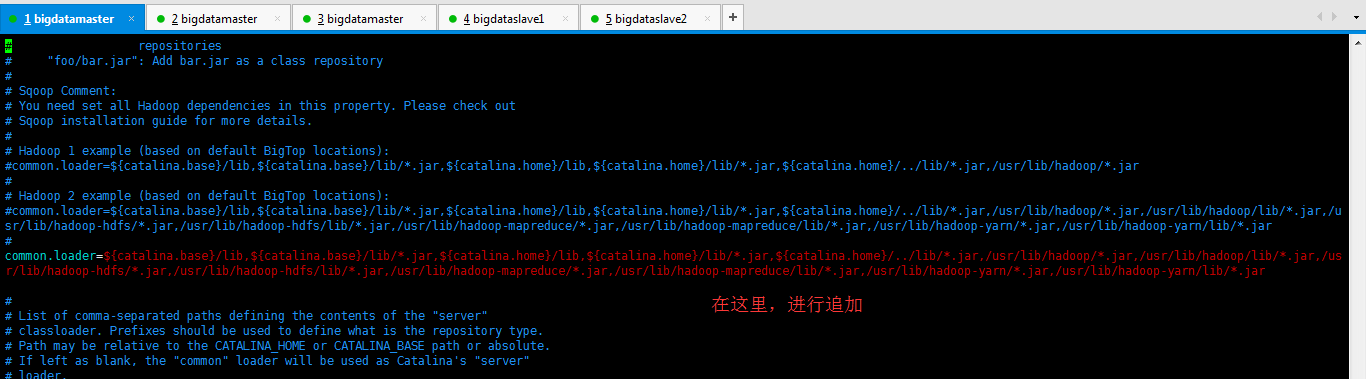

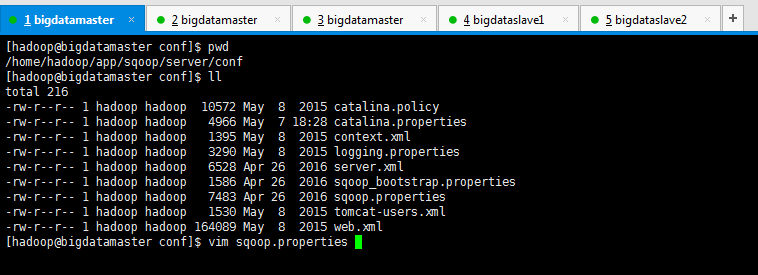

[hadoop@bigdatamaster conf]$ pwd /home/hadoop/app/sqoop/server/conf [hadoop@bigdatamaster conf]$ ll total 216 -rw-r--r-- 1 hadoop hadoop 10572 May 8 2015 catalina.policy -rw-r--r-- 1 hadoop hadoop 4767 Apr 26 2016 catalina.properties -rw-r--r-- 1 hadoop hadoop 1395 May 8 2015 context.xml -rw-r--r-- 1 hadoop hadoop 3290 May 8 2015 logging.properties -rw-r--r-- 1 hadoop hadoop 6528 Apr 26 2016 server.xml -rw-r--r-- 1 hadoop hadoop 1586 Apr 26 2016 sqoop_bootstrap.properties -rw-r--r-- 1 hadoop hadoop 7483 Apr 26 2016 sqoop.properties -rw-r--r-- 1 hadoop hadoop 1530 May 8 2015 tomcat-users.xml -rw-r--r-- 1 hadoop hadoop 164089 May 8 2015 web.xml [hadoop@bigdatamaster conf]$ vim catalina.properties

$HADOOP_HOME/share/hadoop/common/*.jar,$HADOOP_HOME/share/hadoop/common/lib/*.jar,$HADOOP_HOME/share/hadoop/yarn/*.jar,$HADOOP_HOME/share/hadoop/hdfs/*.jar,$HADOOP_HOME,/share/hadoop/mapreduce/*.jar

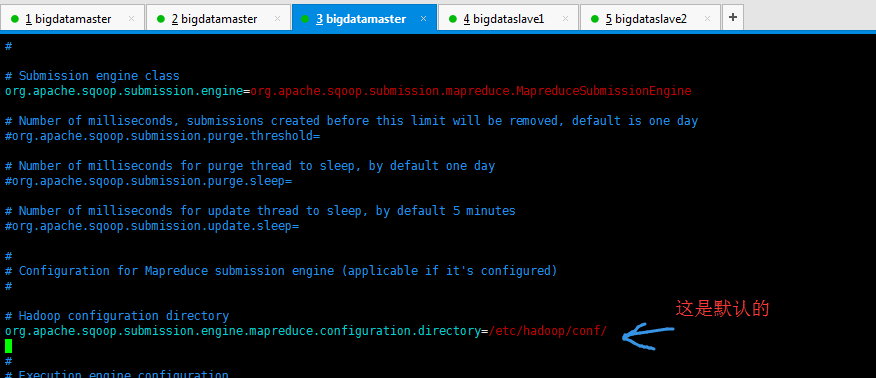

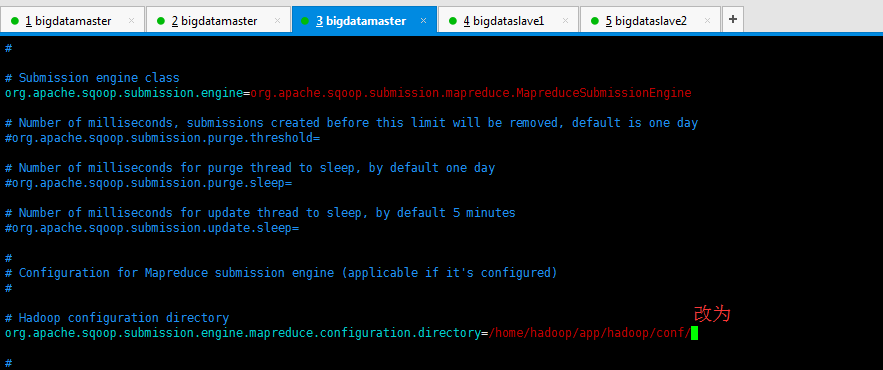

2、修改$SQOOP_HOME/server/conf/sqoop.properties文件org.apache.sqoop.submission.engine.mapreduce.configuration.directory属性,指向本机hadoop配置目录。

[hadoop@bigdatamaster conf]$ pwd /home/hadoop/app/sqoop/server/conf [hadoop@bigdatamaster conf]$ ll total 216 -rw-r--r-- 1 hadoop hadoop 10572 May 8 2015 catalina.policy -rw-r--r-- 1 hadoop hadoop 4966 May 7 18:28 catalina.properties -rw-r--r-- 1 hadoop hadoop 1395 May 8 2015 context.xml -rw-r--r-- 1 hadoop hadoop 3290 May 8 2015 logging.properties -rw-r--r-- 1 hadoop hadoop 6528 Apr 26 2016 server.xml -rw-r--r-- 1 hadoop hadoop 1586 Apr 26 2016 sqoop_bootstrap.properties -rw-r--r-- 1 hadoop hadoop 7483 Apr 26 2016 sqoop.properties -rw-r--r-- 1 hadoop hadoop 1530 May 8 2015 tomcat-users.xml -rw-r--r-- 1 hadoop hadoop 164089 May 8 2015 web.xml [hadoop@bigdatamaster conf]$ vim sqoop.properties

# Hadoop configuration directory

org.apache.sqoop.submission.engine.mapreduce.configuration.directory=/home/hadoop/app/hadoop/conf/

3、$SQOOP_HOME/server/conf/server.xml是Tomcat的配置文件,端口什么的可以在这个文件设置

这里,我暂时不设置。

4、复制mysql-connector-java-5.1.21.jar到$SQOOP_HOME/server/lib/下

[hadoop@bigdatamaster lib]$ pwd /home/hadoop/app/sqoop2-1.99.5-cdh5.5.4/server/lib [hadoop@bigdatamaster lib]$ ls annotations-api.jar catalina-ha.jar catalina-tribes.jar el-api.jar jasper.jar servlet-api.jar tomcat-coyote.jar tomcat-i18n-es.jar tomcat-i18n-ja.jar catalina-ant.jar catalina.jar ecj-4.3.1.jar jasper-el.jar jsp-api.jar sqoop-tomcat-1.99.5-cdh5.5.4.jar tomcat-dbcp.jar tomcat-i18n-fr.jar [hadoop@bigdatamaster lib]$ rz [hadoop@bigdatamaster lib]$ ls annotations-api.jar catalina.jar el-api.jar jsp-api.jar sqoop-tomcat-1.99.5-cdh5.5.4.jar tomcat-i18n-es.jar catalina-ant.jar catalina-tribes.jar jasper-el.jar mysql-connector-java-5.1.21.jar tomcat-coyote.jar tomcat-i18n-fr.jar catalina-ha.jar ecj-4.3.1.jar jasper.jar servlet-api.jar tomcat-dbcp.jar tomcat-i18n-ja.jar [hadoop@bigdatamaster lib]$

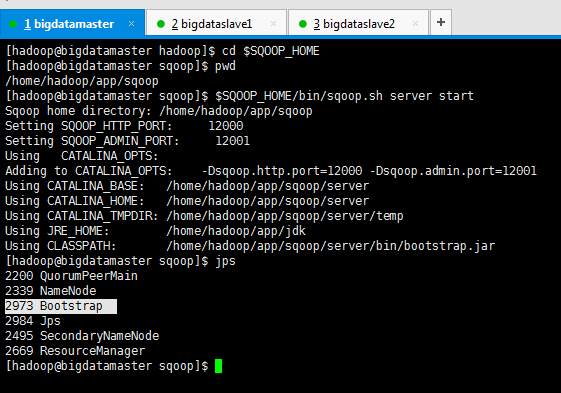

Sqoop2全部配置好之后,按照如下的顺序来

1、启动Sqoop2的server(我这里做个最简单的,在bigdatamaster上)。启动sqoop服务

$SQOOP_HOME/bin/sqoop.sh server start

[hadoop@bigdatamaster hadoop]$ cd $SQOOP_HOME [hadoop@bigdatamaster sqoop]$ pwd /home/hadoop/app/sqoop [hadoop@bigdatamaster sqoop]$ $SQOOP_HOME/bin/sqoop.sh server start Sqoop home directory: /home/hadoop/app/sqoop Setting SQOOP_HTTP_PORT: 12000 Setting SQOOP_ADMIN_PORT: 12001 Using CATALINA_OPTS: Adding to CATALINA_OPTS: -Dsqoop.http.port=12000 -Dsqoop.admin.port=12001 Using CATALINA_BASE: /home/hadoop/app/sqoop/server Using CATALINA_HOME: /home/hadoop/app/sqoop/server Using CATALINA_TMPDIR: /home/hadoop/app/sqoop/server/temp Using JRE_HOME: /home/hadoop/app/jdk Using CLASSPATH: /home/hadoop/app/sqoop/server/bin/bootstrap.jar [hadoop@bigdatamaster sqoop]$ jps 2200 QuorumPeerMain 2339 NameNode 2973 Bootstrap 2984 Jps 2495 SecondaryNameNode 2669 ResourceManager [hadoop@bigdatamaster sqoop]$

jsp命令看到Bootstrap进程。

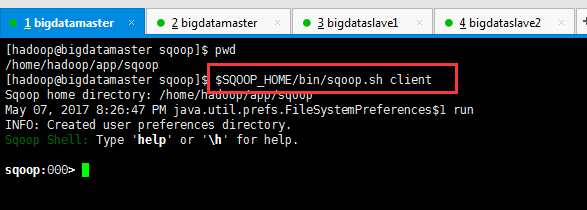

2、启动Sqoop2的client(我这里做个最简单的,在bigdatamaster上),进入客户端交互模式。进入sqoop控制台

$SQOOP_HOME/bin/sqoop.sh client

[hadoop@bigdatamaster sqoop]$ pwd /home/hadoop/app/sqoop [hadoop@bigdatamaster sqoop]$ $SQOOP_HOME/bin/sqoop.sh client Sqoop home directory: /home/hadoop/app/sqoop May 07, 2017 8:26:47 PM java.util.prefs.FileSystemPreferences$1 run INFO: Created user preferences directory. Sqoop Shell: Type 'help' or '\h' for help. sqoop:000>

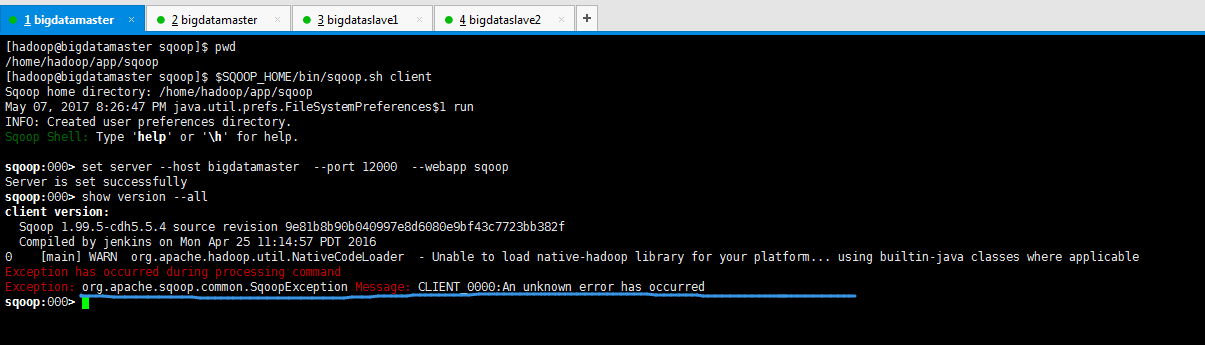

3、在Sqoop2的client连接Sqoop2的server 。 连接服务器

sqoop:000> set server --host bigdatamaster --port 12000 --webapp sqoop

sqoop:000> set server --host localhost --port 12000 --webapp sqoop

sqoop:000> show version --all 当看到show version -all正确的显示 就说明了Sqoop2的client连接上了Sqoop2的服务器。

show version --all 显示服务器、客户端的版本信息,如果server显示错误,

重启一下 server./sqoop.sh server stop

[hadoop@bigdatamaster sqoop]$ pwd /home/hadoop/app/sqoop [hadoop@bigdatamaster sqoop]$ $SQOOP_HOME/bin/sqoop.sh client Sqoop home directory: /home/hadoop/app/sqoop May 07, 2017 8:26:47 PM java.util.prefs.FileSystemPreferences$1 run INFO: Created user preferences directory. Sqoop Shell: Type 'help' or '\h' for help. sqoop:000> set server --host bigdatamaster --port 12000 --webapp sqoop Server is set successfully sqoop:000> show version --all client version: Sqoop 1.99.5-cdh5.5.4 source revision 9e81b8b90b040997e8d6080e9bf43c7723bb382f Compiled by jenkins on Mon Apr 25 11:14:57 PDT 2016 0 [main] WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Exception has occurred during processing command Exception: org.apache.sqoop.common.SqoopException Message: CLIENT_0000:An unknown error has occurred sqoop:000>

sqoop:000> show connector --all 查看连接器

sqoop:000> show connection --all 查看连接

sqoop:000> show connection --xid 1 查看id为1的连接

sqoop:000> create connection --cid 1 创建id为1的连接

<pre name="code" class="java">Creating connection for connector with id 1 Please fill following values to create new connection object Name: mysql --输入名称 Connection configuration JDBC Driver Class: com.mysql.jdbc.Driver --输入 JDBC Connection String: jdbc:mysql://bigdatamaster:3306/sqoop --输入 Username: root --输入 Password: ****** --输入 JDBC Connection Properties: There are currently 0 values in the map: entry# Security related configuration options Max connections: 20 --输入 New connection was successfully created with validation status FINE and persistent id 1

sqoop:000> create job --xid 1 --type import

Creating job for connection with id 1 Please fill following values to create new job object Name: mysql_job Database configuration Schema name: Table name: userinfo 要全量导出一张表,请填写表名,table name 和 table sql statement不能同时配置 Table SQL statement: 如果填写格式必须为 select * from userinfo where ${CONDITIONS} Table column names: Partition column name: id 使用哪个字段来填充过滤条件 userid Nulls in partition column: Boundary query: 如果选择sql方式,这里要写一个查询语句,返回值需为整形,sqoop运行job时,会自动填充${CONDITIONS} 这个占位符,如:select 0,3 from userinfo Output configuration Storage type: 0 : HDFS Choose: 0 Output format: 0 : TEXT_FILE 1 : SEQUENCE_FILE Choose: 1 Compression format: 0 : NONE 1 : DEFAULT 2 : DEFLATE 3 : GZIP 4 : BZIP2 5 : LZO 6 : LZ4 7 : SNAPPY Choose: 0 Output directory: /home/hadoop/out Throttling resources Extractors: Loaders: New job was successfully created with validation status FINE and persistent id 1

sqoop:000> start job --jid 1 启动 Submission details Job ID: 1 Server URL: http://localhost:12000/sqoop/

<span style="font-family: Consolas, 'Courier New', Courier, mono, serif; line-height: 18px;">hadoop fs -ls /mysql/out </span>

更详细,请见

http://sqoop.apache.org/docs/1.99.5/Sqoop5MinutesDemo.html

作者:大数据和人工智能躺过的坑

出处:http://www.cnblogs.com/zlslch/

本文版权归作者和博客园共有,欢迎转载,但未经作者同意必须保留此段声明,且在文章页面明显位置给出原文链接,否则保留追究法律责任的权利。

如果您认为这篇文章还不错或者有所收获,您可以通过右边的“打赏”功能 打赏我一杯咖啡【物质支持】,也可以点击右下角的【好文要顶】按钮【精神支持】,因为这两种支持都是我继续写作,分享的最大动力!