HBase Programming API Beginner Series: create (from the admin side) (8)

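If you have read my earlier post in this series,

HBase Programming API Beginner Series: put (from the client side) (1)

you will know that in that post I created the HBase table in the HBase Shell.

Here I will walk you through a more advanced approach, because in real development you should avoid logging in to the server to create tables whenever possible.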

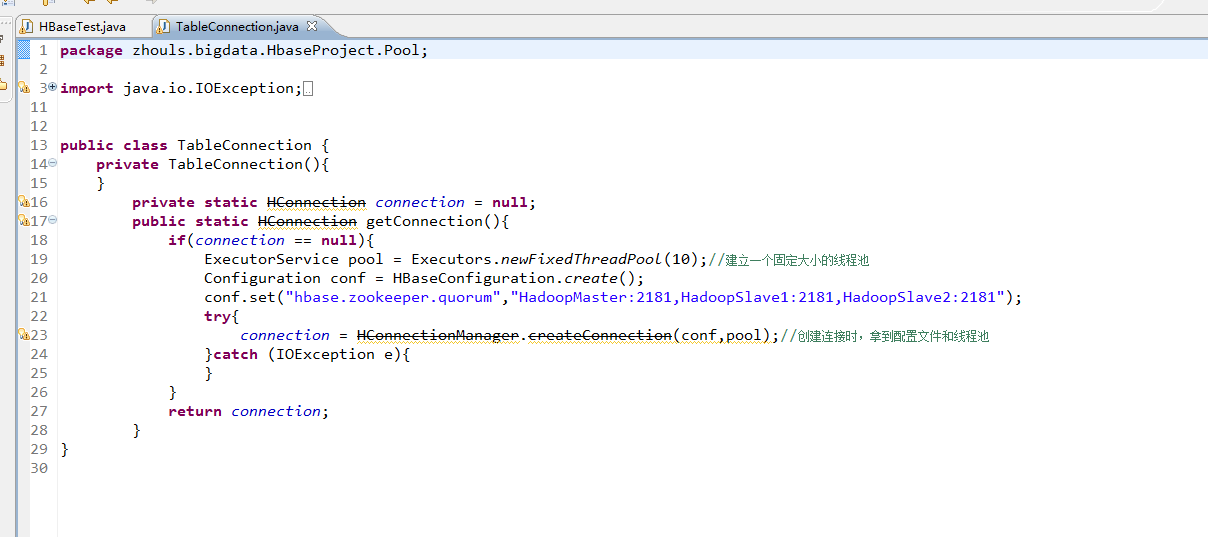

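So we create the HBase table from the admin side instead, using a thread pool (which is also the preferred approach in production development).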

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.HConnection;
    import org.apache.hadoop.hbase.client.HConnectionManager;

    public class TableConnection {
        private TableConnection() {
        }

        private static HConnection connection = null;

        // synchronized so that concurrent first calls create only one connection
        public static synchronized HConnection getConnection() {
            if (connection == null) {
                ExecutorService pool = Executors.newFixedThreadPool(10); // create a fixed-size thread pool
                Configuration conf = HBaseConfiguration.create();
                conf.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
                try {
                    // the connection is created from the configuration and the thread pool
                    connection = HConnectionManager.createConnection(conf, pool);
                } catch (IOException e) {
                    // do not swallow the error and return null; release the pool and fail loudly
                    pool.shutdown();
                    throw new IllegalStateException("Failed to create HBase connection", e);
                }
            }
            return connection;
        }
    }
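The holder class above boils down to "create one shared object lazily and hand the same instance to every caller". As a minimal sketch of that idea without any HBase dependency, the initialization-on-demand holder idiom gets the same effect without explicit locking. `SharedPool` and its methods are hypothetical names used only for illustration, and the `ExecutorService` merely stands in for the HBase connection.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical stand-in for TableConnection: one lazily created, shared resource.
final class SharedPool {
    private SharedPool() {
    }

    // The JVM initializes Holder on first access, exactly once,
    // so no explicit synchronization is needed here.
    private static final class Holder {
        static final ExecutorService POOL = Executors.newFixedThreadPool(10);
    }

    // Every caller receives the same pool instance.
    static ExecutorService get() {
        return Holder.POOL;
    }

    // Call once at application shutdown so worker threads do not keep the JVM alive.
    static void shutdown() {
        Holder.POOL.shutdown();
    }
}
```

Whether to use this idiom or a `synchronized` getter is largely a matter of taste; the point is that two threads calling the getter at the same time must not end up with two connections.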

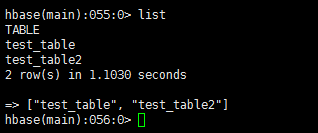

1. The simplest way to create an HBase table

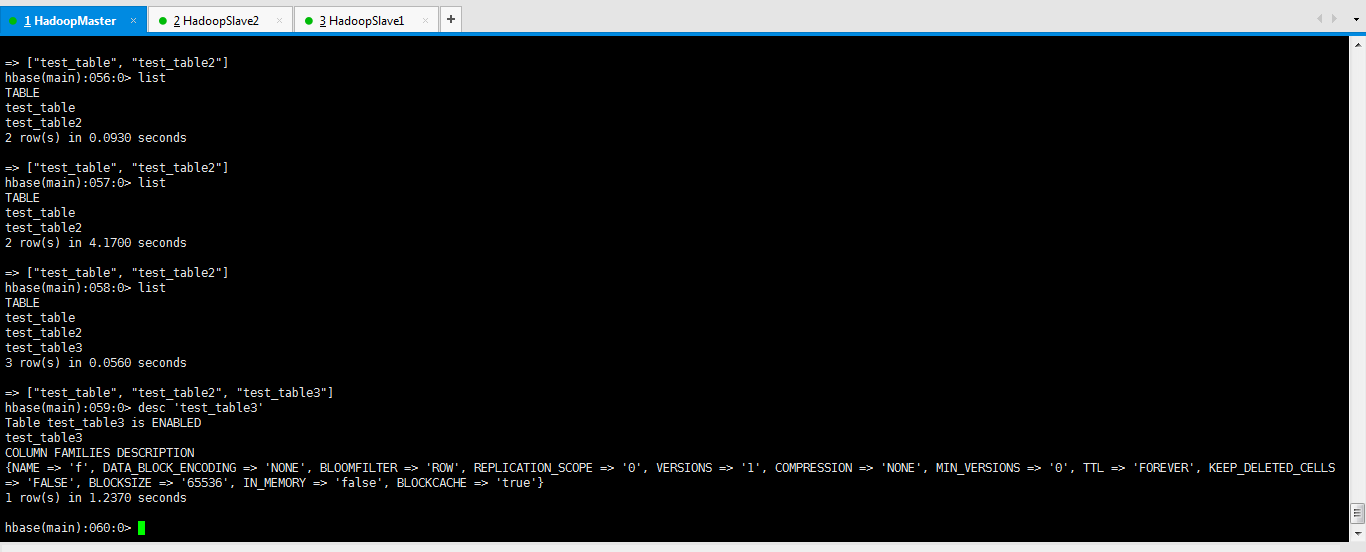

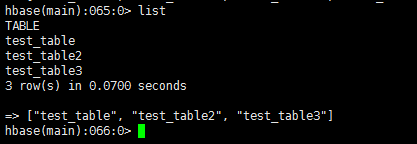

First, check which tables already exist:

    hbase(main):055:0> list
    TABLE
    test_table
    test_table2
    2 row(s) in 1.1030 seconds

    => ["test_table", "test_table2"]
    hbase(main):056:0>

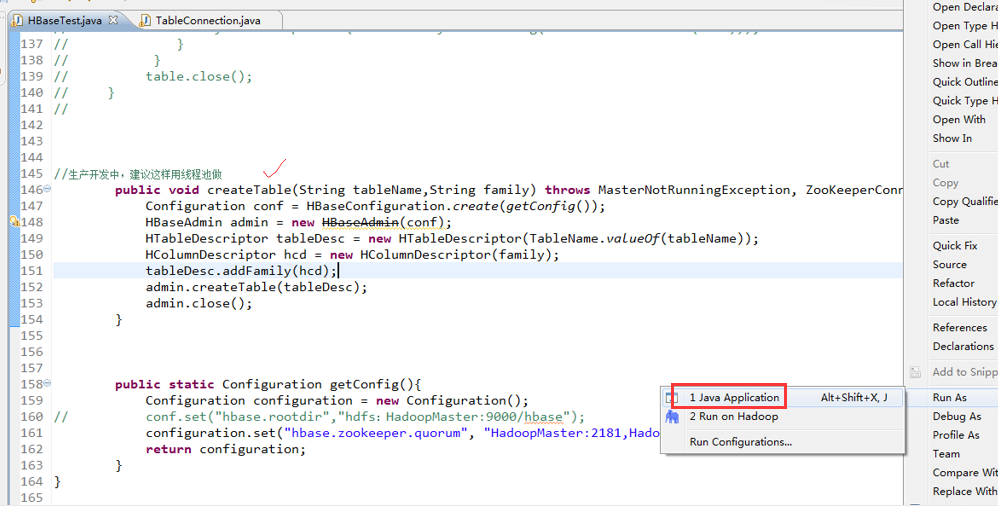

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.MasterNotRunningException;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.ZooKeeperConnectionException;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.HBaseAdmin;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.HTableInterface;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.util.Bytes;

    public class HBaseTest {
        public static void main(String[] args) throws Exception {
    //      HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name is test_table
    //      Put put = new Put(Bytes.toBytes("row_04")); // row key is row_04
    //      put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // column family f, qualifier name, value Andy1
    //      put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // column family f2, qualifier name, value Andy3
    //      table.put(put);
    //      table.close();

    //      Get get = new Get(Bytes.toBytes("row_04"));
    //      get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // with no column specified, as here, everything is returned by default
    //      org.apache.hadoop.hbase.client.Result rest = table.get(get);
    //      System.out.println(rest.toString());
    //      table.close();

    //      Delete delete = new Delete(Bytes.toBytes("row_2"));
    //      delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
    //      delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
    //      table.delete(delete);
    //      table.close();

    //      Delete delete = new Delete(Bytes.toBytes("row_04"));
    ////    delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // deleteColumn deletes only the latest timestamped version of the column
    //      delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // deleteColumns deletes all timestamped versions of the column
    //      table.delete(delete);
    //      table.close();

    //      Scan scan = new Scan();
    //      scan.setStartRow(Bytes.toBytes("row_01")); // start row key is inclusive
    //      scan.setStopRow(Bytes.toBytes("row_03")); // stop row key is exclusive
    //      scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //      ResultScanner rst = table.getScanner(scan); // iterate over the whole result set
    //      System.out.println(rst.toString());
    //      for (org.apache.hadoop.hbase.client.Result next = rst.next(); next != null; next = rst.next()) {
    //          for (Cell cell : next.rawCells()) { // loop over the cells of one row key
    //              System.out.println(next.toString());
    //              System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
    //              System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
    //              System.out.println("value:" + Bytes.toString(CellUtil.cloneValue(cell)));
    //          }
    //      }
    //      table.close();

            HBaseTest hbasetest = new HBaseTest();
    //      hbasetest.insertValue();
    //      hbasetest.getValue();
    //      hbasetest.delete();
    //      hbasetest.scanValue();
            hbasetest.createTable("test_table3", "f");
        }

        // In production, the pooled-connection approach is recommended
    //  public void insertValue() throws Exception {
    //      HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //      Put put = new Put(Bytes.toBytes("row_01")); // row key is row_01
    //      put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy0"));
    //      table.put(put);
    //      table.close();
    //  }

        // In production, the pooled-connection approach is recommended
    //  public void getValue() throws Exception {
    //      HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //      Get get = new Get(Bytes.toBytes("row_03"));
    //      get.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //      org.apache.hadoop.hbase.client.Result rest = table.get(get);
    //      System.out.println(rest.toString());
    //      table.close();
    //  }

        // In production, the pooled-connection approach is recommended
    //  public void delete() throws Exception {
    //      HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //      Delete delete = new Delete(Bytes.toBytes("row_01"));
    //      delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // deleteColumn deletes only the latest timestamped version of the column
    ////    delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // deleteColumns deletes all timestamped versions of the column
    //      table.delete(delete);
    //      table.close();
    //  }

        // In production, the pooled-connection approach is recommended
    //  public void scanValue() throws Exception {
    //      HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //      Scan scan = new Scan();
    //      scan.setStartRow(Bytes.toBytes("row_02")); // start row key is inclusive
    //      scan.setStopRow(Bytes.toBytes("row_04")); // stop row key is exclusive
    //      scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //      ResultScanner rst = table.getScanner(scan); // iterate over the whole result set
    //      System.out.println(rst.toString());
    //      for (org.apache.hadoop.hbase.client.Result next = rst.next(); next != null; next = rst.next()) {
    //          for (Cell cell : next.rawCells()) { // loop over the cells of one row key
    //              System.out.println(next.toString());
    //              System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
    //              System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
    //              System.out.println("value:" + Bytes.toString(CellUtil.cloneValue(cell)));
    //          }
    //      }
    //      table.close();
    //  }

        // In production, the pooled-connection approach is recommended
        public void createTable(String tableName, String family) throws MasterNotRunningException, ZooKeeperConnectionException, IOException {
            Configuration conf = HBaseConfiguration.create(getConfig());
            HBaseAdmin admin = new HBaseAdmin(conf);
            try {
                HTableDescriptor tableDesc = new HTableDescriptor(TableName.valueOf(tableName));
                HColumnDescriptor hcd = new HColumnDescriptor(family); // one column family, default settings
                tableDesc.addFamily(hcd);
                admin.createTable(tableDesc);
            } finally {
                admin.close(); // close the admin even if createTable fails
            }
        }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
    //      conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }
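One detail in `createTable` is worth spelling out: if `admin.createTable(...)` throws, a trailing `admin.close()` is skipped unless it sits in a `finally` block or a try-with-resources statement. The sketch below shows the try-with-resources variant. `FakeAdmin` and `CloseDemo` are hypothetical classes standing in for `HBaseAdmin` (which is `Closeable`), so the example runs without a cluster.

```java
import java.io.Closeable;
import java.io.IOException;

// Hypothetical stand-in for HBaseAdmin, used only to show the closing behavior.
final class FakeAdmin implements Closeable {
    boolean closed = false;

    void createTable(String name) throws IOException {
        if (name.isEmpty()) {
            throw new IOException("table name must not be empty");
        }
    }

    @Override
    public void close() {
        closed = true;
    }
}

final class CloseDemo {
    // Returns the admin so the caller can inspect whether it was closed.
    static FakeAdmin createAndClose(String tableName) {
        FakeAdmin admin = new FakeAdmin();
        try (FakeAdmin a = admin) {
            a.createTable(tableName);
        } catch (IOException e) {
            // The resource has already been closed by the time this handler runs.
            System.out.println("create failed: " + e.getMessage());
        }
        return admin;
    }

    public static void main(String[] args) {
        System.out.println(createAndClose("test_table3").closed);
        System.out.println(createAndClose("").closed);
    }
}
```

In both calls the admin ends up closed, whether or not table creation succeeded.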

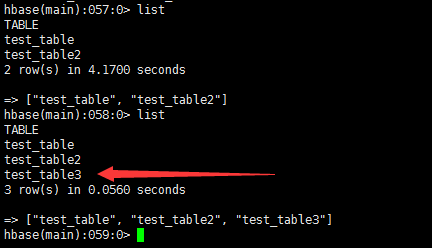

The table was created successfully.

    hbase(main):057:0> list
    TABLE
    test_table
    test_table2
    2 row(s) in 4.1700 seconds

    => ["test_table", "test_table2"]

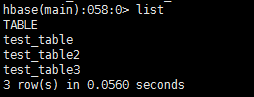

hbase(main):058:0> list

TABLE

test_table

test_table2

test_table3

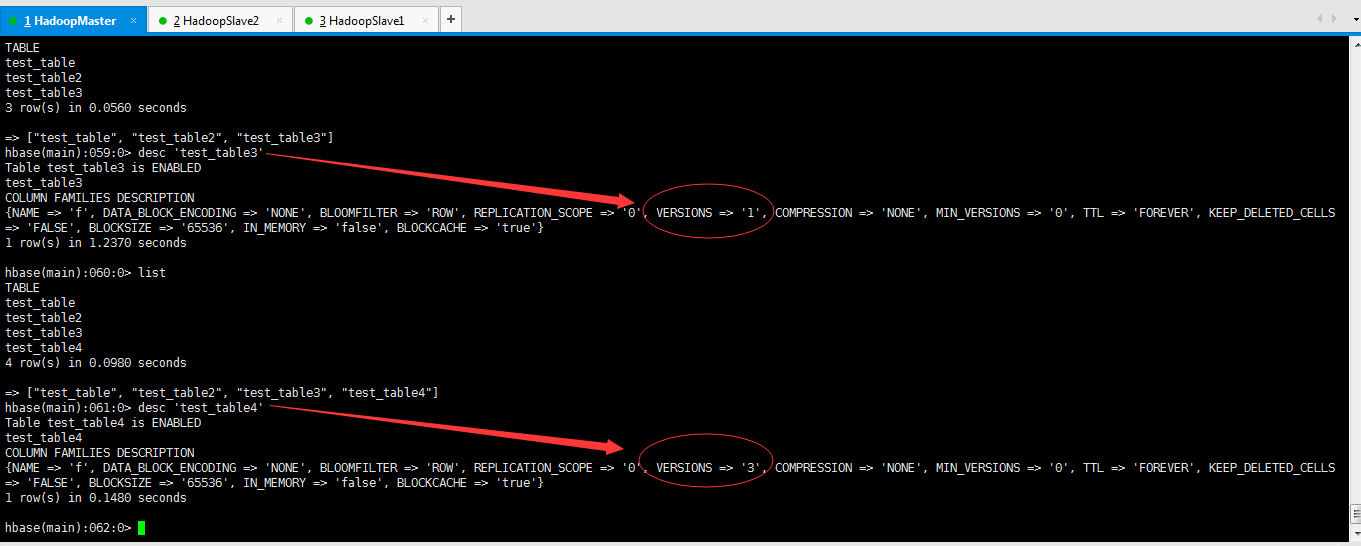

3 row(s) in 0.0560 seconds

=> ["test_table", "test_table2", "test_table3"]

hbase(main):059:0> desc 'test_table3'

Table test_table3 is ENABLED

test_table3

COLUMN FAMILIES DESCRIPTION

{NAME => 'f', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS

=> 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

1 row(s) in 1.2370 seconds

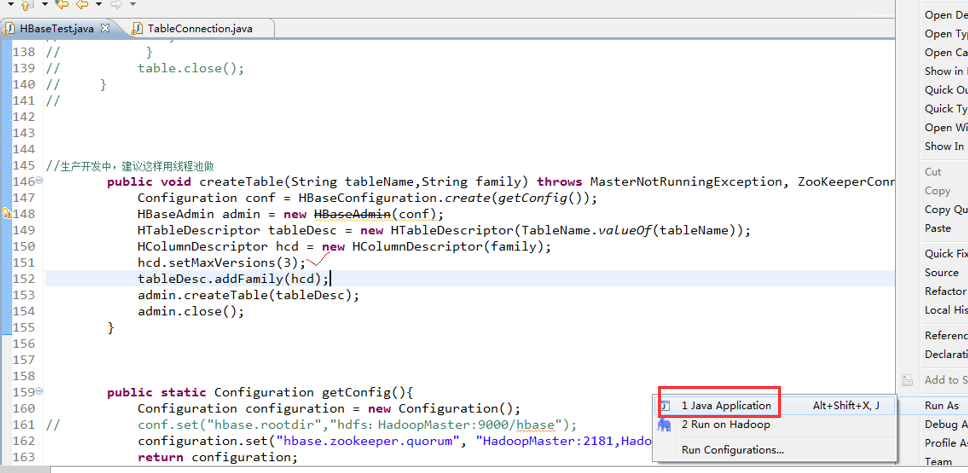

2. Creating an HBase table with a maximum number of versions

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.MasterNotRunningException;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.ZooKeeperConnectionException;
    import org.apache.hadoop.hbase.client.HBaseAdmin;

    public class HBaseTest {
        public static void main(String[] args) throws Exception {
            // The commented-out put/get/delete/scan experiments are identical to the listing in section 1 and are omitted here.

            HBaseTest hbasetest = new HBaseTest();
    //      hbasetest.insertValue();
    //      hbasetest.getValue();
    //      hbasetest.delete();
    //      hbasetest.scanValue();
            hbasetest.createTable("test_table4", "f");
        }

        // The commented-out insertValue(), getValue(), delete() and scanValue() methods are unchanged from section 1.

        // In production, the pooled-connection approach is recommended
        public void createTable(String tableName, String family) throws MasterNotRunningException, ZooKeeperConnectionException, IOException {
            Configuration conf = HBaseConfiguration.create(getConfig());
            HBaseAdmin admin = new HBaseAdmin(conf);
            try {
                HTableDescriptor tableDesc = new HTableDescriptor(TableName.valueOf(tableName));
                HColumnDescriptor hcd = new HColumnDescriptor(family);
                hcd.setMaxVersions(3); // keep at most 3 versions of each cell in this column family
                tableDesc.addFamily(hcd);
                admin.createTable(tableDesc);
            } finally {
                admin.close(); // close the admin even if createTable fails
            }
        }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
    //      conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }

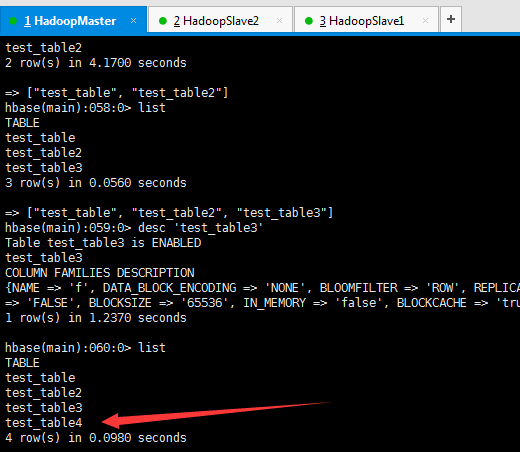

    hbase(main):060:0> list
    TABLE
    test_table
    test_table2
    test_table3
    test_table4
    4 row(s) in 0.0980 seconds

    => ["test_table", "test_table2", "test_table3", "test_table4"]
    hbase(main):061:0> desc 'test_table4'
    Table test_table4 is ENABLED
    test_table4
    COLUMN FAMILIES DESCRIPTION
    {NAME => 'f', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '3', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}
    1 row(s) in 0.1480 seconds

    hbase(main):062:0>
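The `VERSIONS => '3'` attribute in the `desc` output means the column family retains at most three timestamped versions of each cell. A toy in-memory model of that retention rule is sketched below. `VersionedCell` is a hypothetical class for illustration only; as far as I know, real HBase honors the limit when reading and physically drops surplus versions during compaction rather than eagerly on every write.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.TreeMap;

// Hypothetical toy model of one cell in a column family with a max-versions limit.
final class VersionedCell {
    private final int maxVersions;
    // Sorted with the newest timestamp first.
    private final TreeMap<Long, String> versions =
            new TreeMap<Long, String>(Collections.reverseOrder());

    VersionedCell(int maxVersions) {
        this.maxVersions = maxVersions;
    }

    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        // With newest-first ordering, the last entry is the oldest version.
        while (versions.size() > maxVersions) {
            versions.pollLastEntry();
        }
    }

    // Values ordered from newest to oldest.
    List<String> values() {
        return new ArrayList<String>(versions.values());
    }

    public static void main(String[] args) {
        VersionedCell cell = new VersionedCell(3);
        cell.put(1L, "Andy0");
        cell.put(2L, "Andy1");
        cell.put(3L, "Andy2");
        cell.put(4L, "Andy3");
        System.out.println(cell.values()); // the oldest value, Andy0, is gone
    }
}
```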

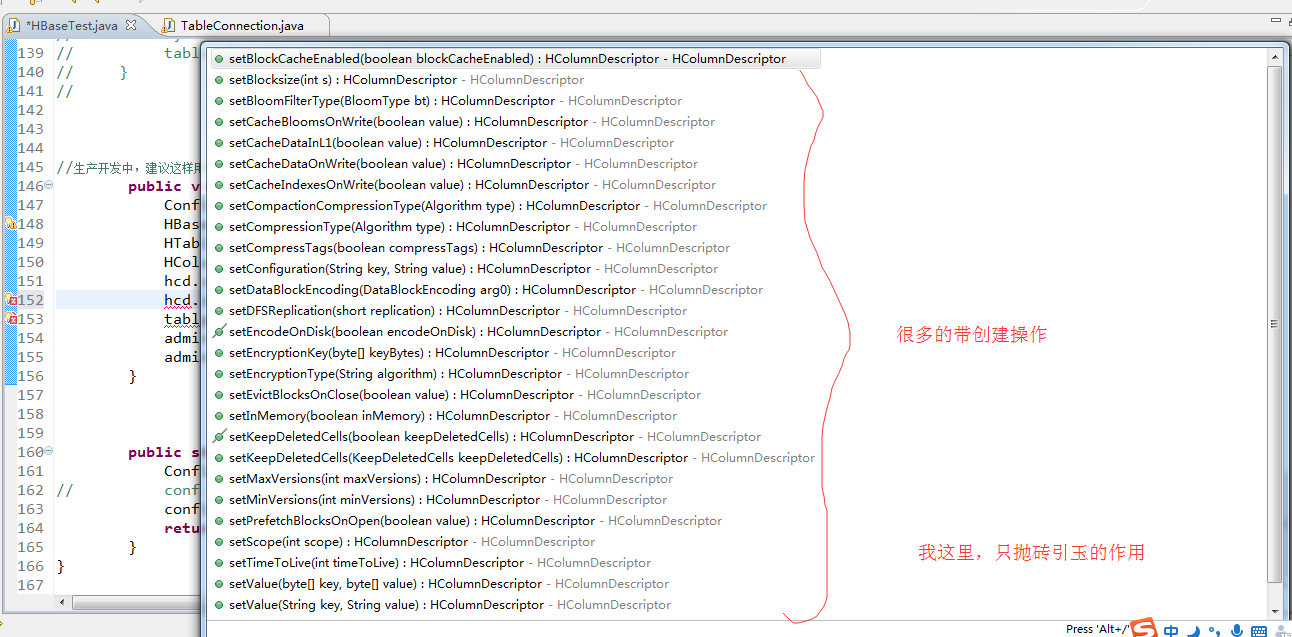

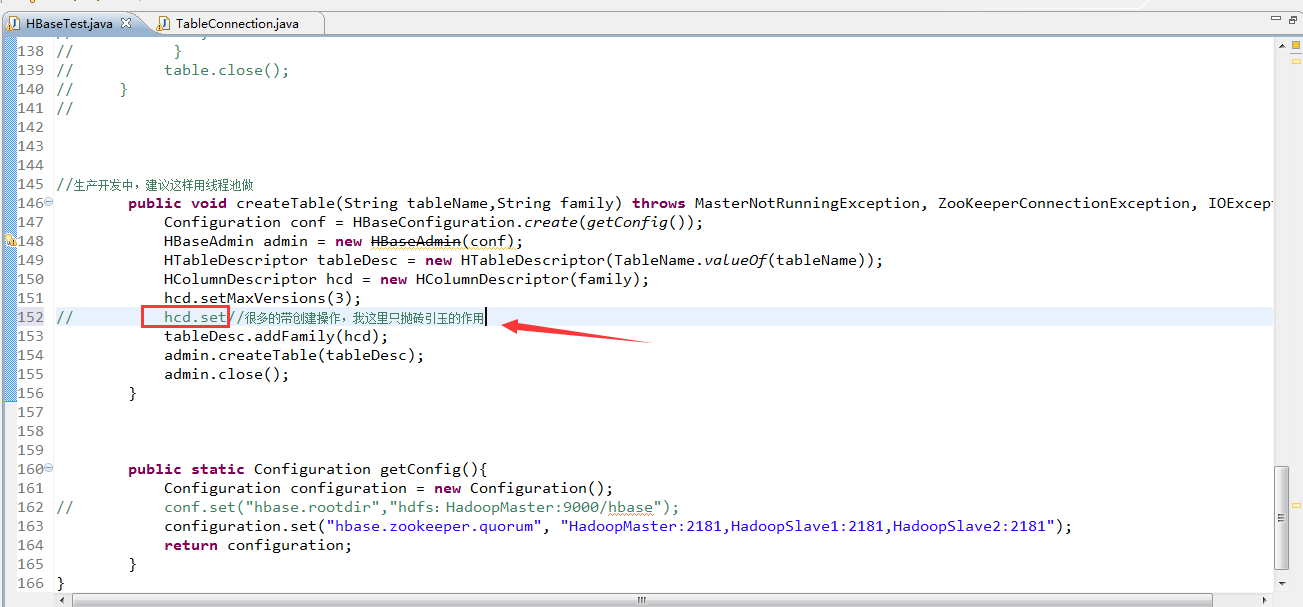

3. Creating an HBase table with more options

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.MasterNotRunningException;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.ZooKeeperConnectionException;
    import org.apache.hadoop.hbase.client.HBaseAdmin;

    public class HBaseTest {
        public static void main(String[] args) throws Exception {
            // The commented-out put/get/delete/scan experiments are identical to the listing in section 1 and are omitted here.

            HBaseTest hbasetest = new HBaseTest();
    //      hbasetest.insertValue();
    //      hbasetest.getValue();
    //      hbasetest.delete();
    //      hbasetest.scanValue();
            // Note: if test_table4 already exists (it was created in section 2), createTable throws TableExistsException.
            hbasetest.createTable("test_table4", "f");
        }

        // The commented-out insertValue(), getValue(), delete() and scanValue() methods are unchanged from section 1.

        // In production, the pooled-connection approach is recommended
        public void createTable(String tableName, String family) throws MasterNotRunningException, ZooKeeperConnectionException, IOException {
            Configuration conf = HBaseConfiguration.create(getConfig());
            HBaseAdmin admin = new HBaseAdmin(conf);
            try {
                HTableDescriptor tableDesc = new HTableDescriptor(TableName.valueOf(tableName));
                HColumnDescriptor hcd = new HColumnDescriptor(family);
                hcd.setMaxVersions(3);
    //          hcd.set... // HColumnDescriptor has many more setters for creation options; this is only meant as a starting point
                tableDesc.addFamily(hcd);
                admin.createTable(tableDesc);
            } finally {
                admin.close(); // close the admin even if createTable fails
            }
        }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
    //      conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }

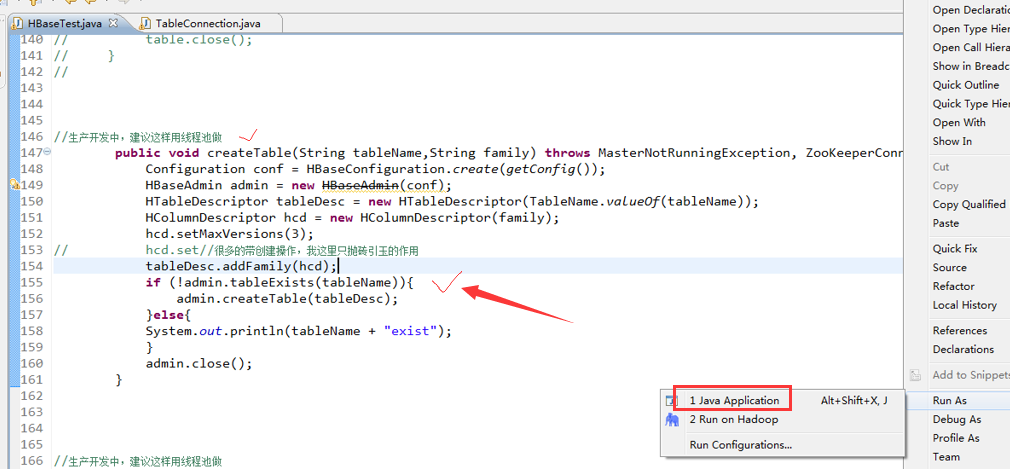

4. Check whether the table exists before creating (or deleting) it (recommended for production)

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.MasterNotRunningException;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.ZooKeeperConnectionException;
    import org.apache.hadoop.hbase.client.HBaseAdmin;

    public class HBaseTest {
        public static void main(String[] args) throws Exception {
            // The commented-out put/get/delete/scan experiments are identical to the listing in section 1 and are omitted here.

            HBaseTest hbasetest = new HBaseTest();
    //      hbasetest.insertValue();
    //      hbasetest.getValue();
    //      hbasetest.delete();
    //      hbasetest.scanValue();
            hbasetest.createTable("test_table3", "f"); // check whether the table exists before creating it (recommended for production)
    //      hbasetest.deleteTable("test_table4"); // check whether the table exists before deleting it (recommended for production)
        }

        // The commented-out insertValue(), getValue(), delete() and scanValue() methods are unchanged from section 1.

        // In production, the pooled-connection approach is recommended
        public void createTable(String tableName, String family) throws MasterNotRunningException, ZooKeeperConnectionException, IOException {
            Configuration conf = HBaseConfiguration.create(getConfig());
            HBaseAdmin admin = new HBaseAdmin(conf);
            try {
                HTableDescriptor tableDesc = new HTableDescriptor(TableName.valueOf(tableName));
                HColumnDescriptor hcd = new HColumnDescriptor(family);
                hcd.setMaxVersions(3);
    //          hcd.set... // HColumnDescriptor has many more setters for creation options; this is only meant as a starting point
                tableDesc.addFamily(hcd);
                if (!admin.tableExists(tableName)) {
                    admin.createTable(tableDesc);
                } else {
                    System.out.println(tableName + " exists");
                }
            } finally {
                admin.close(); // close the admin even if an admin call fails
            }
        }

        // In production, the pooled-connection approach is recommended
    //  public void deleteTable(String tableName) throws MasterNotRunningException, ZooKeeperConnectionException, IOException {
    //      Configuration conf = HBaseConfiguration.create(getConfig());
    //      HBaseAdmin admin = new HBaseAdmin(conf);
    //      if (admin.tableExists(tableName)) {
    //          admin.disableTable(tableName); // a table must be disabled before it can be deleted
    //          admin.deleteTable(tableName);
    //      } else {
    //          System.out.println(tableName + " does not exist");
    //      }
    //      admin.close();
    //  }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
    //      conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }
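Checking `tableExists` before `createTable` avoids the error in the common case, but the two calls are separate steps, so another client could still create the table in between. The sketch below contrasts that with an atomic "create if absent" operation. `Catalog` is a hypothetical in-memory class, not an HBase API; against a real cluster one would presumably keep the existence check and additionally handle `TableExistsException` from `createTable`.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical in-memory catalog standing in for the cluster's table list.
final class Catalog {
    private final ConcurrentMap<String, String> tables =
            new ConcurrentHashMap<String, String>();

    // Atomic "create if absent": returns true only for the caller that actually created the table.
    boolean createIfAbsent(String tableName, String family) {
        return tables.putIfAbsent(tableName, family) == null;
    }

    boolean exists(String tableName) {
        return tables.containsKey(tableName);
    }

    public static void main(String[] args) {
        Catalog catalog = new Catalog();
        System.out.println(catalog.createIfAbsent("test_table3", "f")); // first caller creates the table
        System.out.println(catalog.createIfAbsent("test_table3", "f")); // second caller finds it already there
    }
}
```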

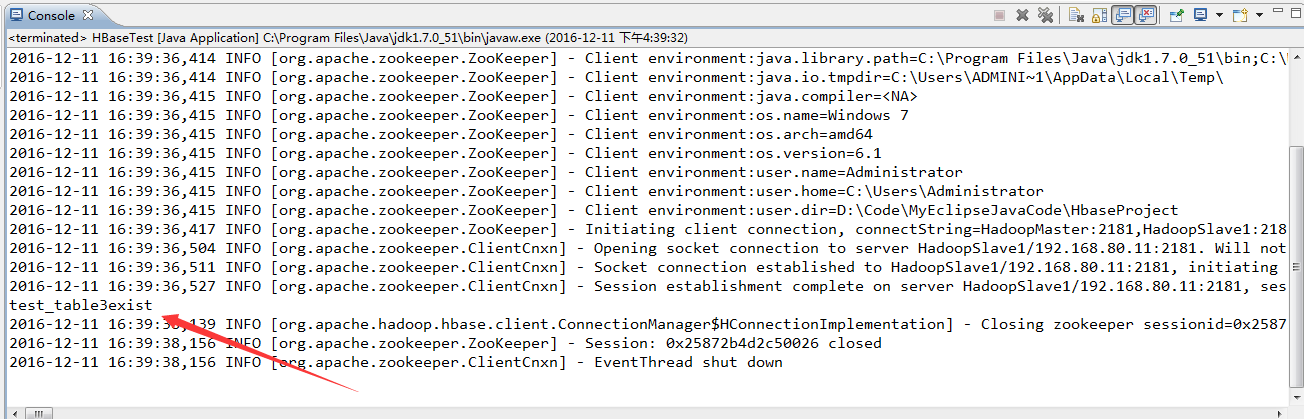

2016-12-11 16:39:36,396 INFO [org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper] - Process identifier=hconnection-0x7cb96ac0 connecting to ZooKeeper ensemble=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181

2016-12-11 16:39:36,413 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-12-11 16:39:36,413 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:host.name=WIN-BQOBV63OBNM

2016-12-11 16:39:36,413 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.version=1.7.0_51

2016-12-11 16:39:36,413 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.vendor=Oracle Corporation

2016-12-11 16:39:36,413 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_51\jre

2016-12-11 16:39:36,413 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.class.path=D:\Code\MyEclipseJavaCode\HbaseProject\bin;D:\SoftWare\hbase-1.2.3\lib\activation-1.1.jar;D:\SoftWare\hbase-1.2.3\lib\aopalliance-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-i18n-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\api-asn1-api-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\api-util-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\asm-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\avro-1.7.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-1.7.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-core-1.8.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-cli-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-codec-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\commons-collections-3.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-compress-1.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-configuration-1.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-daemon-1.0.13.jar;D:\SoftWare\hbase-1.2.3\lib\commons-digester-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\commons-el-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-httpclient-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-io-2.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-lang-2.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-logging-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math-2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math3-3.1.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-net-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\disruptor-3.3.0.jar;D:\SoftWare\hbase-1.2.3\lib\findbugs-annotations-1.3.9-1.jar;D:\SoftWare\hbase-1.2.3\lib\guava-12.0.1.jar;D:\SoftWare\hbase-1.2.3\lib\guice-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\guice-servlet-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-annotations-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-auth-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-hdfs-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-app-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-core-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-jobclient-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-shuffle-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-api-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-server-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-client-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-examples-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-external-blockcache-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop2-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-prefix-tree-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-procedure-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-protocol-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-resource-bundle-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-rest-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-shell-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-thrift-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\htrace-core-3.1.0-incubating.jar;D:\SoftWare\hbase-1.2.3\lib\httpclient-4.2.5.jar;D:\SoftWare\hbase-1.2.3\lib\httpcore-4.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-core-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-jaxrs-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-mapper-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-xc-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jamon-runtime-2.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-compiler-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-runtime-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\javax.inject-1.jar;D:\SoftWare\hbase-1.2.3\lib\java-xmlbuilder-0.4.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-api-2.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-impl-2.2.3-1.jar;D:\SoftWare\hbase-1.2.3\lib\jcodings-1.0.8.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-client-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-core-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-guice-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-json-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-server-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jets3t-0.9.0.jar;D:\SoftWare\hbase-1.2.3\lib\jettison-1.3.3.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-sslengine-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-util-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\joni-2.1.2.jar;D:\SoftWare\hbase-1.2.3\lib\jruby-complete-1.6.8.jar;D:\SoftWare\hbase-1.2.3\lib\jsch-0.1.42.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-api-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\junit-4.12.jar;D:\SoftWare\hbase-1.2.3\lib\leveldbjni-all-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\libthrift-0.9.3.jar;D:\SoftWare\hbase-1.2.3\lib\log4j-1.2.17.jar;D:\SoftWare\hbase-1.2.3\lib\metrics-core-2.2.0.jar;D:\SoftWare\hbase-1.2.3\lib\netty-all-4.0.23.Final.jar;D:\SoftWare\hbase-1.2.3\lib\paranamer-2.3.jar;D:\SoftWare\hbase-1.2.3\lib\protobuf-java-2.5.0.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-api-1.7.7.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-log4j12-1.7.5.jar;D:\SoftWare\hbase-1.2.3\lib\snappy-java-1.0.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\spymemcached-2.11.6.jar;D:\SoftWare\hbase-1.2.3\lib\xmlenc-0.52.jar;D:\SoftWare\hbase-1.2.3\lib\xz-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\zookeeper-3.4.6.jar

2016-12-11 16:39:36,414 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_51\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\ProgramData\Oracle\Java\javapath;C:\Python27\;C:\Python27\Scripts;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;D:\SoftWare\MATLAB R2013a\runtime\win64;D:\SoftWare\MATLAB R2013a\bin;C:\Program Files (x86)\IDM Computer Solutions\UltraCompare;C:\Program Files\Java\jdk1.7.0_51\bin;C:\Program Files\Java\jdk1.7.0_51\jre\bin;D:\SoftWare\apache-ant-1.9.0\bin;HADOOP_HOME\bin;D:\SoftWare\apache-maven-3.3.9\bin;D:\SoftWare\Scala\bin;D:\SoftWare\Scala\jre\bin;%MYSQL_HOME\bin;D:\SoftWare\MySQL Server\MySQL Server 5.0\bin;D:\SoftWare\apache-tomcat-7.0.69\bin;%C:\Windows\System32;%C:\Windows\SysWOW64;D:\SoftWare\SSH Secure Shell;.

2016-12-11 16:39:36,414 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.compiler=<NA>

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.name=Windows 7

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.arch=amd64

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.version=6.1

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.name=Administrator

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.home=C:\Users\Administrator

2016-12-11 16:39:36,415 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.dir=D:\Code\MyEclipseJavaCode\HbaseProject

2016-12-11 16:39:36,417 INFO [org.apache.zookeeper.ZooKeeper] - Initiating client connection, connectString=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181 sessionTimeout=90000 watcher=hconnection-0x7cb96ac00x0, quorum=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181, baseZNode=/hbase

2016-12-11 16:39:36,504 INFO [org.apache.zookeeper.ClientCnxn] - Opening socket connection to server HadoopSlave1/192.168.80.11:2181. Will not attempt to authenticate using SASL (unknown error)

2016-12-11 16:39:36,511 INFO [org.apache.zookeeper.ClientCnxn] - Socket connection established to HadoopSlave1/192.168.80.11:2181, initiating session

2016-12-11 16:39:36,527 INFO [org.apache.zookeeper.ClientCnxn] - Session establishment complete on server HadoopSlave1/192.168.80.11:2181, sessionid = 0x25872b4d2c50026, negotiated timeout = 40000

test_table3exist

2016-12-11 16:39:38,139 INFO [org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation] - Closing zookeeper sessionid=0x25872b4d2c50026

2016-12-11 16:39:38,156 INFO [org.apache.zookeeper.ZooKeeper] - Session: 0x25872b4d2c50026 closed

2016-12-11 16:39:38,156 INFO [org.apache.zookeeper.ClientCnxn] - EventThread shut down
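
One detail in the log above is easy to miss: the client asks for `sessionTimeout=90000`, but the server answers with `negotiated timeout = 40000`. A ZooKeeper server clamps the requested session timeout into a range that defaults to 2 to 20 times its `tickTime`. The sketch below reproduces that arithmetic. It assumes the cluster runs with `tickTime=2000` and has not overridden `minSessionTimeout` or `maxSessionTimeout`; neither setting is shown in this post. The class and method names are made up for illustration and are not part of any ZooKeeper API.

```java
public class SessionTimeoutSketch {

    /**
     * Hypothetical helper: clamps the timeout requested by the client into
     * [2 * tickTime, 20 * tickTime], which is the default range a ZooKeeper
     * server allows when minSessionTimeout/maxSessionTimeout are not set.
     */
    static int negotiate(int requestedMs, int tickTimeMs) {
        int minMs = 2 * tickTimeMs;   // default lower bound
        int maxMs = 20 * tickTimeMs;  // default upper bound
        return Math.max(minMs, Math.min(maxMs, requestedMs));
    }

    public static void main(String[] args) {
        // 90000 ms is the value the client requested in the log;
        // tickTime = 2000 ms is an assumption about the server config.
        System.out.println(negotiate(90000, 2000)); // prints 40000
    }
}
```

If a longer session timeout is really needed, the server-side limit has to be raised; changing only the client-side value has no effect beyond the server's upper bound.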

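Author: 大数据和人工智能躺过的坑

Source: http://www.cnblogs.com/zlslch/

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be kept and a link to the original article must be placed prominently on the page; otherwise the author reserves the right to pursue legal liability.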

