A Detailed Walkthrough of Compiling Spark from Source (All Versions) (Recommended by the Blogger)

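Without further ado, straight to the substance!

A note up front

If the build complains that a file is missing, clean the Maven build first (mvn clean) and then compile again.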

The three main ways to build Spark from source

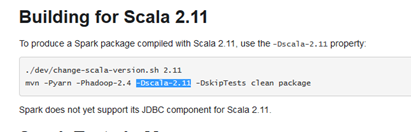

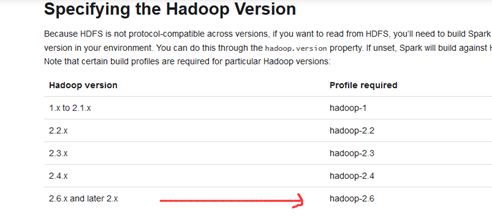

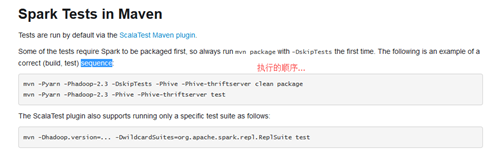

1. Building with Maven

2. Building with SBT (not covered for now)

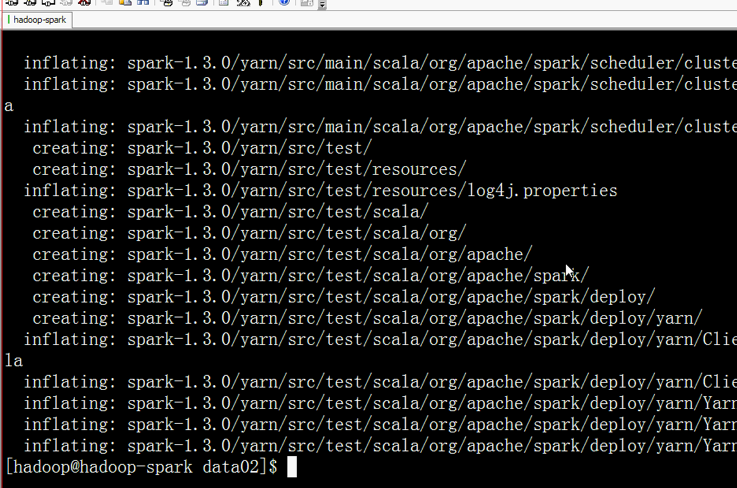

3. Building a distribution package with make-distribution.sh

Note that Spark 1.6.x should be paired with JDK 1.7.x and Maven 3.3.3,

while Spark 2.x should be paired with JDK 1.8.x and Maven 3.3.9.
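The version pairing can be written down as a small lookup. This is only a minimal sketch in POSIX shell: the function name `required_toolchain` is hypothetical, and it encodes nothing beyond the two pairings stated in this post.

```shell
#!/bin/sh
# Hypothetical helper: print the JDK and Maven versions that this post
# pairs with a given Spark version. Only the two pairings from the text
# are known; anything else is reported as unknown.
required_toolchain() {
  case "$1" in
    1.6.*) echo "jdk=1.7 maven=3.3.3" ;;
    2.*)   echo "jdk=1.8 maven=3.3.9" ;;
    *)     echo "unknown"; return 1 ;;
  esac
}

required_toolchain 1.6.1
```

Comparing the result with what `java -version` and `mvn -v` report before starting a long build can save a wasted run.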

Preface

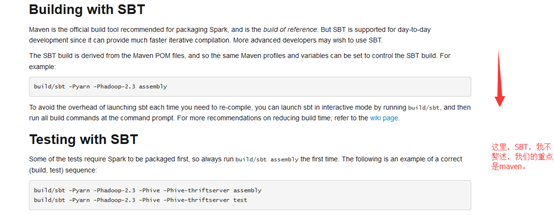

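Spark can be compiled with either SBT or Maven, after which the make-distribution.sh script produces a deployment package.

An SBT build needs git installed, while a Maven build needs Maven; both require network access.

Although Maven is the build method recommended on the Spark website, sbt compiles faster, so for Spark developers sbt may be the better choice. Because the sbt build is also derived from the Maven POM files, sbt accepts the same build parameters as Maven.

Thoughts

When you have time, make sure to compile the source yourself. If you want to become an expert in big data, this early hardship is something you have to go through.

Whether you are compiling the Spark source or the Hadoop source, a first-time build will hit plenty of problems and may take a day or even several days. That is normal; just keep the right attitude. Errors are a good thing: fixing them is the best way to train and improve your skills.

Don't look down on them either. Running into them is lucky; understanding them and digging into why they happen is a blessing.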

Overview of the major distributions

1. Apache version: you can compile it yourself or use a prebuilt release

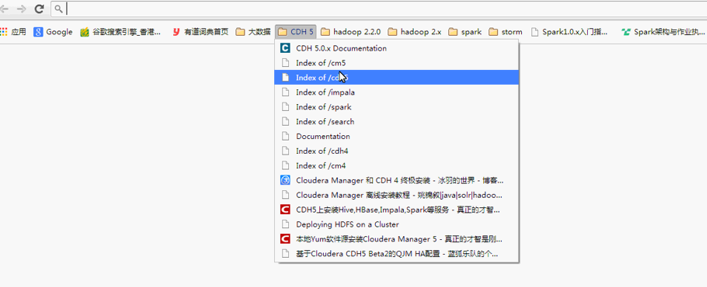

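2. CDH version: no need to compile it yourself

Installing a 3-node cluster with Cloudera Manager using parcels (covering the latest stable version or a specified version) (adding services)

3. HDP version: no need to compile it yourself

Installing and deploying an HDP cluster with Ambari (illustrated, in five major steps) (strongly recommended by the blogger)

These three are the mainstream distributions, although there are actually nine. CDH's CM costs money, but its prebuilt packages are free.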

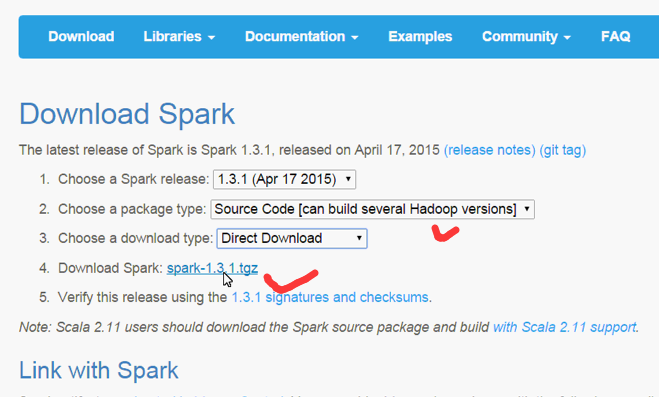

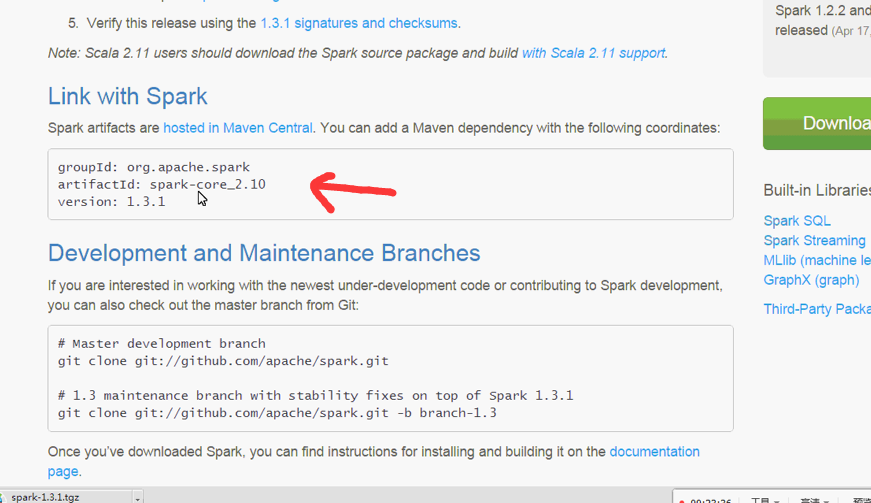

Ways to download the hadoop/spark source:

1. From the official website

2. From GitHub (source code only)

Downloading from the official website:

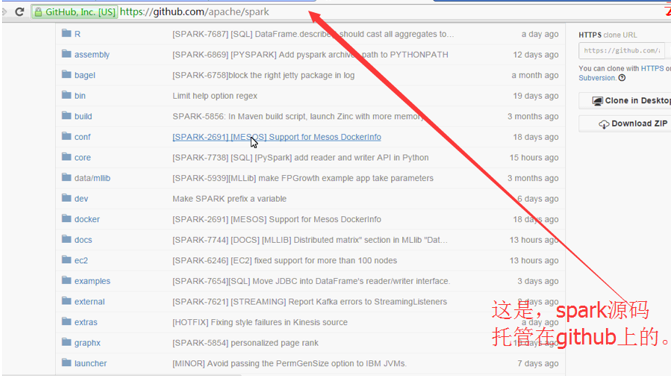

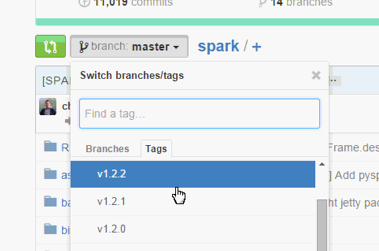

Downloading from GitHub (source code only):

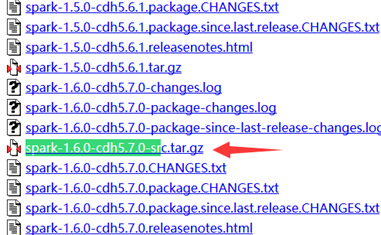

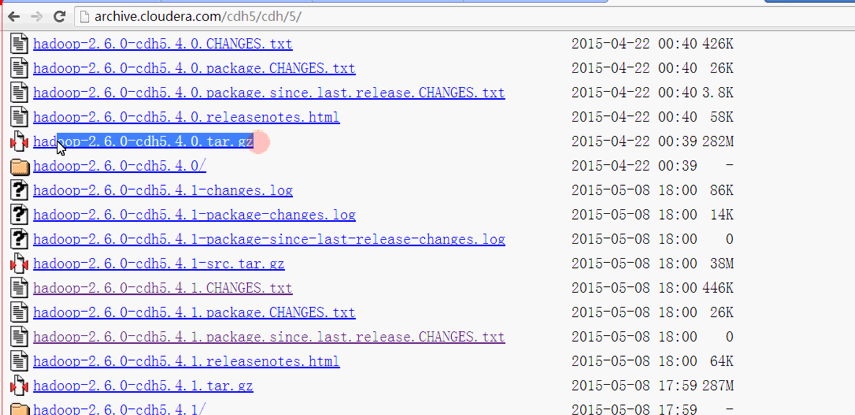

CDH download

http://archive-primary.cloudera.com/cdh5/cdh/5/

HDP download

http://zh.hortonworks.com/products/

OK, here I will use GitHub as the example.

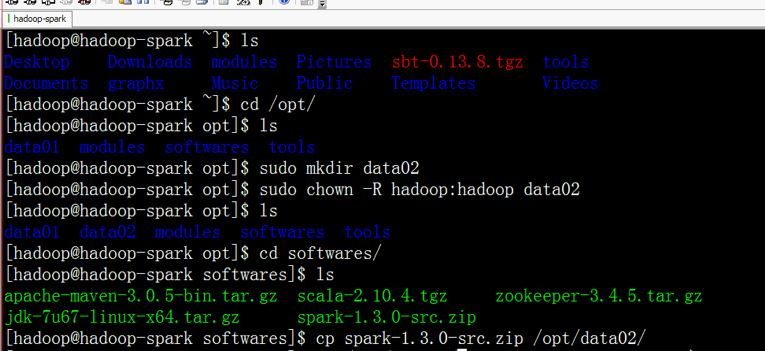

Preparing the Linux environment (e.g. CentOS 6.5)

********************************************************************************

* Outline:

* Step 1: install git online

* Step 2: create a directory to clone the Spark source into (mkdir -p /root/projects/opensource)

* Step 3: switch branches

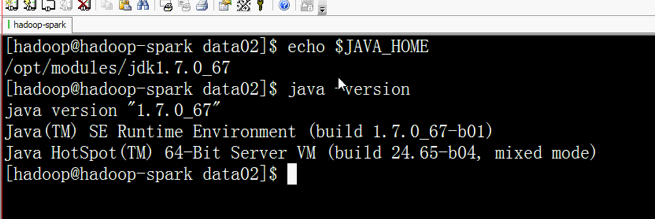

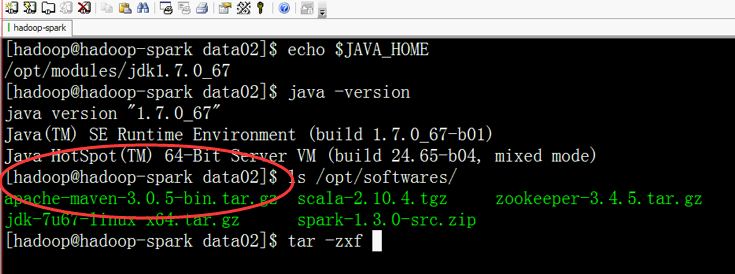

* Step 4: install JDK 1.7+

* Step 5: install Maven

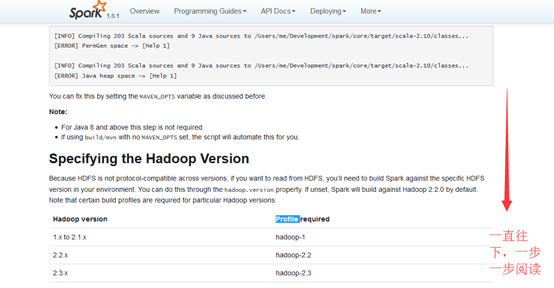

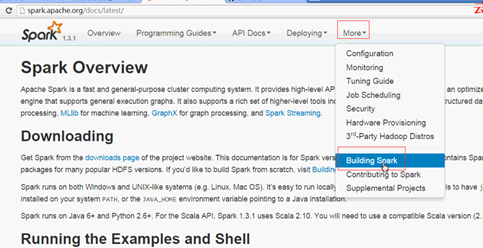

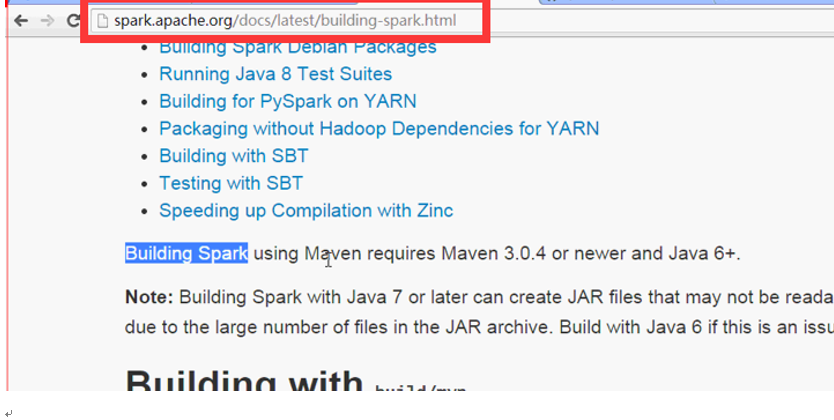

* Step 6: read the official docs and follow along

* Step 7: download the required packages with mvn

********************************************************************************

Of course, you can refer to the documentation on the official website:

http://spark.apache.org/docs/1.6.1/building-spark.html

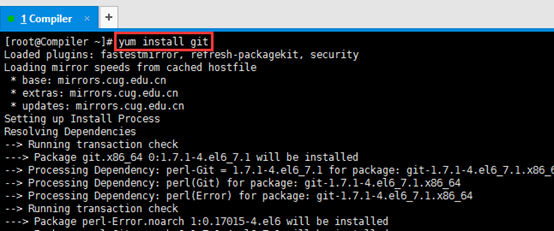

Step 1: install git online (as root)

yum install git (as the root user)

or

sudo yum install git (as a regular user)

[root@Compiler ~]# yum install git

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.cug.edu.cn

* extras: mirrors.cug.edu.cn

* updates: mirrors.cug.edu.cn

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package git.x86_64 0:1.7.1-4.el6_7.1 will be installed

--> Processing Dependency: perl-Git = 1.7.1-4.el6_7.1 for package: git-1.7.1-4.el6_7.1.x86_64

--> Processing Dependency: perl(Git) for package: git-1.7.1-4.el6_7.1.x86_64

--> Processing Dependency: perl(Error) for package: git-1.7.1-4.el6_7.1.x86_64

--> Running transaction check

---> Package perl-Error.noarch 1:0.17015-4.el6 will be installed

---> Package perl-Git.noarch 0:1.7.1-4.el6_7.1 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

git x86_64 1.7.1-4.el6_7.1 base 4.6 M

Installing for dependencies:

perl-Error noarch 1:0.17015-4.el6 base 29 k

perl-Git noarch 1.7.1-4.el6_7.1 base 28 k

Transaction Summary

================================================================================

Install 3 Package(s)

Total download size: 4.7 M

Installed size: 15 M

Is this ok [y/N]: y

Downloading Packages:

(1/3): git-1.7.1-4.el6_7.1.x86_64.rpm | 4.6 MB 00:01

(2/3): perl-Error-0.17015-4.el6.noarch.rpm | 29 kB 00:00

(3/3): perl-Git-1.7.1-4.el6_7.1.noarch.rpm | 28 kB 00:00

--------------------------------------------------------------------------------

Total 683 kB/s | 4.7 MB 00:06

warning: rpmts_HdrFromFdno: Header V3 RSA/SHA1 Signature, key ID c105b9de: NOKEY

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Importing GPG key 0xC105B9DE:

Userid : CentOS-6 Key (CentOS 6 Official Signing Key) <centos-6-key@centos.org>

Package: centos-release-6-5.el6.centos.11.1.x86_64 (@anaconda-CentOS-201311272149.x86_64/6.5)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Is this ok [y/N]: y

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Installing : 1:perl-Error-0.17015-4.el6.noarch 1/3

Installing : git-1.7.1-4.el6_7.1.x86_64 2/3

Installing : perl-Git-1.7.1-4.el6_7.1.noarch 3/3

Verifying : perl-Git-1.7.1-4.el6_7.1.noarch 1/3

Verifying : 1:perl-Error-0.17015-4.el6.noarch 2/3

Verifying : git-1.7.1-4.el6_7.1.x86_64 3/3

Installed:

git.x86_64 0:1.7.1-4.el6_7.1

Dependency Installed:

perl-Error.noarch 1:0.17015-4.el6 perl-Git.noarch 0:1.7.1-4.el6_7.1

Complete!

[root@Compiler ~]#

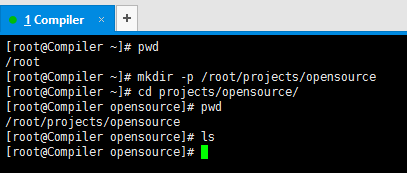

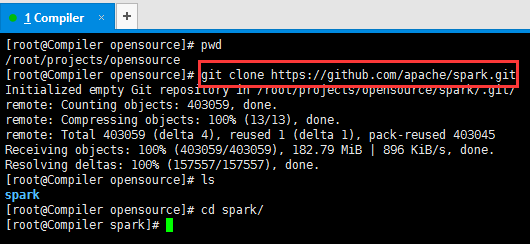

Step 2: create a directory and clone the Spark source code

mkdir -p /root/projects/opensource

cd /root/projects/opensource

git clone https://github.com/apache/spark.git

[root@Compiler ~]# pwd

/root

[root@Compiler ~]# mkdir -p /root/projects/opensource

[root@Compiler ~]# cd projects/opensource/

[root@Compiler opensource]# pwd

/root/projects/opensource

[root@Compiler opensource]# ls

[root@Compiler opensource]#

[root@Compiler opensource]# pwd

/root/projects/opensource

[root@Compiler opensource]# git clone https://github.com/apache/spark.git

Initialized empty Git repository in /root/projects/opensource/spark/.git/

remote: Counting objects: 403059, done.

remote: Compressing objects: 100% (13/13), done.

remote: Total 403059 (delta 4), reused 1 (delta 1), pack-reused 403045

Receiving objects: 100% (403059/403059), 182.79 MiB | 896 KiB/s, done.

Resolving deltas: 100% (157557/157557), done.

[root@Compiler opensource]# ls

spark

[root@Compiler opensource]# cd spark/

[root@Compiler spark]#

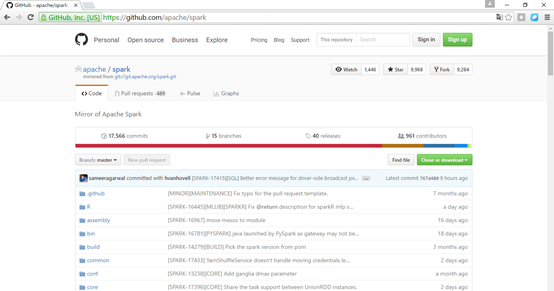

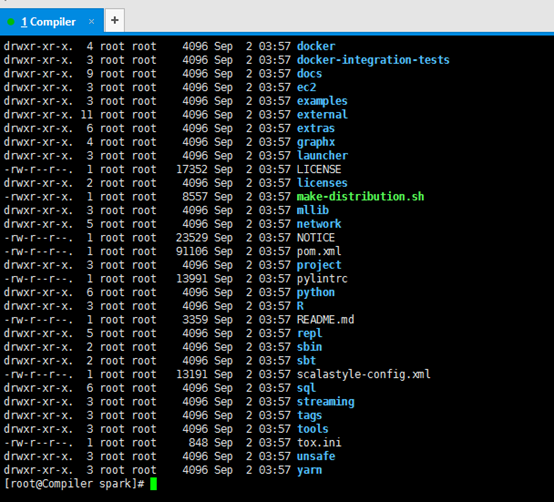

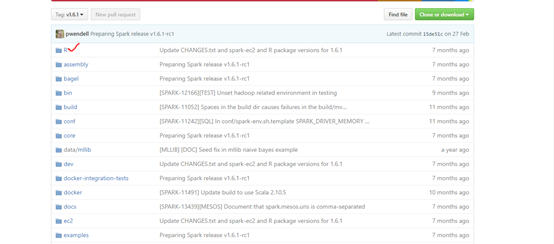

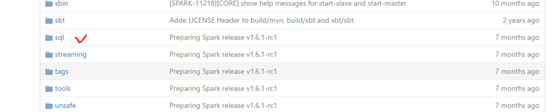

This corresponds to the following web page.

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# ll

total 280

-rw-r--r--. 1 root root 1804 Sep 2 03:53 appveyor.yml

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 assembly

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 bin

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 build

drwxr-xr-x. 8 root root 4096 Sep 2 03:53 common

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 conf

-rw-r--r--. 1 root root 988 Sep 2 03:53 CONTRIBUTING.md

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 core

drwxr-xr-x. 5 root root 4096 Sep 2 03:53 data

drwxr-xr-x. 6 root root 4096 Sep 2 03:53 dev

drwxr-xr-x. 9 root root 4096 Sep 2 03:53 docs

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 examples

drwxr-xr-x. 15 root root 4096 Sep 2 03:53 external

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 graphx

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 launcher

-rw-r--r--. 1 root root 17811 Sep 2 03:53 LICENSE

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 licenses

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 mesos

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 mllib

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 mllib-local

-rw-r--r--. 1 root root 24749 Sep 2 03:53 NOTICE

-rw-r--r--. 1 root root 97324 Sep 2 03:53 pom.xml

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 project

drwxr-xr-x. 6 root root 4096 Sep 2 03:53 python

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 R

-rw-r--r--. 1 root root 3828 Sep 2 03:53 README.md

drwxr-xr-x. 5 root root 4096 Sep 2 03:53 repl

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 sbin

-rw-r--r--. 1 root root 16952 Sep 2 03:53 scalastyle-config.xml

drwxr-xr-x. 6 root root 4096 Sep 2 03:53 sql

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 streaming

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 tools

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 yarn

[root@Compiler spark]#

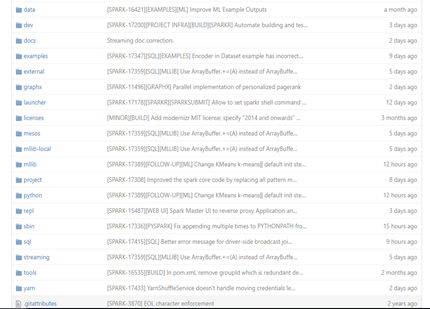

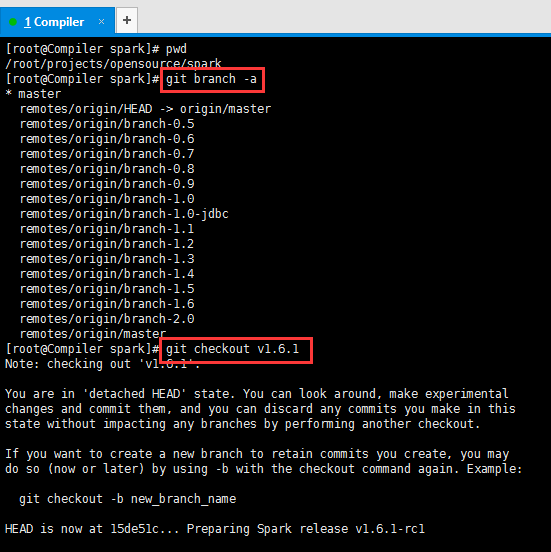

Step 3: switch branches

git checkout v1.6.1 # run inside the spark directory (v1.6.1 is a release tag)

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# git branch -a

* master

remotes/origin/HEAD -> origin/master

remotes/origin/branch-0.5

remotes/origin/branch-0.6

remotes/origin/branch-0.7

remotes/origin/branch-0.8

remotes/origin/branch-0.9

remotes/origin/branch-1.0

remotes/origin/branch-1.0-jdbc

remotes/origin/branch-1.1

remotes/origin/branch-1.2

remotes/origin/branch-1.3

remotes/origin/branch-1.4

remotes/origin/branch-1.5

remotes/origin/branch-1.6

remotes/origin/branch-2.0

remotes/origin/master

[root@Compiler spark]# git checkout v1.6.1

Note: checking out 'v1.6.1'.

You are in 'detached HEAD' state. You can look around, make experimental changes and commit them, and you can discard any commits you make in this state without impacting any branches by performing another checkout.

If you want to create a new branch to retain commits you create, you may do so (now or later) by using -b with the checkout command again. Example:

git checkout -b new_branch_name

HEAD is now at 15de51c... Preparing Spark release v1.6.1-rc1

[root@Compiler spark]#

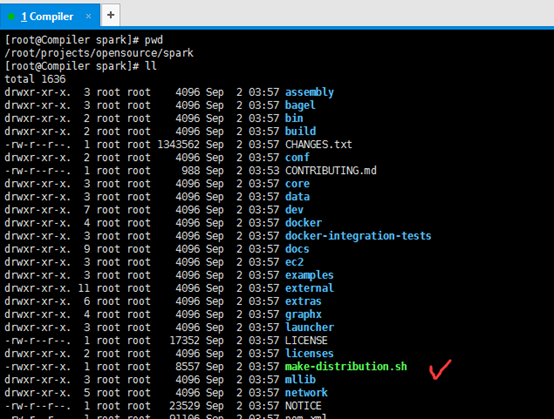

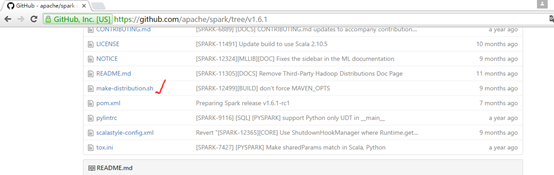

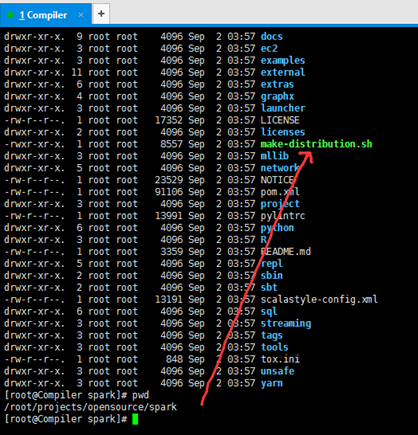

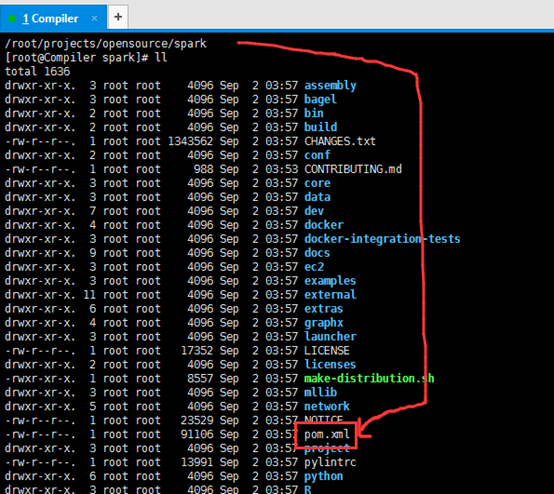

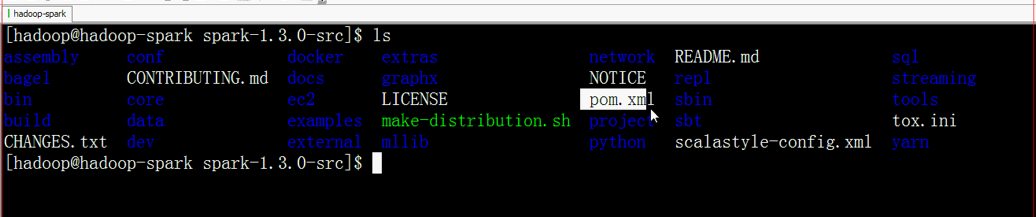

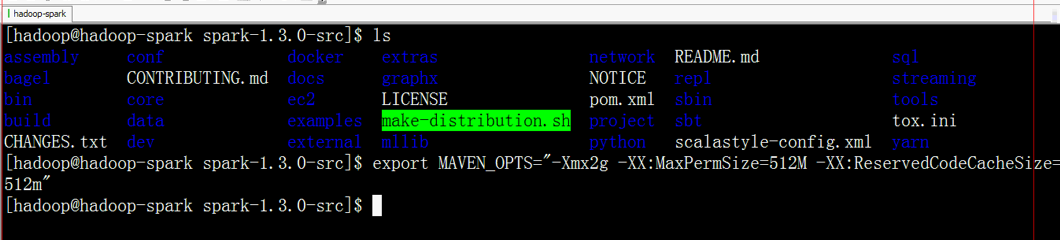

After the checkout, make-distribution.sh is present in the tree.

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# ll

total 1636

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 assembly

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 bagel

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 bin

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 build

-rw-r--r--. 1 root root 1343562 Sep 2 03:57 CHANGES.txt

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 conf

-rw-r--r--. 1 root root 988 Sep 2 03:53 CONTRIBUTING.md

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 core

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 data

drwxr-xr-x. 7 root root 4096 Sep 2 03:57 dev

drwxr-xr-x. 4 root root 4096 Sep 2 03:57 docker

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 docker-integration-tests

drwxr-xr-x. 9 root root 4096 Sep 2 03:57 docs

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 ec2

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 examples

drwxr-xr-x. 11 root root 4096 Sep 2 03:57 external

drwxr-xr-x. 6 root root 4096 Sep 2 03:57 extras

drwxr-xr-x. 4 root root 4096 Sep 2 03:57 graphx

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 launcher

-rw-r--r--. 1 root root 17352 Sep 2 03:57 LICENSE

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 licenses

-rwxr-xr-x. 1 root root 8557 Sep 2 03:57 make-distribution.sh

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 mllib

drwxr-xr-x. 5 root root 4096 Sep 2 03:57 network

-rw-r--r--. 1 root root 23529 Sep 2 03:57 NOTICE

-rw-r--r--. 1 root root 91106 Sep 2 03:57 pom.xml

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 project

-rw-r--r--. 1 root root 13991 Sep 2 03:57 pylintrc

drwxr-xr-x. 6 root root 4096 Sep 2 03:57 python

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 R

-rw-r--r--. 1 root root 3359 Sep 2 03:57 README.md

drwxr-xr-x. 5 root root 4096 Sep 2 03:57 repl

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 sbin

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 sbt

-rw-r--r--. 1 root root 13191 Sep 2 03:57 scalastyle-config.xml

drwxr-xr-x. 6 root root 4096 Sep 2 03:57 sql

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 streaming

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 tags

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 tools

-rw-r--r--. 1 root root 848 Sep 2 03:57 tox.ini

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 unsafe

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 yarn

[root@Compiler spark]#

This in fact corresponds to the page below.

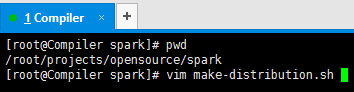

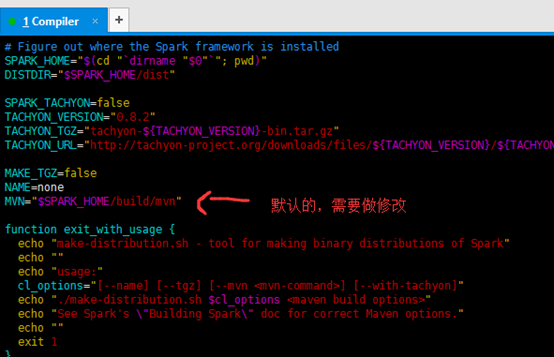

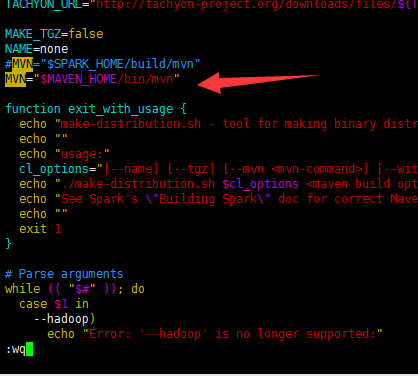

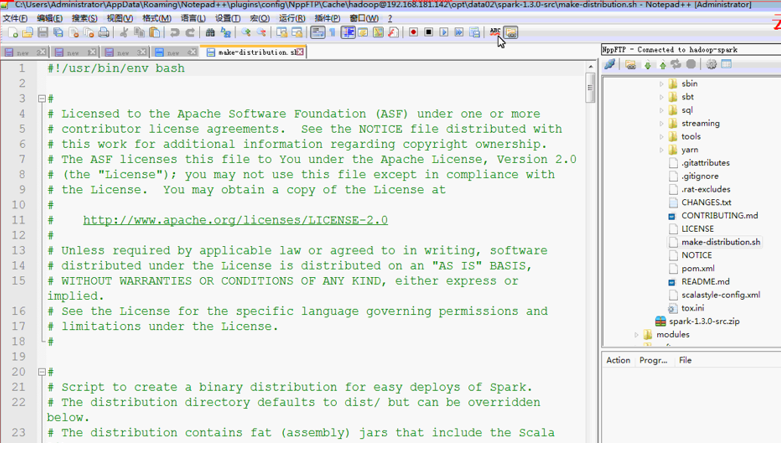

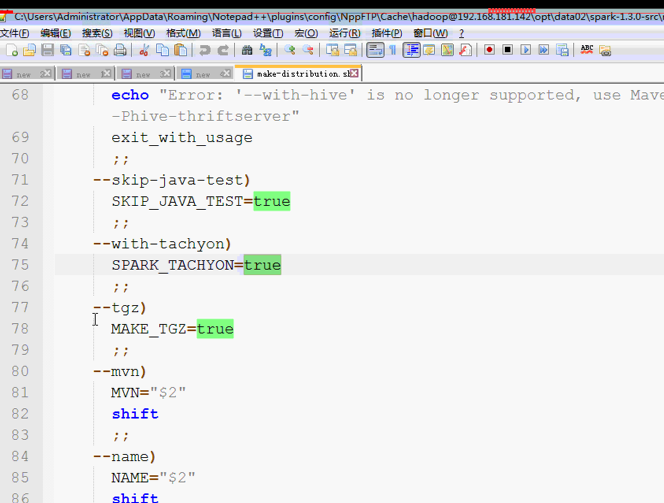

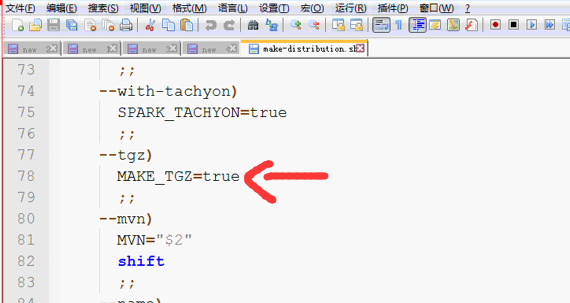

Modify the make-distribution.sh file

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# vim make-distribution.sh

The Maven I installed myself lives at MAVEN_HOME=/usr/local/apache-maven-3.3.3

Change the MVN line to MVN="/usr/local/apache-maven-3.3.3/bin/mvn" or MVN="$MAVEN_HOME/bin/mvn":

MAKE_TGZ=false

NAME=none

#MVN="$SPARK_HOME/build/mvn"

MVN="$MAVEN_HOME/bin/mvn"
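Instead of hard-coding one path, the choice between the two mvn locations can be made at run time. The sketch below is only an illustration of that idea; the function name `pick_mvn` is hypothetical and is not part of make-distribution.sh.

```shell
#!/bin/sh
# Sketch: choose which mvn executable a build script should call.
# Prefers a locally installed Maven (MAVEN_HOME); otherwise falls back to
# the build/mvn wrapper that the original, commented-out line pointed at.
pick_mvn() {
  if [ -n "${MAVEN_HOME:-}" ]; then
    echo "$MAVEN_HOME/bin/mvn"
  else
    echo "${SPARK_HOME:-.}/build/mvn"
  fi
}

MVN="$(pick_mvn)"
echo "using: $MVN"
```

With MAVEN_HOME exported from /etc/profile as described in this post, this resolves to the self-installed Maven; with it unset, the script's bundled wrapper is used.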

Step 4: install JDK 7+

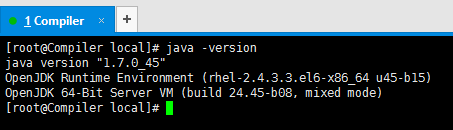

一般将获得如下信息:

java version "1.7.0_45"

OpenJDK Runtime Environment (rhel-2.4.3.3.el6-x86_64 u45-b15)

OpenJDK 64-Bit Server VM (build 24.45-b08, mixed mode)

第一步:查看Centos6.5自带的JDK是否已安装

<1> 检测原OPENJDK版本

# java -version

一般将获得如下信息:

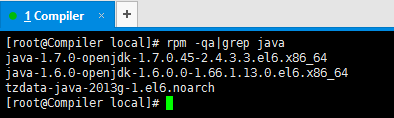

tzdata-java-2013g-1.el6.noarch

java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64

java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.x86_64

<2>进一步查看JDK信息

# rpm -qa|grep java

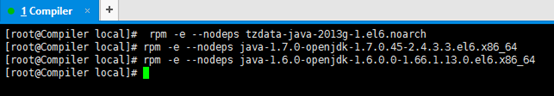

rpm -e --nodeps tzdata-java-2013g-1.el6.noarch

rpm -e --nodeps java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64

rpm -e --nodeps java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.x86_64

<3>卸载OPENJDK

自带的jdk已经没了。

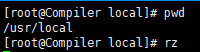

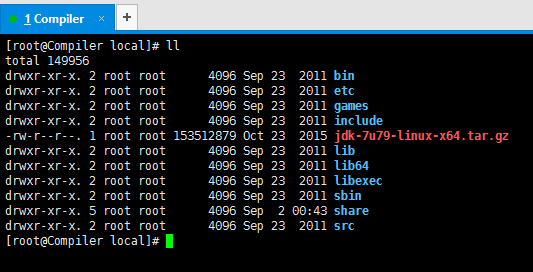

在root用户下安装jdk-7u79-linux-x64.tar.gz

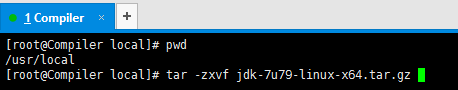

在/usr/local上传

解压,tar -zxvf jdk-7u79-linux-x64.tar.gz

删除压缩包,rm -rf jdk-7u79-linux-x64.tar.gz

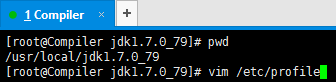

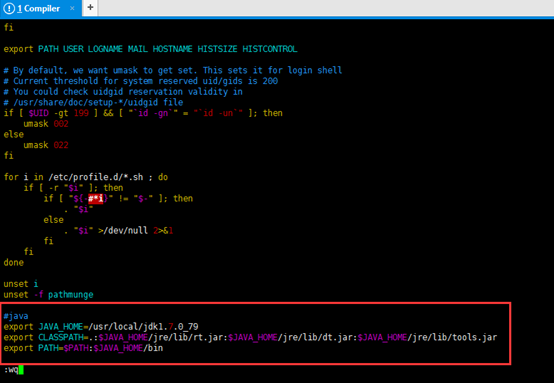

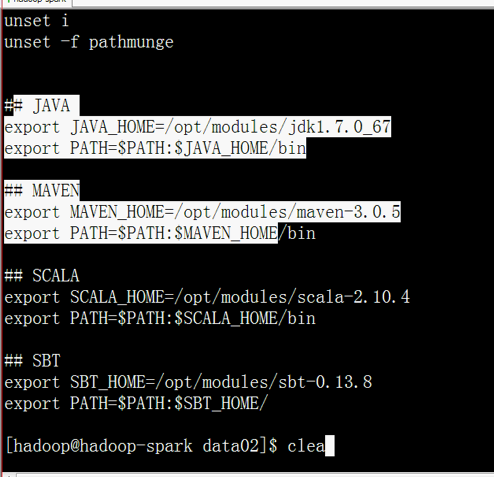

Configure the environment variables: vim /etc/profile

#java

export JAVA_HOME=/usr/local/jdk1.7.0_79

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

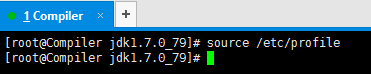

文件生效,source /etc/profile

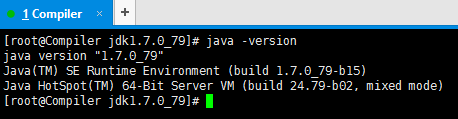

查看是否安装成功,java –version

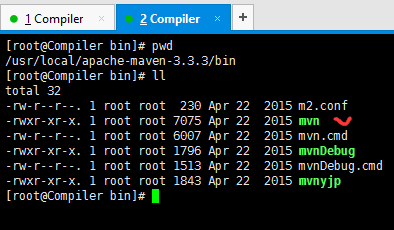

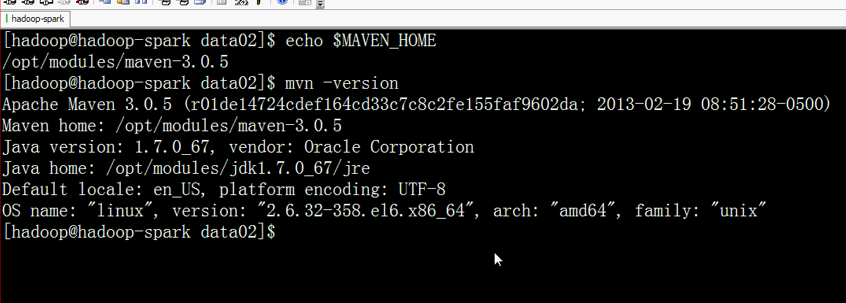

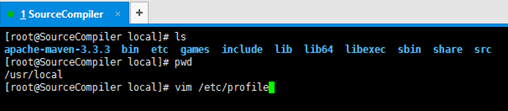

Step 5: install Maven

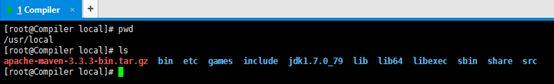

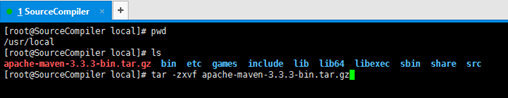

Download apache-maven-3.3.3-bin.tar.gz

Upload apache-maven-3.3.3-bin.tar.gz to /usr/local/

Extract it: tar -zxvf apache-maven-3.3.3-bin.tar.gz

Delete the archive: rm -rf apache-maven-3.3.3-bin.tar.gz

Configure the Maven environment variables: vim /etc/profile

#maven

export MAVEN_HOME=/usr/local/apache-maven-3.3.3

export PATH=$PATH:$MAVEN_HOME/bin

Apply the file: source /etc/profile

Check that the installation succeeded: mvn -v

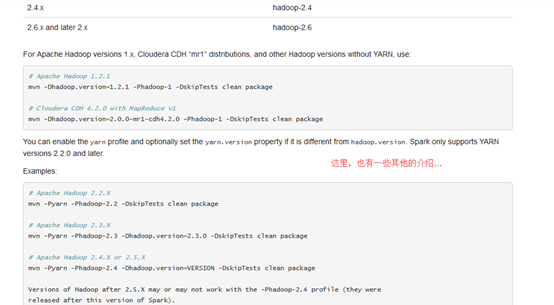

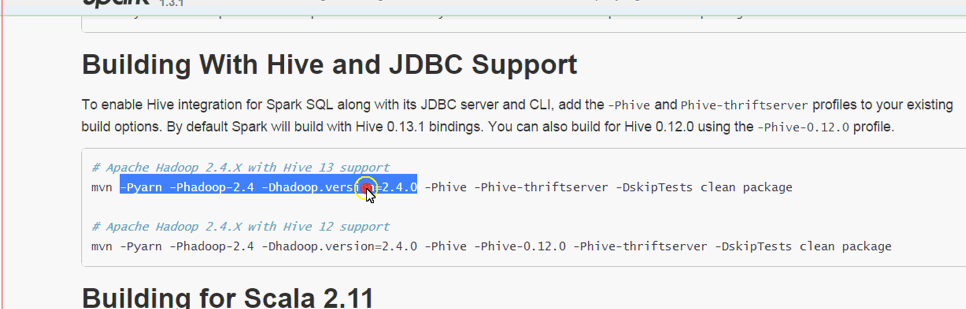

Step 6: read the official docs, follow along, and get a first understanding

http://spark.apache.org/docs/1.6.1/building-spark.html

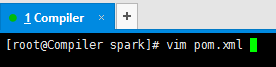

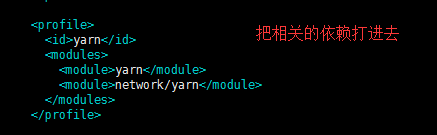

[root@Compiler spark]# vim pom.xml

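Let's first get acquainted with this pom.xml file.

The -P option stands for profile,

and several profiles can be activated at the same time.

I won't go into the rest; this is just a first look at the file.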
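To make the "several profiles at once" point concrete, here is a minimal sketch that turns a list of profile names into the -P flags passed to mvn. The helper name `profile_flags` is hypothetical; the profile names are the ones used later in this post.

```shell
#!/bin/sh
# Sketch: build one -P flag per profile name given on the command line.
profile_flags() {
  flags=""
  for p in "$@"; do
    flags="$flags -P$p"
  done
  # Strip the single leading space before printing.
  echo "${flags# }"
}

profile_flags yarn hadoop-2.6 hive hive-thriftserver
```

Maven also accepts a single comma-separated list, so `-Pyarn,hive` activates the same profiles as `-Pyarn -Phive`.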

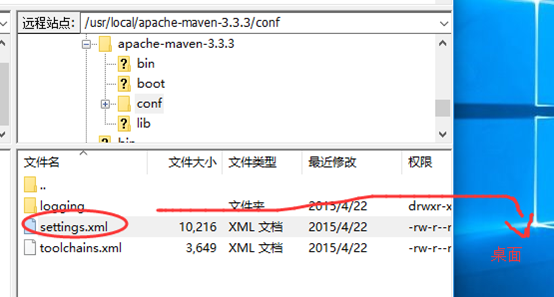

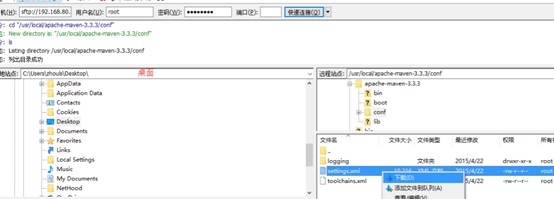

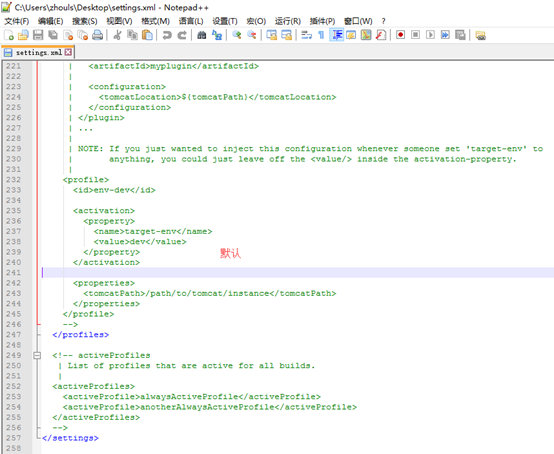

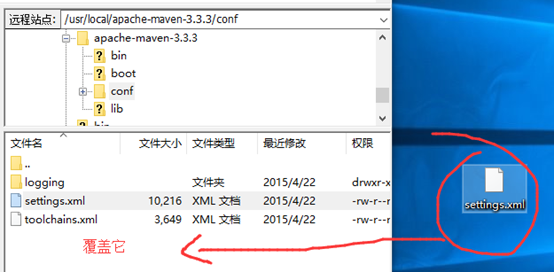

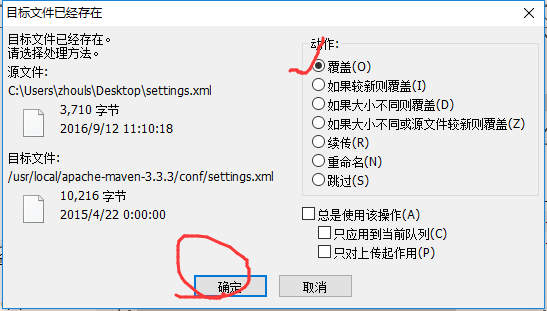

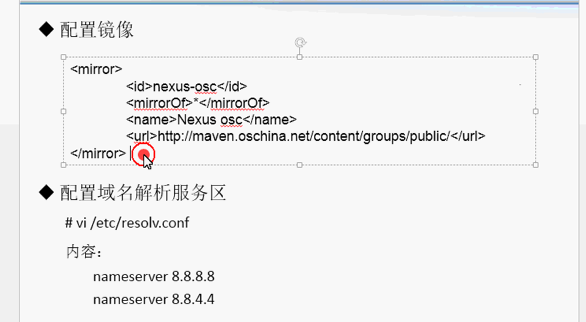

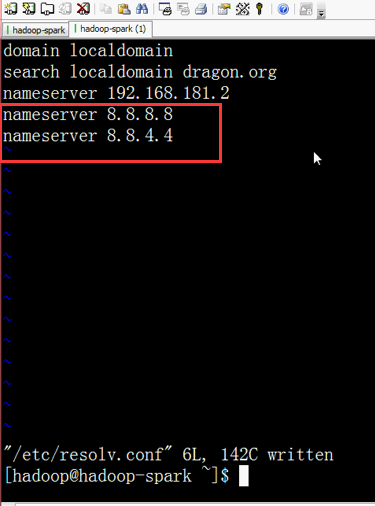

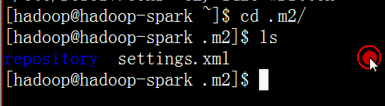

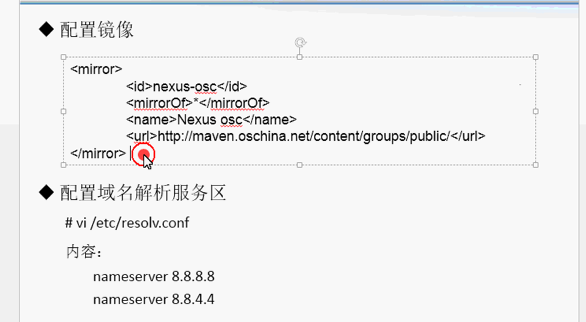

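With a basic understanding of pom.xml in hand, what next? As a rule of thumb, $MAVEN_HOME/conf/settings.xml usually gets modified; this is hard-won experience from experts working in production environments!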

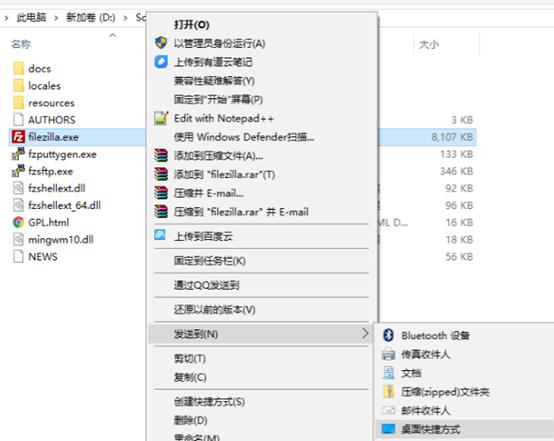

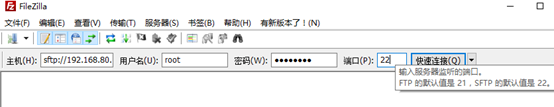

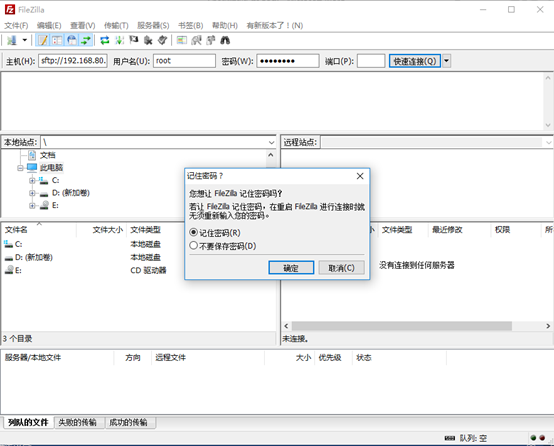

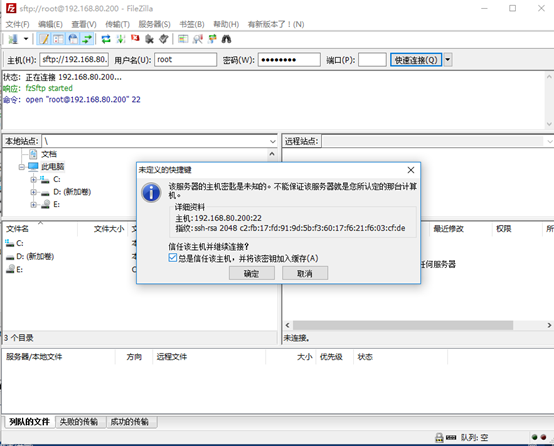

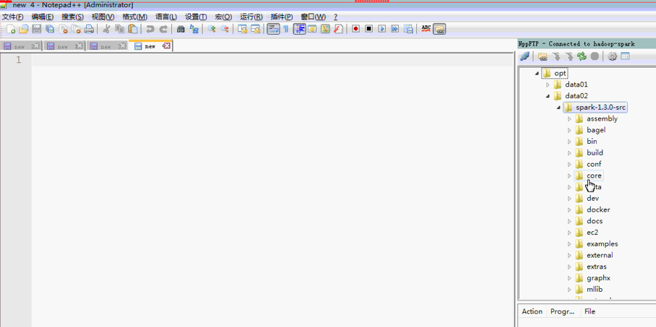

这里啊,给大家推荐一款很实用的软件!

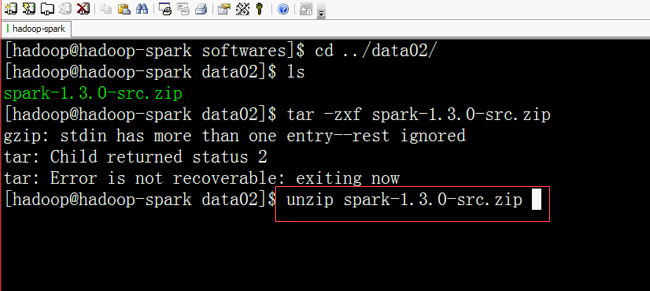

解压,

这是不行的

是因为,左侧 本地站点 这个位置选的是 计算机 ,而非具体的某个盘。

以下是默认的

<?xml version="1.0" encoding="UTF-8"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing,

software distributed under the License is distributed on an

"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

KIND, either express or implied. See the License for the

specific language governing permissions and limitations

under the License.

-->

<!--

| This is the configuration file for Maven. It can be specified at two levels:

|

| 1. User Level. This settings.xml file provides configuration for a single user,

| and is normally provided in ${user.home}/.m2/settings.xml.

|

| NOTE: This location can be overridden with the CLI option:

|

| -s /path/to/user/settings.xml

|

| 2. Global Level. This settings.xml file provides configuration for all Maven

| users on a machine (assuming they're all using the same Maven

| installation). It's normally provided in

| ${maven.home}/conf/settings.xml.

|

| NOTE: This location can be overridden with the CLI option:

|

| -gs /path/to/global/settings.xml

|

| The sections in this sample file are intended to give you a running start at

| getting the most out of your Maven installation. Where appropriate, the default

| values (values used when the setting is not specified) are provided.

|

|-->

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<!-- localRepository

| The path to the local repository maven will use to store artifacts.

|

| Default: ${user.home}/.m2/repository

<localRepository>/path/to/local/repo</localRepository>

-->

<!-- interactiveMode

| This will determine whether maven prompts you when it needs input. If set to false,

| maven will use a sensible default value, perhaps based on some other setting, for

| the parameter in question.

|

| Default: true

<interactiveMode>true</interactiveMode>

-->

<!-- offline

| Determines whether maven should attempt to connect to the network when executing a build.

| This will have an effect on artifact downloads, artifact deployment, and others.

|

| Default: false

<offline>false</offline>

-->

<!-- pluginGroups

| This is a list of additional group identifiers that will be searched when resolving plugins by their prefix, i.e.

| when invoking a command line like "mvn prefix:goal". Maven will automatically add the group identifiers

| "org.apache.maven.plugins" and "org.codehaus.mojo" if these are not already contained in the list.

|-->

<pluginGroups>

<!-- pluginGroup

| Specifies a further group identifier to use for plugin lookup.

<pluginGroup>com.your.plugins</pluginGroup>

-->

</pluginGroups>

<!-- proxies

| This is a list of proxies which can be used on this machine to connect to the network.

| Unless otherwise specified (by system property or command-line switch), the first proxy

| specification in this list marked as active will be used.

|-->

<proxies>

<!-- proxy

| Specification for one proxy, to be used in connecting to the network.

|

<proxy>

<id>optional</id>

<active>true</active>

<protocol>http</protocol>

<username>proxyuser</username>

<password>proxypass</password>

<host>proxy.host.net</host>

<port>80</port>

<nonProxyHosts>local.net|some.host.com</nonProxyHosts>

</proxy>

-->

</proxies>

<!-- servers

| This is a list of authentication profiles, keyed by the server-id used within the system.

| Authentication profiles can be used whenever maven must make a connection to a remote server.

|-->

<servers>

<!-- server

| Specifies the authentication information to use when connecting to a particular server, identified by

| a unique name within the system (referred to by the 'id' attribute below).

|

| NOTE: You should either specify username/password OR privateKey/passphrase, since these pairings are

| used together.

|

<server>

<id>deploymentRepo</id>

<username>repouser</username>

<password>repopwd</password>

</server>

-->

<!-- Another sample, using keys to authenticate.

<server>

<id>siteServer</id>

<privateKey>/path/to/private/key</privateKey>

<passphrase>optional; leave empty if not used.</passphrase>

</server>

-->

</servers>

<!-- mirrors

| This is a list of mirrors to be used in downloading artifacts from remote repositories.

|

| It works like this: a POM may declare a repository to use in resolving certain artifacts.

| However, this repository may have problems with heavy traffic at times, so people have mirrored

| it to several places.

|

| That repository definition will have a unique id, so we can create a mirror reference for that

| repository, to be used as an alternate download site. The mirror site will be the preferred

| server for that repository.

|-->

<mirrors>

<!-- mirror

| Specifies a repository mirror site to use instead of a given repository. The repository that

| this mirror serves has an ID that matches the mirrorOf element of this mirror. IDs are used

| for inheritance and direct lookup purposes, and must be unique across the set of mirrors.

|

<mirror>

<id>mirrorId</id>

<mirrorOf>repositoryId</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://my.repository.com/repo/path</url>

</mirror>

-->

</mirrors>

<!-- profiles

| This is a list of profiles which can be activated in a variety of ways, and which can modify

| the build process. Profiles provided in the settings.xml are intended to provide local machine-

| specific paths and repository locations which allow the build to work in the local environment.

|

| For example, if you have an integration testing plugin - like cactus - that needs to know where

| your Tomcat instance is installed, you can provide a variable here such that the variable is

| dereferenced during the build process to configure the cactus plugin.

|

| As noted above, profiles can be activated in a variety of ways. One way - the activeProfiles

| section of this document (settings.xml) - will be discussed later. Another way essentially

| relies on the detection of a system property, either matching a particular value for the property,

| or merely testing its existence. Profiles can also be activated by JDK version prefix, where a

| value of '1.4' might activate a profile when the build is executed on a JDK version of '1.4.2_07'.

| Finally, the list of active profiles can be specified directly from the command line.

|

| NOTE: For profiles defined in the settings.xml, you are restricted to specifying only artifact

| repositories, plugin repositories, and free-form properties to be used as configuration

| variables for plugins in the POM.

|

|-->

<profiles>

<!-- profile

| Specifies a set of introductions to the build process, to be activated using one or more of the

| mechanisms described above. For inheritance purposes, and to activate profiles via <activatedProfiles/>

| or the command line, profiles have to have an ID that is unique.

|

| An encouraged best practice for profile identification is to use a consistent naming convention

| for profiles, such as 'env-dev', 'env-test', 'env-production', 'user-jdcasey', 'user-brett', etc.

| This will make it more intuitive to understand what the set of introduced profiles is attempting

| to accomplish, particularly when you only have a list of profile id's for debug.

|

| This profile example uses the JDK version to trigger activation, and provides a JDK-specific repo.

<profile>

<id>jdk-1.4</id>

<activation>

<jdk>1.4</jdk>

</activation>

<repositories>

<repository>

<id>jdk14</id>

<name>Repository for JDK 1.4 builds</name>

<url>http://www.myhost.com/maven/jdk14</url>

<layout>default</layout>

<snapshotPolicy>always</snapshotPolicy>

</repository>

</repositories>

</profile>

-->

<!--

| Here is another profile, activated by the system property 'target-env' with a value of 'dev',

| which provides a specific path to the Tomcat instance. To use this, your plugin configuration

| might hypothetically look like:

|

| ...

| <plugin>

| <groupId>org.myco.myplugins</groupId>

| <artifactId>myplugin</artifactId>

|

| <configuration>

| <tomcatLocation>${tomcatPath}</tomcatLocation>

| </configuration>

| </plugin>

| ...

|

| NOTE: If you just wanted to inject this configuration whenever someone set 'target-env' to

| anything, you could just leave off the <value/> inside the activation-property.

|

<profile>

<id>env-dev</id>

<activation>

<property>

<name>target-env</name>

<value>dev</value>

</property>

</activation>

<properties>

<tomcatPath>/path/to/tomcat/instance</tomcatPath>

</properties>

</profile>

-->

</profiles>

<!-- activeProfiles

| List of profiles that are active for all builds.

|

<activeProfiles>

<activeProfile>alwaysActiveProfile</activeProfile>

<activeProfile>anotherAlwaysActiveProfile</activeProfile>

</activeProfiles>

-->

</settings>

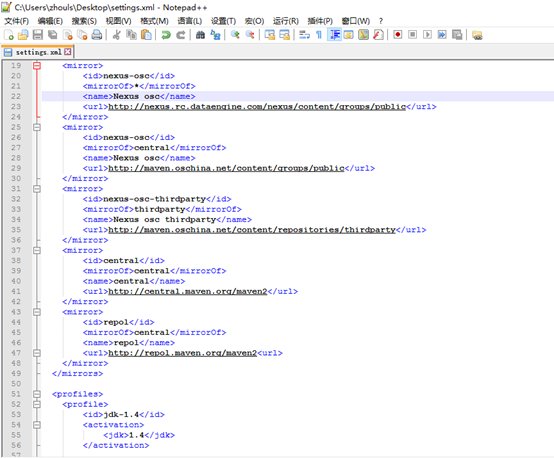

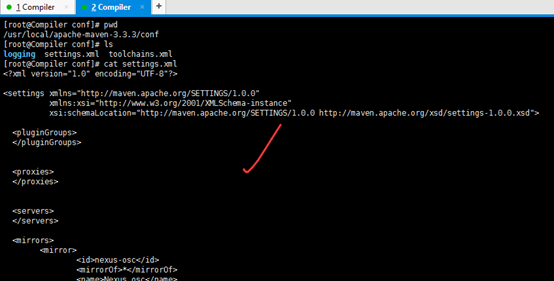

Change it to:

<?xml version="1.0" encoding="UTF-8"?>

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<pluginGroups>

</pluginGroups>

<proxies>

</proxies>

<servers>

</servers>

<mirrors>

<mirror>

<id>nexus-osc</id>

<mirrorOf>*</mirrorOf>

<name>Nexus osc</name>

<url>http://nexus.rc.dataengine.com/nexus/content/groups/public</url>

</mirror>

<mirror>

<id>nexus-osc</id>

<mirrorOf>central</mirrorOf>

<name>Nexus osc</name>

<url>http://maven.oschina.net/content/groups/public</url>

</mirror>

<mirror>

<id>nexus-osc-thirdparty</id>

<mirrorOf>thirdparty</mirrorOf>

<name>Nexus osc thirdparty</name>

<url>http://maven.oschina.net/content/repositories/thirdparty</url>

</mirror>

<mirror>

<id>central</id>

<mirrorOf>central</mirrorOf>

<name>central</name>

<url>http://central.maven.org/maven2</url>

</mirror>

<mirror>

<id>repol</id>

<mirrorOf>central</mirrorOf>

<name>repol</name>

<url>http://repo1.maven.org/maven2</url>

</mirror>

</mirrors>

<profiles>

<profile>

<id>jdk-1.4</id>

<activation>

<jdk>1.4</jdk>

</activation>

<repositories>

<repository>

<id>rc</id>

<name>rc nexus</name>

<url>http://nexus.rc.dataengine.com/nexus/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>nexus</id>

<name>local private nexus</name>

<url>http://maven.oschina.net/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>central</id>

<name>central</name>

<url>http://central.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>repol</id>

<name>repol</name>

<url>http://repo1.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>rc</id>

<name>rc nexus</name>

<url>http://nexus.rc.dataengine.com/nexus/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>nexus</id>

<name>local private nexus</name>

<url>http://maven.oschina.net/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>central</id>

<name>central</name>

<url>http://central.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>repol</id>

<name>repol</name>

<url>http://repo1.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

</profile>

</profiles>

<activeProfiles>

<activeProfile>jdk-1.4</activeProfile>

</activeProfiles>

</settings>
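If you would rather not edit the global file, a user-level settings file can be generated and passed with the -s option mentioned in the default file's comments. The following is a minimal sketch only: the function name `write_settings`, the mirror id, and the mirror URL are all placeholders, not values from this post.

```shell
#!/bin/sh
# Sketch: write a one-mirror settings.xml to a path of your choice.
# $1 = output file, $2 = mirror URL (placeholder; substitute a real mirror).
write_settings() {
  cat > "$1" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0">
  <mirrors>
    <mirror>
      <id>example-mirror</id>
      <mirrorOf>central</mirrorOf>
      <name>Example mirror of Maven Central</name>
      <url>$2</url>
    </mirror>
  </mirrors>
</settings>
EOF
}

out="${TMPDIR:-/tmp}/settings-sketch.xml"
write_settings "$out" "https://mirror.example.com/maven2"
echo "wrote $out"
```

A build can then be pointed at the generated file with `mvn -s "$out" ...`, leaving $MAVEN_HOME/conf/settings.xml untouched.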

好啦,上述,是初步的解读!!!

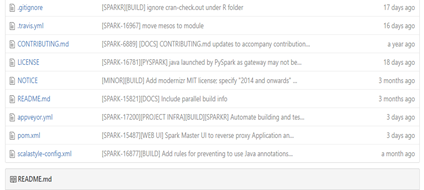

我们继续,解读spark根目录,

这样,我们就对这个目录结构,有了一个里里外外的认识。

https://github.com/apache/spark/tree/v1.6.1

好吧,到此,我对https://github.com/apache/spark/tree/v1.6.1 的解读到此结束。其他的,以后多深入研究。

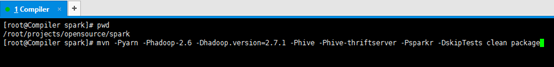

Step 7: first download the required jars with mvn

mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package # run from the top-level directory of the Spark source tree

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package

You may need to run it again:

[root@Compiler spark]# mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package

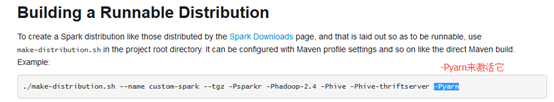

Step 8: build Spark

./make-distribution.sh --name custom-spark --tgz -Psparkr -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Pyarn # run from the top-level directory of the Spark source tree

[root@Compiler spark]# ./make-distribution.sh --name custom-spark --tgz -Psparkr -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Pyarn

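Summary:

Pay close attention!!! (Important things are said three times.)

Pay close attention!!! (Important things are said three times.)

Pay close attention!!! (Important things are said three times.)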

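In short: whenever a problem appears, fix that specific problem first, and then repeat the following.

Run the Step 7 command:

[root@Compiler spark]# mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package

Wait for it to succeed, and then run Step 8: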

[root@Compiler spark]# ./make-distribution.sh --name custom-spark --tgz -Psparkr -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Pyarn

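Tip 1: retry several times to make sure the required dependencies download properly!

The trick here is to run the command above several times. Errors about missing packages may come up along the way. Running mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package a few more times will download the missing packages automatically.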
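The "run it a few more times" advice can be scripted. Below is a minimal sketch of a retry loop; the helper name `retry` and the attempt limit are assumptions for illustration, and the mvn call is left commented out because it needs the Spark source tree and network access.

```shell
#!/bin/sh
# Sketch: re-run a command until it succeeds or the attempt budget runs out.
# $1 = maximum number of attempts, remaining arguments = the command to run.
retry() {
  max="$1"; shift
  n=1
  while [ "$n" -le "$max" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $n of $max failed" >&2
    n=$((n + 1))
  done
  return 1
}

# Example (commented out; requires the Spark source tree and a network connection):
# retry 3 mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package
```

Retrying only helps with transient download failures; an error that repeats identically on every attempt needs one of the fixes described in the following tips.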

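Tip 2: some packages may need to be downloaded manually and installed into the local repository

For example, the build reports a missing package like this: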

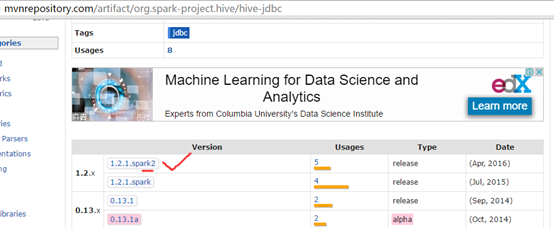

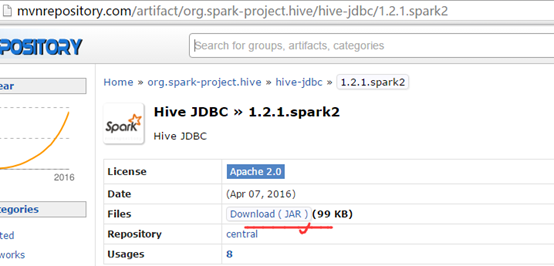

mvn install:install-file -DgroupId=org.spark-project.hive -DartifactId=hive-jdbc -Dversion=1.2.1.spark2 -Dpackaging=jar -Dfile=/root/Downloads/hive-jdbc-1.2.1.spark2.jar

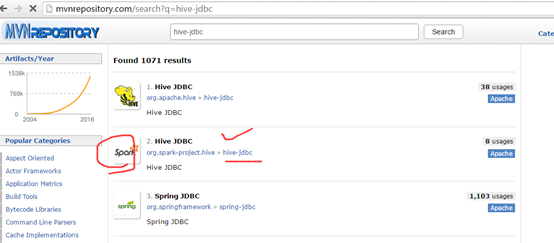

A side note: Maven has many repositories, such as OSChina (http://maven.oschina.net/) and http://mvnrepository.com/

http://mvnrepository.com/search?q=

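There are several ways to search; for example, by the groupId or the artifactId (the values passed as -DgroupId and -DartifactId).

A concrete demonstration:

-DartifactId=hive-jdbc

Find it.

-Dversion=1.2.1.spark2

Find it.

Download it.

Then upload it, for example, to this directory: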

-Dfile=/root/Downloads/hive-jdbc-1.2.1.spark2.jar
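After install:install-file succeeds, the jar sits in the local repository under a path derived from its coordinates. The sketch below computes that path, assuming the default local repository location (${user.home}/.m2/repository, as stated in the default settings.xml); the helper name `local_repo_path` is hypothetical.

```shell
#!/bin/sh
# Sketch: where a jar lands in the default local repository.
# $1 = groupId, $2 = artifactId, $3 = version.
local_repo_path() {
  # Dots in the groupId become directory separators.
  group_dir=$(echo "$1" | tr '.' '/')
  echo "${HOME}/.m2/repository/${group_dir}/$2/$3/$2-$3.jar"
}

local_repo_path org.spark-project.hive hive-jdbc 1.2.1.spark2
```

Listing that path with `ls` is a quick way to confirm the manual install worked before rerunning the build.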

技巧3:

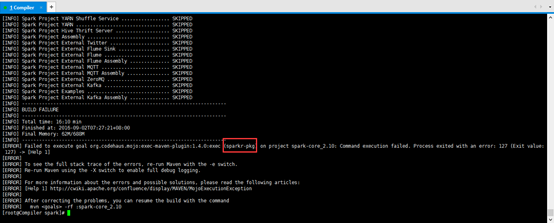

1、比如,报如下错误,sparkr-pkg,即R包

[ERROR] Failed to execute goal org.codehaus.mojo:exec-maven-plugin:1.4.0:exec (sparkr-pkg) on project spark-core_2.10: Command execution failed. Process exited with an error: 127 (Exit value: 127) -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <goals> -rf :spark-core_2.10

[root@Compiler spark]#
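Exit value 127 is what a shell returns when it cannot find the command it was asked to run, which fits a machine without R installed. A minimal pre-flight check might look like the sketch below; the helper name `have_cmd` is hypothetical, and exactly which executables the sparkr-pkg step calls is an assumption (R and Rscript are the likely candidates).

```shell
#!/bin/sh
# Sketch: check that a command is on PATH before enabling a build profile
# that shells out to it.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

for tool in R Rscript; do
  if have_cmd "$tool"; then
    echo "$tool: found"
  else
    echo "$tool: missing (a shell reports exit status 127 for a command it cannot find)"
  fi
done
```

If either tool is reported missing, install R first (as in the steps that follow) or drop -Psparkr from the build command.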

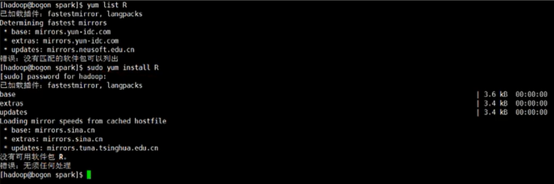

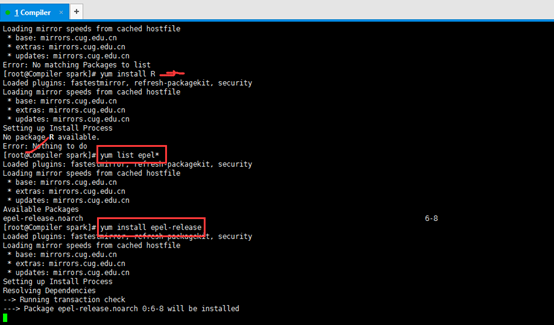

2. Try installing the R package first

[root@Compiler spark]# yum list R

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.cug.edu.cn

* extras: mirrors.cug.edu.cn

* updates: mirrors.cug.edu.cn

Error: No matching Packages to list

[root@Compiler spark]# yum install R

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.cug.edu.cn

* extras: mirrors.cug.edu.cn

* updates: mirrors.cug.edu.cn

Setting up Install Process

No package R available.

Error: Nothing to do

[root@Compiler spark]#

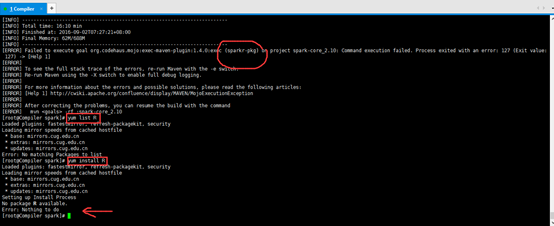

As you can see, installing this third-party package also reports that no package is available. What now?

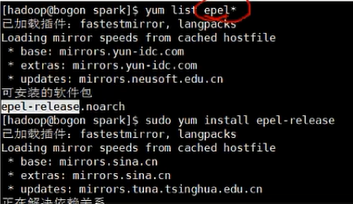

3. Install EPEL to fix this

[root@Compiler spark]# yum list epel*

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.cug.edu.cn

* extras: mirrors.cug.edu.cn

* updates: mirrors.cug.edu.cn

Available Packages

epel-release.noarch 6-8 extras

[root@Compiler spark]# yum install epel-release

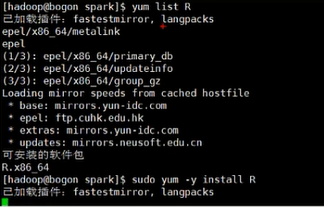

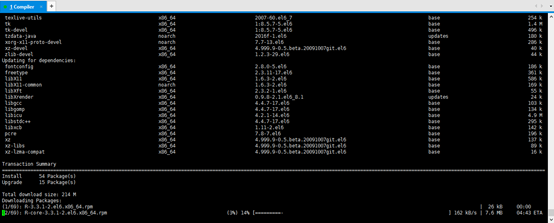

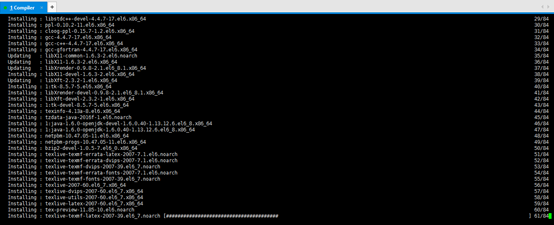

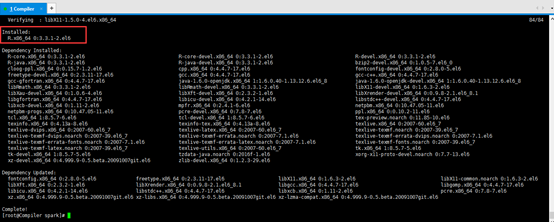

4. Install the R package again

Installed:

R.x86_64 0:3.3.1-2.el6

Dependency Installed:

R-core.x86_64 0:3.3.1-2.el6 R-core-devel.x86_64 0:3.3.1-2.el6 R-devel.x86_64 0:3.3.1-2.el6

R-java.x86_64 0:3.3.1-2.el6 R-java-devel.x86_64 0:3.3.1-2.el6 bzip2-devel.x86_64 0:1.0.5-7.el6_0

cloog-ppl.x86_64 0:0.15.7-1.2.el6 cpp.x86_64 0:4.4.7-17.el6 fontconfig-devel.x86_64 0:2.8.0-5.el6

freetype-devel.x86_64 0:2.3.11-17.el6 gcc.x86_64 0:4.4.7-17.el6 gcc-c++.x86_64 0:4.4.7-17.el6

gcc-gfortran.x86_64 0:4.4.7-17.el6 java-1.6.0-openjdk.x86_64 1:1.6.0.40-1.13.12.6.el6_8 java-1.6.0-openjdk-devel.x86_64 1:1.6.0.40-1.13.12.6.el6_8

libRmath.x86_64 0:3.3.1-2.el6 libRmath-devel.x86_64 0:3.3.1-2.el6 libX11-devel.x86_64 0:1.6.3-2.el6

libXau-devel.x86_64 0:1.0.6-4.el6 libXft-devel.x86_64 0:2.3.2-1.el6 libXrender-devel.x86_64 0:0.9.8-2.1.el6_8.1

libgfortran.x86_64 0:4.4.7-17.el6 libicu-devel.x86_64 0:4.2.1-14.el6 libstdc++-devel.x86_64 0:4.4.7-17.el6

libxcb-devel.x86_64 0:1.11-2.el6 mpfr.x86_64 0:2.4.1-6.el6 netpbm.x86_64 0:10.47.05-11.el6

netpbm-progs.x86_64 0:10.47.05-11.el6 pcre-devel.x86_64 0:7.8-7.el6 ppl.x86_64 0:0.10.2-11.el6

tcl.x86_64 1:8.5.7-6.el6 tcl-devel.x86_64 1:8.5.7-6.el6 tex-preview.noarch 0:11.85-10.el6

texinfo.x86_64 0:4.13a-8.el6 texinfo-tex.x86_64 0:4.13a-8.el6 texlive.x86_64 0:2007-60.el6_7

texlive-dvips.x86_64 0:2007-60.el6_7 texlive-latex.x86_64 0:2007-60.el6_7 texlive-texmf.noarch 0:2007-39.el6_7

texlive-texmf-dvips.noarch 0:2007-39.el6_7 texlive-texmf-errata.noarch 0:2007-7.1.el6 texlive-texmf-errata-dvips.noarch 0:2007-7.1.el6

texlive-texmf-errata-fonts.noarch 0:2007-7.1.el6 texlive-texmf-errata-latex.noarch 0:2007-7.1.el6 texlive-texmf-fonts.noarch 0:2007-39.el6_7

texlive-texmf-latex.noarch 0:2007-39.el6_7 texlive-utils.x86_64 0:2007-60.el6_7 tk.x86_64 1:8.5.7-5.el6

tk-devel.x86_64 1:8.5.7-5.el6 tzdata-java.noarch 0:2016f-1.el6 xorg-x11-proto-devel.noarch 0:7.7-13.el6

xz-devel.x86_64 0:4.999.9-0.5.beta.20091007git.el6 zlib-devel.x86_64 0:1.2.3-29.el6

Dependency Updated:

fontconfig.x86_64 0:2.8.0-5.el6 freetype.x86_64 0:2.3.11-17.el6 libX11.x86_64 0:1.6.3-2.el6 libX11-common.noarch 0:1.6.3-2.el6

libXft.x86_64 0:2.3.2-1.el6 libXrender.x86_64 0:0.9.8-2.1.el6_8.1 libgcc.x86_64 0:4.4.7-17.el6 libgomp.x86_64 0:4.4.7-17.el6

libicu.x86_64 0:4.2.1-14.el6 libstdc++.x86_64 0:4.4.7-17.el6 libxcb.x86_64 0:1.11-2.el6 pcre.x86_64 0:7.8-7.el6

xz.x86_64 0:4.999.9-0.5.beta.20091007git.el6 xz-libs.x86_64 0:4.999.9-0.5.beta.20091007git.el6 xz-lzma-compat.x86_64 0:4.999.9-0.5.beta.20091007git.el6

Complete!

[root@Compiler spark]#

R is now installed successfully!
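Exit value 127 in the Maven error above is the conventional shell status for "command not found", which fits the missing R installation. As a minimal sketch, the helper below reports which commands are absent from the PATH before the build is rerun with -Psparkr. That the sparkr-pkg step needs exactly R and Rscript is an assumption here, and the function names are hypothetical.

```shell
# Return success if the named command can be found on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Print each of the given commands that is NOT on PATH, one per line.
# Assumption: R and Rscript are what the sparkr-pkg step calls.
missing_tools() {
    for tool in "$@"; do
        have_cmd "$tool" || printf '%s\n' "$tool"
    done
}

# Usage (prints nothing when both are installed):
#   missing_tools R Rscript
```

If the output is empty, the exit 127 failure is unlikely to come from a missing R binary and the full Maven log is the next place to look.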

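Tip 4:

For example, suppose the build reports an error like the following.

There are several ways to search a repository for the artifact; for example, by its groupId or artifactId (the values passed as -DgroupId and -DartifactId).

Here the error says that version 1.0.1 is required, but the repository in use does not have 1.0.1. One option is to switch to another repository, such as the OSChina (开源中国) mirror. But what if the other repositories do not have this version either?

In that case, one fix is to edit pom.xml and change the dependency version to 1.0.2.

A convenient way to do this is to use FileZilla, just as with settings.xml: download the file and edit it locally.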

http://www.cnblogs.com/zlslch/p/5843141.html

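Alternatively, download the jar yourself (for example with Xunlei), or fetch it directly on the cluster node into a directory such as /root/Downloads, and install it into the local Maven repository by hand.

This is also a good moment to learn where the -DgroupId and -DartifactId values come from: they are the groupId and artifactId of the dependency's Maven coordinates.

mvn install:install-file -DgroupId=org.eclipse.paho -DartifactId=org.eclipse.paho.client.mqttv3 -Dversion=1.0.1 -Dpackaging=jar -Dfile=/root/Downloads/org.eclipse.paho.client.mqttv3-1.0.1.jar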
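To confirm that install:install-file did what was intended, it helps to know where the artifact should land. The sketch below derives the expected path from the coordinates, assuming the default local repository at ~/.m2/repository (a localRepository override in settings.xml would change it). The function name is hypothetical.

```shell
# Print where Maven's default repository layout stores an artifact.
# $1 groupId, $2 artifactId, $3 version, $4 packaging (defaults to jar)
# Only the groupId has its dots turned into directory separators.
m2_artifact_path() {
    group_dir=$(printf '%s' "$1" | tr '.' '/')
    printf '%s/.m2/repository/%s/%s/%s/%s-%s.%s\n' \
        "$HOME" "$group_dir" "$2" "$3" "$2" "$3" "${4:-jar}"
}

# Usage:
#   ls -l "$(m2_artifact_path org.eclipse.paho org.eclipse.paho.client.mqttv3 1.0.1)"
```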

Tip 5:

As shown in the screenshot above, the build fails and reports that the spark-test-tags_2.10 package is missing.

[root@Compiler Downloads]# pwd

/root/Downloads

[root@Compiler Downloads]# ls

[root@Compiler Downloads]#

wget -c http://mvnrepository.com/artifact/net.alchim31.maven/scala-maven-plugin/3.2.2

--2016-09-02 09:56:03-- http://mvnrepository.com/artifact/net.alchim31.maven/scala-maven-plugin/3.2.2

Resolving mvnrepository.com... 107.23.60.39, 52.86.107.201

Connecting to mvnrepository.com|107.23.60.39|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 31792 (31K) [text/html]

Saving to: “3.2.2”

100%[=====================================================================================================================================================>] 31,792 29.8K/s in 1.0s

2016-09-02 09:56:07 (29.8 KB/s) - “3.2.2” saved [31792/31792]

[root@Compiler Downloads]# ls

3.2.2

[root@Compiler Downloads]# ll

total 32

-rw-r--r--. 1 root root 31792 Sep 2 09:56 3.2.2

[root@Compiler Downloads]#
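Note that the wget log above reports `[text/html]` for the saved file, so what was downloaded is the mvnrepository.com web page rather than the plugin jar. A jar is a ZIP archive and starts with the bytes "PK", which gives a cheap sanity check before handing a file to install:install-file. This is only a sketch: the function name is hypothetical, and it relies on `head -c`, which GNU and BSD head both provide.

```shell
# Succeed only if the file starts with the two-byte ZIP signature "PK",
# which every non-empty jar (a ZIP archive) carries.
looks_like_jar() {
    [ "$(head -c 2 "$1" 2>/dev/null)" = "PK" ]
}

# Usage:
#   looks_like_jar /root/Downloads/3.2.2 || echo "not a jar: download the real artifact first"
```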

Look up its groupId and artifactId:

<!-- https://mvnrepository.com/artifact/net.alchim31.maven/scala-maven-plugin -->

<dependency>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.2.2</version>

</dependency>

So:

mvn install:install-file -DgroupId=net.alchim31.maven -DartifactId=scala-maven-plugin -Dversion=3.2.2 -Dpackaging=jar -Dfile=/root/Downloads/3.2.2

[INFO] Spark Project Networking ........................... SKIPPED

[INFO] Spark Project Shuffle Streaming Service ............ SKIPPED

[INFO] Spark Project Unsafe ............................... SKIPPED

[INFO] Spark Project Core ................................. SKIPPED

[INFO] Spark Project Bagel ................................ SKIPPED

[INFO] Spark Project GraphX ............................... SKIPPED

[INFO] Spark Project Streaming ............................ SKIPPED

[INFO] Spark Project Catalyst ............................. SKIPPED

[INFO] Spark Project SQL .................................. SKIPPED

[INFO] Spark Project ML Library ........................... SKIPPED

[INFO] Spark Project Tools ................................ SKIPPED

[INFO] Spark Project Hive ................................. SKIPPED

[INFO] Spark Project Docker Integration Tests ............. SKIPPED

[INFO] Spark Project REPL ................................. SKIPPED

[INFO] Spark Project Assembly ............................. SKIPPED

[INFO] Spark Project External Twitter ..................... SKIPPED

[INFO] Spark Project External Flume Sink .................. SKIPPED

[INFO] Spark Project External Flume ....................... SKIPPED

[INFO] Spark Project External Flume Assembly .............. SKIPPED

[INFO] Spark Project External MQTT ........................ SKIPPED

[INFO] Spark Project External MQTT Assembly ............... SKIPPED

[INFO] Spark Project External ZeroMQ ...................... SKIPPED

[INFO] Spark Project External Kafka ....................... SKIPPED

[INFO] Spark Project Examples ............................. SKIPPED

[INFO] Spark Project External Kafka Assembly .............. SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 11.905 s

[INFO] Finished at: 2016-09-02T10:04:14+08:00

[INFO] Final Memory: 28M/76M

[INFO] ------------------------------------------------------------------------

[root@Compiler spark]#

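As this shows, the error has been resolved.

To summarize: each time a problem appears, fix that specific problem first, then rerun the build as shown below.

What next? Continue: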

[root@Compiler spark]# mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -DskipTests clean package

Here are some typical problems.

The default Scala version in the pom.xml files has to be changed:

[root@Compiler spark]# ./dev/change-scala-version.sh 2.11

./dev/../sql/hive/pom.xml

./dev/../sql/core/pom.xml

./dev/../sql/catalyst/pom.xml

./dev/../sql/hive-thriftserver/pom.xml

./dev/../streaming/pom.xml

./dev/../assembly/pom.xml

./dev/../core/pom.xml

./dev/../network/yarn/pom.xml

./dev/../network/shuffle/pom.xml

./dev/../network/common/pom.xml

./dev/../yarn/pom.xml

./dev/../docker-integration-tests/pom.xml

./dev/../graphx/pom.xml

./dev/../repl/pom.xml

./dev/../tags/pom.xml

./dev/../launcher/pom.xml

./dev/../dev/audit-release/maven_app_core/pom.xml

./dev/../dev/audit-release/blank_maven_build/pom.xml

./dev/../external/kafka-assembly/pom.xml

./dev/../external/flume-sink/pom.xml

./dev/../external/flume-assembly/pom.xml

./dev/../external/kafka/pom.xml

./dev/../external/zeromq/pom.xml

./dev/../external/mqtt/pom.xml

./dev/../external/mqtt-assembly/pom.xml

./dev/../external/twitter/pom.xml

./dev/../external/flume/pom.xml

./dev/../bagel/pom.xml

./dev/../tools/pom.xml

./dev/../pom.xml

./dev/../unsafe/pom.xml

./dev/../mllib/pom.xml

./dev/../extras/java8-tests/pom.xml

./dev/../extras/kinesis-asl/pom.xml

./dev/../extras/kinesis-asl-assembly/pom.xml

./dev/../extras/spark-ganglia-lgpl/pom.xml

./dev/../examples/pom.xml

./dev/../docs/_plugins/copy_api_dirs.rb

[root@Compiler spark]#

[root@Compiler spark]# mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -Dscala-2.11 -DskipTests clean package

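So always consult the official documentation.

Spark 1.6.1 builds with Scala 2.10 by default. To build it with Scala 2.11,

first update the pom.xml files accordingly, then pass -Dscala-2.11 to Maven: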

[root@Compiler spark]# ./dev/change-scala-version.sh 2.11

[root@Compiler spark]# mvn -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver -Psparkr -Dscala-2.11 -DskipTests clean package

The build has now been switched from Scala 2.10 to Scala 2.11.
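The list of pom.xml files printed by change-scala-version.sh suggests that it rewrites each of them in place. A rough sketch of that kind of rewrite, applied to text on standard input, is below. It illustrates the idea under my own assumptions and is not the script's actual implementation; the function name is hypothetical.

```shell
# Rewrite the Scala suffix of artifactIds read from stdin.
# $1: version to replace (e.g. 2.10), $2: new version (e.g. 2.11)
retarget_scala_suffix() {
    # Escape the dot so "2.10" is matched literally by sed.
    from=$(printf '%s' "$1" | sed 's/\./\\./g')
    sed "s/\(artifactId.*\)_$from/\1_$2/g"
}

# Usage:
#   retarget_scala_suffix 2.10 2.11 < core/pom.xml | grep artifactId
```

Lines that do not carry the old suffix pass through unchanged, so running it twice with the same arguments gives the same result as running it once.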

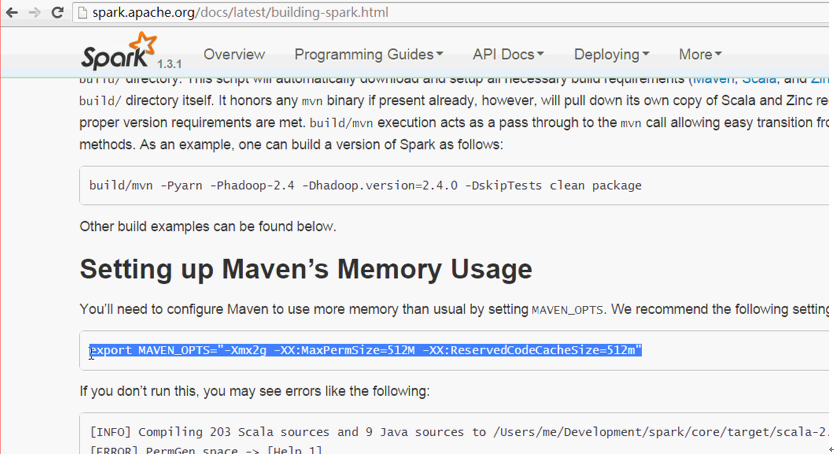

[root@Compiler spark]# export MAVEN_OPTS="-Xmx4g -XX:MaxPermSize=1g -XX:ReservedCodeCacheSize=1g"
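The MaxPermSize setting only matters on Java 7 and earlier: PermGen was removed in Java 8, where the JVM ignores the option and prints a warning. A small sketch that picks the options by Java major version follows. The sizes are simply the ones used in this post, not tuned values, and the function name is hypothetical.

```shell
# Print a MAVEN_OPTS value for the given Java major version (7, 8, ...).
maven_opts_for_java() {
    if [ "$1" -ge 8 ]; then
        # PermGen no longer exists from Java 8 on, so MaxPermSize is dropped.
        printf '%s\n' "-Xmx4g -XX:ReservedCodeCacheSize=1g"
    else
        printf '%s\n' "-Xmx4g -XX:MaxPermSize=1g -XX:ReservedCodeCacheSize=1g"
    fi
}

# Usage:
#   export MAVEN_OPTS="$(maven_opts_for_java 7)"
```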

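I will not go through every case here. Similar problems are all solved the same way, until every required package has been downloaded. After that, the build finally succeeds!

With a fast connection the build takes about an hour; with a slow one, several hours.

The contents of the settings.xml file are: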

<?xml version="1.0" encoding="UTF-8"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing,

software distributed under the License is distributed on an

"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

KIND, either express or implied. See the License for the

specific language governing permissions and limitations

under the License.

-->

<!--

| This is the configuration file for Maven. It can be specified at two levels:

|

| 1. User Level. This settings.xml file provides configuration for a single user,

| and is normally provided in ${user.home}/.m2/settings.xml.

|

| NOTE: This location can be overridden with the CLI option:

|

| -s /path/to/user/settings.xml

|

| 2. Global Level. This settings.xml file provides configuration for all Maven

| users on a machine (assuming they're all using the same Maven

| installation). It's normally provided in

| ${maven.home}/conf/settings.xml.

|

| NOTE: This location can be overridden with the CLI option:

|

| -gs /path/to/global/settings.xml

|

| The sections in this sample file are intended to give you a running start at

| getting the most out of your Maven installation. Where appropriate, the default

| values (values used when the setting is not specified) are provided.

|

|-->

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<!-- localRepository

| The path to the local repository maven will use to store artifacts.

|

| Default: ${user.home}/.m2/repository

<localRepository>/path/to/local/repo</localRepository>

-->

<!-- interactiveMode

| This will determine whether maven prompts you when it needs input. If set to false,

| maven will use a sensible default value, perhaps based on some other setting, for

| the parameter in question.

|

| Default: true

<interactiveMode>true</interactiveMode>

-->

<!-- offline

| Determines whether maven should attempt to connect to the network when executing a build.

| This will have an effect on artifact downloads, artifact deployment, and others.

|

| Default: false

<offline>false</offline>

-->

<!-- pluginGroups

| This is a list of additional group identifiers that will be searched when resolving plugins by their prefix, i.e.

| when invoking a command line like "mvn prefix:goal". Maven will automatically add the group identifiers

| "org.apache.maven.plugins" and "org.codehaus.mojo" if these are not already contained in the list.

|-->

<pluginGroups>

<!-- pluginGroup

| Specifies a further group identifier to use for plugin lookup.

<pluginGroup>com.your.plugins</pluginGroup>

-->

</pluginGroups>

<!-- proxies

| This is a list of proxies which can be used on this machine to connect to the network.

| Unless otherwise specified (by system property or command-line switch), the first proxy

| specification in this list marked as active will be used.

|-->

<proxies>

<!-- proxy

| Specification for one proxy, to be used in connecting to the network.

|

<proxy>

<id>optional</id>

<active>true</active>

<protocol>http</protocol>

<username>proxyuser</username>

<password>proxypass</password>

<host>proxy.host.net</host>

<port>80</port>

<nonProxyHosts>local.net|some.host.com</nonProxyHosts>

</proxy>

-->

</proxies>

<!-- servers

| This is a list of authentication profiles, keyed by the server-id used within the system.

| Authentication profiles can be used whenever maven must make a connection to a remote server.

|-->

<servers>

<!-- server

| Specifies the authentication information to use when connecting to a particular server, identified by

| a unique name within the system (referred to by the 'id' attribute below).

|

| NOTE: You should either specify username/password OR privateKey/passphrase, since these pairings are

| used together.

|

<server>

<id>deploymentRepo</id>

<username>repouser</username>

<password>repopwd</password>

</server>

-->

<!-- Another sample, using keys to authenticate.

<server>

<id>siteServer</id>

<privateKey>/path/to/private/key</privateKey>

<passphrase>optional; leave empty if not used.</passphrase>

</server>

-->

</servers>

<!-- mirrors

| This is a list of mirrors to be used in downloading artifacts from remote repositories.

|

| It works like this: a POM may declare a repository to use in resolving certain artifacts.

| However, this repository may have problems with heavy traffic at times, so people have mirrored

| it to several places.

|

| That repository definition will have a unique id, so we can create a mirror reference for that

| repository, to be used as an alternate download site. The mirror site will be the preferred

| server for that repository.

|-->

<mirrors>

<!-- mirror

| Specifies a repository mirror site to use instead of a given repository. The repository that

| this mirror serves has an ID that matches the mirrorOf element of this mirror. IDs are used

| for inheritance and direct lookup purposes, and must be unique across the set of mirrors.

|

<mirror>

<id>mirrorId</id>

<mirrorOf>repositoryId</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://my.repository.com/repo/path</url>

</mirror>

-->

<mirror>

<id>osc</id>

<mirrorOf>central</mirrorOf>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

</mirror>

<mirror>

<id>osc_thirdparty</id>

<mirrorOf>thirdparty</mirrorOf>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

</mirror>

</mirrors>

<!-- profiles

| This is a list of profiles which can be activated in a variety of ways, and which can modify

| the build process. Profiles provided in the settings.xml are intended to provide local machine-

| specific paths and repository locations which allow the build to work in the local environment.

|

| For example, if you have an integration testing plugin - like cactus - that needs to know where

| your Tomcat instance is installed, you can provide a variable here such that the variable is

| dereferenced during the build process to configure the cactus plugin.

|

| As noted above, profiles can be activated in a variety of ways. One way - the activeProfiles

| section of this document (settings.xml) - will be discussed later. Another way essentially

| relies on the detection of a system property, either matching a particular value for the property,

| or merely testing its existence. Profiles can also be activated by JDK version prefix, where a

| value of '1.4' might activate a profile when the build is executed on a JDK version of '1.4.2_07'.

| Finally, the list of active profiles can be specified directly from the command line.

|

| NOTE: For profiles defined in the settings.xml, you are restricted to specifying only artifact

| repositories, plugin repositories, and free-form properties to be used as configuration

| variables for plugins in the POM.

|

|-->

<profiles>

<!-- profile

| Specifies a set of introductions to the build process, to be activated using one or more of the

| mechanisms described above. For inheritance purposes, and to activate profiles via <activatedProfiles/>

| or the command line, profiles have to have an ID that is unique.

|

| An encouraged best practice for profile identification is to use a consistent naming convention

| for profiles, such as 'env-dev', 'env-test', 'env-production', 'user-jdcasey', 'user-brett', etc.

| This will make it more intuitive to understand what the set of introduced profiles is attempting

| to accomplish, particularly when you only have a list of profile id's for debug.

|

| This profile example uses the JDK version to trigger activation, and provides a JDK-specific repo.

<profile>

<id>jdk-1.4</id>

<activation>

<jdk>1.4</jdk>

</activation>

<repositories>

<repository>

<id>jdk14</id>

<name>Repository for JDK 1.4 builds</name>

<url>http://www.myhost.com/maven/jdk14</url>

<layout>default</layout>

<snapshotPolicy>always</snapshotPolicy>

</repository>

</repositories>

</profile>

-->

<!--

| Here is another profile, activated by the system property 'target-env' with a value of 'dev',

| which provides a specific path to the Tomcat instance. To use this, your plugin configuration

| might hypothetically look like:

|

| ...

| <plugin>

| <groupId>org.myco.myplugins</groupId>

| <artifactId>myplugin</artifactId>

|

| <configuration>

| <tomcatLocation>${tomcatPath}</tomcatLocation>

| </configuration>

| </plugin>

| ...

|

| NOTE: If you just wanted to inject this configuration whenever someone set 'target-env' to

| anything, you could just leave off the <value/> inside the activation-property.

|

<profile>

<id>env-dev</id>

<activation>

<property>

<name>target-env</name>

<value>dev</value>

</property>

</activation>

<properties>

<tomcatPath>/path/to/tomcat/instance</tomcatPath>

</properties>

</profile>

-->

<profile>

<id>jdk-1.4</id>

<activation>

<jdk>1.4</jdk>

</activation>

<repositories>

<repository>

<id>nexus</id>

<name>local private nexus</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>nexus</id>

<name>local private nexus</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

</profile>

<profile>

<id>osc</id>

<activation>

<activeByDefault>true</activeByDefault>

</activation>

<repositories>

<repository>

<id>osc</id>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

</repository>

<repository>

<id>osc_thirdparty</id>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>osc</id>

<url>http://maven.aliyun.com/nexus/content/repositories/central</url>

</pluginRepository>

</pluginRepositories>

</profile>

</profiles>

<!-- activeProfiles

| List of profiles that are active for all builds.

|

<activeProfiles>

<activeProfile>alwaysActiveProfile</activeProfile>

<activeProfile>anotherAlwaysActiveProfile</activeProfile>

</activeProfiles>

-->

</settings>

Another settings.xml variant is:

<?xml version="1.0" encoding="UTF-8"?>

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<pluginGroups>

</pluginGroups>

<proxies>

</proxies>

<servers>

</servers>

<mirrors>

<mirror>

<id>nexus-rc</id>

<mirrorOf>*</mirrorOf>

<name>Nexus osc</name>

<url>http://nexus.rc.dataengine.com/nexus/content/groups/public</url>

</mirror>

<mirror>

<id>nexus-osc</id>

<mirrorOf>central</mirrorOf>

<name>Nexus osc</name>

<url>http://maven.oschina.net/content/groups/public</url>

</mirror>

<mirror>

<id>nexus-osc-thirdparty</id>

<mirrorOf>thirdparty</mirrorOf>

<name>Nexus osc thirdparty</name>

<url>http://maven.oschina.net/content/repositories/thirdparty</url>

</mirror>

<mirror>

<id>central</id>

<mirrorOf>central</mirrorOf>

<name>central</name>

<url>http://central.maven.org/maven2</url>

</mirror>

<mirror>

<id>repol</id>

<mirrorOf>central</mirrorOf>

<name>repol</name>

<url>http://repo1.maven.org/maven2</url>

</mirror>

</mirrors>

<profiles>

<profile>

<id>jdk-1.7</id>

<activation>

<jdk>1.7</jdk>

</activation>

<repositories>

<repository>

<id>rc</id>

<name>rc nexus</name>

<url>http://nexus.rc.dataengine.com/nexus/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>nexus</id>

<name>local private nexus</name>

<url>http://maven.oschina.net/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>central</id>

<name>central</name>

<url>http://central.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>repol</id>

<name>repol</name>

<url>http://repo1.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>rc</id>

<name>rc nexus</name>

<url>http://nexus.rc.dataengine.com/nexus/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>nexus</id>

<name>local private nexus</name>

<url>http://maven.oschina.net/content/groups/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>central</id>

<name>central</name>

<url>http://central.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>repol</id>

<name>repol</name>

<url>http://repo1.maven.org/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

</profile>

</profiles>

</settings>
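The comments in the stock settings.xml shown earlier state that mirror ids must be unique across the set of mirrors, which is easy to violate when mirrors are copied and pasted. The sketch below lists any id that occurs more than once inside the mirrors section. It assumes one XML element per line, as in the listings above, and is not a real XML parser; the function name is hypothetical.

```shell
# Print every <id> value that appears more than once between
# <mirrors> and </mirrors> in the given settings.xml file.
duplicate_mirror_ids() {
    sed -n '/<mirrors>/,/<\/mirrors>/p' "$1" |
        sed -n 's/.*<id>\([^<]*\)<\/id>.*/\1/p' |
        sort | uniq -d
}

# Usage (no output means every mirror id is unique):
#   duplicate_mirror_ids "$HOME/.m2/settings.xml"
```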

Thanks to the following blog post, which I referred to:

https://www.iteblog.com/archives/999

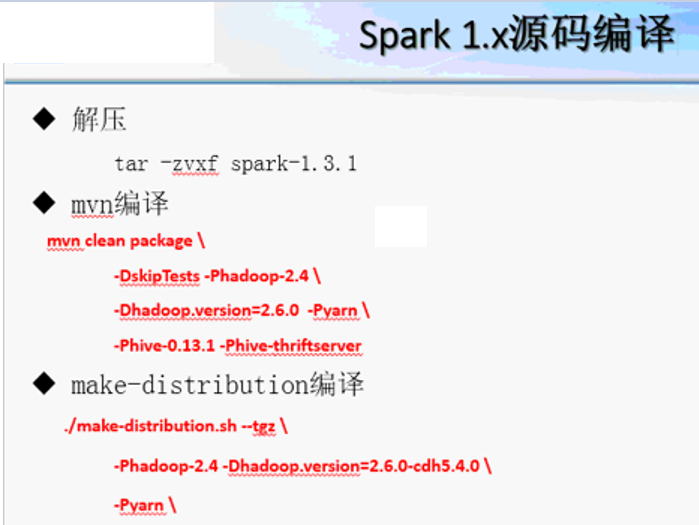

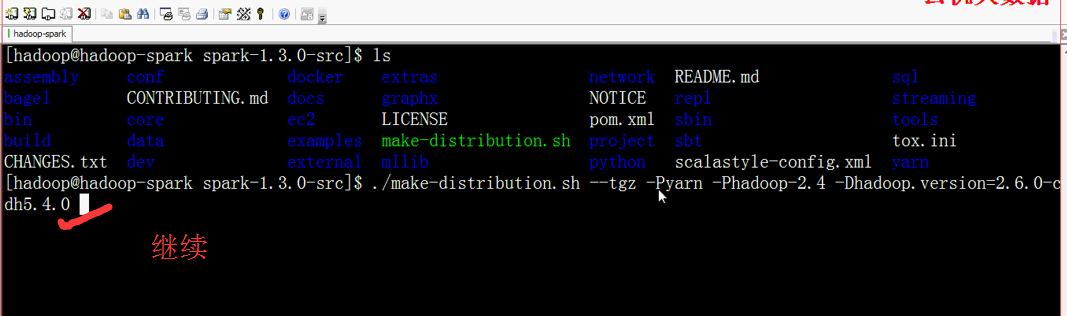

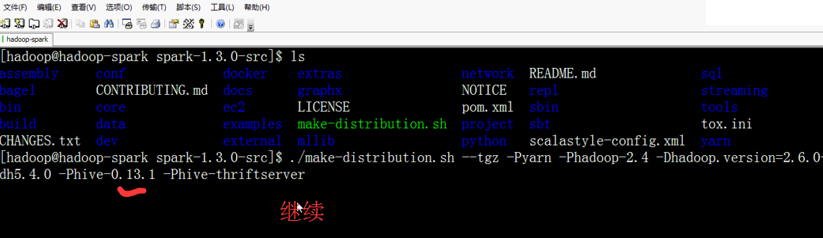

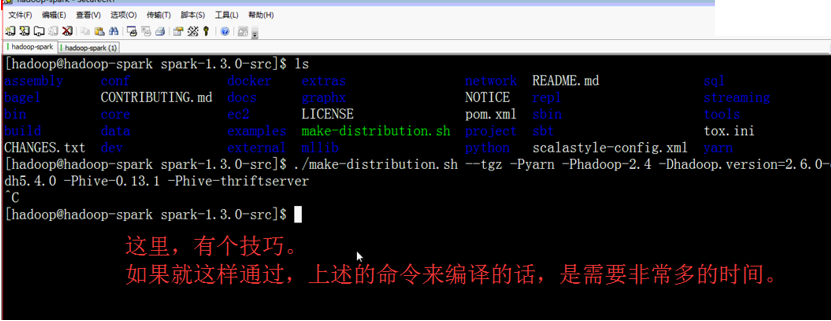

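3. Packaging build with make-distribution.sh

Let's begin.

Why compile Spark ourselves?

Answer: because in real production environments, the Hadoop and Hive versions in use often differ from the ones the prebuilt Spark packages were built against.

The prebuilt Spark packages on the official site are built only for certain Hadoop and Hive versions.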

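SBT (originally short for Simple Build Tool) is a build tool designed primarily for Scala.

Maven is written in Java and comes from the Java ecosystem.

This finally made it clear to me

why the source tree contains a pom.xml file: it is there for the Maven build.

Good. Here we will use the make-distribution.sh approach.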

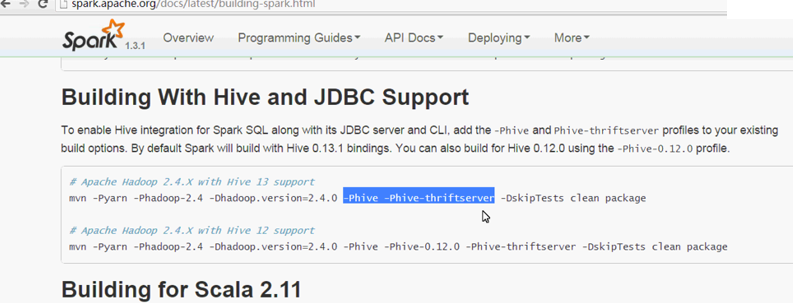

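If we built with Maven directly, we would need to watch out for the following issue.

But here we build through the packaging script, so the memory issue above does not need separate attention.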

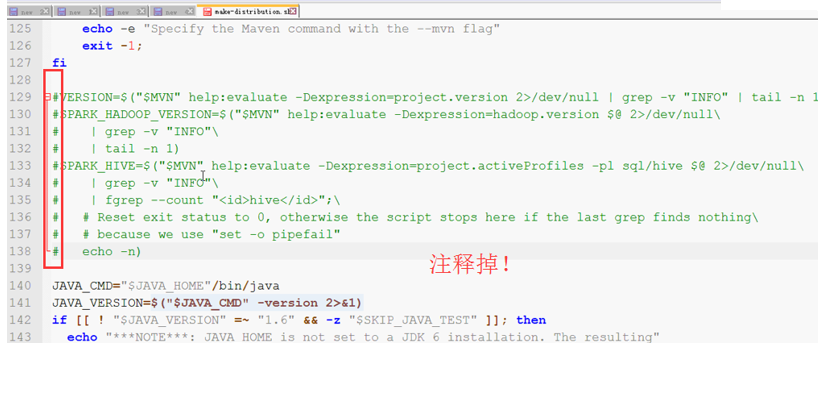

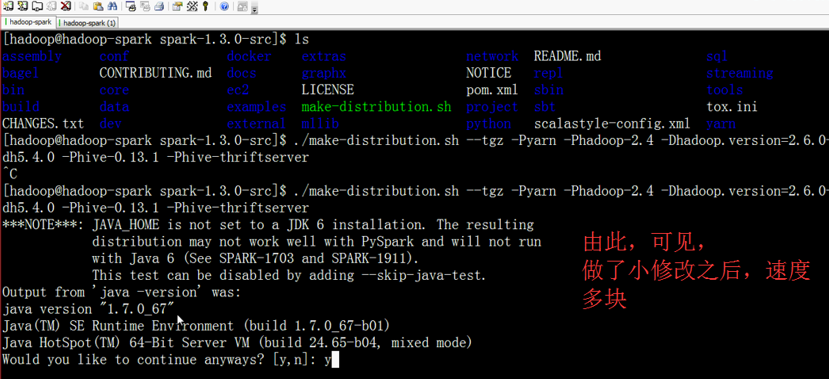

Next, a closer look at the make-distribution.sh file:

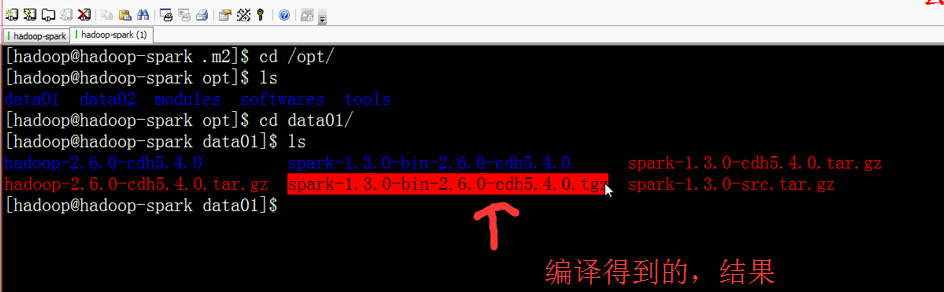
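As a sketch of what an invocation might look like, the helper below only prints a make-distribution.sh command line and the archive name that --tgz is expected to produce in Spark 1.x (spark-VERSION-bin-NAME.tgz). The profiles are the ones used for the Maven build earlier in this post; that they suit your cluster is an assumption, and the distribution name custom-spark and both function names are hypothetical.

```shell
# Print (do not run) a make-distribution.sh command line.
# $1: distribution name passed to --name (hypothetical example: custom-spark)
dist_command() {
    printf '%s\n' "./make-distribution.sh --name $1 --tgz -Pyarn -Phadoop-2.6 -Dhadoop.version=2.7.1 -Phive -Phive-thriftserver"
}

# Name of the archive that --tgz is expected to produce.
# $1: Spark version, $2: the --name value
dist_tarball_name() {
    printf 'spark-%s-bin-%s.tgz\n' "$1" "$2"
}

# Usage, from the top of the Spark source tree:
#   dist_command custom-spark        # review the command, then run it by hand
#   dist_tarball_name 1.6.1 custom-spark
```

Printing the command first keeps a long build from starting with a mistyped profile.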

All done!

You can also follow my personal blogs:

http://www.cnblogs.com/zlslch/ and http://www.cnblogs.com/lchzls/ and http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html

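Author: 大数据和人工智能躺过的坑

Source: http://www.cnblogs.com/zlslch/

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a clearly visible link to the original article must be provided on the page; otherwise the author reserves the right to pursue legal liability.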
