使用Maven将Hadoop2.2.0源码编译成Eclipse项目

编译环境:

OS:RHEL 6.3 x64

Maven:3.2.1

Eclipse:Juno SR2 Linux x64

libprotoc:2.5.0

JDK:1.7.0_51 x64

步骤:

1. 下载Hadoop2.2.0源码包 http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.2.0/hadoop-2.2.0-src.tar.gz

2. 解压缩到Eclipse的workspace中。这里最好解压缩到workspace中,编译完成后直接导入就好了,不用移动,移动的话容易造成依赖关系的确实,我比较懒,所以就放在workspace中编译,省的还要build path。

3. 安装Maven。Hadoop前期使用的是ant+ivy,后面改成了Maven,在源码包的BUILD文件中有写。下载Maven http://apache.fayea.com/apache-mirror/maven/maven-3/3.2.1/binaries/apache-maven-3.2.1-bin.tar.gz,解压缩到安装目录,在.bashrc中添加以下内容

export MAVEN_HOME=/root/Software/Maven321

export PATH=/root/Software/Maven321/bin:$PATH

在控制台中输入mvn -version,打印以下信息则安装成功

Apache Maven 3.2.1 (ea8b2b07643dbb1b84b6d16e1f08391b666bc1e9; 2014-02-15T01:37:52+08:00)

Maven home: /root/Software/maven321

Java version: 1.7.0_51, vendor: Oracle Corporation

Java home: /usr/java/jdk1.7.0_51/jre

Default locale: zh_CN, platform encoding: UTF-8

OS name: "linux", version: "2.6.32-431.5.1.el6.x86_64", arch: "amd64", family: "unix"

4. 安装protobuf-2.5.0,首先安装一下gcc

yum install gcc

yum install gcc-c++

然后下载protocbuf https://protobuf.googlecode.com/files/protobuf-2.5.0.tar.gz 下载完成后解压到安装目录。进入安装目录执行如下命令进行安装

./configure

make

make check

make install

安装完成后在控制台输入protoc --version,有以下输出则安装成功。安装protoc的原因是要用到它啦,并且官方文档里貌似也没提到,就是编译的时候遇到错误了。并且这里是要编译Eclipse项目,如果要编译成可执行的Hadoop的话需要安装更多的软件进行支持,详情可以见这里 http://my.oschina.net/cloudcoder/blog/192224

[root@dell ~]# protoc --version

libprotoc 2.5.0

5. 下面就可以开始编译了,进入到Hadoop2.2.0的源码包目录里,ls查看一下,可以看到hadoop-maven-plugins文件夹,先进入到这个文件家,执行mvn install。过程有点长,如果显示说有jar包下载不下来就多执行几次,总有下载下来的一天。显示BUILD SUCCESS后则返回到hadoop-2.2.0-src的根目录下,执行mvn eclipse:eclipse –DskipTests,生成Eclipse项目。同样,显示BUILD SUCCESS后就是编译成功了。

[root@dell hadoop-2.2.0-src]# ls

BUILDING.txt hadoop-hdfs-project hadoop-tools

dev-support hadoop-mapreduce-project hadoop-yarn-project

hadoop-assemblies hadoop-maven-plugins LICENSE.txt

hadoop-client hadoop-minicluster NOTICE.txt

hadoop-common-project hadoop-project pom.xml

hadoop-dist hadoop-project-dist README.txt

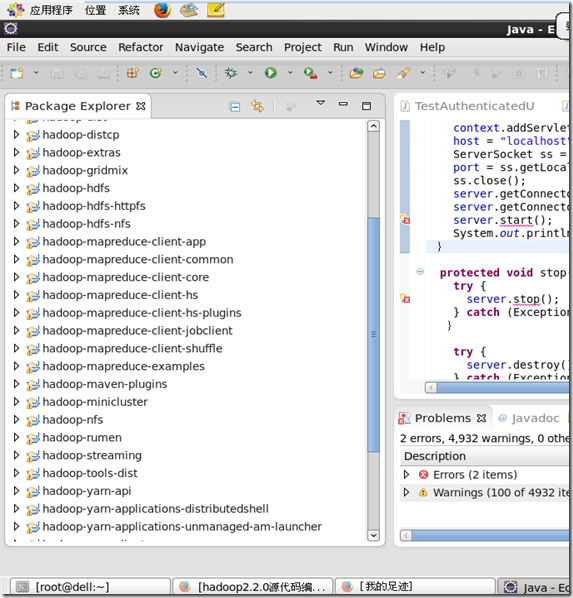

6. 将编译好的项目导入到Eclipse中,依次执行[File] > [Import] > [Existing Projects into Workspace]即可。由于生成了很多个项目,所以导入后是这个样子的。并且还会有一些错误,下面对如何修复错误写一下。

Error#1. hadoop-streaming里面的build path有问题,显示/root/workspace/hadoop-2.2.0-src/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-resourcemanager/conf(missing)

解决办法,remove掉引用就好。

Error#2. hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/TestDFSClientFailover.java中报sun.net.spi.nameservice.NameService错误,这是一个需要import的包,存在于openjdk中,在Oracle Jdk中没找到,需要下载一个。NameService是一个接口,在网上找一个NameService放到该包中就好。 http://grepcode.com/file/repository.grepcode.com/java/root/jdk/openjdk/7u40-b43/sun/net/spi/nameservice/NameService.java#NameService

Error#3. /hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/tools/offlineEditsViewer/XmlEditsVisitor.java里面显示

import com.sun.org.apache.xml.internal.serialize.OutputFormat;

import com.sun.org.apache.xml.internal.serialize.XMLSerializer;

失败,这是由于Eclipse的强检查原则,打开Java -> Compiler -> Errors/Warnings and under "Deprecated and restricted API" change the setting of "Forbidden reference (access rules)" 将error级别调整到warning级别就好。

Error#4. /hadoop-common/src/test/java/org/apache/hadoop/io/serializer/avro/TestAvroSerialization.java显示没有AvroRecord类,在网上搜索到AvroRecord类放入到同级包中就行了。 http://grepcode.com/file/repo1.maven.org/maven2/org.apache.hadoop/hadoop-common/2.2.0/org/apache/hadoop/io/serializer/avro/AvroRecord.java#AvroRecord

Error#5. org.apache.hadoop.ipc.protobuf包是空的,需要在/hadoop-common/target/generated-sources/java中找到profobuf拷贝到/hadoop-common/src/test/java中就好了. 同时包里面还缺少了以下三个引用,在GrepCode上找一下,把hadoop-common2.2.0的相应文件下下来导入。

org.apache.hadoop.ipc.protobuf.TestProtos.EchoRequestProto;

org.apache.hadoop.ipc.protobuf.TestProtos.EchoResponseProto;

org.apache.hadoop.ipc.protobuf.TestRpcServiceProtos.TestProtobufRpcProto;

Error#6. /hadoop-auth/org/apache/hadoop/security/authentication/client/AuthenricatorTestCase.java中显示server.start()和server.stop()错误,还没找到原因所在,待检查~~~

浙公网安备 33010602011771号

浙公网安备 33010602011771号