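Building a Highly Available Kubernetes Container Cloud with Calico Networking - v1.10.5

Copyright Notice

Source: https://www.cnblogs.com/xeoon

Email: xeon@xeon.org.cn

Copyright of this article is shared by the author and cnblogs. Reposting is welcome, but unless the author agrees otherwise this notice must be kept, and a link to the original article must be shown prominently on the page. The author accepts no liability for any consequences arising from what you learn on this blog!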

Chapter 1 Kubernetes Cluster Planning

1.1 Cluster Architecture

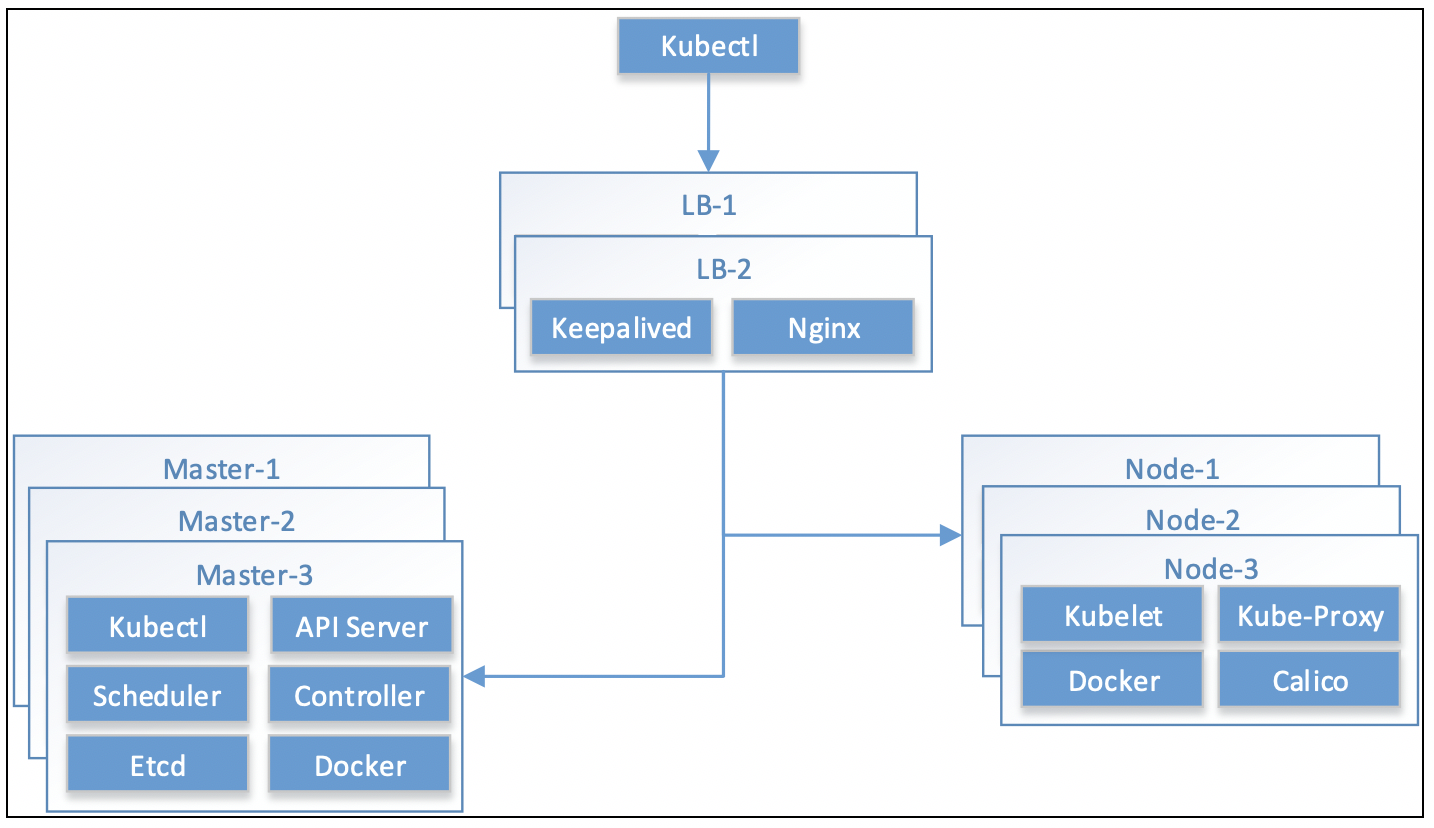

1.1.1 Overall Architecture Diagram

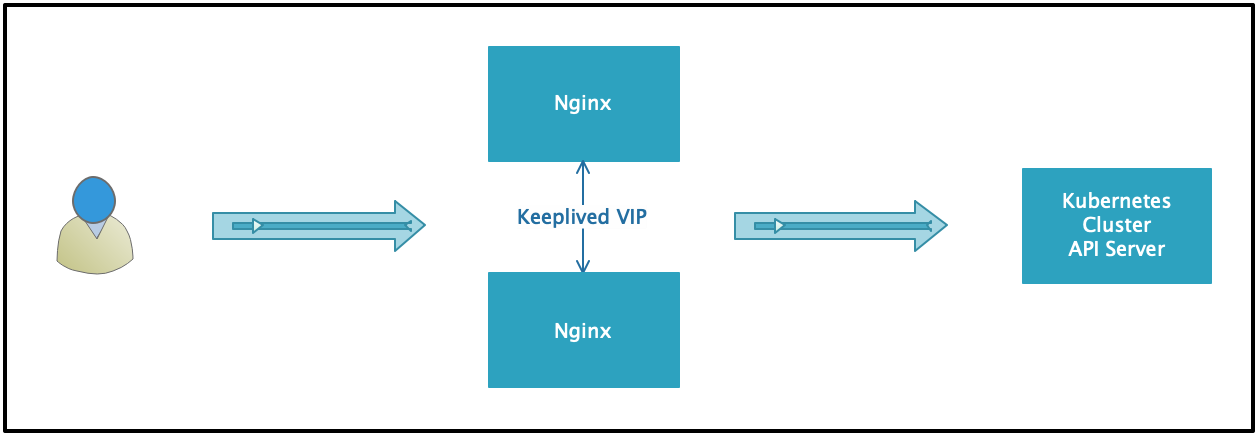

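1.1.2 LB Node Logical Diagram

1.2 Component Overview

1.2.1 Master Components

| Component | Description |
|---|---|
| kube-apiserver | The single entry point to the cluster and the coordinator of all other components. It serves an HTTP API; every create, read, update, delete, and watch operation on cluster objects is handled by the API server and then persisted to etcd. |
| kube-controller-manager | Runs the cluster's routine background tasks. Each resource type has its own controller, and the controller manager runs and manages these controllers. |
| kube-scheduler | Selects a Node for each newly created Pod according to its scheduling algorithm. |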

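1.2.2 Node Components

| Component | Description |
|---|---|
| kubelet | The Master's agent on each Node. It manages the lifecycle of the containers on that host: creating containers, mounting volumes for Pods, downloading Secrets, and reporting container and node status. The kubelet turns each Pod into a set of containers. |
| kube-proxy | Implements the Pod network proxy on each Node, maintaining network rules and performing layer-4 load balancing. |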

1.2.3 Third-Party Services

| Component | Description |
|---|---|
| etcd | A distributed key-value store that holds cluster state, such as Pod and Service objects. |

1.3 Cluster Environment Planning

1.3.1 Software Versions

| Software | Version |
|---|---|
| Linux OS | CentOS 7.4 x86_64 |
| Keepalived | 1.3.5 |
| Nginx | 1.12.2 |
| Cfssl | 1.2 |
| Kubernetes | 1.10.5 |
| Docker | 17.03.2 |
| Etcd | 3.3.8 |
| Calico | 3.1.3 |

1.3.2 Node Information

| Host | IP | VIP | Components | Hardware |
|---|---|---|---|---|
| LB-1 | 192.168.56.97 | 192.168.56.99 | keepalived, nginx | 1 CPU / 2 GB RAM |
| LB-2 | 192.168.56.98 | 192.168.56.99 | keepalived, nginx | 1 CPU / 2 GB RAM |
| master-1 | 192.168.56.101 | - | kube-apiserver, docker, kube-controller-manager, kube-scheduler, kubectl, etcd | 1 CPU / 2 GB RAM |
| master-2 | 192.168.56.102 | - | kube-apiserver, docker, kube-controller-manager, kube-scheduler, kubectl, etcd | 1 CPU / 2 GB RAM |
| master-3 | 192.168.56.103 | - | kube-apiserver, docker, kube-controller-manager, kube-scheduler, kubectl, etcd | 1 CPU / 2 GB RAM |
| node-1 | 192.168.56.104 | - | kubelet, kube-proxy, docker, calico | 1 CPU / 2 GB RAM |
| node-2 | 192.168.56.105 | - | kubelet, kube-proxy, docker, calico | 1 CPU / 2 GB RAM |
| node-3 | 192.168.56.106 | - | kubelet, kube-proxy, docker, calico | 1 CPU / 2 GB RAM |

1.3.3 System Tuning

We use the latest CentOS 7.4 minimal image available as of 2018-05-08 (CentOS-7-x86_64-Minimal-1708.iso).

1. Back up the existing yum repository files

mkdir -p /etc/yum.repos.d/bak

mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/ > /dev/null 2>&1

2. Point yum at the local CentOS mirror

tee > /etc/yum.repos.d/centos7.repo <<-'EOF'

[CentOS-7-x86_64-base]

name=CentOS-7-x86_64-base

baseurl=http://192.168.56.253/cobbler/repo_mirror/CentOS-7-x86_64-base

enabled=1

priority=99

gpgcheck=0

[CentOS-7-x86_64-epel]

name=CentOS-7-x86_64-epel

baseurl=http://192.168.56.253/cobbler/repo_mirror/CentOS-7-x86_64-epel

enabled=1

priority=99

gpgcheck=0

[CentOS-7-x86_64-extras]

name=CentOS-7-x86_64-extras

baseurl=http://192.168.56.253/cobbler/repo_mirror/CentOS-7-x86_64-extras

enabled=1

priority=99

gpgcheck=0

[CentOS-7-x86_64-updates]

name=CentOS-7-x86_64-updates

baseurl=http://192.168.56.253/cobbler/repo_mirror/CentOS-7-x86_64-updates

enabled=1

priority=99

gpgcheck=0

EOF

3. Point yum at the local Docker CE mirror

tee /etc/yum.repos.d/docker-ce.repo <<-'EOF'

[CentOS-7-x86_64-Docker-ce]

name=CentOS-7-x86_64-Docker-ce

baseurl=http://192.168.56.253/cobbler/repo_mirror/CentOS-7-x86_64-Docker-ce

enabled=1

priority=99

gpgcheck=0

EOF
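The five repository stanzas above differ only in their names. As an optional sketch, the same files could be generated from a list of names. The `gen_repo` helper and the `MIRROR` variable are hypothetical and not part of the original setup:

```shell
#!/bin/sh
# Hypothetical helper (not from the original article): print one yum repo
# stanza per repository name, using the local Cobbler mirror layout above.
MIRROR="${MIRROR:-http://192.168.56.253/cobbler/repo_mirror}"

gen_repo() {
  for name in "$@"; do
    # Same settings as the hand-written stanzas: enabled, priority 99, no GPG check
    printf '[%s]\nname=%s\nbaseurl=%s/%s\nenabled=1\npriority=99\ngpgcheck=0\n\n' \
      "$name" "$name" "$MIRROR" "$name"
  done
}

# Usage (reproduces centos7.repo and docker-ce.repo):
#   gen_repo CentOS-7-x86_64-base CentOS-7-x86_64-epel \
#            CentOS-7-x86_64-extras CentOS-7-x86_64-updates > /etc/yum.repos.d/centos7.repo
#   gen_repo CentOS-7-x86_64-Docker-ce > /etc/yum.repos.d/docker-ce.repo
```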

4. Install common packages and package groups

Because this is a minimal install, many packages are missing and must be installed manually.

yum clean all && yum repolist

yum install lrzsz nmap tree dos2unix nc htop wget vim bash-completion screen lsof net-tools sshpass -y

yum groupinstall "Compatibility libraries" "Base" "Development tools" "debugging Tools" "Dial-up Networking Support" -y --exclude=bash

reboot

5. Synchronize time with chrony (CentOS 7)

yum install chrony -y

systemctl enable chronyd.service

systemctl start chronyd.service

6. Disable firewall-related services (adjust as needed in production)

Note that systemctl disable only takes effect at the next boot; to stop a running service immediately, also run systemctl stop (for example, systemctl stop firewalld).

systemctl disable firewalld

systemctl disable NetworkManager

systemctl disable postfix

systemctl disable kdump.service

systemctl disable NetworkManager-wait-online.service

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

grep SELINUX=disabled /etc/selinux/config

setenforce 0

systemctl list-unit-files -t service | grep enable

7. Raise the open file descriptor limit

echo '* - nofile 100000 ' >>/etc/security/limits.conf

8. Enable grep color highlighting

echo "alias grep='grep --color=auto'" >> /etc/profile

echo "alias egrep='egrep --color=auto'" >> /etc/profile

tail -2 /etc/profile

source /etc/profile

1.4 Base Environment Preparation

1.4.1 Kernel Parameters and Swap

1. Configure kernel parameters on all nodes

cat > /etc/sysctl.d/k8s.conf <<-'EOF'

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf
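As a hedged sketch, the hypothetical helper below checks that a sysctl file really sets all three keys to 1. It only inspects the file; live values can be read with `sysctl -n <key>`. On CentOS 7 the `net.bridge.*` keys exist only after bridge netfilter support is loaded, so if `sysctl -p` reports them as unknown, running `modprobe br_netfilter` first usually fixes it.

```shell
#!/bin/sh
# Keys this article sets in /etc/sysctl.d/k8s.conf; each must equal 1.
REQUIRED_KEYS="net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables"

# missing_sysctl_keys FILE (hypothetical helper): print each required key that
# is absent from FILE or not set to 1; return 1 if any key is missing.
missing_sysctl_keys() {
  local rc=0 key
  for key in $REQUIRED_KEYS; do
    # Split on "=", strip blanks, then compare key and value exactly.
    # Commented lines ("# key = 1") never match because "#" stays in $1.
    if ! awk -F'=' -v k="$key" '
        { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
        $1 == k && $2 == "1" { found = 1 }
        END { exit !found }' "$1"; then
      echo "$key"
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   missing_sysctl_keys /etc/sysctl.d/k8s.conf && echo "k8s.conf OK"
```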

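2. Swap settings

Since Kubernetes v1.8, swap must be disabled on all nodes; otherwise the kubelet will not start.

Also comment out the swap entry in /etc/fstab so that swap stays off after a reboot.

The loop below runs on master-1: it copies k8s.conf to the other master and node hosts, applies it, and turns swap off there. Run swapoff -a on master-1 itself as well.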

for NODE in master-2 master-3 node-1 node-2 node-3; do

echo "--- $NODE ---"

scp /etc/sysctl.d/k8s.conf $NODE:/etc/sysctl.d/

ssh $NODE 'sysctl -p /etc/sysctl.d/k8s.conf'

ssh $NODE 'swapoff -a && sysctl -w vm.swappiness=0'

done
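The loop turns swap off only until the next reboot, so the /etc/fstab entry still has to be commented out. A minimal sketch with a hypothetical helper, assuming a standard whitespace-separated fstab whose third field is the filesystem type:

```shell
#!/bin/sh
# disable_swap_in_fstab [FILE] (hypothetical helper): comment out active swap
# entries in an fstab-format file (default /etc/fstab), keeping FILE.bak.
# Lines that are already comments are skipped, so re-running is harmless.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak" || return 1
  # Field 3 is the filesystem type; only rewrite lines whose type is "swap".
  # If awk fails, restore the original from the backup.
  awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab.bak" > "$fstab" \
    || { cp -p "$fstab.bak" "$fstab"; return 1; }
}

# Usage on each node:
#   disable_swap_in_fstab && swapoff -a
```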

1.4.2 Passwordless SSH Trust

1. Speed up SSH logins (disable reverse DNS lookups in sshd)

sed -i 's@#UseDNS yes@UseDNS no@g' /etc/ssh/sshd_config

systemctl restart sshd

2. Create the script

tee > configure_ssh_without_pass.sh <<-'EOF'

#!/bin/bash

ssh-keygen -t rsa -b 2048 -N "" -f $HOME/.ssh/id_rsa

cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

chmod 600 $HOME/.ssh/authorized_keys

for ip in 97 98 101 102 103 104 105 106; do

echo "------ $ip ------"

sshpass -p '123456' rsync -av -e 'ssh -o StrictHostKeyChecking=no' $HOME/.ssh/authorized_keys root@192.168.56.$ip:$HOME/.ssh/

# rsync -av -e 'ssh -o StrictHostKeyChecking=no' $HOME/.ssh/authorized_keys root@192.168.56.$ip:$HOME/.ssh/

done

EOF

3. Copy the script to every node and run it there

for ip in 97 98 101 102 103 104 105 106; do

echo "------ $ip ------"

scp configure_ssh_without_pass.sh 192.168.56.$ip:/root/

ssh 192.168.56.$ip 'sh configure_ssh_without_pass.sh'

done
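The script above uses rsync to copy the local authorized_keys over the remote file, so each run replaces whatever earlier runs wrote. A hedged alternative sketch uses ssh-copy-id, which appends the key instead. It assumes sshpass is installed and that every host shares the article's root password. `push_key`, `run`, `DRY_RUN`, and `SSH_PASS` are illustrative names:

```shell
#!/bin/sh
# Sketch (alternative to the rsync script): push this host's public key to
# every cluster host with ssh-copy-id, which appends to the remote
# authorized_keys instead of replacing it.
# Assumptions: sshpass is installed; all hosts share the root password used in
# this article (override with SSH_PASS). DRY_RUN=1 only prints the commands.
HOST_SUFFIXES="97 98 101 102 103 104 105 106"

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

push_key() {
  local key="$HOME/.ssh/id_rsa" ip
  # Generate a key pair only if none exists yet (avoids the overwrite prompt)
  [ -f "$key" ] || run ssh-keygen -t rsa -b 2048 -N "" -f "$key"
  for ip in $HOST_SUFFIXES; do
    run sshpass -p "${SSH_PASS:-123456}" \
      ssh-copy-id -o StrictHostKeyChecking=no -i "$key.pub" "root@192.168.56.$ip"
  done
}

# Usage: DRY_RUN=1 push_key   # review the commands first
#        push_key             # then run them
```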

1.4.3 Synchronize the hosts File

1. Add all cluster hosts to /etc/hosts

tee > /etc/hosts <<-'EOF'

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 centos74

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 centos74

#lb

192.168.56.97 lb-1

192.168.56.98 lb-2

#kubernetes master

192.168.56.101 master-1

192.168.56.102 master-2

192.168.56.103 master-3

#kubernetes node

192.168.56.104 node-1

192.168.56.105 node-2

192.168.56.106 node-3

EOF

2. Copy the file to all nodes

for ip in 97 98 101 102 103 104 105 106; do

echo "------ $ip ------"

scp /etc/hosts root@192.168.56.$ip:/etc/hosts

done
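The same eight IP addresses appear in the hosts file and in several loops in this article. A sketch (with hypothetical function names) that keeps the plan from section 1.3.2 in one list and derives both from it:

```shell
#!/bin/sh
# Sketch: keep the IP plan from section 1.3.2 in one place and render the
# cluster part of /etc/hosts from it. One "hostname ip" pair per line.
NODES='lb-1 192.168.56.97
lb-2 192.168.56.98
master-1 192.168.56.101
master-2 192.168.56.102
master-3 192.168.56.103
node-1 192.168.56.104
node-2 192.168.56.105
node-3 192.168.56.106'

# render_hosts: print "ip hostname" lines in /etc/hosts order
render_hosts() {
  printf '%s\n' "$NODES" | while read -r name ip; do
    [ -n "$name" ] || continue
    printf '%s %s\n' "$ip" "$name"
  done
}

# node_ips: print only the IPs, e.g. for the scp loops in this article
node_ips() {
  render_hosts | awk '{ print $1 }'
}

# Usage:
#   render_hosts >> /etc/hosts     # after the localhost lines
#   for ip in $(node_ips); do scp /etc/hosts root@$ip:/etc/hosts; done
```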

1.4.4 Install Docker

Install Docker on all master and node hosts and enable it to start at boot.

cd /tmp

RPM='http://192.168.56.253/cobbler/repo_mirror/CentOS-7-x86_64-Docker-ce/Packages'

wget $RPM/docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

wget $RPM/docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

yum localinstall docker-ce*.rpm -y

systemctl restart docker && systemctl enable docker

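1.4.5 Prepare the Deployment Directories

Perform the following steps identically on every node.

1. Create the Kubernetes installation directories

mkdir -p /opt/kubernetes/{cfg,bin,ssl,log}

2. Edit ~/.bash_profile to add /opt/kubernetes/bin to PATH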

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin:/opt/kubernetes/bin

export PATH

3. Load the new environment variables

source ~/.bash_profile
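If this step is scripted across nodes, appending blindly adds a duplicate PATH entry on every re-run. A minimal idempotent sketch (the `add_k8s_path` helper is hypothetical) appends a single equivalent export line only when it is missing:

```shell
#!/bin/sh
# add_k8s_path [PROFILE] (hypothetical helper): append the PATH export for
# /opt/kubernetes/bin to PROFILE (default ~/.bash_profile) unless an identical
# line is already there, so re-running the setup does not stack entries.
add_k8s_path() {
  local profile="${1:-$HOME/.bash_profile}"
  local line='export PATH=$PATH:/opt/kubernetes/bin'
  # -x: whole-line match, -F: treat $ and . literally
  grep -qxF "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}

# Usage: add_k8s_path && . ~/.bash_profile
```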

1.4.6 Install CFSSL

1. Download (or upload) the binaries

[root@master-1 ~]# cd /usr/local/src/

[root@master-1 src]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@master-1 src]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@master-1 src]# chmod +x cfssl*

[root@master-1 src]# mv cfssljson_linux-amd64 cfssljson

[root@master-1 src]# mv cfssl_linux-amd64 cfssl

2. Copy cfssl and cfssljson to /opt/kubernetes/bin on all master nodes

for NODE in master-1 master-2 master-3; do

echo "------ $NODE ------"

for FILE in cfssljson cfssl; do

scp /usr/local/src/$FILE $NODE:/opt/kubernetes/bin/

done

done

3. Upload the required container image tarballs to master-1

[root@master-1 src]# ll *.tar.gz

-rw-r--r-- 1 root root 97142947 Jul 2 21:11 calico-3.1.3-images.tar.gz

-rw-r--r-- 1 root root 1016923 Jun 25 22:50 pause-amd64-3.1-images.tar.gz

-rw-r--r-- 1 root root 38167936 Jul 5 18:18 kubernetes-dashboard-amd64-1.8.3.tar.gz

4. Distribute the image tarballs to all master and node hosts and load them into Docker

for NODE in master-1 master-2 master-3 node-1 node-2 node-3; do

echo "------ $NODE ------"

for FILE in pause-amd64-3.1-images.tar.gz calico-3.1.3-images.tar.gz kubernetes-dashboard-amd64-1.8.3.tar.gz; do

scp /usr/local/src/$FILE $NODE:/usr/local/src/

ssh $NODE "docker load --input /usr/local/src/$FILE"

ssh $NODE "docker images"

done

done

1.5 Generating the Base Certificates

1.5.1 Initialize cfssl

[root@master-1 src]# mkdir ssl && cd ssl

[root@master-1 ssl]# cfssl print-defaults config > config.json

[root@master-1 ssl]# cfssl print-defaults csr > csr.json

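1.5.2 Generate the Certificates

1. Create the CA signing configuration (JSON). The kubernetes profile issues certificates valid for 8760h (365 days).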

[root@master-1 ssl]# tee > ca-config.json <<-'EOF'

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

2. Create the JSON file for the CA certificate signing request (CSR)

[root@master-1 ssl]# tee > ca-csr.json <<-'EOF'

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

3. Generate the CA certificate (ca.pem) and private key (ca-key.pem)

[root@master-1 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@master-1 ssl]# ll ca*

-rw-r--r-- 1 root root 290 Jun 29 23:24 ca-config.json

-rw-r--r-- 1 root root 1001 Jun 29 23:25 ca.csr

-rw-r--r-- 1 root root 208 Jun 29 23:24 ca-csr.json

-rw------- 1 root root 1675 Jun 29 23:25 ca-key.pem

-rw-r--r-- 1 root root 1359 Jun 29 23:25 ca.pem
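Before distributing the CA, it is worth checking that ca.pem and ca-key.pem belong together and reviewing the validity dates. Below is a sketch using standard openssl subcommands; the helper names are hypothetical. The 8760h expiry in ca-config.json applies to certificates signed with the kubernetes profile, while the CA's own dates are whatever `ca_info` prints:

```shell
#!/bin/sh
# Sketch: sanity-check the CA produced by cfssl, using openssl.
# ca_matches CERT KEY: succeed only if CERT's public key belongs to KEY.
# Compares the RSA modulus of both files (ca-csr.json requests an RSA key).
ca_matches() {
  local cert_mod key_mod
  cert_mod=$(openssl x509 -noout -modulus -in "$1") || return 1
  key_mod=$(openssl rsa -noout -modulus -in "$2" 2>/dev/null) || return 1
  [ -n "$cert_mod" ] && [ "$cert_mod" = "$key_mod" ]
}

# ca_info CERT: print the subject and validity window of a certificate.
ca_info() {
  openssl x509 -noout -subject -dates -in "$1"
}

# Usage in /usr/local/src/ssl:
#   ca_matches ca.pem ca-key.pem && echo "ca.pem and ca-key.pem match"
#   ca_info ca.pem
```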

1.5.3 Distribute the Certificates

1. These base files are required on every master and node.

for NODE in master-1 master-2 master-3 node-1 node-2 node-3; do

echo "------ $NODE ------"

for FILE in ca.csr ca.pem ca-key.pem ca-config.json; do

scp /usr/local/src/ssl/$FILE $NODE:/opt/kubernetes/ssl/

done

done

Chapter 2 Deploying the LB Nodes

2.1 Install Keepalived

1. Install on all LB nodes

yum install keepalived -y

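2. Configure keepalived

Note: state and priority must differ between the two LB nodes. The file below is for lb-1 (state MASTER, priority 200). On lb-2, use the commented-out values instead (state BACKUP, priority 100).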

tee > /etc/keepalived/keepalived.conf <<-'EOF'

! Configuration File for keepalived

global_defs {

router_id Kubernetes_LB

}

vrrp_instance K8S_LB {

state MASTER

# state BACKUP

interface eth0

virtual_router_id 51

priority 200

# priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.56.99

}

}

EOF
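Only state and priority differ between lb-1 and lb-2, so one template can render both files. The following is a hedged sketch using a hypothetical `render_keepalived` function that reproduces the configuration above. Like the original, it has no health check for nginx, so the VIP moves only when keepalived or the host itself stops. keepalived's `vrrp_script` block is the usual way to track nginx as well.

```shell
#!/bin/sh
# render_keepalived NODE (hypothetical helper): print keepalived.conf for
# lb-1 (MASTER/200) or lb-2 (BACKUP/100); other values are as in the article.
render_keepalived() {
  local state priority
  case "$1" in
    lb-1) state=MASTER; priority=200 ;;
    lb-2) state=BACKUP; priority=100 ;;
    *) echo "unknown LB node: $1" >&2; return 1 ;;
  esac
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id Kubernetes_LB
}
vrrp_instance K8S_LB {
    state $state
    interface eth0
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.56.99
    }
}
EOF
}

# Usage:
#   for NODE in lb-1 lb-2; do
#     render_keepalived $NODE | ssh $NODE 'cat > /etc/keepalived/keepalived.conf'
#   done
```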

3. Start keepalived

systemctl restart keepalived.service

systemctl status keepalived.service

systemctl enable keepalived.service

4. Check which node holds the VIP

for NODE in lb-1 lb-2; do

echo "------ $NODE ------"

ssh $NODE "ip a | grep eth0"

echo " "

done

# Sample output

------ lb-1 ------

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

inet 192.168.56.97/24 brd 192.168.56.255 scope global eth0

inet 192.168.56.99/32 scope global eth0

------ lb-2 ------

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

inet 192.168.56.98/24 brd 192.168.56.255 scope global eth0
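Reading `ip a` output by eye works for two nodes, but a small script can report the VIP holder directly. The sketch below assumes the passwordless SSH set up in section 1.4.2 and the interface name eth0. `has_vip` and `vip_holder` are hypothetical names:

```shell
#!/bin/sh
# Sketch: report which LB node currently holds the VIP.
VIP=192.168.56.99

# has_vip: read "ip addr" output on stdin; succeed if the VIP is assigned.
# The trailing "/" avoids matching longer addresses such as .990.
has_vip() {
  grep -qF "inet $VIP/"
}

# vip_holder: print the first LB node whose eth0 carries the VIP.
vip_holder() {
  local node
  for node in lb-1 lb-2; do
    if ssh "$node" ip -4 addr show dev eth0 | has_vip; then
      echo "$node"
      return 0
    fi
  done
  echo "VIP $VIP not found on any LB node" >&2
  return 1
}
```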

2.2 Install Nginx

1. Install nginx on all LB nodes

yum install nginx -y

2. Configure nginx as a layer-4 (TCP) reverse proxy for the Kubernetes apiservers

tee > /etc/nginx/nginx.conf <<-'EOF'

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream kube_apiserver {

least_conn;

server 192.168.56.101:6443 weight=1;

server 192.168.56.102:6443 weight=1;

server 192.168.56.103:6443 weight=1;

}

server {

listen 0.0.0.0:6443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

EOF
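The upstream list has to stay identical on both LB nodes. Below is a sketch using a hypothetical `render_upstream` function that generates the block from the master IPs; after editing, `nginx -t` validates the whole file. With the EPEL nginx package, the stream module is loaded through the `include /usr/share/nginx/modules/*.conf;` line. If `nginx -t` reports `unknown directive "stream"`, installing the nginx-mod-stream package is the likely fix (an assumption about the package layout, worth verifying against your mirror).

```shell
#!/bin/sh
# render_upstream IP... (hypothetical helper): print an upstream block
# equivalent to the one above, one server line per apiserver IP.
render_upstream() {
  local ip
  echo "upstream kube_apiserver {"
  echo "    least_conn;"
  for ip in "$@"; do
    echo "    server $ip:6443 weight=1;"
  done
  echo "}"
}

# Usage: paste the output into the stream {} block, then run nginx -t
#   render_upstream 192.168.56.101 192.168.56.102 192.168.56.103
```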

3. Start nginx on the LB nodes

systemctl restart nginx.service

systemctl status nginx.service

systemctl enable nginx.service

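2.3 Testing

Using node-1 as an example: ping the VIP continuously, stop keepalived on the MASTER (lb-1), check that the VIP has moved to lb-2, and then start keepalived on lb-1 again. In the run below, only one reply (icmp_seq=7) was lost during the failover.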

[root@node-1 ~]# ping 192.168.56.99 -s 6000

PING 192.168.56.99 (192.168.56.99) 6000(6028) bytes of data.

6008 bytes from 192.168.56.99: icmp_seq=1 ttl=64 time=0.995 ms

6008 bytes from 192.168.56.99: icmp_seq=2 ttl=64 time=0.723 ms

6008 bytes from 192.168.56.99: icmp_seq=3 ttl=64 time=0.725 ms

6008 bytes from 192.168.56.99: icmp_seq=4 ttl=64 time=0.877 ms

6008 bytes from 192.168.56.99: icmp_seq=5 ttl=64 time=0.781 ms

6008 bytes from 192.168.56.99: icmp_seq=6 ttl=64 time=0.846 ms

6008 bytes from 192.168.56.99: icmp_seq=8 ttl=64 time=0.924 ms

6008 bytes from 192.168.56.99: icmp_seq=9 ttl=64 time=0.806 ms

6008 bytes from 192.168.56.99: icmp_seq=10 ttl=64 time=1.06 ms

6008 bytes from 192.168.56.99: icmp_seq=11 ttl=64 time=0.813 ms

6008 bytes from 192.168.56.99: icmp_seq=12 ttl=64 time=0.759 ms

^C

--- 192.168.56.99 ping statistics ---

12 packets transmitted, 11 received, 8% packet loss, time 11015ms

rtt min/avg/max/mdev = 0.723/0.846/1.062/0.108 ms

[root@lb-1 ~]# systemctl stop keepalived.service

[root@lb-1 ~]# for NODE in lb-1 lb-2; do

> echo "------ $NODE ------"

> ssh $NODE "ip a | grep eth0"

> echo " "

> done

------ lb-1 ------

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

inet 192.168.56.97/24 brd 192.168.56.255 scope global eth0

------ lb-2 ------

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

inet 192.168.56.98/24 brd 192.168.56.255 scope global eth0

inet 192.168.56.99/32 scope global eth0

[root@lb-1 ~]# systemctl start keepalived.service
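Ping only shows that the VIP moved. The hedged sketch below probes TCP port 6443 on the VIP once per second during the same failover, since that is the path apiserver clients actually use. It assumes bash (for `/dev/tcp`) and coreutils `timeout` are available; `probe` and `watch_vip` are hypothetical names. Because nginx accepts the TCP connection itself, a successful probe shows that nginx is listening on the VIP, even before any apiserver is running.

```shell
#!/bin/sh
# probe HOST PORT [SECONDS]: succeed if a TCP connection opens in time.
# Uses bash's /dev/tcp redirection; inside bash -c, $0=HOST and $1=PORT.
probe() {
  timeout "${3:-2}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# watch_vip [COUNT]: probe the VIP COUNT times (default 30), once per second.
watch_vip() {
  local i=1
  while [ "$i" -le "${1:-30}" ]; do
    if probe 192.168.56.99 6443; then echo "$i up"; else echo "$i DOWN"; fi
    i=$((i + 1))
    sleep 1
  done
}
```

For example, run `watch_vip 60` on node-1, stop keepalived on lb-1, and count how many DOWN lines appear.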