Redis 自定义 RedisAppender 插件, 实现日志缓冲队列,集中日志输出.

因为某些异步日志设置了即使队列满了,也不可丢弃,在并发高的时候,导致请求方法同步执行,响应变慢.

编写这个玩意,除了集中日志输出以外,还希望在高并发的时间点有缓冲作用.

之前用Kafka实现了一次入队速度不太理想,应该说比我写本地机械硬盘还慢..................不知道是不是我方式不对,而且估计是因为针对有序写入做了极大的优化,写出固态硬盘下居然比机械还慢.............

后来用Redis实现了一次,由于Redis的连接方式问题,所以必须使用管道来减少获取连接的性能损耗,入队效率非常不错

瞎扯,增加程序复杂度,又增加运维成本,完全不科学,拿固态硬盘阵列的服务器组分布式文件系统,挂载到应用服务器上才是王道!

由于管道没有到达需要写出得大小时(默认53个 arges),如果刚好又没有日志进来,那么可能存在一直等待写出得情况,所以里面写了一个定时线程,可以根据需要修改写出得周期时间

logback KafkaAppender 写入Kafka队列,集中日志输出.

生产者程序完整POM

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>org.lzw</groupId> <artifactId>logqueue</artifactId> <version>1.0-SNAPSHOT</version> <dependencies> <dependency> <groupId>redis.clients</groupId> <artifactId>jedis</artifactId> <version>2.9.0</version> <scope>provided</scope> </dependency> <dependency> <groupId>ch.qos.logback</groupId> <artifactId>logback-core</artifactId> <version>1.2.3</version> <scope>provided</scope> </dependency> <dependency> <groupId>org.slf4j</groupId> <artifactId>slf4j-api</artifactId> <version>1.7.25</version> <scope>provided</scope> </dependency> </dependencies> <distributionManagement> <repository> <id>maven-releases</id> <name>maven-releases</name> <url>http://192.168.91.137:8081/repository/maven-releases/</url> </repository> <snapshotRepository> <id>maven-snapshots</id> <name>maven-snapshots</name> <url>http://192.168.91.137:8081/repository/maven-snapshots/</url> </snapshotRepository> </distributionManagement> <build> <plugins> <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-compiler-plugin</artifactId> <configuration> <source>1.8</source> <target>1.8</target> </configuration> </plugin> </plugins> </build> </project>

只有一个类RedisAppender.java

package org.lzw.log.appender; import ch.qos.logback.core.Layout; import redis.clients.jedis.Client; import redis.clients.jedis.Pipeline; import ch.qos.logback.core.AppenderBase; import ch.qos.logback.core.status.ErrorStatus; import org.slf4j.Logger; import org.slf4j.LoggerFactory; import java.io.IOException; import java.io.StringReader; import java.util.List; import java.util.Properties; import java.util.concurrent.ScheduledThreadPoolExecutor; import java.util.concurrent.TimeUnit; /** * User: laizhenwei */ public class RedisAppender<E> extends AppenderBase<E> { protected Layout<E> layout; private static final Logger LOGGER = LoggerFactory.getLogger("local"); private Pipeline pipeline; private Client client; private static ScheduledThreadPoolExecutor exec = new ScheduledThreadPoolExecutor(1); private String queueKey; private String redisProperties; public void start() { super.start(); int errors = 0; if (this.layout == null) { this.addStatus(new ErrorStatus("No layout set for the appender named \"" + this.name + "\".", this)); ++errors; } LOGGER.info("Starting RedisAppender..."); final Properties properties = new Properties(); try { properties.load(new StringReader(redisProperties)); pipeline = new Pipeline(); client = new Client(properties.get("host").toString(), Integer.parseInt(properties.get("port").toString())); pipeline.setClient(client); } catch (Exception exception) { ++errors; LOGGER.warn(String.join("Kafka日志线程被拒绝:记录倒本地日志:"), exception); } if (queueKey == null) { ++errors; System.out.println("未配置queueKey"); } else { System.out.println("日志将进入key为:[" + queueKey + "]的队列!"); } if (errors == 0) { super.start(); exec.scheduleAtFixedRate(() -> this.sync(), 5, 5, TimeUnit.SECONDS); } } @Override public void stop() { super.stop(); pipeline.sync(); try { pipeline.close(); } catch (IOException e) { LOGGER.warn("Stopping RedisAppender...",e); } LOGGER.info("Stopping RedisAppender..."); } @Override protected void append(E event) { String msg = layout.doLayout(event); this.lpush(msg); } private void lpush(String msg){ try { pipeline.lpush(queueKey,msg); }catch (Exception ex){ LOGGER.warn(String.join(":","推送redis队列失败!",msg),ex); } } private void sync(){ try { pipeline.sync(); }catch (Exception ex){ List<Object> datas = client.getAll(); datas.stream().forEach(d->LOGGER.warn(String.join(":","推送redis队列失败!记录到本地!",d.toString()))); } } public String getQueueKey() { return queueKey; } public void setQueueKey(String queueKey) { this.queueKey = queueKey; } public void setLayout(Layout<E> layout) { this.layout = layout; } public String getRedisProperties() { return redisProperties; } public void setRedisProperties(String redisProperties) { this.redisProperties = redisProperties; } }

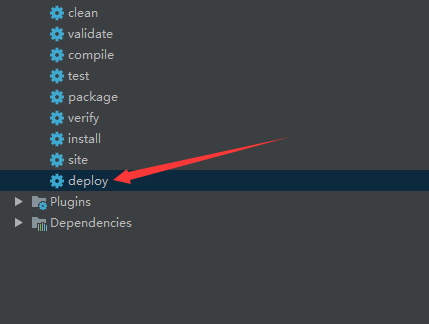

写完了,发布到Nexus

消费者application-test.yml

spring:

application:

name: logconsumer

profiles:

#指定读取配置文件:dev(开发环境),prod(生产环境),qa(测试环境)

active: test

logKey:

basic-info-api: basic-info-api

redisParam:

host: 192.168.1.207

port: 6379

pool:

maxIdle: 20

maxTotal: 200

maxWaitMillis: -1

testOnBorrow: false

testOnReturn: false

logback-test.xml

<?xml version="1.0" encoding="UTF-8"?> <configuration debug="true"> <contextName>logback</contextName> <property name="LOG_HOME" value="/logconsumer"/> <!-- basicInfoApi --> <appender name="basicInfoApiAppender" class="ch.qos.logback.core.rolling.RollingFileAppender"> <file>${LOG_HOME}/basic-info-api/logback.log</file> <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy"> <fileNamePattern>${LOG_HOME}/basic-info-api/logback-%d{yyyy-MM-dd}.%i.log</fileNamePattern> <!--<fileNamePattern>${LOG_HOME}/e9/e9-%d{yyyy-MM-dd}.%i.tar.gz</fileNamePattern>--> <!-- 日志文件保留天数 --> <MaxHistory>30</MaxHistory> <!-- 文件大小触发重写新文件 --> <MaxFileSize>50MB</MaxFileSize> <totalSizeCap>10GB</totalSizeCap> </rollingPolicy> <encoder> <pattern>%msg%n</pattern> <charset>UTF-8</charset> </encoder> </appender> <!--basicInfoApi异步输出--> <appender name="basicInfoApiAasyncFile" class="ch.qos.logback.classic.AsyncAppender"> <discardingThreshold>0</discardingThreshold> <queueSize>2048</queueSize> <appender-ref ref="basicInfoApiAppender"/> </appender> <!--basicInfoApi消费者所在包路径--> <logger name="org.lzw.logconsumer.consumer.BasicInfoApiConsumer" level="INFO" additivity="false"> <appender-ref ref="basicInfoApiAasyncFile"/> </logger> <!--<!– ############################## 我是分割线 ############################################ –>--> <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender"> <file>${LOG_HOME}/logconsumer/logback.log</file> <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy"> <fileNamePattern>${LOG_HOME}/logconsumer/logback-%d{yyyy-MM-dd}.%i.log</fileNamePattern> <!--<fileNamePattern>${LOG_HOME}/front/front-%d{yyyy-MM-dd}.%i.tar.gz</fileNamePattern>--> <!-- 日志文件保留天数 --> <MaxHistory>30</MaxHistory> <!-- 文件大小触发重写新文件 --> <MaxFileSize>50MB</MaxFileSize> <totalSizeCap>1GB</totalSizeCap> </rollingPolicy> <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder"> <pattern>%date %level [%thread] %logger{36} [%file : %line] %msg%n</pattern> <charset>UTF-8</charset> </encoder> </appender> <root level="warn"> <appender-ref ref="file" /> </root> </configuration>

启动类LogconsumerApplication.java

/** * User: laizhenwei */ @SpringBootApplication public class LogconsumerApplication { public static void main(String[] args) { SpringApplication.run(LogconsumerApplication.class, args); } @Component public static class ConsumersStartup implements CommandLineRunner { ExecutorService executorService = Executors.newCachedThreadPool(); @Autowired private BasicInfoApiConsumer basicInfoApiConsumer; @Override public void run(String... strings) { executorService.execute(()-> basicInfoApiConsumer.writeLog()); } } }

RedisService.java

@Component public class RedisService { Logger logger = LoggerFactory.getLogger(this.getClass()); @Value("${redisParam.host}") private String host; @Value("${redisParam.port}") private Integer port; @Value("${redisParam.pool.maxIdle}") private Integer maxIdle; @Value("${redisParam.pool.maxTotal}") private Integer maxTotal; @Value("${redisParam.pool.maxWaitMillis}") private Integer maxWaitMillis; @Value("${redisParam.pool.testOnBorrow}") private Boolean testOnBorrow; @Value("${redisParam.pool.testOnReturn}") private Boolean testOnReturn; private static JedisPoolConfig config = new JedisPoolConfig(); private static JedisPool pool; @PostConstruct public void init(){ config.setMaxIdle(maxIdle); config.setMaxTotal(maxTotal); config.setMaxWaitMillis(maxWaitMillis); config.setTestOnBorrow(testOnBorrow); config.setTestOnReturn(testOnReturn); pool = new JedisPool(config, host, port); } public String brpop(int timeOut, String key) { Jedis jedis = null; try { jedis = pool.getResource(); return jedis.brpop(timeOut, key).get(1); } catch (Exception ex) { logger.warn("redis消费异常",ex); return "redis消费异常"; } finally { if (jedis != null) jedis.close(); } } }

BasicInfoApiConsumer.java

/** * 日志消费者 */ @Component public class BasicInfoApiConsumer { private Logger logger = LoggerFactory.getLogger(this.getClass()); @Value("${logKey.basic-info-api}") private String logKey; @Autowired private RedisService redisService; public void writeLog() { while (true){ System.out.println(1); logger.info(redisService.brpop(0, logKey)); } } }

随便拿个应用跑一个 这里用basic-info-api

pom.xml

<dependency> <groupId>org.lzw</groupId> <artifactId>logqueue</artifactId> <version>1.0-SNAPSHOT</version> </dependency> <dependency> <groupId>redis.clients</groupId> <artifactId>jedis</artifactId> <version>2.9.0</version> </dependency>

logback.xml

<?xml version="1.0" encoding="UTF-8"?> <configuration debug="true"> <contextName>logback</contextName> <property name="log.path" value="/home/logs/basic-info-api/logback.log"/> <appender name="redisAppender" class="org.lzw.log.appender.RedisAppender"> <filter class="ch.qos.logback.classic.filter.ThresholdFilter"> <level>warn</level> </filter> <queueKey>basic-info-api</queueKey> <redisProperties> host=192.168.1.207 port=6379 </redisProperties> <layout class="ch.qos.logback.classic.PatternLayout"> <pattern>%date %level [%thread] %logger{36} [%file : %line] %msg%n</pattern> </layout> </appender> <appender name="localAppender" class="ch.qos.logback.core.rolling.RollingFileAppender"> <filter class="ch.qos.logback.classic.filter.ThresholdFilter"> <level>warn</level> </filter> <file>${log.path}</file> <filter class="ch.qos.logback.classic.filter.ThresholdFilter"> <level>warn</level> </filter> <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy"> <fileNamePattern>${log.path}.%d{yyyy-MM-dd}.%i.tar.gz</fileNamePattern> <!-- 日志文件保留天数 --> <MaxHistory>30</MaxHistory> <!-- 文件大小触发重写新文件 --> <MaxFileSize>50MB</MaxFileSize> <totalSizeCap>10GB</totalSizeCap> </rollingPolicy> <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder"> <pattern>%date %level [%thread] %logger{36} [%file : %line] %msg%n</pattern> <charset>UTF-8</charset> </encoder> </appender> <appender name="asyncLocal" class="ch.qos.logback.classic.AsyncAppender"> <!-- 不丢失日志.默认的,如果队列的80%已满,则会丢弃TRACT、DEBUG、INFO级别的日志 --> <discardingThreshold>0</discardingThreshold> <queueSize>2048</queueSize> <appender-ref ref="localAppender"/> </appender> <appender name="console" class="ch.qos.logback.core.ConsoleAppender"> <filter class="ch.qos.logback.classic.filter.ThresholdFilter"> <level>debug</level> </filter> <encoder> <pattern>%d{HH:mm:ss.SSS} %contextName [%thread] %-5level %logger{36} - %msg%n </pattern> </encoder> </appender> <!--万一redis队列不通,记录到本地--> <logger name="local" additivity="false"> <appender-ref ref="asyncLocal"/> </logger> <appender name="asyncRedisAppender" class="ch.qos.logback.classic.AsyncAppender"> <!-- 不丢失日志.默认的,如果队列的80%已满,则会丢弃TRACT、DEBUG、INFO级别的日志 --> <discardingThreshold>0</discardingThreshold> <queueSize>2048</queueSize> <appender-ref ref="redisAppender"/> </appender> <root level="warn"> <appender-ref ref="asyncRedisAppender"/> </root> <logger name="org.springframework.session.web.http.SessionRepositoryFilter" level="error"/> <logger name="org.springframework.scheduling" level="error"/> <Logger name="org.apache.catalina.util.LifecycleBase" level="error"/> <Logger name="org.springframework.amqp" level="warn"/> </configuration>

写一段长日志

@Slf4j @EnableEurekaClient @EnableCircuitBreaker @SpringBootApplication public class BasicInfoApiApplication { public static void main(String[] args) { SpringApplication.run(BasicInfoApiApplication.class, args); } @Component public static class ConsumersStartup implements CommandLineRunner { ExecutorService executorService = Executors.newCachedThreadPool(); String msg = "--endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379," + "https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379," + "https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,h" + "ttps://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,ht" + "tps://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,http" + "s://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,https://" + "192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,https://192.1" + "68.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,https://192.168.91." + "139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,https://192.168.91.139:23" + "79,https://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379,http" + "s://192.168.91.140:2379--endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379,https://192" + ".168.91.140:2379--endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.9" + "1.140:2379--endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140" + ":2379--endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379-" + "-endpoints=https://192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379--endpoi" + "nts=https://192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=h" + "ttps://192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https:/" + "/192.168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192." + "168.91.138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379--endpoints=https://192.168.91" + ".138:2379,https://192.168.91.139:2379,https://192.168.91.140:2379"; @Override public void run(String... strings) { long begin = System.nanoTime(); for(int i=0;i<10000;i++) executorService.execute(() -> log.warn(msg)); executorService.shutdown(); for(;;){ if(executorService.isTerminated()){ System.out.println((System.nanoTime()-begin)/1000000); break; } } } } }

输出 1328 毫秒,这里仅仅只是进去队列的时间就需要1秒多,感觉还是很慢.这里进入了以后并没有完全写入硬盘,看另一个消费程序,还不停地在消费.