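As mentioned in the previous section, the Open vSwitch kernel module openvswitch.ko registers a function, netdev_frame_hook, on the network device. Whenever a packet arrives at that device, this function is called.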

    static struct sk_buff *netdev_frame_hook(struct sk_buff *skb)
    {
        if (unlikely(skb->pkt_type == PACKET_LOOPBACK))
            return skb;

        port_receive(skb);
        return NULL;
    }

port_receive is a macro, so calling it is the same as calling netdev_port_receive:

    #define port_receive(skb) netdev_port_receive(skb, NULL)

    void netdev_port_receive(struct sk_buff *skb, struct ip_tunnel_info *tun_info)
    {
        struct vport *vport;

        vport = ovs_netdev_get_vport(skb->dev);
        ……
        skb_push(skb, ETH_HLEN);
        ovs_skb_postpush_rcsum(skb, skb->data, ETH_HLEN);
        ovs_vport_receive(vport, skb, tun_info);
        return;

    error:
        kfree_skb(skb);
    }
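The skb_push(skb, ETH_HLEN) call deserves a note. By the time a receive handler runs, the network stack has typically already advanced skb->data past the Ethernet header, so the header has to be exposed again before the flow key can be extracted starting at layer 2. The following is a minimal userspace sketch of that pointer arithmetic. The `mini_skb` type and its two helpers are hypothetical stand-ins written for illustration, not the kernel's struct sk_buff API.

```c
#include <assert.h>
#include <stddef.h>

#define ETH_HLEN 14 /* Ethernet header length without a VLAN tag */

/* Hypothetical stand-in for struct sk_buff: only the fields needed here. */
struct mini_skb {
    unsigned char *head; /* start of the underlying buffer */
    unsigned char *data; /* start of the currently visible bytes */
    size_t len;          /* number of visible bytes starting at data */
};

/* Hide n bytes at the front, as the receive path does with the L2 header. */
static unsigned char *mini_skb_pull(struct mini_skb *skb, size_t n)
{
    assert(n <= skb->len);
    skb->data += n;
    skb->len -= n;
    return skb->data;
}

/* Expose n bytes in front of data again, mirroring what skb_push does. */
static unsigned char *mini_skb_push(struct mini_skb *skb, size_t n)
{
    assert((size_t)(skb->data - skb->head) >= n);
    skb->data -= n;
    skb->len += n;
    return skb->data;
}
```

After a pull followed by a push of the same size, data points at the first byte of the Ethernet header again, which is where key extraction expects to start.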

The function ovs_vport_receive is implemented as follows:

    int ovs_vport_receive(struct vport *vport, struct sk_buff *skb,
                          const struct ip_tunnel_info *tun_info)
    {
        struct sw_flow_key key;
        ......
        /* Extract flow from 'skb' into 'key'. */
        error = ovs_flow_key_extract(tun_info, skb, &key);
        if (unlikely(error)) {
            kfree_skb(skb);
            return error;
        }
        ovs_dp_process_packet(skb, &key);
        return 0;
    }

This function first declares the variable struct sw_flow_key key.

The key is defined as follows:

    struct sw_flow_key {
        u8 tun_opts[255];
        u8 tun_opts_len;
        struct ip_tunnel_key tun_key; /* Encapsulating tunnel key. */
        struct {
            u32 priority; /* Packet QoS priority. */
            u32 skb_mark; /* SKB mark. */
            u16 in_port; /* Input switch port (or DP_MAX_PORTS). */
        } __packed phy; /* Safe when right after 'tun_key'. */
        u32 ovs_flow_hash; /* Datapath computed hash value. */
        u32 recirc_id; /* Recirculation ID. */
        struct {
            u8 src[ETH_ALEN]; /* Ethernet source address. */
            u8 dst[ETH_ALEN]; /* Ethernet destination address. */
            __be16 tci; /* 0 if no VLAN, VLAN_TAG_PRESENT set otherwise. */
            __be16 type; /* Ethernet frame type. */
        } eth;
        union {
            struct {
                __be32 top_lse; /* top label stack entry */
            } mpls;
            struct {
                u8 proto; /* IP protocol or lower 8 bits of ARP opcode. */
                u8 tos; /* IP ToS. */
                u8 ttl; /* IP TTL/hop limit. */
                u8 frag; /* One of OVS_FRAG_TYPE_*. */
            } ip;
        };
        struct {
            __be16 src; /* TCP/UDP/SCTP source port. */
            __be16 dst; /* TCP/UDP/SCTP destination port. */
            __be16 flags; /* TCP flags. */
        } tp;
        union {
            struct {
                struct {
                    __be32 src; /* IP source address. */
                    __be32 dst; /* IP destination address. */
                } addr;
                struct {
                    u8 sha[ETH_ALEN]; /* ARP source hardware address. */
                    u8 tha[ETH_ALEN]; /* ARP target hardware address. */
                } arp;
            } ipv4;
            struct {
                struct {
                    struct in6_addr src; /* IPv6 source address. */
                    struct in6_addr dst; /* IPv6 destination address. */
                } addr;
                __be32 label; /* IPv6 flow label. */
                struct {
                    struct in6_addr target; /* ND target address. */
                    u8 sll[ETH_ALEN]; /* ND source link layer address. */
                    u8 tll[ETH_ALEN]; /* ND target link layer address. */
                } nd;
            } ipv6;
        };
        struct {
            /* Connection tracking fields. */
            u16 zone;
            u32 mark;
            u8 state;
            struct ovs_key_ct_labels labels;
        } ct;
    } __aligned(BITS_PER_LONG/8); /* Ensure that we can do comparisons as longs. */
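The closing comment on the struct, "Ensure that we can do comparisons as longs", refers to comparing two keys one machine word at a time instead of byte by byte. Here is a minimal userspace sketch of that idea. The `mini_key` struct and `mini_key_equal` function are hypothetical and far smaller than sw_flow_key. The sketch uses memcpy to load each word so that it stays within standard C aliasing rules, which is an assumption of this example and not how the kernel code is written.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical reduced key. Aligning the first member to long makes the
 * struct size a multiple of sizeof(long). */
struct mini_key {
    _Alignas(long) uint16_t in_port;
    uint8_t  eth_src[6];
    uint8_t  eth_dst[6];
    uint32_t ipv4_src;
    uint32_t ipv4_dst;
};

_Static_assert(sizeof(struct mini_key) % sizeof(long) == 0,
               "key size must be a whole number of longs");

/* Compare two keys one long at a time. Both keys must have been zeroed
 * with memset before being filled in, so padding bytes compare equal. */
static bool mini_key_equal(const struct mini_key *a, const struct mini_key *b)
{
    const unsigned char *pa = (const unsigned char *)a;
    const unsigned char *pb = (const unsigned char *)b;

    assert(a != NULL && b != NULL);
    for (size_t off = 0; off < sizeof(*a); off += sizeof(long)) {
        unsigned long wa, wb;

        memcpy(&wa, pa + off, sizeof(wa));
        memcpy(&wb, pb + off, sizeof(wb));
        if (wa != wb)
            return false;
    }
    return true;
}
```

The alignment guarantee is what lets the loop step in units of sizeof(long) without a trailing partial word.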

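As you can see, this key is a grab bag: almost any part of a packet can serve as a key for looking up the flow table.

- The tunnel can be used as a key

- At the physical layer, in_port, the ID of the port on which the packet arrived

- At the MAC layer, the source and destination MAC addresses

- At the IP layer, the source and destination IP addresses

- At the transport layer, the source and destination port numbers

- IPv6 fields

So, to match against the flow table in kernel space, ovs_flow_key_extract must first be called to extract the key values from the packet's contents.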
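To make the extraction step concrete, here is a minimal userspace sketch that fills a reduced key from a raw Ethernet frame carrying IPv4 and TCP or UDP. It is not the logic of ovs_flow_key_extract: the `mini_flow_key` struct, `mini_key_extract`, and the constants are hypothetical, VLAN tags, IPv6, ARP, and IP fragments are ignored, and fields are stored in host byte order for readability.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ETH_ALEN 6
#define ETH_HLEN 14
#define MINI_ETHERTYPE_IPV4 0x0800 /* hypothetical names for well-known values */
#define MINI_PROTO_TCP 6
#define MINI_PROTO_UDP 17

/* Hypothetical reduced key; fields are kept in host byte order. */
struct mini_flow_key {
    uint16_t in_port;
    uint8_t  eth_src[ETH_ALEN];
    uint8_t  eth_dst[ETH_ALEN];
    uint16_t eth_type;
    uint8_t  ip_proto;
    uint32_t ipv4_src;
    uint32_t ipv4_dst;
    uint16_t tp_src;
    uint16_t tp_dst;
};

/* Read big-endian (network order) integers from a byte buffer. */
static uint16_t rd16(const uint8_t *p)
{
    return (uint16_t)((p[0] << 8) | p[1]);
}

static uint32_t rd32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Returns 0 on success, -1 if the frame is shorter than an Ethernet header.
 * Layers that are absent or truncated simply leave their fields as zero. */
static int mini_key_extract(const uint8_t *frame, size_t len,
                            uint16_t in_port, struct mini_flow_key *key)
{
    assert(frame != NULL && key != NULL);
    memset(key, 0, sizeof(*key));
    key->in_port = in_port;

    if (len < ETH_HLEN)
        return -1;
    memcpy(key->eth_dst, frame, ETH_ALEN);
    memcpy(key->eth_src, frame + ETH_ALEN, ETH_ALEN);
    key->eth_type = rd16(frame + 12);
    if (key->eth_type != MINI_ETHERTYPE_IPV4)
        return 0;

    const uint8_t *ip = frame + ETH_HLEN;
    size_t ip_len = len - ETH_HLEN;
    if (ip_len < 20 || (ip[0] >> 4) != 4)
        return 0;
    size_t ihl = (size_t)(ip[0] & 0x0f) * 4; /* IPv4 header length in bytes */
    if (ihl < 20 || ihl > ip_len)
        return 0;
    key->ip_proto = ip[9];
    key->ipv4_src = rd32(ip + 12);
    key->ipv4_dst = rd32(ip + 16);

    if (key->ip_proto != MINI_PROTO_TCP && key->ip_proto != MINI_PROTO_UDP)
        return 0;
    if (ip_len - ihl < 4)
        return 0;
    key->tp_src = rd16(ip + ihl);
    key->tp_dst = rd16(ip + ihl + 2);
    return 0;
}
```

Zeroing the key before filling it matters: fields for layers that are not present stay at a known value, so two packets of the same flow always produce identical keys.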

Next, ovs_dp_process_packet is called.

    void ovs_dp_process_packet(struct sk_buff *skb, struct sw_flow_key *key)
    {
        const struct vport *p = OVS_CB(skb)->input_vport;
        struct datapath *dp = p->dp;
        struct sw_flow *flow;
        struct sw_flow_actions *sf_acts;
        struct dp_stats_percpu *stats;
        u64 *stats_counter;
        u32 n_mask_hit;

        stats = this_cpu_ptr(dp->stats_percpu);

        /* Look up flow. */
        flow = ovs_flow_tbl_lookup_stats(&dp->table, key, skb_get_hash(skb),
                                         &n_mask_hit);
        if (unlikely(!flow)) {
            struct dp_upcall_info upcall;
            int error;

            memset(&upcall, 0, sizeof(upcall));
            upcall.cmd = OVS_PACKET_CMD_MISS;
            upcall.portid = ovs_vport_find_upcall_portid(p, skb);
            upcall.mru = OVS_CB(skb)->mru;
            error = ovs_dp_upcall(dp, skb, key, &upcall);
            if (unlikely(error))
                kfree_skb(skb);
            else
                consume_skb(skb);
            stats_counter = &stats->n_missed;
            goto out;
        }

        ovs_flow_stats_update(flow, key->tp.flags, skb);
        sf_acts = rcu_dereference(flow->sf_acts);
        ovs_execute_actions(dp, skb, sf_acts, key);
        stats_counter = &stats->n_hit;

    out:
        /* Update datapath statistics. */
        u64_stats_update_begin(&stats->syncp);
        (*stats_counter)++;
        stats->n_mask_hit += n_mask_hit;
        u64_stats_update_end(&stats->syncp);
    }

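This function first calls ovs_flow_tbl_lookup_stats to look in the kernel flow table for a flow that matches the key. If one is found, the flow from the userspace flow table has already been installed in the kernel and the packet can take the fast path: ovs_execute_actions is called directly to execute the actions associated with the matching flow.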
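The lookup also reports n_mask_hit, which the function adds to the datapath statistics. As far as I understand it, this counts how many masks were tried before the lookup finished. The sketch below illustrates masked matching with such a counter in userspace. It is a linear scan with one mask per flow, standing in for the kernel's hash-based lookup, and every name in it (`mini_key`, `mini_flow`, `mini_tbl_lookup_stats`) is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical reduced key with three fields. */
struct mini_key {
    uint16_t in_port;
    uint32_t ipv4_dst;
    uint16_t tp_dst;
};

/* Hypothetical flow entry: a mask, the pre-masked values to match, and an
 * output port standing in for the flow's actions. */
struct mini_flow {
    struct mini_key mask;  /* 1 bits mark the bits that must match */
    struct mini_key match; /* expected values after masking */
    int out_port;
};

/* A packet key matches when every field equals the expected value once
 * the wildcarded bits have been masked away. */
static int mini_flow_matches(const struct mini_flow *f,
                             const struct mini_key *k)
{
    return (k->in_port & f->mask.in_port) == f->match.in_port &&
           (k->ipv4_dst & f->mask.ipv4_dst) == f->match.ipv4_dst &&
           (k->tp_dst & f->mask.tp_dst) == f->match.tp_dst;
}

/* Return the first matching flow, or NULL on a miss. *n_tried reports how
 * many entries were examined, in the spirit of n_mask_hit. */
static const struct mini_flow *
mini_tbl_lookup_stats(const struct mini_flow *tbl, size_t n,
                      const struct mini_key *key, uint32_t *n_tried)
{
    assert(n_tried != NULL);
    *n_tried = 0;
    for (size_t i = 0; i < n; i++) {
        (*n_tried)++;
        if (mini_flow_matches(&tbl[i], key))
            return &tbl[i];
    }
    return NULL;
}
```

A NULL return here corresponds to the `unlikely(!flow)` branch in ovs_dp_process_packet, where the packet is handed to userspace.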

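If no flow is found, ovs_dp_upcall has to be called so that userspace can look up its flow table. This calls static int queue_userspace_packet(struct datapath *dp, struct sk_buff *skb, const struct sw_flow_key *key, const struct dp_upcall_info *upcall_info)

which in turn calls err = genlmsg_unicast(ovs_dp_get_net(dp), user_skb, upcall_info->portid); to send the message to userspace over netlink. In userspace, threads listen for these messages, and as soon as one arrives, udpif_upcall_handler is triggered.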

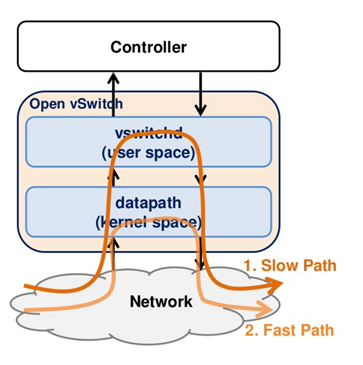

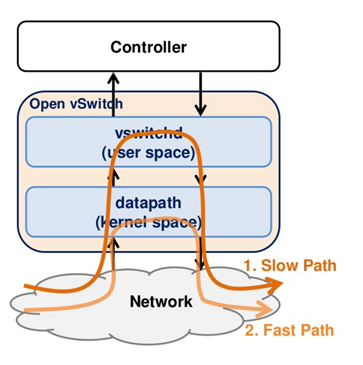

Slow Path & Fast Path

Slow Path:

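When the datapath cannot find a flow rule to handle a packet, vswitchd uses its flow rules to process the packet.

Fast Path:

The flow rule found on the slow path is installed in kernel space and used there to process packets.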

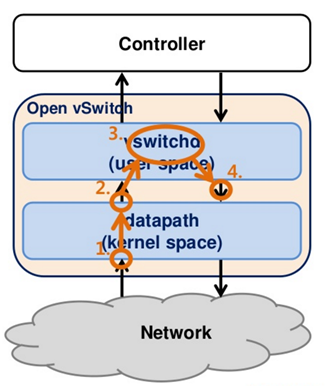

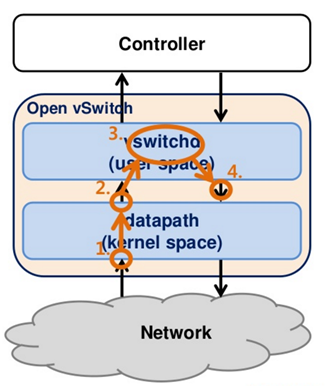

Unknown Packet Processing

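The datapath processes packets using flow rules. If no rule matches, vswitchd processes the packet using its own flow rules.

- A packet received from the device is handed to the previously registered event handler

- After receiving the packet, the handler checks whether it is an unknown packet; if so, it is passed to userspace through an upcall

- vswitchd finds a flow rule for the unknown packet and processes it

- The flow rule is sent down to the datapath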
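The four steps above can be sketched as a small userspace simulation: the first packet of a flow misses in the datapath and takes the slow path, the resulting rule is installed, and the next packet with the same key hits on the fast path. All names here are hypothetical, the forwarding policy in `mini_vswitchd_decide` is made up for the example, and the upcall is modeled as a direct function call, whereas the real one is a netlink message handled asynchronously by vswitchd.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_FLOWS 16

struct mini_key {
    uint16_t in_port;
    uint32_t ipv4_dst;
};

struct mini_flow {
    struct mini_key key;
    int out_port; /* stand-in for the flow's actions */
};

/* Hypothetical datapath: an exact-match flow cache plus hit/miss counters. */
struct mini_datapath {
    struct mini_flow flows[MAX_FLOWS];
    size_t n_flows;
    uint64_t n_hit;
    uint64_t n_missed;
};

/* Stand-in for vswitchd consulting its flow table. The policy is invented:
 * destinations in 10.0.0.0/8 go to port 2, everything else to port 1. */
static int mini_vswitchd_decide(const struct mini_key *key)
{
    return (key->ipv4_dst >> 24) == 10 ? 2 : 1;
}

static const struct mini_flow *dp_lookup(const struct mini_datapath *dp,
                                         const struct mini_key *key)
{
    for (size_t i = 0; i < dp->n_flows; i++) {
        if (dp->flows[i].key.in_port == key->in_port &&
            dp->flows[i].key.ipv4_dst == key->ipv4_dst)
            return &dp->flows[i];
    }
    return NULL;
}

/* Step 4: push the rule chosen by userspace down into the datapath. */
static void dp_install(struct mini_datapath *dp, const struct mini_key *key,
                       int out_port)
{
    assert(dp->n_flows < MAX_FLOWS);
    dp->flows[dp->n_flows].key = *key;
    dp->flows[dp->n_flows].out_port = out_port;
    dp->n_flows++;
}

/* Steps 1 to 3: look the key up, and on a miss ask "userspace" for a
 * decision. Returns the output port chosen for the packet. */
static int dp_process_packet(struct mini_datapath *dp,
                             const struct mini_key *key)
{
    const struct mini_flow *flow = dp_lookup(dp, key);

    if (flow == NULL) {
        int out_port = mini_vswitchd_decide(key); /* slow path */

        dp_install(dp, key, out_port);
        dp->n_missed++;
        return out_port;
    }
    dp->n_hit++; /* fast path */
    return flow->out_port;
}
```

Sending the same key through `dp_process_packet` twice increments n_missed once and n_hit once, which mirrors the n_missed and n_hit counters updated at the end of ovs_dp_process_packet.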