Open vSwitch Handbook (6): QoS

This section covers QoS. QoS settings are usually used together with the policies defined in flows.

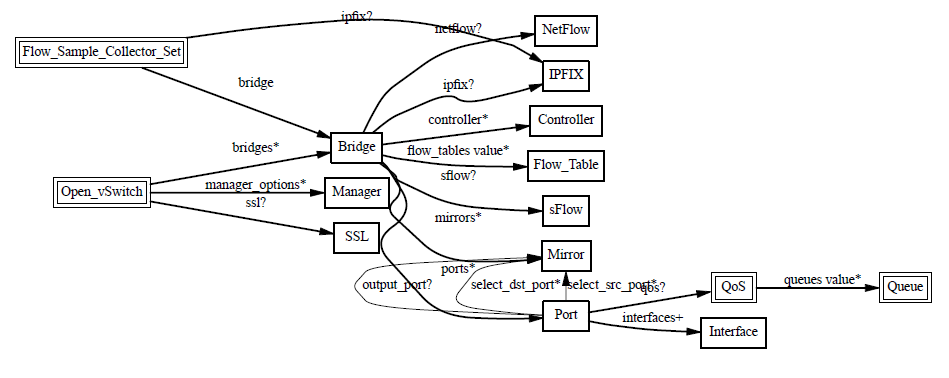

Open vSwitch QoS capabilities

- 1 Interface rate limiting

- 2 Port QoS policy

QoS: Interface rate limiting

- A rate and burst can be assigned to an Interface

- Conceptually similar to Xen’s netback credit scheduler

- # ovs-vsctl set Interface tap0 ingress_policing_rate=100000

- # ovs-vsctl set Interface tap0 ingress_policing_burst=10000

- Simple

- Appears to work as expected
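The two commands above take bare integers, so the units are easy to get wrong: `ingress_policing_rate` is in kbps and `ingress_policing_burst` is in kb, which means the values shown cap tap0 at about 100 Mbps with a 10 Mb burst. As a minimal sketch, the hypothetical helper `policing_cmds` below (not part of Open vSwitch) converts a rate given in Mbps and prints the matching commands instead of running them. Sizing the burst at 10% of the rate only mirrors the ratio used above; it is an assumption, not a recommendation.

```shell
# Hypothetical helper: print (do not execute) the ovs-vsctl commands that
# police an interface at a given rate in Mbps.
# Assumption: burst is 10% of the rate, mirroring the example above.
policing_cmds() {
    iface=$1
    rate_mbps=$2
    rate_kbps=$((rate_mbps * 1000))   # ingress_policing_rate is in kbps
    burst_kb=$((rate_kbps / 10))      # ingress_policing_burst is in kb
    echo "ovs-vsctl set Interface $iface ingress_policing_rate=$rate_kbps"
    echo "ovs-vsctl set Interface $iface ingress_policing_burst=$burst_kb"
}

policing_cmds tap0 100
```

Called with `tap0 100`, the helper prints the same two settings used in the list above.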

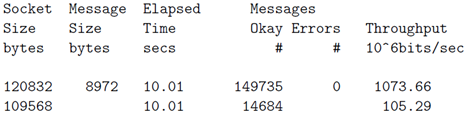

QoS: No interface rate limiting example

# netperf -4 -t UDP_STREAM -H 172.17.50.253 -- -m 8972

UDP UNIDIRECTIONAL SEND TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.17.50.253 (172.17.50.253) port 0 AF_INET

# netperf -4 -t UDP_STREAM -H 172.17.50.253

UDP UNIDIRECTIONAL SEND TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.17.50.253 (172.17.50.253) port 0 AF_INET

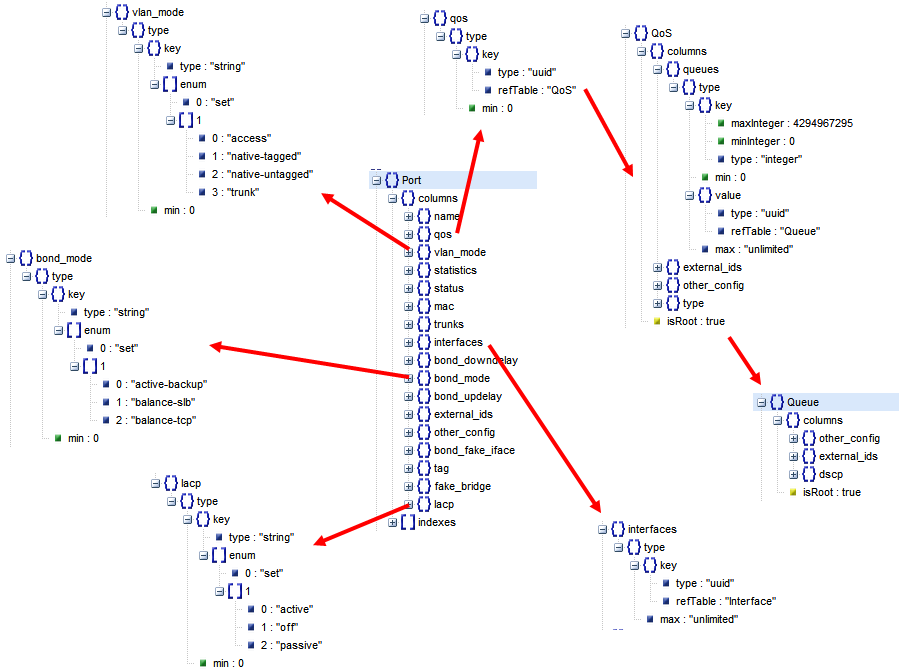

QoS: Port QoS policy

- A port may be assigned one or more QoS policies

- Each QoS policy consists of a class and a qdisc

- Classes and qdiscs use the Linux kernel’s tc implementation

- Only HTB classes are supported at this time

- Each class has a single qdisc associated with it

- The QoS policy (i.e. class) of a flow is chosen by the controller

Create the QoS Policy

# ovs-vsctl set port eth1 qos=@newqos \
  -- --id=@newqos create qos type=linux-htb other-config:max-rate=200000000 queues=0=@q0,1=@q1 \
  -- --id=@q0 create queue other-config:min-rate=100000000 other-config:max-rate=100000000 \
  -- --id=@q1 create queue other-config:min-rate=50000000 other-config:max-rate=50000000
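The command above is a single ovs-vsctl transaction: each `--id=@name` labels a record being created so that other parts of the same command can refer to it, which is how the port points at `@newqos` and the QoS record points at `@q0` and `@q1`. To make that structure easier to see, here is a sketch of a hypothetical helper, `qos_cmd`, that assembles the same command from a port name, an overall max-rate, and one rate per queue. It only prints the command, and it assumes each queue's min-rate equals its max-rate, as in the example.

```shell
# Hypothetical helper: print (do not execute) an ovs-vsctl command that
# creates a linux-htb QoS record with one queue per rate argument.
# Assumption: every queue uses the same value for min-rate and max-rate.
qos_cmd() {
    port=$1
    max_rate=$2
    shift 2
    queues=""
    creates=""
    i=0
    for rate in "$@"; do
        # Build the queues=0=@q0,1=@q1,... map and the matching create clauses.
        queues="${queues:+$queues,}$i=@q$i"
        creates="$creates -- --id=@q$i create queue other-config:min-rate=$rate other-config:max-rate=$rate"
        i=$((i + 1))
    done
    echo "ovs-vsctl set port $port qos=@newqos -- --id=@newqos create qos type=linux-htb other-config:max-rate=$max_rate queues=$queues$creates"
}

qos_cmd eth1 200000000 100000000 50000000
```

Adding a third rate argument would add `2=@q2` to the queue map and a third `create queue` clause.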

Add flow for the Queue (enqueue:port:queue)

# ovs-ofctl add-flow br0 "in_port=2 ip nw_dst=172.17.50.253 idle_timeout=0 actions=enqueue:1:0"

# ovs-ofctl add-flow br0 "in_port=3 ip nw_dst=172.17.50.253 idle_timeout=0 actions=enqueue:1:1"

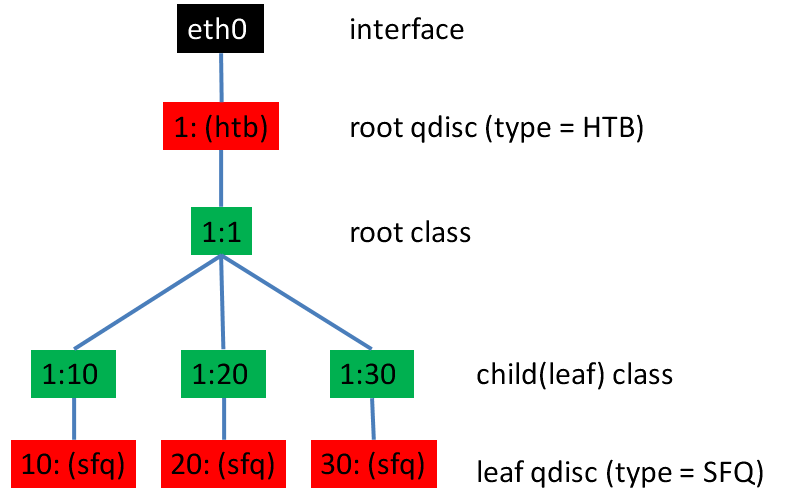

Hierarchical Token Bucket

Example 1:

# tc qdisc add dev eth0 root handle 1: htb default 30

# tc class add dev eth0 parent 1: classid 1:1 htb rate 6mbit burst 15k

# tc class add dev eth0 parent 1:1 classid 1:10 htb rate 5mbit burst 15k

# tc class add dev eth0 parent 1:1 classid 1:20 htb rate 3mbit ceil 6mbit burst 15k

# tc class add dev eth0 parent 1:1 classid 1:30 htb rate 1kbit ceil 6mbit burst 15k

The author then recommends attaching SFQ beneath these classes:

# tc qdisc add dev eth0 parent 1:10 handle 10: sfq perturb 10

# tc qdisc add dev eth0 parent 1:20 handle 20: sfq perturb 10

# tc qdisc add dev eth0 parent 1:30 handle 30: sfq perturb 10

Add the filters which direct traffic to the right classes:

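# U32="tc filter add dev eth0 protocol ip parent 1:0 prio 1 u32"

# $U32 match ip dport 80 0xffff flowid 1:10

# $U32 match ip sport 25 0xffff flowid 1:20

Child classes under the same root class can borrow bandwidth from one another. If you skip the root class and create the three classes directly under the qdisc, they cannot borrow from each other.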

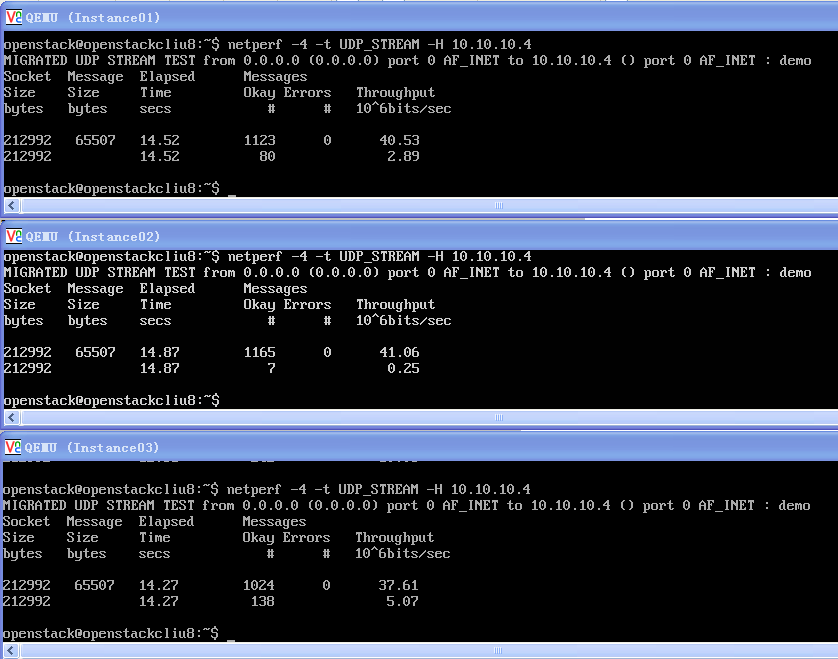

Example 2:

tc qdisc add dev eth0 root handle 1: htb default 12

- This command attaches queue discipline HTB to eth0 and gives it the "handle" 1:.

- This is just a name or identifier with which to refer to it below.

- The default 12 means that any traffic that is not otherwise classified will be assigned to class 1:12.

tc class add dev eth0 parent 1: classid 1:1 htb rate 100kbps ceil 100kbps

tc class add dev eth0 parent 1:1 classid 1:10 htb rate 30kbps ceil 100kbps

tc class add dev eth0 parent 1:1 classid 1:11 htb rate 10kbps ceil 100kbps

tc class add dev eth0 parent 1:1 classid 1:12 htb rate 60kbps ceil 100kbps

The first line creates a "root" class, 1:1 under the qdisc 1:. The definition of a root class is one with the htb qdisc as its parent.

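Child classes under the same root class can borrow bandwidth from one another. If you skip the root class and create the three classes directly under the qdisc, they cannot borrow from each other.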
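One detail worth checking in the class definitions above: in tc, `kbps` means kilobytes per second, not kilobits (that would be `kbit`), so the root class 1:1 is capped at 800 kbit/s. The sketch below uses a made-up helper, `kbps_to_kbit`, to convert the rates and confirm that the three child guarantees add up exactly to the root rate.

```shell
# Hypothetical helper: convert a tc rate given in kbps (kilobytes per second)
# to kbit (kilobits per second).
kbps_to_kbit() {
    echo $(( $1 * 8 ))
}

root=$(kbps_to_kbit 100)              # rate of root class 1:1
total=0
for rate in 30 10 60; do              # rates of classes 1:10, 1:11 and 1:12
    total=$(( total + $(kbps_to_kbit "$rate") ))
done
echo "root=${root}kbit children=${total}kbit"
```

Because the guarantees sum to the root rate, every child can receive its `rate` at the same time, and anything a child leaves unused can be borrowed by its siblings up to their `ceil`.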

tc qdisc add dev eth0 parent 1:10 handle 20: pfifo limit 5

tc qdisc add dev eth0 parent 1:11 handle 30: pfifo limit 5

tc qdisc add dev eth0 parent 1:12 handle 40: sfq perturb 10

tc filter add dev eth0 protocol ip parent 1:0 prio 1 u32 match ip src 1.2.3.4 match ip dport 80 0xffff flowid 1:10

tc filter add dev eth0 protocol ip parent 1:0 prio 1 u32 match ip src 1.2.3.4 flowid 1:11

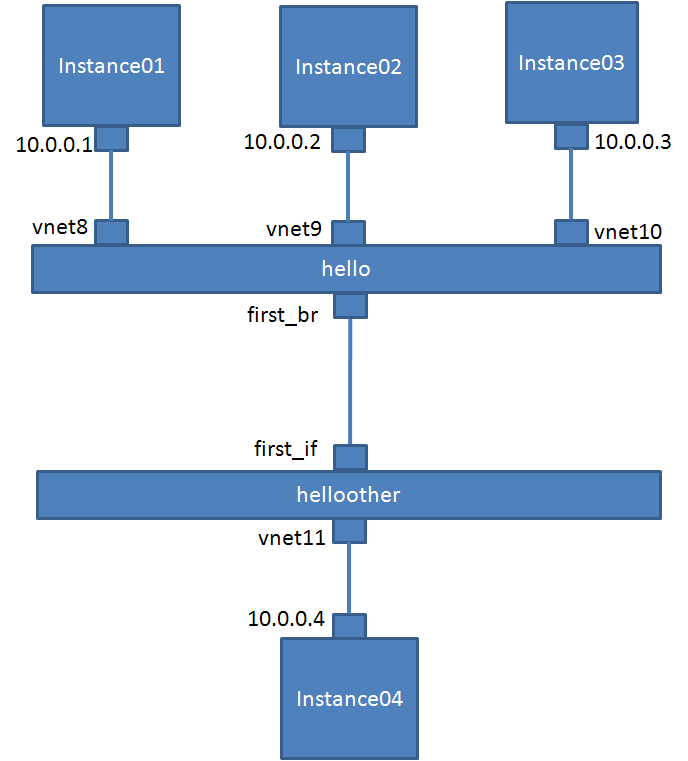

Experiment

sudo iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

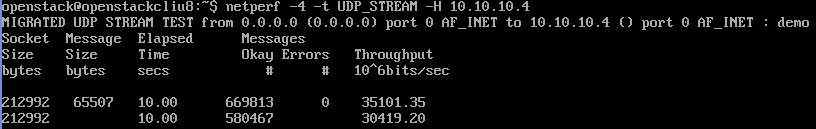

First, we test ingress rate limiting (ingress policing) on Instance04.

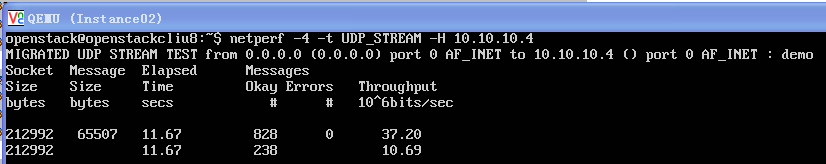

With nothing configured yet, we measure the baseline speed from 10.10.10.1 to 10.10.10.4.

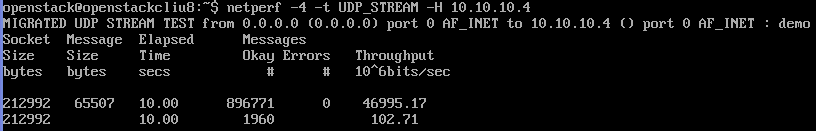

Next, we set the policing rate and burst on first_if:

# ovs-vsctl set Interface first_if ingress_policing_rate=100000

# ovs-vsctl set Interface first_if ingress_policing_burst=10000

Now we remove the ingress rate limit by setting both values back to 0:

$ sudo ovs-vsctl set Interface first_if ingress_policing_burst=0

$ sudo ovs-vsctl set Interface first_if ingress_policing_rate=0

Then we test QoS:

$ sudo ovs-vsctl set port first_br qos=@newqos \
  -- --id=@newqos create qos type=linux-htb other-config:max-rate=10000000 queues=0=@q0,1=@q1,2=@q2 \
  -- --id=@q0 create queue other-config:min-rate=3000000 other-config:max-rate=10000000 \
  -- --id=@q1 create queue other-config:min-rate=1000000 other-config:max-rate=10000000 \
  -- --id=@q2 create queue other-config:min-rate=6000000 other-config:max-rate=10000000

ce7cfd43-8369-4ce6-a7fd-4e0b79307c10

04e7abdc-406b-422c-82bc-3d229a3eb191

be03dd29-0ec2-40f0-a342-95f118322673

834bd432-3314-4d68-94ab-bb233c82082b

$ sudo ovs-ofctl add-flow hello "in_port=1 nw_src=10.10.10.1 actions=enqueue:4:0"

2014-06-23T12:24:54Z|00001|ofp_util|INFO|normalization changed ofp_match, details:

2014-06-23T12:24:54Z|00002|ofp_util|INFO| pre: in_port=1,nw_src=10.10.10.1

2014-06-23T12:24:54Z|00003|ofp_util|INFO|post: in_port=1

$ sudo ovs-ofctl add-flow hello "in_port=2 nw_src=10.10.10.2 actions=enqueue:4:1"

2014-06-23T12:25:21Z|00001|ofp_util|INFO|normalization changed ofp_match, details:

2014-06-23T12:25:21Z|00002|ofp_util|INFO| pre: in_port=2,nw_src=10.10.10.2

2014-06-23T12:25:21Z|00003|ofp_util|INFO|post: in_port=2

$ sudo ovs-ofctl add-flow hello "in_port=3 nw_src=10.10.10.3 actions=enqueue:4:2"

2014-06-23T12:26:44Z|00001|ofp_util|INFO|normalization changed ofp_match, details:

2014-06-23T12:26:44Z|00002|ofp_util|INFO| pre: in_port=3,nw_src=10.10.10.3

2014-06-23T12:26:44Z|00003|ofp_util|INFO|post: in_port=3

$ sudo ovs-ofctl dump-flows hello

NXST_FLOW reply (xid=0x4):

cookie=0x0, duration=23.641s, table=0, n_packets=0, n_bytes=0, idle_age=23, in_port=3 actions=enqueue:4q2

cookie=0x0, duration=133.215s, table=0, n_packets=0, n_bytes=0, idle_age=133, in_port=1 actions=enqueue:4q0

cookie=0x0, duration=107.042s, table=0, n_packets=0, n_bytes=0, idle_age=107, in_port=2 actions=enqueue:4q1

cookie=0x0, duration=8327.633s, table=0, n_packets=2986900, n_bytes=195019185978, idle_age=1419, priority=0 actions=NORMAL
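The INFO messages above show ovs-ofctl dropping `nw_src` from every match: the field can only be matched when the flow also specifies the IP ethertype (`ip`, i.e. `dl_type=0x0800`), and the dump confirms that only `in_port` survived. The flows therefore classify by input port alone. If the source-address match is wanted, one option is to add `ip` to the match, as the earlier br0 example does. A minimal sketch, using a hypothetical helper `enqueue_flow` that only prints the commands:

```shell
# Hypothetical helper: print (do not execute) an ovs-ofctl command whose match
# includes "ip", so that nw_src is kept instead of being normalized away.
enqueue_flow() {
    bridge=$1
    in_port=$2
    src=$3
    out_port=$4
    queue=$5
    echo "ovs-ofctl add-flow $bridge \"in_port=$in_port,ip,nw_src=$src,actions=enqueue:$out_port:$queue\""
}

enqueue_flow hello 1 10.10.10.1 4 0
enqueue_flow hello 2 10.10.10.2 4 1
enqueue_flow hello 3 10.10.10.3 4 2
```

Note that with `ip` in the match, non-IP traffic such as ARP no longer hits these flows and falls through to the `priority=0 actions=NORMAL` entry.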

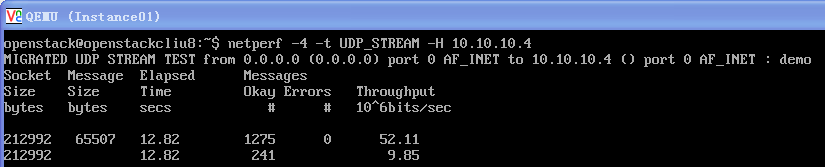

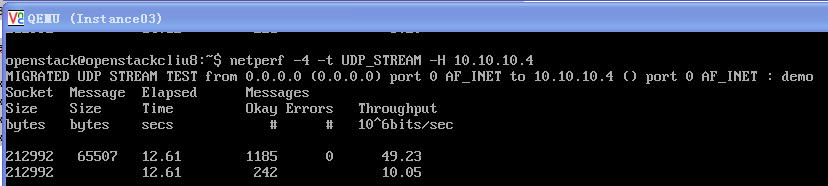

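When we test 10.10.10.1, 10.10.10.2, and 10.10.10.3 to 10.10.10.4 one at a time, each of them can reach the 10M maximum.

When all three run at the same time, the bandwidth is split in the ratio 3:1:6.

So the HTB used by QoS does implement borrowing.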
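The 3:1:6 split follows from the configured `min-rate` values: 3 + 1 + 6 Mbit/s exactly fills the 10 Mbit/s `max-rate` of the QoS record, so under full contention each queue is left with its guarantee, while a lone sender can borrow up to its own `max-rate`. A small sketch of that arithmetic (the helper name `share_percent` is made up for illustration):

```shell
# Hypothetical helper: percentage of the total guaranteed bandwidth that one
# queue's min-rate represents (integer arithmetic).
share_percent() {
    echo $(( $1 * 100 / $2 ))
}

total=$(( 3000000 + 1000000 + 6000000 ))   # sum of the three min-rate values
for min_rate in 3000000 1000000 6000000; do
    echo "min-rate=$min_rate share=$(share_percent "$min_rate" "$total")%"
done
```

The three shares come out as 30%, 10% and 60% of the 10 Mbit/s total, matching the ratio observed in the test.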
