The Basics of Neural Networks

It turns out that when you implement a neural network, there are some techniques that are going to be really important.

In these notes on the basics of neural networks, I want to convey these ideas using logistic regression.

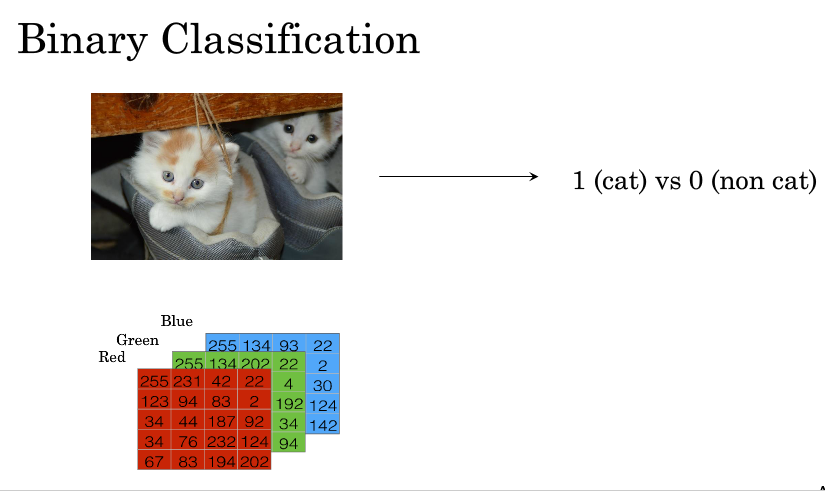

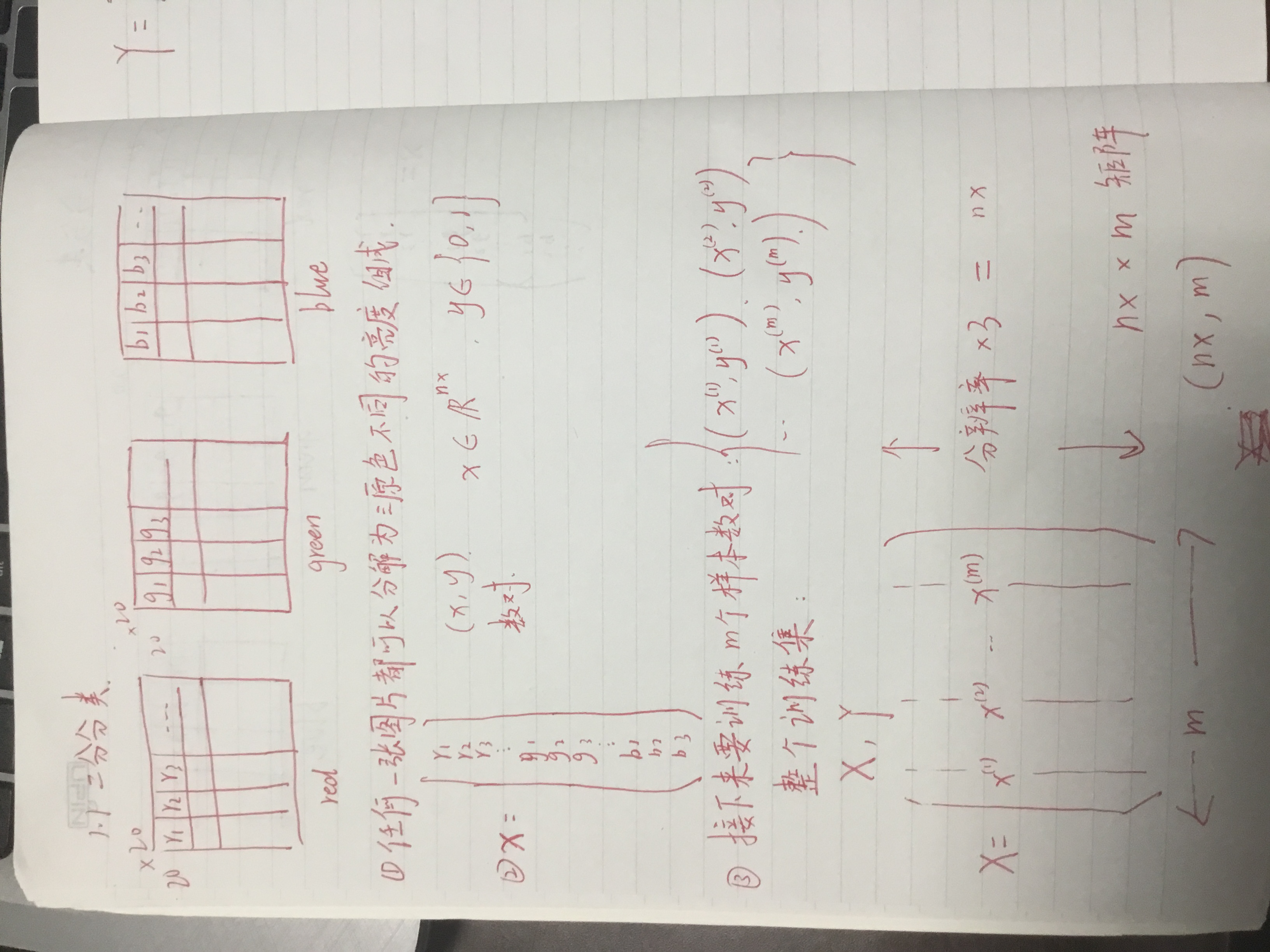

1.1 Logistic regression is an algorithm for binary classification, so let's start by setting up an example of a binary classification problem.

(Introducing the concept of binary classification)

You might have an image as input, such as the one above, and want to output a label that recognizes it. We use y to denote the output label: if the image is a cat, you output 1.

To store an image, your computer stores three separate matrices corresponding to the red, green, and blue color channels of the image. So if the input image is 20 pixels by 20 pixels, there are three 20-by-20 matrices holding the red, green, and blue pixel intensity values for the image.

To turn these pixel intensity values into a feature vector, we unroll all of them into an input feature vector x. We define the feature vector x for this image as follows: take all the pixel values, one after another, until we get one long feature vector listing out all the red, green, and blue pixel intensity values of the image. The total dimension of this vector x is therefore 20 × 20 × 3 = 1200. We write nx for the dimension of the input feature vector x, so here nx = 1200.
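The unrolling step can be sketched in a few lines of NumPy. This is only an illustration: the array `image` is filled with random integers standing in for real pixel intensities, and the channel-by-channel ordering (all red, then all green, then all blue) is one assumed reading of the description above.

```python
import numpy as np

# Hypothetical 20x20 RGB image: random integers stand in for real pixel values.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(20, 20, 3))  # (height, width, channel)

# Move the channel axis first so the red values come first, then green, then blue,
# and unroll everything into a single column vector.
x = image.transpose(2, 0, 1).reshape(-1, 1)

n_x = x.shape[0]  # dimension of the input feature vector
print(x.shape, n_x)
```

Using `reshape(-1, 1)` lets NumPy work out the length itself, so the same line works for other image sizes.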

So in binary classification, our goal is to learn a classifier that takes the feature vector x as input and predicts whether the corresponding label y is 1 or 0, that is, whether this is a cat image or a non-cat image.

X.shape = (nx,m)

Y.shape = (1,m)
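Here m is taken to be the number of training examples: each example becomes one column of X, and its label becomes the matching column of Y. A minimal sketch of building these two arrays, with made-up data and an arbitrary m = 5:

```python
import numpy as np

n_x, m = 1200, 5  # m = 5 is an arbitrary, illustrative number of examples
rng = np.random.default_rng(0)

# Hypothetical training set: m feature vectors of shape (n_x, 1) with 0/1 labels.
examples = [rng.random((n_x, 1)) for _ in range(m)]
labels = [1, 0, 0, 1, 1]

X = np.hstack(examples)             # one column per training example
Y = np.array(labels).reshape(1, m)  # one label per column

print(X.shape, Y.shape)
```

Stacking examples as columns (rather than rows) is the convention these notes follow, and it keeps the later matrix products simple.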

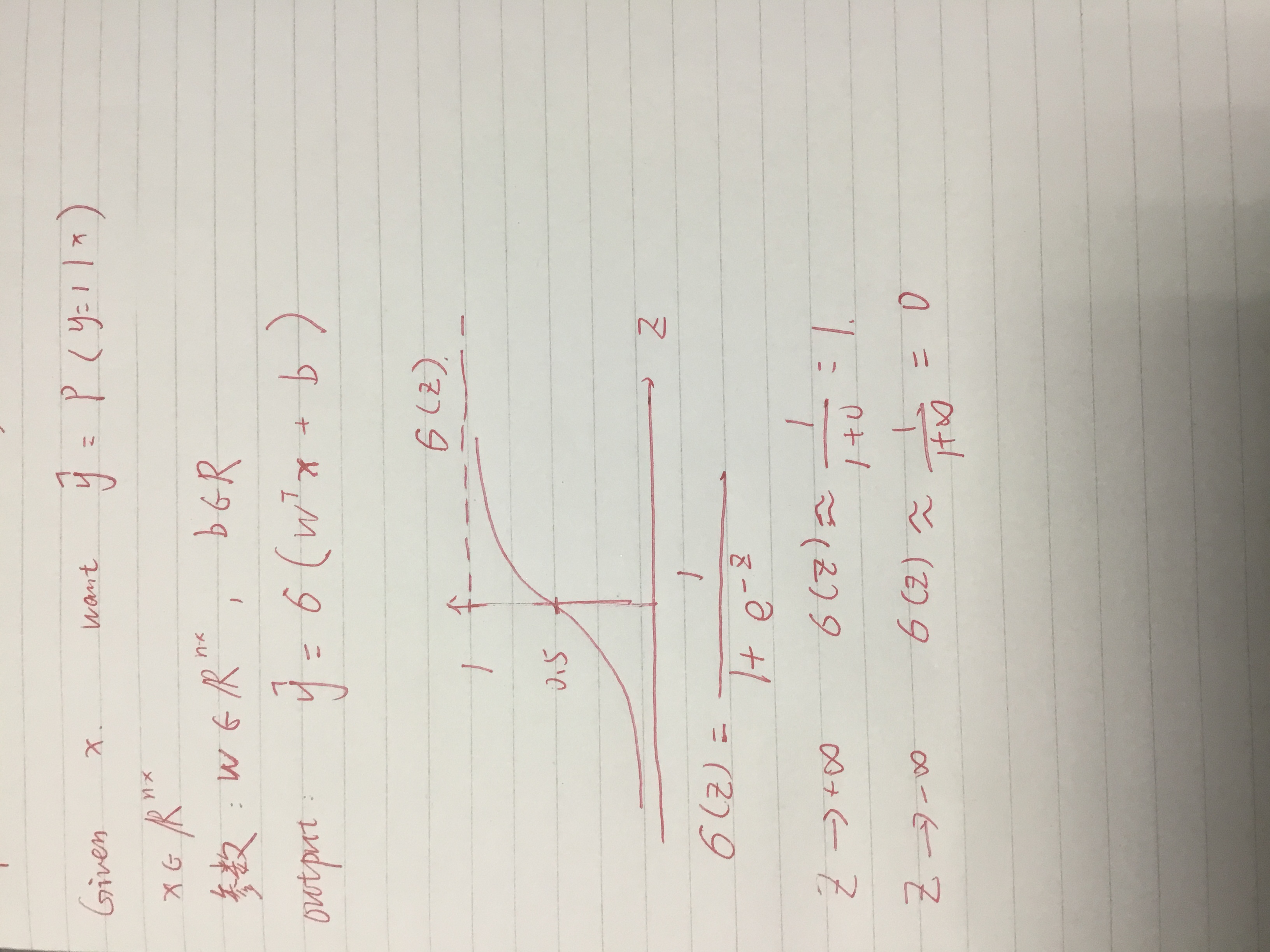

1.2 Logistic Regression: w and b

(The basic regression model, introducing the sigmoid function, its graph, and the basic binary classification computation)

w is an nx-dimensional vector.

b is a real number.

(The key point is training the n-dimensional vector w and the real-valued parameter b)

Given an input x and the parameters w and b, we can generate the output y hat. Since y hat is the estimated chance that y = 1, it should really be between zero and one.

So in logistic regression, instead of outputting the linear quantity w^T x + b directly, the output is y hat = sigmoid(w^T x + b), the sigmoid function applied to this quantity.

This is what the sigmoid function looks like.

It goes smoothly from zero up to one.

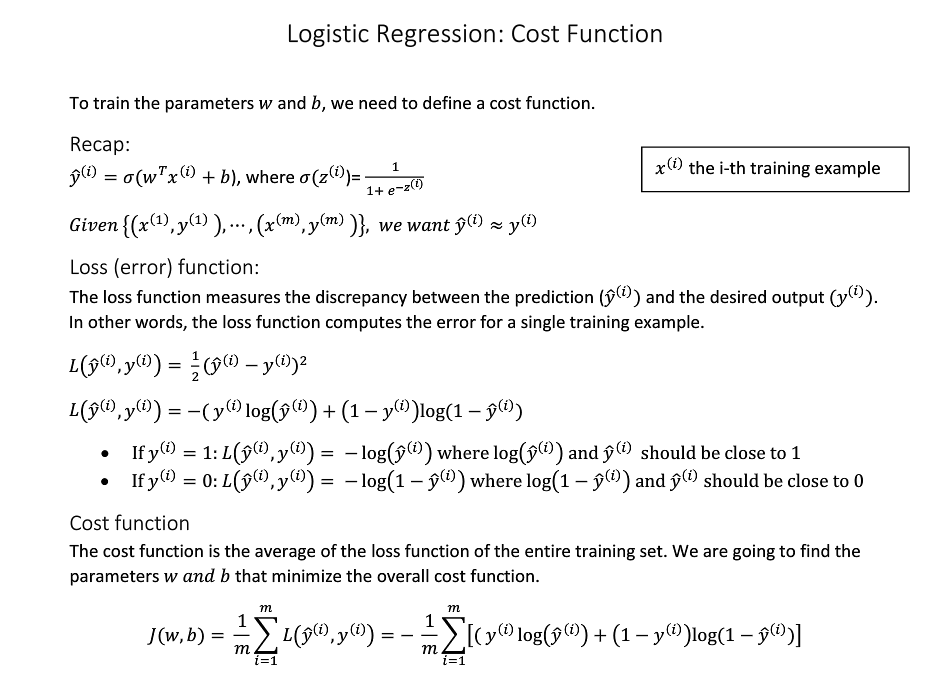

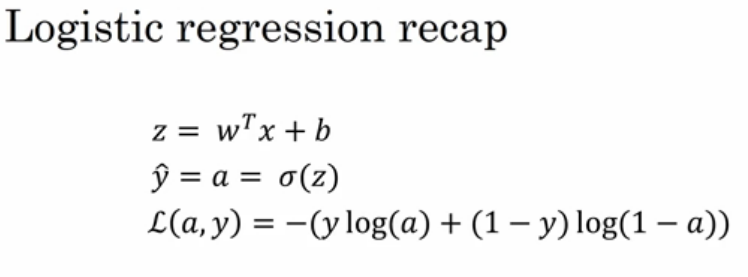
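A short sketch of this computation, assuming the quantity passed to the sigmoid is the linear function w^T x + b (the standard logistic regression form). The function name `predict_proba` and the small dimension used here are illustrative choices, not something from the notes.

```python
import numpy as np

def sigmoid(z):
    """Map any real number (or array) into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(w, b, x):
    """Return y hat = sigmoid(w^T x + b) for a column vector x."""
    z = w.T @ x + b  # shape (1, 1)
    return sigmoid(z)

n_x = 4                 # small illustrative dimension
w = np.zeros((n_x, 1))  # parameters initialised to zero
b = 0.0
x = np.ones((n_x, 1))

y_hat = predict_proba(w, b, x)
print(y_hat)
```

With all parameters at zero the linear part is 0, so the prediction sits exactly in the middle of the sigmoid curve.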

1.3 Logistic Regression: Cost Function

To train the parameters w and b, we need to define a cost function.

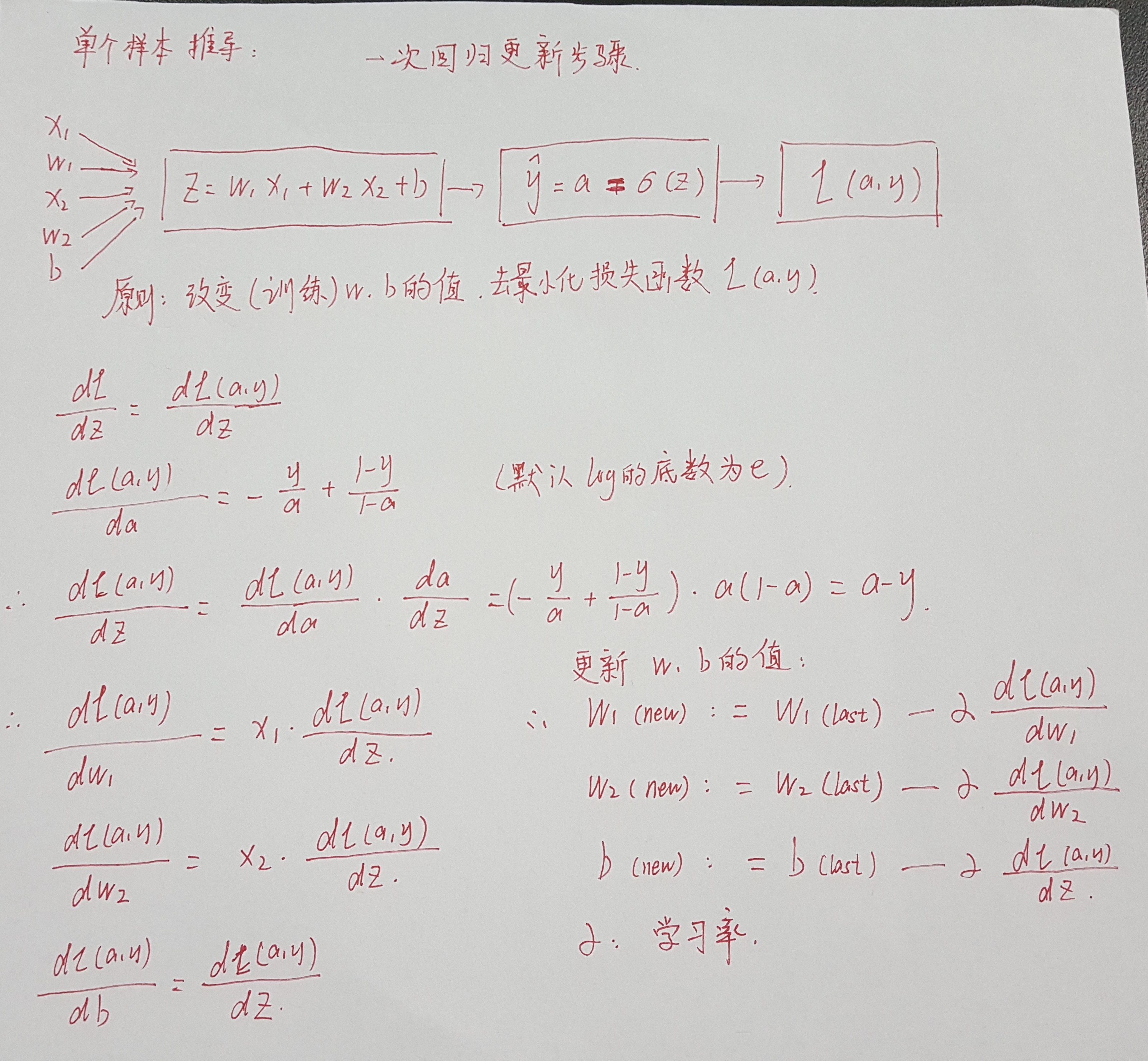
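The formula itself lives in the image, so the sketch below assumes the usual choice for logistic regression: the cross-entropy loss -(y log(y hat) + (1 - y) log(1 - y hat)) per example, averaged over the m examples to give the cost. The function name `cost` and the sample numbers are made up for illustration.

```python
import numpy as np

def cost(Y_hat, Y):
    """Average cross-entropy loss over the m examples (one per column)."""
    m = Y.shape[1]
    losses = -(Y * np.log(Y_hat) + (1 - Y) * np.log(1 - Y_hat))
    return float(np.sum(losses) / m)

Y = np.array([[1, 0, 1]])            # true labels
Y_hat = np.array([[0.9, 0.2, 0.6]])  # hypothetical predictions in (0, 1)

J = cost(Y_hat, Y)
print(J)
```

The cost is small when each prediction is close to its label and grows quickly as a prediction moves toward the wrong end of the interval.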

1.4 Gradient Descent

Gradient descent repeatedly modifies the parameters w and b in order to reduce this loss.

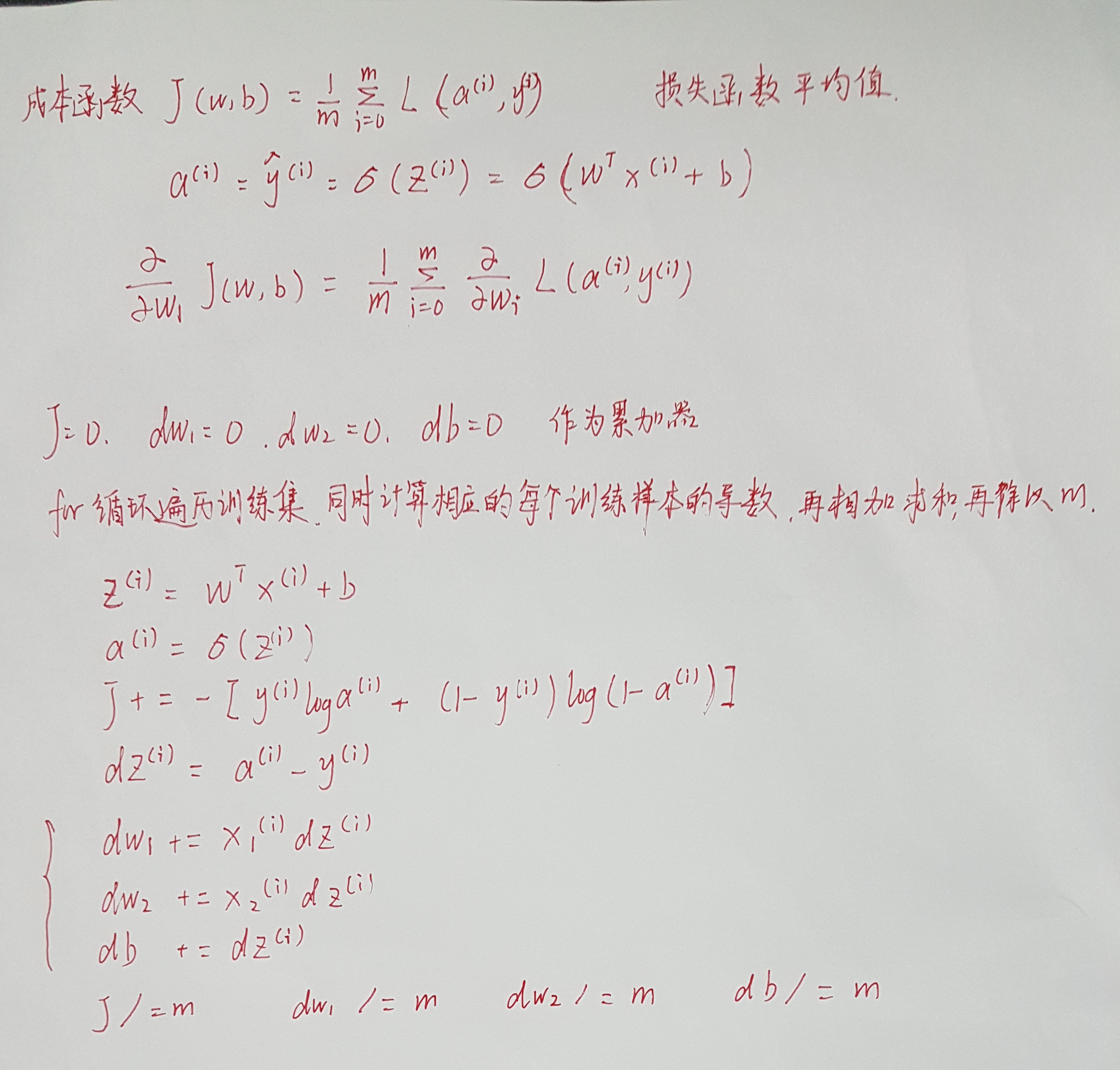
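For a single training example, one update can be sketched as follows. This assumes the cross-entropy loss and a sigmoid output, for which the derivative with respect to z = w^T x + b works out to (y hat - y). The learning rate `alpha`, the helper names, and the tiny example are all illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, b, x, y):
    """Cross-entropy loss on one example."""
    a = sigmoid(w.T @ x + b).item()
    return -(y * np.log(a) + (1 - y) * np.log(1 - a))

def gradient_step(w, b, x, y, alpha):
    """One gradient descent update on a single example (x, y)."""
    a = sigmoid(w.T @ x + b)  # prediction y hat, shape (1, 1)
    dz = a - y                # derivative of the loss with respect to z
    dw = x * dz               # same shape as w
    db = dz.item()
    return w - alpha * dw, b - alpha * db

x = np.array([[1.0], [2.0]])
y = 1
w, b = np.zeros((2, 1)), 0.0

before = loss(w, b, x, y)
w, b = gradient_step(w, b, x, y, alpha=0.1)
after = loss(w, b, x, y)
print(before, after)
```

Comparing the loss before and after the step is a quick sanity check that the update moves the parameters in a helpful direction.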

Now we want to do this for all m training examples. Done naively, this means looping over the examples with explicit for loops.

There is a set of techniques called vectorization that lets you get rid of these explicit for loops in your code.

1.5 Vectorization

(Vectorization: eliminate for loops and simplify the code)
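To make the idea concrete, here is a sketch that computes the averaged gradients over m examples twice: once with an explicit for loop and once with matrix operations. It assumes the cross-entropy loss with a sigmoid output and the X.shape = (nx, m), Y.shape = (1, m) layout used earlier. The function names and random data are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gradients_loop(w, b, X, Y):
    """Average gradients using an explicit for loop over the m examples."""
    n_x, m = X.shape
    dw, db = np.zeros((n_x, 1)), 0.0
    for i in range(m):
        x_i = X[:, [i]]                      # column i, shape (n_x, 1)
        a_i = sigmoid(w.T @ x_i + b).item()  # prediction for example i
        dz_i = a_i - Y[0, i]
        dw += x_i * dz_i
        db += dz_i
    return dw / m, db / m

def gradients_vectorized(w, b, X, Y):
    """Same gradients with matrix operations instead of the loop."""
    m = X.shape[1]
    A = sigmoid(w.T @ X + b)  # all predictions at once, shape (1, m)
    dZ = A - Y
    dw = X @ dZ.T / m         # shape (n_x, 1)
    db = float(np.sum(dZ) / m)
    return dw, db

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 50))
Y = rng.integers(0, 2, size=(1, 50))
w, b = rng.standard_normal((3, 1)), 0.1

dw_loop, db_loop = gradients_loop(w, b, X, Y)
dw_vec, db_vec = gradients_vectorized(w, b, X, Y)
print(np.allclose(dw_loop, dw_vec), np.isclose(db_loop, db_vec))
```

The two versions should agree up to floating-point rounding. The vectorized one is shorter and hands the per-example work to NumPy's compiled routines.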