Spark Source Code Walkthrough 6: Fault-Tolerant Recovery of App, Driver, and Worker

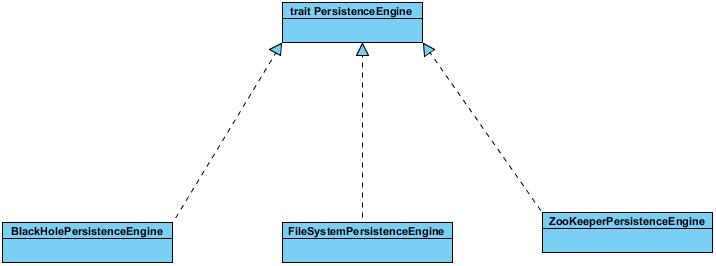

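If the machine hosting an application, or one of the workers, crashes for some reason while the application is running, the information about it disappears as well. To guard against this, Spark introduces an HA mechanism, whose implementation is provided through the trait PersistenceEngine.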

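Spark provides three fault-tolerance mechanisms: ZooKeeper, FileSystem, and BlackHole. Each is implemented by the corresponding *PersistenceEngine class, and all of them extend the trait PersistenceEngine.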
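The contract shared by the three engines can be pictured with a minimal sketch. The trait below is a simplified stand-in, not Spark's actual definition: the names `SketchPersistenceEngine`, `InMemoryEngine`, and `BlackHoleEngine` are hypothetical, and the in-memory map merely takes the place of ZooKeeper or the disk.

```scala
import scala.collection.mutable
import scala.reflect.ClassTag

// Simplified stand-in for Spark's PersistenceEngine trait (hypothetical names).
trait SketchPersistenceEngine {
  def persist(name: String, obj: AnyRef): Unit
  def unpersist(name: String): Unit
  def read[T: ClassTag](prefix: String): Seq[T]
}

// Keeps objects in a map keyed by name; stands in for ZooKeeper or the disk.
class InMemoryEngine extends SketchPersistenceEngine {
  private val store = mutable.LinkedHashMap.empty[String, AnyRef]

  override def persist(name: String, obj: AnyRef): Unit = store(name) = obj

  override def unpersist(name: String): Unit = store.remove(name)

  // Return every stored object of type T whose name starts with the prefix.
  override def read[T: ClassTag](prefix: String): Seq[T] =
    store.iterator.collect {
      case (name, value: T) if name.startsWith(prefix) => value
    }.toList
}

// Discards everything, so nothing can be recovered after a restart.
class BlackHoleEngine extends SketchPersistenceEngine {
  override def persist(name: String, obj: AnyRef): Unit = ()
  override def unpersist(name: String): Unit = ()
  override def read[T: ClassTag](prefix: String): Seq[T] = Nil
}
```

In this sketch, reading by prefix is what separates one kind of object from another, assuming names carry a kind prefix such as `app_` or `worker_`; the black-hole variant always reads back an empty sequence.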

1. ZooKeeperPersistenceEngine is built on ZooKeeper, a distributed coordination system.

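It first reads the configuration spark.deploy.zookeeper.dir (defaulting to /spark when unset) and appends /master_status to obtain the working directory. It then creates a zk client from the configuration spark.deploy.zookeeper.url. Finally, it uses these two values (zk, dir) to create the working directory. The persist method serializes an app, driver, or worker object; unpersist deletes the saved object; read deserializes the entries that the zk client returns for the given prefix.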

    private val WORKING_DIR = conf.get("spark.deploy.zookeeper.dir", "/spark") + "/master_status"
    private val zk: CuratorFramework = SparkCuratorUtil.newClient(conf)

    SparkCuratorUtil.mkdir(zk, WORKING_DIR)

    override def persist(name: String, obj: Object): Unit = {
      serializeIntoFile(WORKING_DIR + "/" + name, obj)
    }

    override def unpersist(name: String): Unit = {
      zk.delete().forPath(WORKING_DIR + "/" + name)
    }

    override def read[T: ClassTag](prefix: String): Seq[T] = {
      val file = zk.getChildren.forPath(WORKING_DIR).filter(_.startsWith(prefix))
      file.map(deserializeFromFile[T]).flatten
    }

2. FileSystemPersistenceEngine saves each app and worker as a separate file on disk. Its persist, unpersist, and read methods behave the same as those described above.
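The one-file-per-object idea can be sketched as follows. This is an illustration only, not Spark's implementation: the class name `FileEngineSketch` is hypothetical, and plain Java serialization is assumed here in place of whatever serializer Spark is configured with.

```scala
import java.io.{File, FileInputStream, FileOutputStream, ObjectInputStream, ObjectOutputStream}

// Hypothetical sketch: one file per persisted object, using plain Java serialization.
class FileEngineSketch(dir: File) {
  dir.mkdirs()

  // Write the object to a file named after it inside the recovery directory.
  def persist(name: String, obj: java.io.Serializable): Unit = {
    val out = new ObjectOutputStream(new FileOutputStream(new File(dir, name)))
    try out.writeObject(obj) finally out.close()
  }

  // Remove the file that holds the object.
  def unpersist(name: String): Unit = {
    new File(dir, name).delete()
  }

  // Deserialize every file whose name starts with the prefix, in name order.
  def read[T](prefix: String): Seq[T] = {
    val files = Option(dir.listFiles()).getOrElse(Array.empty[File])
    files.filter(_.getName.startsWith(prefix)).sortBy(_.getName).toList.map { f =>
      val in = new ObjectInputStream(new FileInputStream(f))
      try in.readObject().asInstanceOf[T] finally in.close()
    }
  }
}
```

Because each object lives in its own file, removing one app or worker in this sketch touches only that file and leaves the rest of the saved state alone.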

3. BlackHolePersistenceEngine stores no app or worker information, which means there is no fault tolerance at all.

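Which of these three PersistenceEngine implementations Spark uses is determined by the configuration spark.deploy.recoveryMode. The default is NONE, which selects BlackHolePersistenceEngine. The selection code also has a CUSTOM branch, which loads a factory class named by spark.deploy.recoveryMode.factory.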

    val (persistenceEngine_, leaderElectionAgent_) = RECOVERY_MODE match {
      case "ZOOKEEPER" =>
        logInfo("Persisting recovery state to ZooKeeper")
        val zkFactory =
          new ZooKeeperRecoveryModeFactory(conf, SerializationExtension(context.system))
        (zkFactory.createPersistenceEngine(), zkFactory.createLeaderElectionAgent(this))
      case "FILESYSTEM" =>
        val fsFactory =
          new FileSystemRecoveryModeFactory(conf, SerializationExtension(context.system))
        (fsFactory.createPersistenceEngine(), fsFactory.createLeaderElectionAgent(this))
      case "CUSTOM" =>
        val clazz = Class.forName(conf.get("spark.deploy.recoveryMode.factory"))
        val factory = clazz.getConstructor(conf.getClass, Serialization.getClass)
          .newInstance(conf, SerializationExtension(context.system))
          .asInstanceOf[StandaloneRecoveryModeFactory]
        (factory.createPersistenceEngine(), factory.createLeaderElectionAgent(this))
      case _ =>
        (new BlackHolePersistenceEngine(), new MonarchyLeaderAgent(this))
    }
    persistenceEngine = persistenceEngine_
    leaderElectionAgent = leaderElectionAgent_
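The fall-through behavior of this match is easy to miss, so here is a simplified, hypothetical model of it. `RecoveryModeSketch` and `engineFor` are made-up names; the function returns engine names as strings instead of constructing real engines, and it assumes the mode string is compared exactly as written, with no case normalization.

```scala
// Hypothetical, simplified model of the selection logic shown above.
object RecoveryModeSketch {
  def engineFor(conf: Map[String, String]): String =
    conf.getOrElse("spark.deploy.recoveryMode", "NONE") match {
      case "ZOOKEEPER"  => "ZooKeeperPersistenceEngine"
      case "FILESYSTEM" => "FileSystemPersistenceEngine"
      case "CUSTOM"     =>
        // The real code instantiates this factory class by reflection.
        "engine created by " + conf("spark.deploy.recoveryMode.factory")
      case _            => "BlackHolePersistenceEngine" // NONE or any unknown value
    }
}
```

Under that assumption, any value other than the three recognized strings, including a lowercase `zookeeper`, ends up in the default branch and therefore gets no persistence.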
