Spark Source Code Walkthrough 3: A Closer Look at How the Scripts Call Each Other

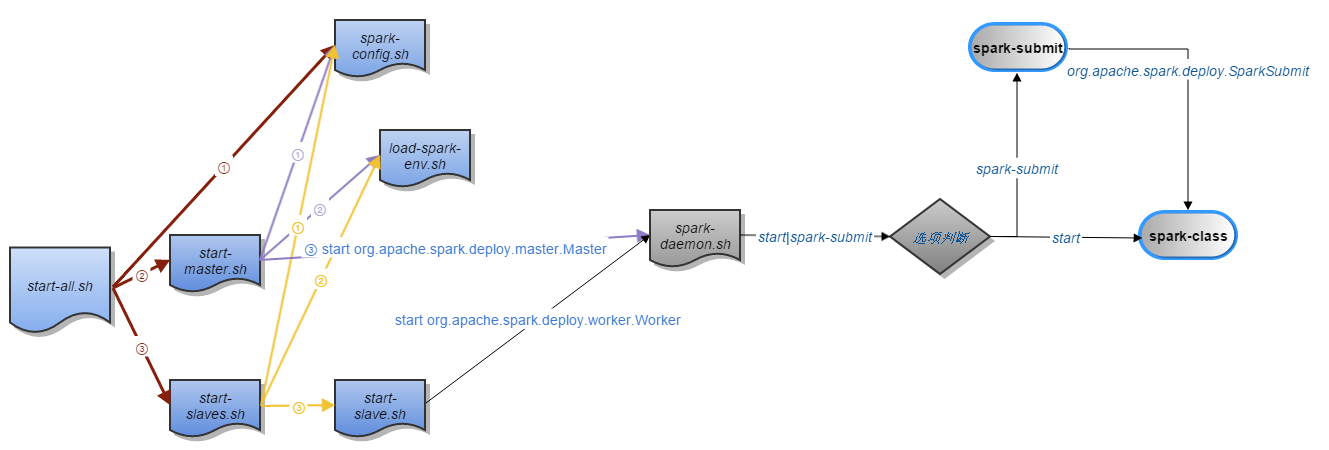

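The earlier article, "Spark Source Code Walkthrough 1: Starting from the Spark Startup Scripts", described in detail what each Spark startup script does. The diagram in this article shows how those scripts call one another.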

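The diagram makes the end of every call chain clear: the scripts pass Master, Worker, or SparkSubmit as an argument to spark-class, which then invokes the java command to start the process.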
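
To make the role of that argument concrete, here is a minimal sketch that maps each of the three roles to the fully qualified main class that ends up on the java command line. The function name main_class_for and the lowercase role keys are hypothetical and exist only for this illustration; the real scripts pass the class name to spark-class directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): map a role name to the
# main class that spark-class would receive as its first argument.
main_class_for() {
  case "$1" in
    master) echo "org.apache.spark.deploy.master.Master" ;;
    worker) echo "org.apache.spark.deploy.worker.Worker" ;;
    submit) echo "org.apache.spark.deploy.SparkSubmit" ;;
    *)
      echo "unknown role: $1" >&2
      return 1
      ;;
  esac
}

main_class_for master
```

Whatever the entry script is, the class name becomes the first positional argument of spark-class, and everything after it is forwarded to that class's main method.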

The following code from spark-class locates the java binary:

    # Find the java binary
    if [ -n "${JAVA_HOME}" ]; then
      RUNNER="${JAVA_HOME}/bin/java"
    else
      if [ `command -v java` ]; then
        RUNNER="java"
      else
        echo "JAVA_HOME is not set" >&2
        exit 1
      fi
    fi
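
The same lookup order (JAVA_HOME first, then the PATH) can be sketched as a small function that is easy to try in isolation. The name find_java_runner is hypothetical and not part of Spark; the sketch also tests the exit status of command -v instead of its output, which is an assumption about what is more robust when the java path contains spaces.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): print the java launcher to use,
# preferring JAVA_HOME and falling back to a java found on the PATH.
find_java_runner() {
  if [ -n "${JAVA_HOME:-}" ]; then
    printf '%s\n' "${JAVA_HOME}/bin/java"
  elif command -v java >/dev/null 2>&1; then
    printf '%s\n' "java"
  else
    # Same message as the original snippet, sent to stderr.
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
}
```

A caller could then write RUNNER="$(find_java_runner)" || exit 1 to get the same behavior as the original block.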

Once the java binary has been found, the script invokes the java command in the form: java [-options] class [args...]

    if [ -n "$SPARK_SUBMIT_BOOTSTRAP_DRIVER" ]; then
      # This is used only if the properties file actually contains these special configs
      # Export the environment variables needed by SparkSubmitDriverBootstrapper
      export RUNNER
      export CLASSPATH
      export JAVA_OPTS
      export OUR_JAVA_MEM
      export SPARK_CLASS=1
      shift # Ignore main class (org.apache.spark.deploy.SparkSubmit) and use our own
      exec "$RUNNER" org.apache.spark.deploy.SparkSubmitDriverBootstrapper "$@"
    else
      # Note: The format of this command is closely echoed in SparkSubmitDriverBootstrapper.scala
      if [ -n "$SPARK_PRINT_LAUNCH_COMMAND" ]; then
        echo -n "Spark Command: " 1>&2
        echo "$RUNNER" -cp "$CLASSPATH" $JAVA_OPTS "$@" 1>&2
        echo -e "========================================\n" 1>&2
      fi
      exec "$RUNNER" -cp "$CLASSPATH" $JAVA_OPTS "$@"
    fi
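
To see what the final command line looks like without actually starting a JVM, the sketch below assembles it the same way as the else branch above and prints it. The values of RUNNER, CLASSPATH, and JAVA_OPTS are made-up placeholders, and describe_launch is a hypothetical name; only the shape of the command is taken from the snippet.

```shell
#!/usr/bin/env bash
# Placeholder values for illustration only; the real script computes these.
RUNNER="java"
CLASSPATH="/opt/spark/conf:/opt/spark/lib/*"
JAVA_OPTS="-Xms512m -Xmx512m"

# Hypothetical helper (not part of Spark): print the command that would be exec'ed.
describe_launch() {
  # $JAVA_OPTS is left unquoted on purpose so that it splits into
  # separate options, matching the original snippet.
  echo "$RUNNER" -cp "$CLASSPATH" $JAVA_OPTS "$@"
}

describe_launch org.apache.spark.deploy.master.Master --port 7077
```

With the real script, setting SPARK_PRINT_LAUNCH_COMMAND to any non-empty value prints the equivalent line to stderr just before exec replaces the shell with the java process.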
