Setting Up a Hadoop NameNode HA Cluster

For setting up a Hadoop cluster with a single (non-HA) NameNode, see http://www.cnblogs.com/kisf/p/7456290.html

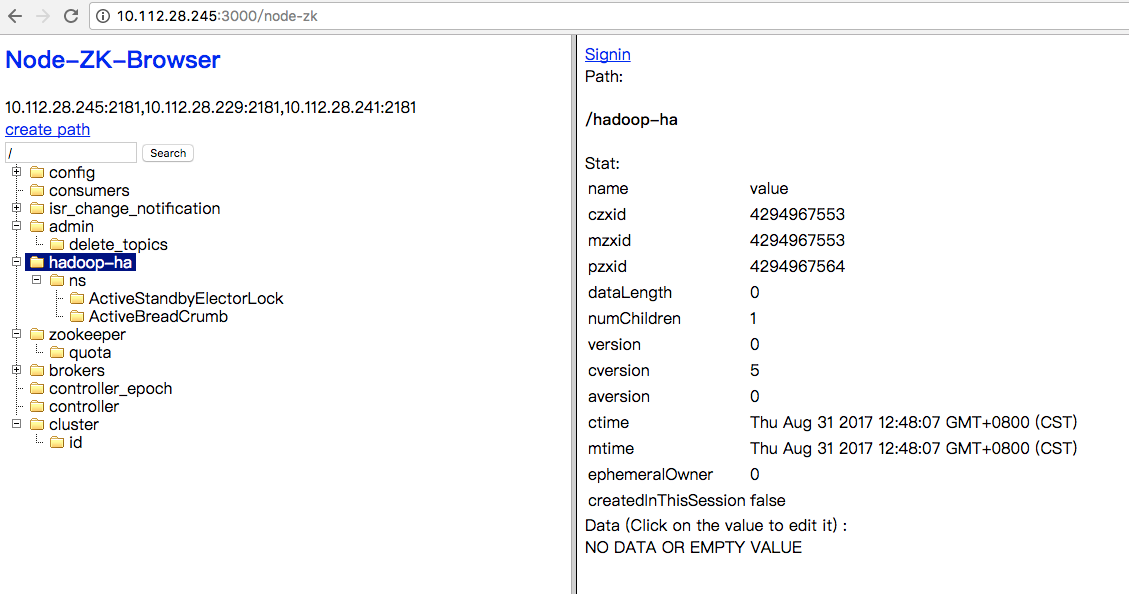

An HA cluster requires ZooKeeper (zk). For ZooKeeper setup, see http://www.cnblogs.com/kisf/p/7357184.html and for a visual management tool for ZooKeeper, see http://www.cnblogs.com/kisf/p/7365690.html

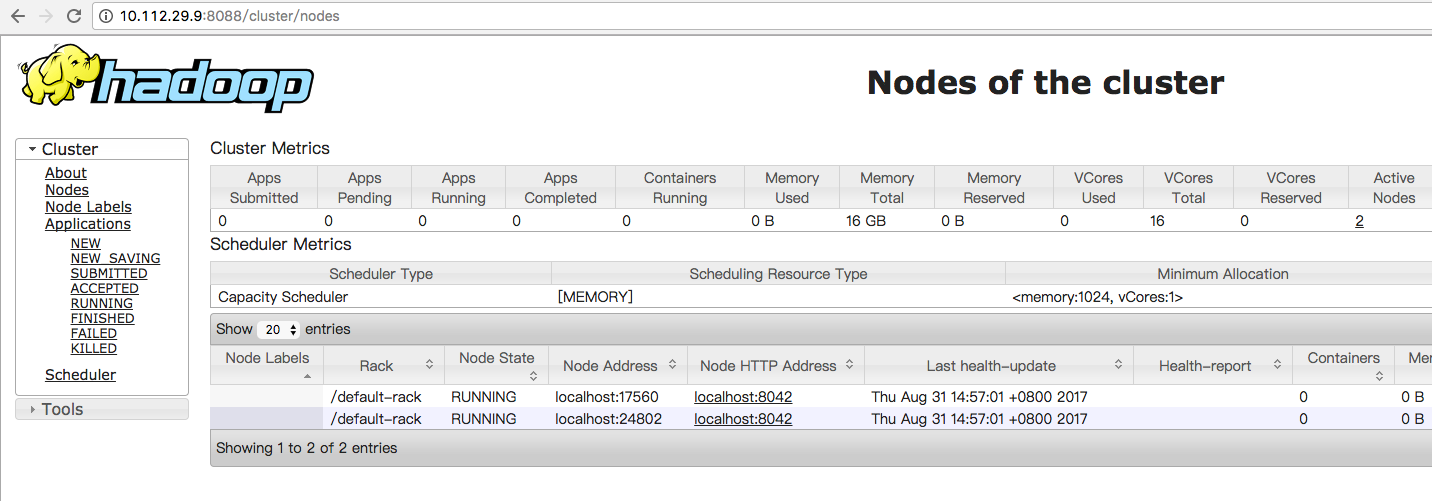

| hostname | IP | Installed software | Running processes |
| --- | --- | --- | --- |
| master1 | 10.112.29.9 | jdk, hadoop | NameNode, ResourceManager, JournalNode, DFSZKFailoverController |
| master2, slave1 | 10.112.29.10 | jdk, hadoop | NameNode, JournalNode, DFSZKFailoverController, DataNode, NodeManager |
| slave2 | 10.112.28.237 | jdk, hadoop | JournalNode, DataNode, NodeManager |

1. Edit /etc/hosts so that it is identical on all three machines.

vim /etc/hosts

10.112.29.9 master1

10.112.29.10 master2

10.112.29.10 slave1

10.112.28.237 slave2

10.112.28.245 zk1

10.112.28.229 zk2

10.112.28.241 zk3
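To confirm that a machine's hosts file really carries every name used in this setup, a small check like the following can help. This is only a sketch: the helper name `missing_hosts` is hypothetical, and it does nothing more than look for each name among the hostname fields of the file.

```shell
# missing_hosts FILE NAME...
# Prints each NAME that does not appear as a hostname or alias in FILE.
# Hypothetical helper for illustration; text after "#" is ignored.
missing_hosts() (
  file=$1
  shift
  for name in "$@"; do
    # Field 1 of a hosts line is the IP; fields 2..NF are hostnames.
    if ! awk -v n="$name" '
        { sub(/#.*/, "") }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
      echo "$name"
    fi
  done
)
```

Running `missing_hosts /etc/hosts master1 master2 slave1 slave2 zk1 zk2 zk3` on each machine should print nothing when all entries are present.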

2. Edit core-site.xml, hdfs-site.xml, and yarn-site.xml. mapred-site.xml stays unchanged.

core-site.xml

<configuration>

<!-- Set the HDFS nameservice to ns -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns</value>

</property>

<!-- Directory where Hadoop stores the files it generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>file:/xxx/soft/hadoop-2.7.3/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<!-- Set the HDFS nameservice to ns; must match the value in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ns</value>

</property>

<!-- ns has two NameNodes: nn1 and nn2 -->

<property>

<name>dfs.ha.namenodes.ns</name>

<value>nn1,nn2</value>

</property>

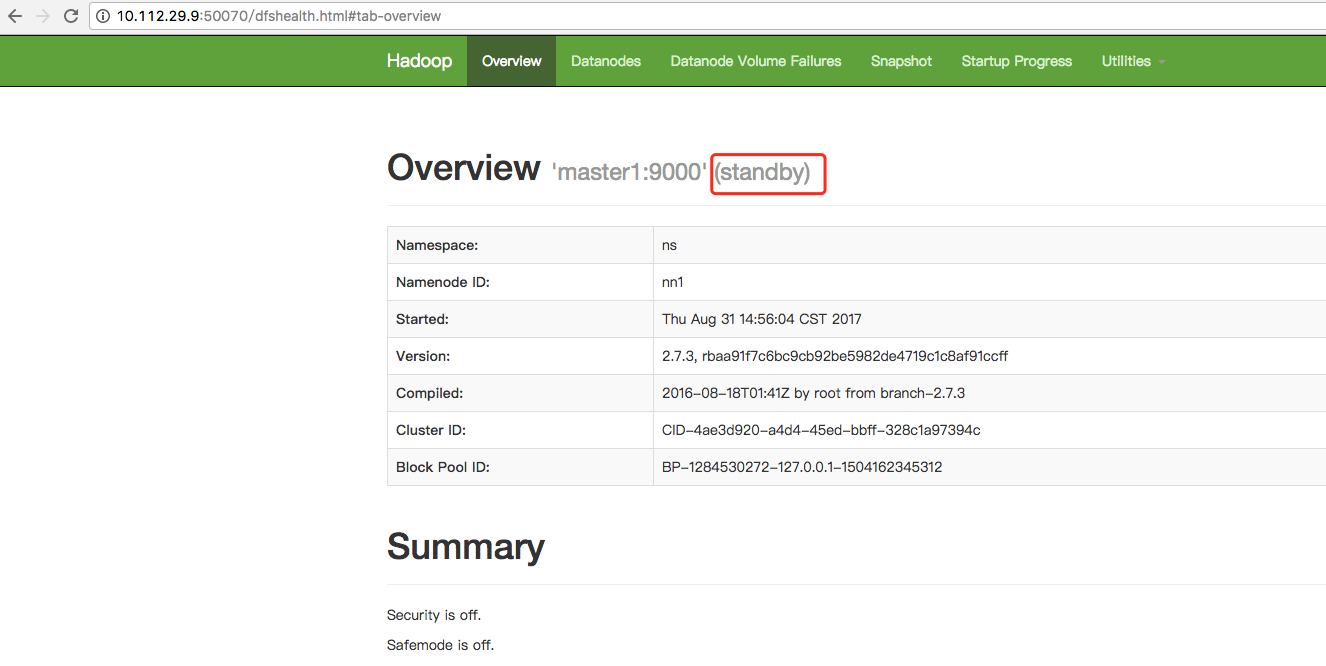

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn1</name>

<value>master1:9000</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.ns.nn1</name>

<value>master1:50070</value>

</property>

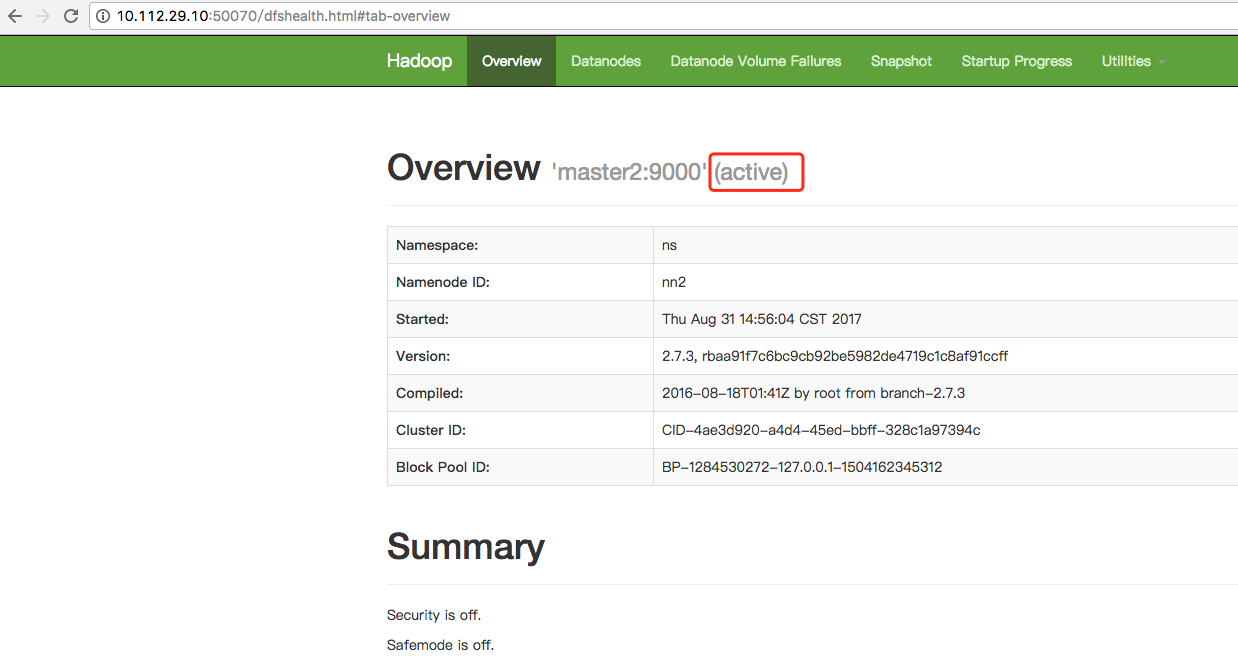

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn2</name>

<value>master2:9000</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.ns.nn2</name>

<value>master2:50070</value>

</property>

<!-- Where the NameNode's shared edit log is stored on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master1:8485;slave1:8485;slave2:8485/ns</value>

</property>

<!-- Local disk directory where each JournalNode stores its data -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/xxx/soft/hadoop-2.7.3/journal</value>

</property>

<!-- Enable automatic failover when a NameNode fails -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Failover proxy provider class that clients use to locate the active NameNode -->

<property>

<name>dfs.client.failover.proxy.provider.ns</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing method. If SSH listens on the default port 22, sshfence alone is sufficient as the value -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- The fencing method requires passwordless SSH login; this is the private key it uses -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- Directory where the NameNode stores its metadata -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/xxx/soft/hadoop-2.7.3/tmp/name</value>

</property>

<!-- HDFS replication factor -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Directory where the DataNode stores its blocks -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/xxx/soft/hadoop-2.7.3/tmp/data</value>

</property>

<!-- Enable WebHDFS (REST API) on the NameNodes and DataNodes; optional -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- Node on which the ResourceManager runs -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master1</value>

</property>

<!-- Reducers fetch data through the mapreduce_shuffle auxiliary service -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
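Once the three files are saved, the values Hadoop actually picks up can be read back with `hdfs getconf -confKey`. The wrapper below is a minimal sketch: `print_conf` is a hypothetical helper name, and it assumes `hdfs` is on the PATH (this post otherwise calls it as ./bin/hdfs from the install directory).

```shell
# print_conf KEY...
# Prints "KEY = VALUE" for each key, where VALUE is whatever
# `hdfs getconf -confKey KEY` reports from the local configuration files.
print_conf() (
  for key in "$@"; do
    printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
  done
)
```

For example, `print_conf fs.defaultFS dfs.nameservices dfs.ha.namenodes.ns` should echo the values set in core-site.xml and hdfs-site.xml; a mismatch usually points to a typo or to a file edited in the wrong directory.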

3. Copy /xxx/soft/hadoop-2.7.3 to the other machines with scp. Be sure to clean out the files under logs and tmp.
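One possible way to combine the copy and the cleanup is sketched below. Note that it swaps in rsync for the scp used in this post, because rsync can skip the contents of logs and tmp in the same pass. The function name `push_hadoop` is hypothetical, and `/xxx` is the post's own placeholder path.

```shell
# Install path as written in this post ("/xxx" is the post's placeholder).
HADOOP_DIR=/xxx/soft/hadoop-2.7.3

# push_hadoop HOST...
# Copies the install directory to each HOST, skipping the contents of the
# top-level logs/ and tmp/ directories so no stale state travels along.
# Assumes rsync is installed on both ends and SSH login works.
push_hadoop() (
  for host in "$@"; do
    rsync -a --exclude '/logs/*' --exclude '/tmp/*' \
      "$HADOOP_DIR/" "$host:$HADOOP_DIR/"
  done
)
```

From master1 this would be called as `push_hadoop master2 slave2`. Files already present under logs or tmp on the target machines are not removed by this sketch.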

4. Start the cluster

(1) Format the ZKFC state in ZooKeeper

./bin/hdfs zkfc -formatZK

(2) Format the NameNode. Before formatting, start a JournalNode on each of master1, slave1, and slave2; if they are not running, the format command fails with an error. (To start a single daemon, use hadoop-daemon.sh start xxx.)

./sbin/hadoop-daemon.sh start journalnode
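Instead of logging in to each machine by hand, the same command can be sent over SSH in a loop. This is a sketch under assumptions: `start_journalnodes` is a hypothetical name, passwordless SSH from the current machine is assumed to work, and the install path is the post's placeholder.

```shell
HADOOP_DIR=/xxx/soft/hadoop-2.7.3   # install path as written in this post

# start_journalnodes HOST...
# Runs the JournalNode start command from this post on each HOST over SSH.
start_journalnodes() (
  for host in "$@"; do
    ssh "$host" "cd $HADOOP_DIR && ./sbin/hadoop-daemon.sh start journalnode"
  done
)
```

Called as `start_journalnodes master1 slave1 slave2`, it should leave one JournalNode process on each machine, which can be confirmed with jps before formatting the NameNode.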

Format the NameNode on master1:

./bin/hdfs namenode -format ns

Copy ./tmp to master2 so that the second NameNode starts from the same formatted metadata:

scp -r ./tmp/ master2:/xxx/soft/hadoop-2.7.3/

(3) Start HDFS (including the NameNodes) and YARN

./sbin/start-dfs.sh

./sbin/start-yarn.sh

5. Check the running processes

[root@vm-10-112-29-9 hadoop-2.7.3]# jps

13349 NameNode

13704 DFSZKFailoverController

13018 JournalNode

14108 Jps

13836 ResourceManager

[root@vm-10-112-29-10 hadoop-2.7.3]# jps

31412 NodeManager

30566 JournalNode

31174 DataNode

31576 Jps

31307 DFSZKFailoverController

31069 NameNode

[root@vm-10-112-28-237 hadoop-2.7.3]# jps

27482 Jps

27338 NodeManager

27180 DataNode

26686 JournalNode
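Comparing jps output against the table at the top of this post by eye is error-prone, so a small filter can do it. The sketch below is illustrative only; `missing_daemons` is a hypothetical helper that reads jps output on stdin and lists the expected daemons that are not running.

```shell
# missing_daemons NAME...
# Reads `jps` output on stdin and prints each expected daemon NAME
# that does not appear in it.
missing_daemons() (
  # The second column of jps output is the process (class) name.
  running=$(awk '{ print $2 }')
  for name in "$@"; do
    printf '%s\n' "$running" | grep -Fqx "$name" || echo "$name"
  done
)
```

On master1, for instance, `jps | missing_daemons NameNode DFSZKFailoverController JournalNode ResourceManager` should print nothing when everything is up.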

6. Verify HDFS

hadoop fs -put ./NOTICE.txt hdfs://ns/
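The upload above shows that the ns nameservice resolves, but not which NameNode is serving it. The HA state of each NameNode can be queried with `hdfs haadmin -getServiceState`. The wrapper below is a minimal sketch: `ha_states` is a hypothetical name, and it assumes `hdfs` is on the PATH.

```shell
# ha_states ID...
# Prints "ID: STATE" for each NameNode ID, where STATE is whatever
# `hdfs haadmin -getServiceState ID` reports (active or standby).
ha_states() (
  for nn in "$@"; do
    printf '%s: %s\n' "$nn" "$(hdfs haadmin -getServiceState "$nn")"
  done
)
```

With the configuration in this post, `ha_states nn1 nn2` should show one NameNode as active and the other as standby. Stopping the active NameNode and running it again is a simple way to see whether automatic failover takes effect.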

7. Access