[Stats385] Lecture 05: Avoid the curse of dimensionality

Lecturer: Tomaso A. Poggio, a giant in the field

Three fundamental questions:

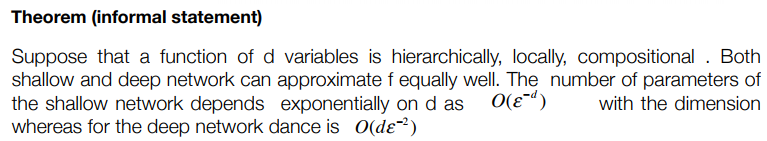

- Approximation Theory: When and why are deep networks better than shallow networks?

- Optimization: What is the landscape of the empirical risk?

- Learning Theory: How can deep learning not overfit?

Q1:

This part seems to argue that shallow networks can also perform well.

However, the expressive power of shallow networks is limited.

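Q2, Q3:

Sparse representation and dimensionality reduction

Dimensionality reduction assumes the data lie in a single subspace, whereas sparse representation assumes they lie in a union of subspaces. This is the essential difference between the two.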
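
The subspace versus union-of-subspaces distinction can be made concrete with a small sketch. It is not from the lecture: the function names, the toy vectors, and the choice of the standard basis as the dictionary are all assumptions made for illustration. A fixed 2-dimensional subspace only captures signals that happen to live in it, while a 2-sparse model lets each signal pick its own pair of coordinates.

```python
import numpy as np

def project_onto_subspace(x, basis):
    """Orthogonal projection of x onto the span of the orthonormal columns of `basis`."""
    return basis @ (basis.T @ x)

def best_k_sparse(x, k):
    """Keep the k largest-magnitude entries of x and zero the rest.

    With the standard basis as dictionary (an assumption of this sketch), this is
    the projection onto the closest k-dimensional coordinate subspace.
    """
    out = np.zeros_like(x)
    idx = np.argsort(np.abs(x))[-k:]
    out[idx] = x[idx]
    return out

# One fixed subspace of R^4: span(e0, e1). This is the dimensionality-reduction model.
B = np.eye(4)[:, :2]

# Two toy signals, each 2-sparse but supported on different coordinates.
x1 = np.array([3.0, 1.0, 0.0, 0.0])
x2 = np.array([0.0, 0.0, 2.0, 5.0])

print("subspace model:", project_onto_subspace(x1, B), project_onto_subspace(x2, B))
print("sparse model:  ", best_k_sparse(x1, 2), best_k_sparse(x2, 2))

# A union of subspaces is not closed under addition: x1 + x2 has four nonzeros.
print("nonzeros in x1 + x2:", np.count_nonzero(x1 + x2))
```

The fixed subspace reproduces `x1` but loses `x2` entirely, while the sparse model reproduces both. The sum `x1 + x2` is no longer 2-sparse, which is what separates a union of subspaces from a single linear subspace.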

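When there are more parameters than training examples, the model can fit the training set exactly, so the training error is 0. As the number of training examples increases, the training error starts to rise from 0, while the test error continues to decline.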
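
This behaviour can be reproduced with a toy experiment. The sketch below is only an illustration under stated assumptions, not the lecture's setup: it swaps the deep network for a linear model with 20 parameters, fitted by minimum-norm least squares on synthetic Gaussian data, and `fit_and_score` and its parameters are hypothetical names. The sample sizes are picked well away from the point where the number of examples equals the number of parameters, so the sketch says nothing about what happens near that boundary.

```python
import numpy as np

def fit_and_score(n_train, n_params=20, noise=0.5, n_test=2000, seed=0):
    """Fit minimum-norm least squares on synthetic data; return (train_mse, test_mse)."""
    rng = np.random.default_rng(seed)
    w_true = rng.standard_normal(n_params)  # hypothetical ground-truth weights

    X_train = rng.standard_normal((n_train, n_params))
    y_train = X_train @ w_true + noise * rng.standard_normal(n_train)
    X_test = rng.standard_normal((n_test, n_params))
    y_test = X_test @ w_true + noise * rng.standard_normal(n_test)

    # For an underdetermined system (n_train < n_params), lstsq returns the
    # minimum-norm solution, which interpolates the training labels.
    w_hat, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

    train_mse = float(np.mean((X_train @ w_hat - y_train) ** 2))
    test_mse = float(np.mean((X_test @ w_hat - y_test) ** 2))
    return train_mse, test_mse

# 10 examples < 20 parameters, then 40 and 400 examples > 20 parameters.
for n in (10, 40, 400):
    train_mse, test_mse = fit_and_score(n)
    print(f"n={n:4d}  train MSE={train_mse:.4f}  test MSE={test_mse:.4f}")
```

With 10 examples the fit interpolates the noisy labels, so the training error is zero up to floating-point precision. Once the examples outnumber the parameters, the model can no longer fit the noise and the training error becomes strictly positive.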